mirror of

https://github.com/kubesphere/website.git

synced 2025-12-26 00:12:48 +00:00

Merge branch 'kubesphere:master' into master

This commit is contained in:

commit

7f4d425c37

|

|

@ -44,7 +44,7 @@ Give a title first before you write a paragraph. It can be grouped into differen

|

|||

- When you submit your md files to GitHub, make sure you add related image files that appear in md files in the pull request as well. Please save your image files in static/images/docs. You can create a folder in the directory to save your images.

|

||||

- If you want to add remarks (for example, put a box on a UI button), use the color **green**. As some screenshot apps does not support the color picking function for a specific color code, as long as the color is **similar** to #09F709, #00FF00, #09F709 or #09F738, it is acceptable.

|

||||

- Image format: PNG.

|

||||

- Make sure images in your guide match the content. For example, you mention that users need to log in to KubeSphere using an account of a role; this means the account that displays in your image is expected to be the one you are talking about. It confuses your readers if the content you are describing is not consistent with the image used.

|

||||

- Make sure images in your guide match the content. For example, you mention that users need to log in to KubeSphere using a user of a role; this means the account that displays in your image is expected to be the one you are talking about. It confuses your readers if the content you are describing is not consistent with the image used.

|

||||

- Recommended: [Xnip](https://xnipapp.com/) for Mac and [Sniptool](https://www.reasyze.com/sniptool/) for Windows.

|

||||

|

||||

|

||||

|

|

@ -184,7 +184,7 @@ When describing the UI, you can use the following prepositions.

|

|||

|

||||

```bash

|

||||

# Assume your original Kubernetes cluster is v1.17.9

|

||||

./kk create config --with-kubesphere --with-kubernetes v1.17.9

|

||||

./kk create config --with-kubesphere --with-kubernetes v1.20.4

|

||||

```

|

||||

|

||||

- If the comment is used for all the code (for example, serving as a header for explanations), put the comment at the beginning above the code. For example:

|

||||

|

|

|

|||

3

OWNERS

3

OWNERS

|

|

@ -1,7 +1,10 @@

|

|||

approvers:

|

||||

- Felixnoo #oncall

|

||||

- Patrick-LuoYu #oncall

|

||||

- zryfish

|

||||

- rayzhou2017

|

||||

- faweizhao26

|

||||

- yangchuansheng

|

||||

- FeynmanZhou

|

||||

|

||||

reviewers:

|

||||

|

|

|

|||

|

|

@ -15,18 +15,6 @@

|

|||

|

||||

& > ul {

|

||||

|

||||

li:nth-child(1) {

|

||||

.top-div {

|

||||

background-image: linear-gradient(270deg, rgb(101, 193, 148), rgb(76, 169, 134))

|

||||

}

|

||||

}

|

||||

|

||||

li:nth-child(2) {

|

||||

.top-div {

|

||||

background-image: linear-gradient(to left, rgb(52, 197, 209), rgb(95, 182, 216))

|

||||

}

|

||||

}

|

||||

|

||||

& > li {

|

||||

.top-div {

|

||||

position: relative;

|

||||

|

|

|

|||

|

|

@ -6,7 +6,7 @@

|

|||

}

|

||||

|

||||

.main-section {

|

||||

& > div {

|

||||

&>div {

|

||||

position: relative;

|

||||

padding-top: 93px;

|

||||

|

||||

|

|

@ -50,7 +50,7 @@

|

|||

h1 {

|

||||

margin-top: 20px;

|

||||

margin-bottom: 40px;

|

||||

text-shadow: 0 8px 16px rgba(35,45,65,.1);

|

||||

text-shadow: 0 8px 16px rgba(35, 45, 65, .1);

|

||||

font-size: 40px;

|

||||

font-weight: 500;

|

||||

line-height: 1.4;

|

||||

|

|

@ -80,7 +80,7 @@

|

|||

line-height: 2.29;

|

||||

color: #36435c;

|

||||

}

|

||||

|

||||

|

||||

.md-body h2 {

|

||||

font-weight: 500;

|

||||

line-height: 64px;

|

||||

|

|

@ -90,13 +90,13 @@

|

|||

margin-bottom: 20px;

|

||||

border-bottom: 1px solid #ccd3db;

|

||||

}

|

||||

|

||||

|

||||

.md-body h3 {

|

||||

font-weight: 600;

|

||||

line-height: 1.5;

|

||||

color: #171c34;

|

||||

}

|

||||

|

||||

|

||||

.md-body img {

|

||||

max-width: 100%;

|

||||

box-sizing: content-box;

|

||||

|

|

@ -104,30 +104,30 @@

|

|||

border-radius: 5px;

|

||||

box-shadow: none;

|

||||

}

|

||||

|

||||

|

||||

.md-body blockquote {

|

||||

padding: 4px 20px 4px 12px;

|

||||

border-radius: 4px;

|

||||

background-color: #ecf0f2;

|

||||

}

|

||||

|

||||

|

||||

&-metadata {

|

||||

margin-bottom: 28px;

|

||||

|

||||

|

||||

&-title {

|

||||

font-size: 16px;

|

||||

font-weight: 500;

|

||||

line-height: 1.5;

|

||||

color: #171c34;

|

||||

}

|

||||

|

||||

|

||||

&-time {

|

||||

font-size: 14px;

|

||||

line-height: 1.43;

|

||||

color: #919aa3;

|

||||

}

|

||||

}

|

||||

|

||||

|

||||

&-title {

|

||||

text-shadow: 0 8px 16px rgba(35, 45, 65, 0.1);

|

||||

font-size: 40px;

|

||||

|

|

@ -135,7 +135,7 @@

|

|||

line-height: 1.4;

|

||||

color: #171c34;

|

||||

margin-bottom: 40px;

|

||||

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

font-size: 28px;

|

||||

}

|

||||

|

|

@ -150,6 +150,7 @@

|

|||

bottom: 10px;

|

||||

transform: translateX(350px);

|

||||

width: 230px;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

display: none;

|

||||

}

|

||||

|

|

@ -158,7 +159,7 @@

|

|||

max-height: 100%;

|

||||

position: relative;

|

||||

overflow-y: auto;

|

||||

}

|

||||

}

|

||||

|

||||

.title {

|

||||

height: 32px;

|

||||

|

|

@ -166,13 +167,14 @@

|

|||

line-height: 1.33;

|

||||

color: #36435c;

|

||||

padding-bottom: 10px;

|

||||

border-bottom: solid 1px #ccd3db;;

|

||||

border-bottom: solid 1px #ccd3db;

|

||||

}

|

||||

|

||||

.tabs {

|

||||

#TableOfContents > ul > li > a {

|

||||

#TableOfContents>ul>li>a {

|

||||

font-weight: 500;

|

||||

}

|

||||

|

||||

li {

|

||||

margin: 10px 0;

|

||||

font-size: 16px;

|

||||

|

|

@ -195,6 +197,7 @@

|

|||

color: #55bc8a;

|

||||

}

|

||||

}

|

||||

|

||||

li li {

|

||||

padding-left: 20px;

|

||||

}

|

||||

|

|

@ -202,121 +205,137 @@

|

|||

}

|

||||

}

|

||||

}

|

||||

.SubscribeForm {

|

||||

position: fixed;

|

||||

right: 49px;

|

||||

bottom: 32px;

|

||||

box-shadow: 0px 8px 16px rgba(36, 46, 66, 0.05), 0px 4px 8px rgba(36, 46, 66, 0.06);

|

||||

|

||||

.innerBox {

|

||||

width: 440px;

|

||||

height: 246px;

|

||||

overflow: hidden;

|

||||

background: url('/images/home/modal-noText.svg');

|

||||

position: relative;

|

||||

padding: -8px -16px;

|

||||

background-position: -16px -8px;

|

||||

@media only screen and (min-width: $mobile-max-width) {

|

||||

.SubscribeForm {

|

||||

position: fixed;

|

||||

right: 49px;

|

||||

bottom: 32px;

|

||||

|

||||

.close {

|

||||

position: absolute;

|

||||

top: 24px;

|

||||

right: 24px;

|

||||

cursor: pointer;

|

||||

}

|

||||

box-shadow: 0px 8px 16px rgba(36, 46, 66, 0.05),

|

||||

0px 4px 8px rgba(36, 46, 66, 0.06);

|

||||

|

||||

p {

|

||||

width: 360px;

|

||||

height: 44px;

|

||||

left: 40px;

|

||||

top: 103px;

|

||||

right: 40px;

|

||||

position: absolute;

|

||||

font-family: ProximaNova;

|

||||

font-size: 16px;

|

||||

line-height: 22px;

|

||||

color: #919AA3;

|

||||

.innerBox {

|

||||

width: 440px;

|

||||

height: 246px;

|

||||

overflow: hidden;

|

||||

background: url('/images/home/modal-noText.svg');

|

||||

position: relative;

|

||||

padding: -8px -16px;

|

||||

background-position: -16px -8px;

|

||||

|

||||

}

|

||||

|

||||

div {

|

||||

bottom: 32px;

|

||||

left: 40px;

|

||||

position: absolute;

|

||||

width: 358px;

|

||||

height: 48px;

|

||||

margin-top: 20px;

|

||||

border-radius: 24px;

|

||||

border: solid 1px #ccd3db;

|

||||

background-color: #f5f8f9;

|

||||

|

||||

@mixin placeholder {

|

||||

font-family: PingFangSC;

|

||||

font-size: 14px;

|

||||

line-height: 16px;

|

||||

text-align: right;

|

||||

color: #CCD3DB;

|

||||

.close {

|

||||

position: absolute;

|

||||

top: 24px;

|

||||

right: 24px;

|

||||

cursor: pointer;

|

||||

}

|

||||

|

||||

input {

|

||||

width: 207px;

|

||||

height: 20px;

|

||||

font-size: 14px;

|

||||

margin-left: 16px;

|

||||

color: #ccd3db;

|

||||

border: none;

|

||||

outline: none;

|

||||

p {

|

||||

width: 360px;

|

||||

height: 44px;

|

||||

left: 40px;

|

||||

top: 103px;

|

||||

right: 40px;

|

||||

position: absolute;

|

||||

font-family: ProximaNova;

|

||||

font-size: 16px;

|

||||

line-height: 22px;

|

||||

color: #919AA3;

|

||||

|

||||

}

|

||||

|

||||

div {

|

||||

bottom: 32px;

|

||||

left: 40px;

|

||||

position: absolute;

|

||||

width: 358px;

|

||||

height: 48px;

|

||||

margin-top: 20px;

|

||||

border-radius: 24px;

|

||||

border: solid 1px #ccd3db;

|

||||

background-color: #f5f8f9;

|

||||

|

||||

&:-webkit-input-placeholder {

|

||||

@include placeholder();

|

||||

@mixin placeholder {

|

||||

font-family: PingFangSC;

|

||||

font-size: 14px;

|

||||

line-height: 16px;

|

||||

text-align: right;

|

||||

color: #CCD3DB;

|

||||

}

|

||||

|

||||

&:-ms-input-placeholder {

|

||||

@include placeholder();

|

||||

}

|

||||

|

||||

&:-moz-placeholder {

|

||||

@include placeholder();

|

||||

}

|

||||

|

||||

&:-moz-placeholder {

|

||||

@include placeholder();

|

||||

}

|

||||

}

|

||||

|

||||

button {

|

||||

width: 111px;

|

||||

height: 40px;

|

||||

margin: 4px 5px 4px 14px;

|

||||

border-radius: 20px;

|

||||

border: none;

|

||||

font-size: 14px;

|

||||

color: #ffffff;

|

||||

cursor: pointer;

|

||||

box-shadow: 0 10px 50px 0 rgba(34, 43, 62, 0.1), 0 8px 16px 0 rgba(33, 43, 61, 0.2);

|

||||

background-image: linear-gradient(to bottom, rgba(0, 0, 0, 0), rgba(0, 0, 0, 0.1) 97%), linear-gradient(to bottom, #55bc8a, #55bc8a);

|

||||

|

||||

&:hover {

|

||||

box-shadow: none;

|

||||

}

|

||||

}

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

width: 326px;

|

||||

|

||||

input {

|

||||

width: 196px;

|

||||

width: 207px;

|

||||

height: 20px;

|

||||

font-size: 14px;

|

||||

margin-left: 16px;

|

||||

color: #ccd3db;

|

||||

border: none;

|

||||

outline: none;

|

||||

background-color: #f5f8f9;

|

||||

|

||||

&:-webkit-input-placeholder {

|

||||

@include placeholder();

|

||||

}

|

||||

|

||||

&:-ms-input-placeholder {

|

||||

@include placeholder();

|

||||

}

|

||||

|

||||

&:-moz-placeholder {

|

||||

@include placeholder();

|

||||

}

|

||||

|

||||

&:-moz-placeholder {

|

||||

@include placeholder();

|

||||

}

|

||||

}

|

||||

|

||||

button {

|

||||

width: 90px;

|

||||

width: 111px;

|

||||

height: 40px;

|

||||

margin: 4px 5px 4px 14px;

|

||||

border-radius: 20px;

|

||||

border: none;

|

||||

font-size: 14px;

|

||||

color: #ffffff;

|

||||

cursor: pointer;

|

||||

box-shadow: 0 10px 50px 0 rgba(34, 43, 62, 0.1), 0 8px 16px 0 rgba(33, 43, 61, 0.2);

|

||||

background-image: linear-gradient(to bottom, rgba(0, 0, 0, 0), rgba(0, 0, 0, 0.1) 97%), linear-gradient(to bottom, #55bc8a, #55bc8a);

|

||||

|

||||

&:hover {

|

||||

box-shadow: none;

|

||||

}

|

||||

}

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

width: 326px;

|

||||

|

||||

input {

|

||||

width: 196px;

|

||||

}

|

||||

|

||||

button {

|

||||

width: 90px;

|

||||

}

|

||||

}

|

||||

|

||||

span {

|

||||

color: red;

|

||||

}

|

||||

}

|

||||

|

||||

span {

|

||||

color: red;

|

||||

}

|

||||

}

|

||||

|

||||

}

|

||||

}

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

|

||||

.SubscribeForm {

|

||||

display: none !important;

|

||||

}

|

||||

}

|

||||

|

||||

#videoPlayer {

|

||||

width: 100%;

|

||||

}

|

||||

|

|

@ -853,7 +853,7 @@ footer {

|

|||

|

||||

p {

|

||||

width: 360px;

|

||||

font-family: ProximaNova;

|

||||

font-family: 'Proxima Nova';

|

||||

font-size: 16px;

|

||||

line-height: 22px;

|

||||

color: #919AA3;

|

||||

|

|

|

|||

|

|

@ -1,5 +1,40 @@

|

|||

@import "variables";

|

||||

|

||||

@mixin tooltip {

|

||||

.tooltip {

|

||||

visibility: hidden;

|

||||

width: 80px;

|

||||

padding: 8px 12px;

|

||||

background: #242E42;

|

||||

box-shadow: 0px 4px 8px rgba(36, 46, 66, 0.2);

|

||||

border-radius: 4px;

|

||||

transform: translateX(-50%);

|

||||

box-sizing: border-box;

|

||||

/* 定位 */

|

||||

position: absolute;

|

||||

z-index: 1;

|

||||

|

||||

font-family: PingFang SC;

|

||||

font-style: normal;

|

||||

font-size: 12px;

|

||||

line-height: 20px;

|

||||

color: #fff;

|

||||

text-align: center;

|

||||

|

||||

&::after {

|

||||

content: " ";

|

||||

position: absolute;

|

||||

top: 100%;

|

||||

/* 提示工具底部 */

|

||||

left: 50%;

|

||||

margin-left: -5px;

|

||||

border-width: 5px;

|

||||

border-style: solid;

|

||||

border-color: #242E42 transparent transparent;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

.navigation {

|

||||

box-shadow: 0 4px 8px 0 rgba(36, 46, 66, 0.06), 0 8px 16px 0 rgba(36, 46, 66, 0.05);

|

||||

background-image: linear-gradient(to bottom, rgba(134, 219, 162, 0.9), rgba(0, 170, 114, 0.9));

|

||||

|

|

@ -88,7 +123,8 @@

|

|||

.right {

|

||||

box-sizing: border-box;

|

||||

width: 368px;

|

||||

padding: 24px;

|

||||

padding: 24px 20px 0 20px;

|

||||

min-height: 488px;

|

||||

max-height: 600px;

|

||||

margin-left: 15px;

|

||||

overflow: auto;

|

||||

|

|

@ -98,163 +134,200 @@

|

|||

display: none;

|

||||

}

|

||||

|

||||

.lesson-div {

|

||||

margin-top: 20px;

|

||||

.sections {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

margin-bottom: 12px;

|

||||

|

||||

&:first-child {

|

||||

margin-top: 0;

|

||||

}

|

||||

|

||||

& > p {

|

||||

.sectionFolder {

|

||||

box-sizing: border-box;

|

||||

width: 328px;

|

||||

display: flex;

|

||||

align-items: center;

|

||||

flex-direction: row;

|

||||

padding: 9px 16px;

|

||||

background: #F9FBFD;

|

||||

border-radius: 4px;

|

||||

position: relative;

|

||||

padding-left: 9px;

|

||||

font-size: 16px;

|

||||

font-weight: 500;

|

||||

line-height: 1.5;

|

||||

letter-spacing: -0.04px;

|

||||

|

||||

&::before {

|

||||

position: absolute;

|

||||

top: 10px;

|

||||

left: 0;

|

||||

content: "";

|

||||

width: 4px;

|

||||

height: 4px;

|

||||

border-radius: 50%;

|

||||

background-color: #36435c;

|

||||

&:hover {

|

||||

cursor: pointer;

|

||||

background: #EFF4F9;

|

||||

}

|

||||

|

||||

a {

|

||||

color: #36435c;

|

||||

&:hover {

|

||||

color: #55bc8a;

|

||||

}

|

||||

.text {

|

||||

font-weight: 500;

|

||||

font-size: 16px;

|

||||

line-height: 22px;

|

||||

width: 264px;

|

||||

text-overflow: ellipsis;

|

||||

white-space: nowrap;

|

||||

overflow: hidden;

|

||||

}

|

||||

|

||||

.icon {

|

||||

display: inline-block;

|

||||

margin-left: 6px;

|

||||

width: 12px;

|

||||

height: 12px;

|

||||

background-image: url("/images/learn/video.svg");

|

||||

}

|

||||

|

||||

.play-span {

|

||||

display: none;

|

||||

height: 12px;

|

||||

font-size: 0;

|

||||

span {

|

||||

display: inline-block;

|

||||

width: 2px;

|

||||

height: 100%;

|

||||

margin-right: 2px;

|

||||

background-color: #55bc8a;

|

||||

}

|

||||

}

|

||||

|

||||

.playing {

|

||||

display: inline-block;

|

||||

span {

|

||||

animation-name: playing;

|

||||

animation-duration: 1s;

|

||||

animation-timing-function: ease;

|

||||

animation-delay: 0s;

|

||||

animation-iteration-count: infinite;

|

||||

|

||||

&:first-child {

|

||||

animation-delay: 0.3s;

|

||||

}

|

||||

&:last-child {

|

||||

animation-delay: 0.5s;

|

||||

}

|

||||

}

|

||||

display: block;

|

||||

height: 10px;

|

||||

width: 10px;

|

||||

background-image: url('/images/learn/icon-setion-close.svg');

|

||||

background-repeat: no-repeat;

|

||||

position: absolute;

|

||||

right: 17px;

|

||||

}

|

||||

}

|

||||

& > p.active {

|

||||

a {

|

||||

color: #55bc8a;

|

||||

}

|

||||

|

||||

&::before {

|

||||

background-color: #55bc8a;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

.lesson-link-div {

|

||||

margin-top: 10px;

|

||||

display: flex;

|

||||

a {

|

||||

display: block;

|

||||

box-sizing: border-box;

|

||||

width: 100px;

|

||||

height: 72px;

|

||||

padding: 11px 20px 10px;

|

||||

margin-left: 10px;

|

||||

margin-right: 0;

|

||||

font-size: 14px;

|

||||

line-height: 24px;

|

||||

text-align: center;

|

||||

color: #8f94a1;

|

||||

border-radius: 4px;

|

||||

background-color: #f5f9fa;

|

||||

border: solid 1px transparent;

|

||||

|

||||

&:first-child {

|

||||

margin-left: 0;

|

||||

}

|

||||

|

||||

&:hover {

|

||||

border: solid 1px #4ca986;

|

||||

}

|

||||

|

||||

span {

|

||||

display: inline-block;

|

||||

width: 24px;

|

||||

height: 24px;

|

||||

}

|

||||

}

|

||||

|

||||

.active {

|

||||

color: #00a971;

|

||||

border: solid 1px #55bc8a;

|

||||

background-color: #cdf6d5;

|

||||

}

|

||||

background: linear-gradient(180deg, #242E42 0%, #36435C 100%) !important;

|

||||

color: #ffffff;

|

||||

|

||||

.lesson {

|

||||

span {

|

||||

background-image: url("/images/learn/icon-image.svg");

|

||||

&>.icon {

|

||||

background-image: url('/images/learn/icon-setion-open.svg');

|

||||

}

|

||||

}

|

||||

|

||||

.lesson.active {

|

||||

span {

|

||||

background-image: url("/images/learn/icon-image-active.svg");

|

||||

ul {

|

||||

transition: 1.2s;

|

||||

|

||||

li {

|

||||

width: 320px;

|

||||

height: 24px;

|

||||

margin: 16px 0px;

|

||||

list-style: none;

|

||||

display: flex;

|

||||

align-items: center;

|

||||

position: relative;

|

||||

cursor: pointer;

|

||||

|

||||

.textLink {

|

||||

width: 252px;

|

||||

display: flex;

|

||||

align-items: center;

|

||||

|

||||

.videoIcon {

|

||||

display: block;

|

||||

width: 12px;

|

||||

height: 12px;

|

||||

margin-right: 8px;

|

||||

background-image: url('/images/learn/lesson-video.svg');

|

||||

}

|

||||

|

||||

.text {

|

||||

flex: 1;

|

||||

font-family: PingFang SC;

|

||||

font-style: normal;

|

||||

font-weight: normal;

|

||||

font-size: 14px;

|

||||

line-height: 24px;

|

||||

display: block;

|

||||

overflow: hidden;

|

||||

white-space: nowrap;

|

||||

text-overflow: ellipsis;

|

||||

}

|

||||

}

|

||||

|

||||

.actions {

|

||||

width: 68px;

|

||||

height: 24px;

|

||||

display: flex;

|

||||

flex-direction: row;

|

||||

align-items: center;

|

||||

justify-content: flex-end;

|

||||

|

||||

.picture {

|

||||

width: 16px;

|

||||

height: 12px;

|

||||

background-image: url('/images/learn/actions-picture.svg');

|

||||

background-repeat: no-repeat;

|

||||

position: relative;

|

||||

|

||||

@include tooltip();

|

||||

|

||||

&:hover {

|

||||

background-image: url('/images/learn/actions-picture-active.svg');

|

||||

|

||||

.tooltip {

|

||||

visibility: visible;

|

||||

bottom: 20px;

|

||||

left: 8px;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

.activePicture {

|

||||

background-image: url('/images/learn/actions-picture-open.svg') !important;

|

||||

}

|

||||

|

||||

.ppt {

|

||||

width: 16px;

|

||||

height: 16px;

|

||||

background-image: url('/images/learn/actions-ppt.svg');

|

||||

background-repeat: no-repeat;

|

||||

position: relative;

|

||||

margin: 0 10px;

|

||||

@include tooltip();

|

||||

|

||||

&:hover {

|

||||

.tooltip {

|

||||

visibility: visible;

|

||||

bottom: 20px;

|

||||

left: 8px;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

.download {

|

||||

width: 16px;

|

||||

height: 16px;

|

||||

background-image: url('/images/learn/actions-download.svg');

|

||||

background-repeat: no-repeat;

|

||||

position: relative;

|

||||

@include tooltip();

|

||||

|

||||

&:hover {

|

||||

.tooltip {

|

||||

visibility: visible;

|

||||

bottom: 20px;

|

||||

left: 8px;

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

&:hover {

|

||||

.textLink {

|

||||

.videoIcon {

|

||||

background-image: url('/images/learn/lesson-video-hover.svg');

|

||||

}

|

||||

}

|

||||

|

||||

.text {

|

||||

color: #4CA986;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

.pptActive{

|

||||

.text {

|

||||

color: #4CA986;

|

||||

}

|

||||

}

|

||||

|

||||

.activeLine {

|

||||

.textLink {

|

||||

.videoIcon {

|

||||

background-image: url('/images/learn/lesson-video-play.svg') !important;

|

||||

}

|

||||

|

||||

.text {

|

||||

color: #4CA986;

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

.courseware {

|

||||

span {

|

||||

background-image: url("/images/learn/icon-ppt.svg");

|

||||

}

|

||||

}

|

||||

|

||||

.courseware.active {

|

||||

span {

|

||||

background-image: url("/images/learn/icon-ppt-active.svg");

|

||||

}

|

||||

}

|

||||

|

||||

.examination {

|

||||

span {

|

||||

background-image: url("/images/learn/icon-download.svg");

|

||||

}

|

||||

}

|

||||

|

||||

.examination.active {

|

||||

span {

|

||||

background-image: url("/images/learn/icon-download-active.svg");

|

||||

}

|

||||

.hideLesson {

|

||||

display: none;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -144,14 +144,15 @@ h2 {

|

|||

font-size: 0;

|

||||

overflow-x: auto;

|

||||

white-space: nowrap;

|

||||

display: flex;

|

||||

|

||||

li {

|

||||

position: relative;

|

||||

display: inline-block;

|

||||

box-sizing: border-box;

|

||||

white-space: normal;

|

||||

width: 323px;

|

||||

height: 237px;

|

||||

min-width: 323px;

|

||||

min-height: 237px;

|

||||

padding: 30px 20px 30px 62px;

|

||||

margin-left: 70px;

|

||||

font-size: 14px;

|

||||

|

|

@ -169,6 +170,7 @@ h2 {

|

|||

left: -50px;

|

||||

width: 100px;

|

||||

height: 100px;

|

||||

object-fit: cover;

|

||||

border-radius: 50%;

|

||||

}

|

||||

|

||||

|

|

@ -250,7 +252,7 @@ h2 {

|

|||

margin-top: 68px;

|

||||

& > li {

|

||||

position: relative;

|

||||

padding: 50px 39px 20px 40px;

|

||||

padding: 50px 39px 40px 40px;

|

||||

margin-bottom: 58px;

|

||||

border-radius: 8px;

|

||||

background-color: #ffffff;

|

||||

|

|

@ -276,6 +278,10 @@ h2 {

|

|||

top: -20px;

|

||||

left: 30px;

|

||||

border-radius: 5px;

|

||||

white-space: nowrap;

|

||||

text-overflow: ellipsis;

|

||||

max-width: 75%;

|

||||

overflow: hidden;

|

||||

}

|

||||

}

|

||||

|

||||

|

|

@ -371,6 +377,46 @@ h2 {

|

|||

}

|

||||

}

|

||||

}

|

||||

|

||||

.button{

|

||||

position: absolute;

|

||||

height: 48px;

|

||||

width: 100%;

|

||||

bottom: 0;

|

||||

left: 0;

|

||||

background: linear-gradient(360deg, rgba(85, 188, 138, 0.25) 0%, rgba(85, 188, 138, 0) 100%);

|

||||

border: none;

|

||||

font-weight: 600;

|

||||

font-size: 14px;

|

||||

line-height: 20px;

|

||||

color: #0F8049;

|

||||

display: flex;

|

||||

align-items: center;

|

||||

justify-content: center;

|

||||

cursor: pointer;

|

||||

|

||||

#close{

|

||||

display: none;

|

||||

}

|

||||

}

|

||||

|

||||

.hideButton{

|

||||

display: none;

|

||||

}

|

||||

|

||||

.active{

|

||||

#open{

|

||||

display: none;

|

||||

}

|

||||

|

||||

#close{

|

||||

display: block;

|

||||

}

|

||||

|

||||

svg{

|

||||

transform: rotateX(180deg);

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -1,5 +1,6 @@

|

|||

@import 'variables';

|

||||

@import 'mixin';

|

||||

|

||||

.btn-a {

|

||||

display: inline-block;

|

||||

padding: 0 53px;

|

||||

|

|

@ -10,10 +11,12 @@

|

|||

color: #ffffff;

|

||||

box-shadow: 0 10px 50px 0 rgba(34, 43, 62, 0.1), 0 8px 16px 0 rgba(33, 43, 61, 0.2), 0 10px 50px 0 rgba(34, 43, 62, 0.1);

|

||||

background-image: linear-gradient(to bottom, rgba(85, 188, 138, 0), rgba(85, 188, 138, 0.1) 97%), linear-gradient(to bottom, #55bc8a, #55bc8a);

|

||||

|

||||

&:hover {

|

||||

box-shadow: none;

|

||||

}

|

||||

}

|

||||

|

||||

.section-1 {

|

||||

position: relative;

|

||||

padding-top: 124px;

|

||||

|

|

@ -40,16 +43,19 @@

|

|||

position: relative;

|

||||

width: 840px;

|

||||

height: 400px;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

width: 100%;

|

||||

height: auto;

|

||||

}

|

||||

|

||||

img {

|

||||

width: 100%;

|

||||

height: 100%;

|

||||

min-height: 200px;

|

||||

object-fit: cover;

|

||||

}

|

||||

|

||||

button {

|

||||

position: absolute;

|

||||

right: 20px;

|

||||

|

|

@ -62,6 +68,7 @@

|

|||

cursor: pointer;

|

||||

box-shadow: 0 10px 50px 0 rgba(34, 43, 62, 0.1), 0 8px 16px 0 rgba(33, 43, 61, 0.2), 0 10px 50px 0 rgba(34, 43, 62, 0.1);

|

||||

background-image: linear-gradient(to bottom, rgba(85, 188, 138, 0), rgba(85, 188, 138, 0.1) 97%), linear-gradient(to bottom, #55bc8a, #55bc8a);

|

||||

|

||||

&:hover {

|

||||

box-shadow: none;

|

||||

}

|

||||

|

|

@ -73,12 +80,14 @@

|

|||

width: 320px;

|

||||

height: 400px;

|

||||

padding: 10px;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

max-width: 320px;

|

||||

width: auto;

|

||||

height: auto;

|

||||

margin: 0 auto;

|

||||

}

|

||||

|

||||

h2 {

|

||||

margin-bottom: 10px;

|

||||

font-size: 18px;

|

||||

|

|

@ -116,8 +125,8 @@

|

|||

font-size: 16px;

|

||||

line-height: 28px;

|

||||

letter-spacing: -0.04px;

|

||||

color: #919aa3;

|

||||

|

||||

color: #919aa3;

|

||||

|

||||

img {

|

||||

vertical-align: middle;

|

||||

margin-right: 4px;

|

||||

|

|

@ -127,8 +136,8 @@

|

|||

a {

|

||||

margin: 34px auto 0;

|

||||

height: 40px;

|

||||

padding: 0 28px;

|

||||

line-height: 40px;

|

||||

padding: 0 28px;

|

||||

line-height: 40px;

|

||||

}

|

||||

|

||||

.tag {

|

||||

|

|

@ -165,12 +174,12 @@

|

|||

padding-bottom: 40px;

|

||||

}

|

||||

|

||||

& > div {

|

||||

&>div {

|

||||

|

||||

& > .video-tab-ul {

|

||||

&>.video-tab-ul {

|

||||

padding: 0 34px;

|

||||

border-radius: 5px;

|

||||

box-shadow: 0 4px 16px 0 rgba(7,42,68,.1);

|

||||

box-shadow: 0 4px 16px 0 rgba(7, 42, 68, .1);

|

||||

background-color: #fff;

|

||||

|

||||

li {

|

||||

|

|

@ -188,18 +197,19 @@

|

|||

text-align: center;

|

||||

|

||||

&:hover {

|

||||

box-shadow: 0 8px 16px 0 rgba(101,193,148,.2),0 0 50px 0 rgba(101,193,148,.1);

|

||||

box-shadow: 0 8px 16px 0 rgba(101, 193, 148, .2), 0 0 50px 0 rgba(101, 193, 148, .1);

|

||||

background-color: #55bc8a;

|

||||

color: #fff;

|

||||

}

|

||||

}

|

||||

|

||||

.active {

|

||||

box-shadow: 0 8px 16px 0 rgba(101,193,148,.2),0 0 50px 0 rgba(101,193,148,.1);

|

||||

box-shadow: 0 8px 16px 0 rgba(101, 193, 148, .2), 0 0 50px 0 rgba(101, 193, 148, .1);

|

||||

background-color: #55bc8a;

|

||||

color: #fff;

|

||||

}

|

||||

|

||||

li + li {

|

||||

li+li {

|

||||

margin-left: 12px;

|

||||

}

|

||||

}

|

||||

|

|

@ -207,11 +217,12 @@

|

|||

.video-ul {

|

||||

margin-top: 20px;

|

||||

font-size: 0;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

text-align: center;

|

||||

}

|

||||

|

||||

& > li {

|

||||

&>li {

|

||||

position: relative;

|

||||

display: inline-block;

|

||||

width: 360px;

|

||||

|

|

@ -225,18 +236,18 @@

|

|||

text-align: left;

|

||||

cursor: pointer;

|

||||

|

||||

& > img {

|

||||

&>img {

|

||||

width: 100%;

|

||||

height: 100%;

|

||||

}

|

||||

|

||||

&:hover {

|

||||

& > div {

|

||||

&>div {

|

||||

height: 202px;

|

||||

}

|

||||

}

|

||||

|

||||

& > div {

|

||||

&>div {

|

||||

position: absolute;

|

||||

left: 0;

|

||||

right: 0;

|

||||

|

|

@ -247,14 +258,14 @@

|

|||

transition: all .2s ease-in-out;

|

||||

overflow: hidden;

|

||||

|

||||

& > .btn {

|

||||

&>.btn {

|

||||

position: absolute;

|

||||

left: 50%;

|

||||

bottom: 120px;

|

||||

transform: translateX(-50%);

|

||||

}

|

||||

|

||||

& > div {

|

||||

&>div {

|

||||

position: absolute;

|

||||

left: 0;

|

||||

right: 0;

|

||||

|

|

@ -269,7 +280,7 @@

|

|||

color: #fff;

|

||||

padding: 8px 0;

|

||||

margin-bottom: 6px;

|

||||

border-bottom: 1px solid hsla(0,0%,100%,.1);

|

||||

border-bottom: 1px solid hsla(0, 0%, 100%, .1);

|

||||

text-overflow: ellipsis;

|

||||

white-space: nowrap;

|

||||

overflow: hidden;

|

||||

|

|

@ -306,7 +317,7 @@

|

|||

}

|

||||

}

|

||||

|

||||

& > div {

|

||||

&>div {

|

||||

margin-top: 20px;

|

||||

text-align: center;

|

||||

|

||||

|

|

@ -342,7 +353,7 @@

|

|||

padding: 0;

|

||||

border-radius: 0;

|

||||

font-size: 0;

|

||||

|

||||

|

||||

.video-div {

|

||||

height: 100%;

|

||||

}

|

||||

|

|

@ -363,7 +374,7 @@

|

|||

width: 100%;

|

||||

max-width: 100%;

|

||||

height: auto;

|

||||

|

||||

|

||||

iframe {

|

||||

width: 100%;

|

||||

height: 300px;

|

||||

|

|

@ -371,99 +382,270 @@

|

|||

}

|

||||

}

|

||||

|

||||

.section-4 {

|

||||

background-image: linear-gradient(113deg, #4a499a 27%, #8552c3 81%);

|

||||

.common-layout {

|

||||

white-space: nowrap;

|

||||

overflow: auto;

|

||||

& > div {

|

||||

box-sizing: border-box;

|

||||

display: inline-block;

|

||||

vertical-align: top;

|

||||

white-space: normal;

|

||||

width: 140px;

|

||||

height: 225px;

|

||||

margin: 80px 40px;

|

||||

padding-top: 20px;

|

||||

border-top: 1px solid #a1b3c4;

|

||||

|

||||

.time-div {

|

||||

.common-layout-special {

|

||||

white-space: nowrap;

|

||||

overflow: auto;

|

||||

height: 535px;

|

||||

background-image: linear-gradient(147.87deg, #4A499A 16%, #8552C3 85.01%);

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

width: 100%;

|

||||

height: auto;

|

||||

}

|

||||

|

||||

.meetup-box {

|

||||

max-width: 1320px;

|

||||

margin: 0 auto;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

width: 100%;

|

||||

}

|

||||

|

||||

.meetup-title {

|

||||

font-size: 32px;

|

||||

line-height: 45px;

|

||||

color: #fff;

|

||||

text-align: center;

|

||||

padding-top: 56px;

|

||||

}

|

||||

|

||||

.innerBox {

|

||||

padding: 0px 80px;

|

||||

position: relative;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

width: 100%;

|

||||

box-sizing: border-box;

|

||||

padding: 0 20px;

|

||||

}

|

||||

|

||||

&>ul {

|

||||

margin-top: 16px;

|

||||

display: flex;

|

||||

overflow: scroll;

|

||||

background: linear-gradient(to top, rgba(255, 255, 255, 0.08) 5%, transparent 5%) no-repeat;

|

||||

|

||||

.right {

|

||||

margin-left: 4px;

|

||||

font-weight: bold;

|

||||

line-height: 1;

|

||||

color: #ffffff;

|

||||

.date {

|

||||

margin-bottom: 4px;

|

||||

font-size: 24px;

|

||||

}

|

||||

.time {

|

||||

font-size: 14px;

|

||||

}

|

||||

li {

|

||||

width: 80px;

|

||||

height: 40px;

|

||||

line-height: 24px;

|

||||

color: rgba(255, 255, 255, 0.15);

|

||||

display: flex;

|

||||

justify-content: center;

|

||||

align-items: center;

|

||||

flex-shrink: 0;

|

||||

box-sizing: border-box;

|

||||

}

|

||||

|

||||

.tab_active {

|

||||

color: rgba(255, 255, 255, 0.7);

|

||||

border-bottom: solid 2px rgba(255, 255, 255, 0.7);

|

||||

}

|

||||

}

|

||||

|

||||

h3 {

|

||||

height: 60px;

|

||||

margin: 21px 0 47px;

|

||||

font-size: 14px;

|

||||

font-weight: 500;

|

||||

line-height: 1.43;

|

||||

color: #d5dee7;

|

||||

a {

|

||||

color: #d5dee7;

|

||||

.yearBox {

|

||||

display: flex;

|

||||

align-items: center;

|

||||

min-height: 377px;

|

||||

|

||||

.hiddenUl {

|

||||

display: none;

|

||||

}

|

||||

|

||||

.autoMeetUp {

|

||||

width: 100%;

|

||||

height: 260px;

|

||||

margin: 0 auto;

|

||||

display: flex;

|

||||

position: relative;

|

||||

|

||||

.swiper-slide {

|

||||

display: flex;

|

||||

overflow: hidden;

|

||||

flex-direction: column;

|

||||

}

|

||||

|

||||

li {

|

||||

height: 258px;

|

||||

|

||||

.imgBox {

|

||||

position: relative;

|

||||

display: flex;

|

||||

align-items: center;

|

||||

flex-direction: column;

|

||||

|

||||

p {

|

||||

text-align: center;

|

||||

font-weight: 500;

|

||||

font-size: 20px;

|

||||

line-height: 28px;

|

||||

color: #FFFFFF;

|

||||

}

|

||||

|

||||

img {

|

||||

margin-top: 20px;

|

||||

width: 373px;

|

||||

height: 210px;

|

||||

}

|

||||

|

||||

.button {

|

||||

position: absolute;

|

||||

right: 10px;

|

||||

bottom: 10px;

|

||||

padding: 10px 20px;

|

||||

border-radius: 28px;

|

||||

font-size: 16px;

|

||||

color: #fff;

|

||||

border: none;

|

||||

cursor: pointer;

|

||||

box-shadow: 0 10px 50px 0 rgba(34, 43, 62, 0.1), 0 8px 16px 0 rgba(33, 43, 61, 0.2), 0 10px 50px 0 rgba(34, 43, 62, 0.1);

|

||||

background-image: linear-gradient(to bottom, rgba(85, 188, 138, 0), rgba(85, 188, 138, 0.1) 97%), linear-gradient(to bottom, #55bc8a, #55bc8a);

|

||||

|

||||

&:hover {

|

||||

box-shadow: none;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

}

|

||||

|

||||

@media only screen and (max-width: 375px) {

|

||||

.swiper-slide {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

}

|

||||

|

||||

li {

|

||||

|

||||

&:nth-child(2) {

|

||||

margin: 0 0;

|

||||

}

|

||||

|

||||

img {

|

||||

width: 100% !important;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

@media only screen and (min-width: 376px) and (max-width: $mobile-max-width) {

|

||||

|

||||

.swiper-slide {

|

||||

display: flex;

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

}

|

||||

|

||||

li {

|

||||

&:nth-child(2) {

|

||||

margin: 0 0;

|

||||

}

|

||||

|

||||

img {

|

||||

width: 373px !important;

|

||||

}

|

||||

|

||||

.button {

|

||||

right: 15px !important;

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

@media only screen and (min-width: 769px) and (max-width: 1160px) {

|

||||

|

||||

li {

|

||||

flex-direction: column;

|

||||

align-items: center;

|

||||

|

||||

img {

|

||||

width: 320px !important;

|

||||

height: 180px !important;

|

||||

}

|

||||

|

||||

.button {

|

||||

right: 10px;

|

||||

bottom: 40px;

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

.prev-button {

|

||||

display: block;

|

||||

background: url('/images/live/arrow.svg');

|

||||

width: 40px;

|

||||

height: 40px;

|

||||

position: absolute;

|

||||

bottom: 153.5px;

|

||||

left: 0px;

|

||||

transform: rotate(180deg);

|

||||

cursor: pointer;

|

||||

|

||||

@media only screen and (max-width: 767px) {

|

||||

display: none;

|

||||

}

|

||||

|

||||

@media only screen and (min-width: $mobile-max-width) and (max-width: 1160px) {

|

||||

left: 20px;

|

||||

z-index: 200;

|

||||

}

|

||||

|

||||

&:hover {

|

||||

color: #008a5c;

|

||||

background: url('/images/live/arrow-hover.svg');

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

button {

|

||||

font-size: 12px;

|

||||

font-weight: 600;

|

||||

line-height: 2;

|

||||

border: none;

|

||||

padding: 5px 28px;

|

||||

border-radius: 17px;

|

||||

cursor: pointer;

|

||||

box-shadow: 0 10px 50px 0 rgba(34, 43, 62, 0.1), 0 8px 16px 0 rgba(33, 43, 61, 0.2);

|

||||

&:hover {

|

||||

box-shadow: none;

|

||||

.next-button {

|

||||

display: block;

|

||||

background: url('/images/live/arrow.svg');

|

||||

width: 40px;

|

||||

height: 40px;

|

||||

position: absolute;

|

||||

bottom: 153.5px;

|

||||

right: 0px;

|

||||

cursor: pointer;

|

||||

|

||||

@media only screen and (max-width: 767px) {

|

||||

display: none;

|

||||

}

|

||||

|

||||

@media only screen and (min-width: $mobile-max-width) and (max-width: 1160px) {

|

||||

right: 20px;

|

||||

z-index: 200;

|

||||

}

|

||||

|

||||

&:hover {

|

||||

background: url('/images/live/arrow-hover.svg');

|

||||

}

|

||||

|

||||

}

|

||||

}

|

||||

|

||||

.over-btn {

|

||||

color: #ffffff;

|

||||

background-image: linear-gradient(to bottom, rgba(0, 0, 0, 0), rgba(0, 0, 0, 0.1) 97%), linear-gradient(to bottom, #242e42, #242e42);

|

||||

}

|

||||

|

||||

.notive-btn {

|

||||

color: #3d3e49;

|

||||

background-image: linear-gradient(to bottom, rgba(0, 0, 0, 0), rgba(0, 0, 0, 0.1) 97%), linear-gradient(to bottom, #ffffff, #ffffff);

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

|

||||

.section-5 {

|

||||

.common-layout {

|

||||

position: relative;

|

||||

padding-top: 100px;

|

||||

padding-left: 60px;

|

||||

padding-bottom: 30px;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

padding-left: 20px;

|

||||

}

|

||||

|

||||

.left-div {

|

||||

position: relative;

|

||||

width: 600px;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

width: 100%;

|

||||

z-index: 2;

|

||||

}

|

||||

|

||||

h2 {

|

||||

font-size: 32px;

|

||||

font-weight: 600;

|

||||

|

|

@ -485,13 +667,28 @@

|

|||

}

|

||||

}

|

||||

|

||||

& > img {

|

||||

&>img {

|

||||

position: absolute;

|

||||

top: 88px;

|

||||

right: 0;

|

||||

|

||||

@media only screen and (max-width: $mobile-max-width) {

|

||||

opacity: 0.3;

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

.my-bullet-active {

|

||||

background: #55bc8a;

|

||||

opacity: 1;

|

||||

}

|

||||

|

||||

.swiper-horizontal>.swiper-pagination-bullets,

|

||||

.swiper-pagination-bullets.swiper-pagination-horizontal,

|

||||

.swiper-pagination-custom,

|

||||

.swiper-pagination-fraction {

|

||||

bottom: 5px;

|

||||

left: 0;

|

||||

width: 100%;

|

||||

}

|

||||

|

|

@ -180,3 +180,19 @@

|

|||

padding-top: 20px;

|

||||

}

|

||||

}

|

||||

|

||||

@mixin common-layout-special {

|

||||

position: relative;

|

||||

width: 1300px;

|

||||

margin: 0 auto;

|

||||

padding-left: 260px;

|

||||

|

||||

@media only screen and (max-width: $width-01) {

|

||||

width: 100%;

|

||||

}

|

||||

|

||||

@media only screen and (max-width: $width-02) {

|

||||

padding: 10px;

|

||||

padding-top: 20px;

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -3,6 +3,8 @@ baseURL = "https://kubesphere-v3.netlify.app"

|

|||

enableRobotsTXT = true

|

||||

|

||||

[markup]

|

||||

[markup.goldmark.extensions]

|

||||

typographer = false

|

||||

[markup.tableOfContents]

|

||||

endLevel = 3

|

||||

ordered = false

|

||||

|

|

@ -10,6 +12,7 @@ enableRobotsTXT = true

|

|||

[markup.goldmark.renderer]

|

||||

unsafe= true

|

||||

|

||||

|

||||

[Taxonomies]

|

||||

|

||||

[params]

|

||||

|

|

@ -21,8 +24,6 @@ githubBlobUrl = "https://github.com/kubesphere/website/blob/master/content"

|

|||

|

||||

githubEditUrl = "https://github.com/kubesphere/website/edit/master/content"

|

||||

|

||||

mailchimpSubscribeUrl = "https://kubesphere.us10.list-manage.com/subscribe/post?u=c85ea2b944b08b951f607bdd4&id=83f673a2d9"

|

||||

|

||||

gcs_engine_id = "018068616810858123755%3Apb1pt8sx6ve"

|

||||

|

||||

githubLink = "https://github.com/kubesphere/kubesphere"

|

||||

|

|

@ -34,6 +35,7 @@ twitterLink = "https://twitter.com/KubeSphere"

|

|||

mediumLink = "https://itnext.io/@kubesphere"

|

||||

linkedinLink = "https://www.linkedin.com/company/kubesphere/"

|

||||

|

||||

|

||||

[languages.en]

|

||||

contentDir = "content/en"

|

||||

weight = 1

|

||||

|

|

@ -45,6 +47,7 @@ title = "KubeSphere | The Kubernetes platform tailored for hybrid multicloud"

|

|||

description = "KubeSphere is a distributed operating system managing cloud native applications with Kubernetes as its kernel, and provides plug-and-play architecture for the seamless integration of third-party applications to boost its ecosystem."

|

||||

keywords = "KubeSphere, Kubernetes, container platform, DevOps, hybrid cloud, cloud native"

|

||||

snapshot = "/images/common/snapshot-en.png"

|

||||

mailchimpSubscribeUrl = "https://kubesphere.us10.list-manage.com/subscribe/post?u=c85ea2b944b08b951f607bdd4&id=83f673a2d9"

|

||||

|

||||

[[languages.en.menu.main]]

|

||||

weight = 2

|

||||

|

|

@ -105,33 +108,39 @@ hasChildren = true

|

|||

|

||||

[[languages.en.menu.main]]

|

||||

parent = "Documentation"

|

||||

name = "v3.1.x <img src='/images/header/star.svg' alt='star'>"

|

||||

URL = "docs/"

|

||||

name = "v3.2.x <img src='/images/header/star.svg' alt='star'>"

|

||||

URL = "/docs"

|

||||

weight = 1

|

||||

|

||||

[[languages.en.menu.main]]

|

||||

parent = "Documentation"

|

||||

name = "v3.1.x"

|

||||

URL = "https://v3-1.docs.kubesphere.io/docs"

|

||||

weight = 2

|

||||

|

||||

[[languages.en.menu.main]]

|

||||

parent = "Documentation"

|

||||

name = "v3.0.0"

|

||||

URL = "https://v3-0.docs.kubesphere.io/docs"

|

||||

weight = 2

|

||||

weight = 3

|

||||

|

||||

[[languages.en.menu.main]]

|

||||

parent = "Documentation"

|

||||

name = "v2.1.x"

|

||||

URL = "https://v2-1.docs.kubesphere.io/docs"

|

||||

weight = 3

|

||||

weight = 4

|

||||

|

||||

[[languages.en.menu.main]]

|

||||

parent = "Documentation"

|

||||

name = "v2.0.x"

|

||||

URL = "https://v2-0.docs.kubesphere.io/docs/"

|

||||

weight = 4

|

||||

weight = 5

|

||||

|

||||

[[languages.en.menu.main]]

|

||||

parent = "Documentation"

|

||||

name = "v1.0.0"

|

||||

URL = "https://v1-0.docs.kubesphere.io/docs/"

|

||||

weight = 5

|

||||

weight = 6

|

||||

|

||||

[[languages.en.menu.main]]

|

||||

weight = 5

|

||||

|

|

@ -185,6 +194,7 @@ title = "KubeSphere | 面向云原生应用的容器混合云"

|

|||

description = "KubeSphere 是在 Kubernetes 之上构建的以应用为中心的多租户容器平台,提供全栈的 IT 自动化运维的能力,简化企业的 DevOps 工作流。KubeSphere 提供了运维友好的向导式操作界面,帮助企业快速构建一个强大和功能丰富的容器云平台。"

|

||||

keywords = "KubeSphere, Kubernetes, 容器平台, DevOps, 混合云"

|

||||

snapshot = "/images/common/snapshot-zh.png"

|

||||

mailchimpSubscribeUrl = "https://yunify.us2.list-manage.com/subscribe/post?u=f29f08cef80223b46bad069b5&id=4838e610c2"

|

||||

|

||||

[[languages.zh.menu.main]]

|

||||

weight = 2

|

||||

|

|

@ -244,33 +254,39 @@ hasChildren = true

|

|||

name = "文档中心"

|

||||

[[languages.zh.menu.main]]

|

||||

parent = "文档中心"

|

||||

name = "v3.1.x <img src='/images/header/star.svg' alt='star'>"

|

||||

URL = "docs/"

|

||||

name = "v3.2.x <img src='/images/header/star.svg' alt='star'>"

|

||||

URL = "/docs/"

|

||||

weight = 1

|

||||

|

||||

[[languages.zh.menu.main]]

|

||||

parent = "文档中心"

|

||||

name = "v3.1.x"

|

||||

URL = "https://v3-1.docs.kubesphere.io/zh/docs/"

|

||||

weight = 2

|

||||

|

||||

[[languages.zh.menu.main]]

|

||||

parent = "文档中心"

|

||||

name = "v3.0.0"

|

||||

URL = "https://v3-0.docs.kubesphere.io/zh/docs/"

|

||||

weight = 2

|

||||

weight = 3

|

||||

|

||||

[[languages.zh.menu.main]]

|

||||

parent = "文档中心"

|

||||

name = "v2.1.x"

|

||||

URL = "https://v2-1.docs.kubesphere.io/docs/zh-CN/"

|

||||

weight = 3

|

||||

weight = 4

|

||||

|

||||

[[languages.zh.menu.main]]

|

||||

parent = "文档中心"

|

||||

name = "v2.0.x"

|

||||

URL = "https://v2-0.docs.kubesphere.io/docs/zh-CN/"

|

||||

weight = 4

|

||||

weight = 5

|

||||

|

||||

[[languages.zh.menu.main]]

|

||||

parent = "文档中心"

|

||||

name = "v1.0.0"

|

||||

URL = "https://v1-0.docs.kubesphere.io/docs/zh-CN/"

|

||||

weight = 5

|

||||

weight = 6

|

||||

|

||||

[[languages.zh.menu.main]]

|

||||

weight = 5

|

||||

|

|

|

|||

|

|

@ -4,5 +4,12 @@ defaultContentLanguage = "zh"

|

|||

[params]

|

||||

showCaseNumber = true

|

||||

|

||||

addBaiduAnalytics = true

|

||||

|

||||

bilibiliLink = "https://space.bilibili.com/438908638"

|

||||

mailchimpSubscribeUrl = "https://yunify.us2.list-manage.com/subscribe/post?u=f29f08cef80223b46bad069b5&id=4838e610c2"

|

||||

|

||||

[languages.en.params]

|

||||

mailchimpSubscribeUrl = "https://yunify.us2.list-manage.com/subscribe/post?u=f29f08cef80223b46bad069b5&id=4838e610c2"

|

||||

|

||||

[languages.zh.params]

|

||||

mailchimpSubscribeUrl = "https://yunify.us2.list-manage.com/subscribe/post?u=f29f08cef80223b46bad069b5&id=4838e610c2"

|

||||

|

|

|

|||

|

|

@ -90,7 +90,7 @@ section4:

|

|||

|

||||

- name: Multiple Storage and Networking Solutions

|

||||

icon: /images/home/multi-tenant-management.svg

|

||||

content: Support GlusterFS, CephRBD, NFS, LocalPV solutions, and provide CSI plugins to consume storage from multiple cloud providers. Provide a <a class='inner-a' target='_blank' href='https://porterlb.io'>load balancer Porter</a> for bare metal Kubernetes, and offers network policy management, support Calico and Flannel CNI

|

||||

content: Support GlusterFS, CephRBD, NFS, LocalPV solutions, and provide CSI plugins to consume storage from multiple cloud providers. Provide a <a class='inner-a' target='_blank' href='https://porterlb.io'>load balancer OpenELB</a> for bare metal Kubernetes, and offers network policy management, support Calico and Flannel CNI

|

||||

|

||||

features:

|

||||

|

||||

|

|

|

|||

|

|

@ -20,9 +20,9 @@ In this article, we will introduce the deployment of Kasten K10 on KubeSphere.

|

|||

|

||||

## Provision a KubeSphere Cluster

|

||||

|

||||

This article will introduce how to deploy Kasten on on KubeSphere Container Platform. You can install KubeSphere on any Kubernetes cluster or Linux system, refer to [KubeSphere documentation](https://kubesphere.io/docs/quick-start/all-in-one-on-linux/) for more details or vist the [Github]( https://github.com/kubesphere/website) of KubeSphere.

|

||||

This article will introduce how to deploy Kasten on KubeSphere Container Platform. You can install KubeSphere on any Kubernetes cluster or Linux system, refer to [KubeSphere documentation](https://kubesphere.io/docs/quick-start/all-in-one-on-linux/) for more details or vist the [Github]( https://github.com/kubesphere/website) of KubeSphere.

|

||||

|

||||

After the creation of KubeSphere cluster, you can log in to KubeSphere web console:

|

||||

After the creation of KubeSphere cluster, you can log in to the KubeSphere web console:

|

||||

|

||||

|

||||

Click the button "Platform" in the upper left corner and then select "Access Control"; Create a new workspace called Kasten-Workspace.

|

||||

|

|

@ -34,7 +34,7 @@ Enter "Kasten-workspace" and select "App Repositoties"; Add an application repos

|

|||

Add the official Helm Repository of Kasten to KubeSphere. **Helm repository address**[2]:`https://charts.kasten.io/`

|

||||

|

||||

|

||||

Once completed, the repository will find its status be "successful".

|

||||

Once completed, the repository will find its status to be "successful".

|

||||

|

||||

|

||||

## Deploy Kasten K10 on Kubernetes to Backup and Restore Cluster

|

||||

|

|

@ -76,7 +76,7 @@ global:

|

|||

create: "true"

|

||||

class: "nginx"

|

||||

```

|

||||

Click "Deploy" and wait the status to turn into "running".

|

||||

Click "Deploy" and wait for the status to turn into "running".

|

||||

|

||||

Click "Deployment" to check if Kasten has deployed workload and is in running status.

|

||||

|

||||

|

|

@ -93,7 +93,7 @@ In “Application Workloads” - “Routes” page, we can find the Gateway of I

|

|||

Input `https://192.168.99.100/k10/#` to the browser for the following log-in interface; Input the company and e-mail address to sign up.

|

||||

|

||||

|

||||

Set the locations for storing our backup data. In this case S3 compatible storage is selected.

|

||||

Set the locations for storing our backup data. In this case, S3 compatible storage is selected.

|

||||

|

||||

|

||||

|

||||

|

|

@ -105,7 +105,7 @@ Finally, start "K10 Disaster Recovery" and we can start to set "Disaster Recover

|

|||

|

||||

## Deploy Cloud Native Applications on Kubernetes

|

||||

|

||||

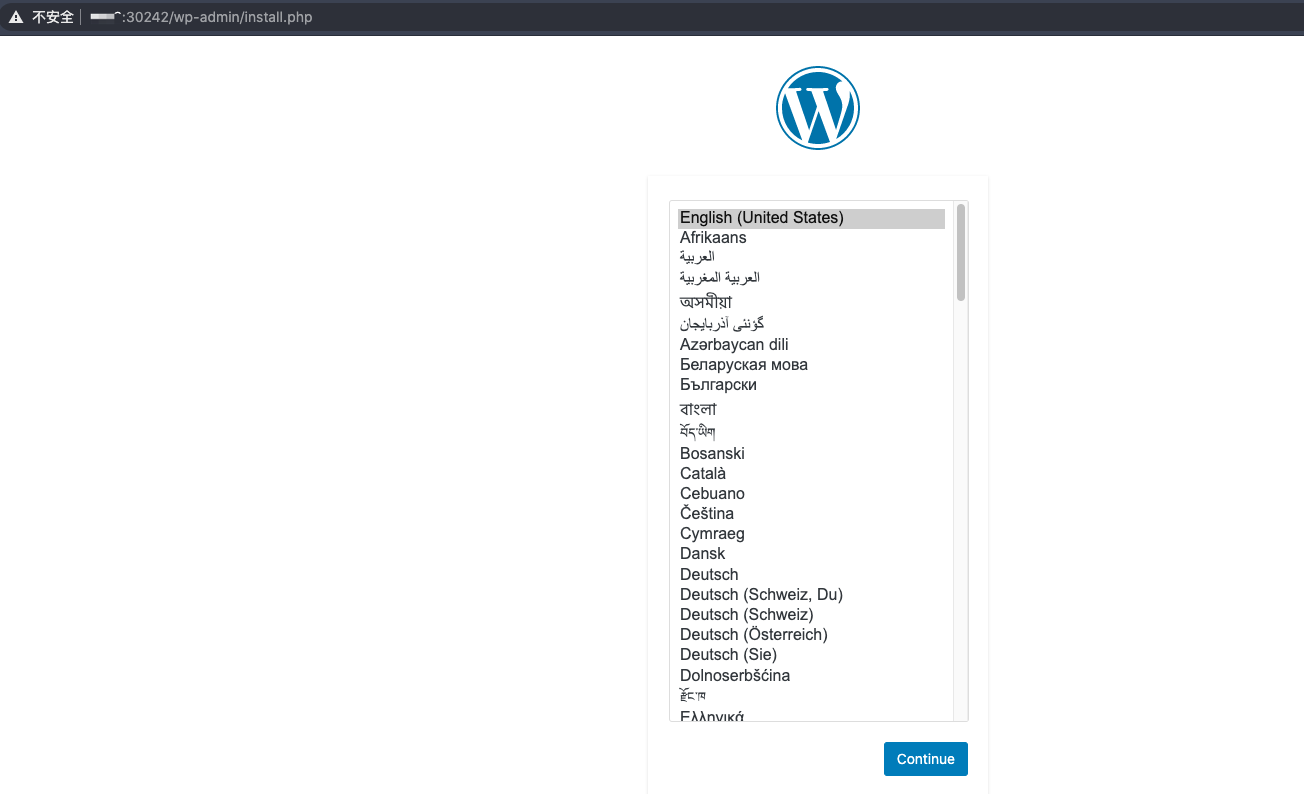

Kasten Dashboard holds 16 applications, which are shown as follows. We can create a Wordpress application with a Wordpress Pod and Mysql Pod, a typical application that is partly stateful and partly stateless. Here are the steps.

|

||||

Kasten Dashboard holds 16 applications, which are shown as follows. We can create a WordPress application with a WordPress Pod and Mysql Pod, a typical application that is partly stateful and partly stateless. Here are the steps.

|

||||

|

||||

|

||||

|

||||

|

|

@ -149,7 +149,7 @@ In addition, applications of the WordPress can also be find in "Applications".

|

|||

|

||||

## Back Up Cloud Native Applications

|

||||

|

||||

Click "Create Policy" and create a data backup strategy. In such case, Kasten can protect applications by creating local snapshot, and back up the application data to cloud, thus to realize the long-term retention of data.

|

||||

Click "Create Policy" and create a data backup strategy. In such a case, Kasten can protect applications by creating local snapshot, and back up the application data to cloud, thus to realize the long-term retention of data.

|

||||

|

||||

|