| OS | Minimum Requirements |
|---|---|
| Ubuntu 16.04, 18.04 | 2 CPU cores, 2 GB memory, and 40 GB disk space |
| Debian Buster, Stretch | 2 CPU cores, 2 GB memory, and 40 GB disk space |
| CentOS 7.x | 2 CPU cores, 2 GB memory, and 40 GB disk space |
| Red Hat Enterprise Linux 7 | 2 CPU cores, 2 GB memory, and 40 GB disk space |
| SUSE Linux Enterprise Server 15/openSUSE Leap 15.2 | 2 CPU cores, 2 GB memory, and 40 GB disk space |
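As a quick pre-flight check against the requirements table, the following shell sketch compares a host's CPU cores, memory, and root-filesystem size with the 2 core / 2 GB / 40 GB minimum. It is not part of KubeKey: `meets_minimum` and `check_this_host` are hypothetical helpers, and `check_this_host` assumes a Linux host with GNU coreutils (`nproc`, `df --output`). Because `MemTotal` in `/proc/meminfo` is usually slightly below the nominal memory size, the sketch rounds memory to the nearest GiB.

```bash
# Hypothetical helper: succeeds when the given values meet the minimum
# requirements in the table (2 CPU cores, 2 GB memory, 40 GB disk).
meets_minimum() {
  cores=$1 mem_gb=$2 disk_gb=$3
  [ "$cores" -ge 2 ] && [ "$mem_gb" -ge 2 ] && [ "$disk_gb" -ge 40 ]
}

# Hypothetical helper: read the values from the current Linux host.
# Assumes GNU coreutils; the disk figure is the size of the root filesystem.
check_this_host() {
  cores=$(nproc)
  # MemTotal is reported in kB; round to the nearest GiB.
  mem_gb=$(awk '/^MemTotal:/ { printf "%d", $2 / 1048576 + 0.5 }' /proc/meminfo)
  disk_gb=$(df -BG --output=size / | tail -n 1 | tr -dc '0-9')
  if meets_minimum "$cores" "${mem_gb:-0}" "${disk_gb:-0}"; then
    echo "OK: ${cores} cores, ${mem_gb} GB memory, ${disk_gb} GB disk"
  else
    echo "Below minimum: ${cores} cores, ${mem_gb} GB memory, ${disk_gb} GB disk"
    return 1
  fi
}
```

Run `check_this_host` on each node before installation; a non-zero exit status means at least one value is below the minimum.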

| Dependency | Kubernetes Version ≥ 1.18 | Kubernetes Version < 1.18 |
|---|---|---|
| socat | Required | Optional but recommended |
| conntrack | Required | Optional but recommended |
| ebtables | Optional but recommended | Optional but recommended |
| ipset | Optional but recommended | Optional but recommended |
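The dependency table can be turned into a quick pre-flight check. The sketch below is an illustration, not a KubeKey feature: `dep_level` and `check_deps` are hypothetical names, the Kubernetes version is passed as its minor number (for example `21` for v1.21.x), and `command -v` only finds tools on the current `PATH`, so run it as root or with `/sbin` and `/usr/sbin` on the `PATH`.

```bash
# Hypothetical helper: classify a dependency for Kubernetes 1.<minor>,
# following the dependency table.
dep_level() {
  name=$1 minor=$2
  case $name in
    socat|conntrack)
      if [ "$minor" -ge 18 ]; then echo required; else echo recommended; fi ;;
    ebtables|ipset)
      echo recommended ;;
    *)
      echo unknown ;;
  esac
}

# Hypothetical helper: report each dependency and return non-zero only when
# a dependency that is required for this Kubernetes minor version is missing.
check_deps() {
  minor=$1 missing_required=0
  for dep in socat conntrack ebtables ipset; do
    level=$(dep_level "$dep" "$minor")
    if command -v "$dep" >/dev/null 2>&1; then
      echo "$dep: found"
    else
      echo "$dep: missing ($level)"
      if [ "$level" = required ]; then missing_required=1; fi
    fi
  done
  return "$missing_required"
}
```

For example, `check_deps 21` targets Kubernetes v1.21 and fails only if `socat` or `conntrack` is missing; the other two tools are reported but never cause a failure.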

-| version | The Kubernetes version to be installed. If you do not specify a Kubernetes version, {{< contentLink "docs/installing-on-linux/introduction/kubekey" "KubeKey" >}} v1.1.0 will install Kubernetes v1.19.8 by default. For more information, see {{< contentLink "docs/installing-on-linux/introduction/kubekey/#support-matrix" "Support Matrix" >}}. |
+| version | The Kubernetes version to be installed. If you do not specify a Kubernetes version, {{< contentLink "docs/installing-on-linux/introduction/kubekey" "KubeKey" >}} v1.2.1 will install Kubernetes v1.21.5 by default. For more information, see {{< contentLink "docs/installing-on-linux/introduction/kubekey/#support-matrix" "Support Matrix" >}}. |

imageRepo |

@@ -116,10 +116,11 @@ The below table describes the above parameters in detail.

4. Click the icon on the right and then select **Edit YAML** to edit `ks-installer`.


5. In the YAML file of `ks-installer`, change the value of `jwtSecret` to the corresponding value shown above and set the value of `clusterRole` to `member`. Click **Update** to save your changes.

```yaml

@@ -63,20 +59,12 @@ Log in to the web console of Alibaba Cloud. Go to **Clusters** under **Container

-### Step 3: Import the ACK Member Cluster

+### Step 3: Import the ACK member cluster

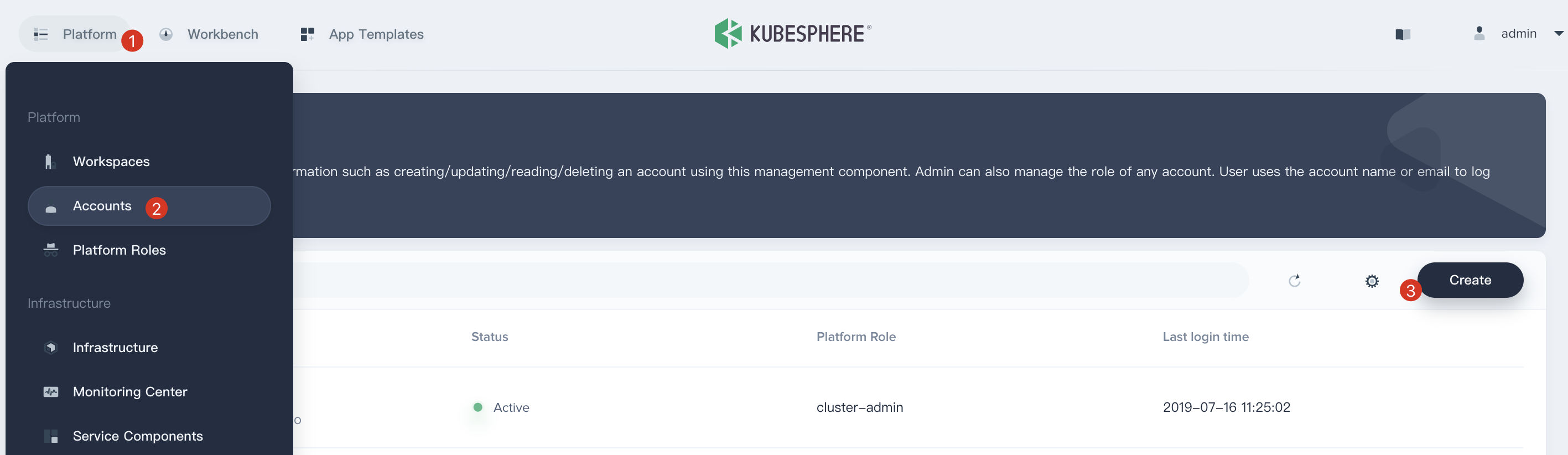

-1. Log in to the KubeSphere console on your Host Cluster as `admin`. Click **Platform** in the upper-left corner and then select **Cluster Management**. On the **Cluster Management** page, click **Add Cluster**.


+1. Log in to the KubeSphere console on your host cluster as `admin`. Click **Platform** in the upper-left corner and then select **Cluster Management**. On the **Cluster Management** page, click **Add Cluster**.

2. Enter the basic information based on your needs and click **Next**.


+3. In **Connection Method**, select **Direct connection**. Fill in the kubeconfig file of the ACK member cluster and then click **Create**.

-3. In **Connection Method**, select **Direct Connection**. Fill in the kubeconfig file of the ACK Member Cluster and then click **Create**.


-4. Wait for cluster initialization to finish.


\ No newline at end of file

+4. Wait for cluster initialization to finish.

\ No newline at end of file

diff --git a/content/en/docs/multicluster-management/import-cloud-hosted-k8s/import-aws-eks.md b/content/en/docs/multicluster-management/import-cloud-hosted-k8s/import-aws-eks.md

index 8cac5d377..1882a0ac9 100644

--- a/content/en/docs/multicluster-management/import-cloud-hosted-k8s/import-aws-eks.md

+++ b/content/en/docs/multicluster-management/import-cloud-hosted-k8s/import-aws-eks.md

@@ -10,18 +10,18 @@ This tutorial demonstrates how to import an AWS EKS cluster through the [direct

## Prerequisites

-- You have a Kubernetes cluster with KubeSphere installed, and prepared this cluster as the Host Cluster. For more information about how to prepare a Host Cluster, refer to [Prepare a Host Cluster](../../../multicluster-management/enable-multicluster/direct-connection/#prepare-a-host-cluster).

-- You have an EKS cluster to be used as the Member Cluster.

+- You have a Kubernetes cluster with KubeSphere installed and have prepared this cluster as the host cluster. For more information about how to prepare a host cluster, refer to [Prepare a host cluster](../../../multicluster-management/enable-multicluster/direct-connection/#prepare-a-host-cluster).

+- You have an EKS cluster to be used as the member cluster.

## Import an EKS Cluster

-### Step 1: Deploy KubeSphere on your EKS Cluster

+### Step 1: Deploy KubeSphere on your EKS cluster

You need to deploy KubeSphere on your EKS cluster first. For more information about how to deploy KubeSphere on EKS, refer to [Deploy KubeSphere on AWS EKS](../../../installing-on-kubernetes/hosted-kubernetes/install-kubesphere-on-eks/#install-kubesphere-on-eks).

-### Step 2: Prepare the EKS Member Cluster

+### Step 2: Prepare the EKS member cluster

-1. In order to manage the Member Cluster from the Host Cluster, you need to make `jwtSecret` the same between them. Therefore, get it first by executing the following command on your Host Cluster.

+1. To manage the member cluster from the host cluster, both clusters must use the same `jwtSecret`. First, get the `jwtSecret` of the host cluster by running the following command on it.

```bash

kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret

@@ -37,12 +37,8 @@ You need to deploy KubeSphere on your EKS cluster first. For more information ab

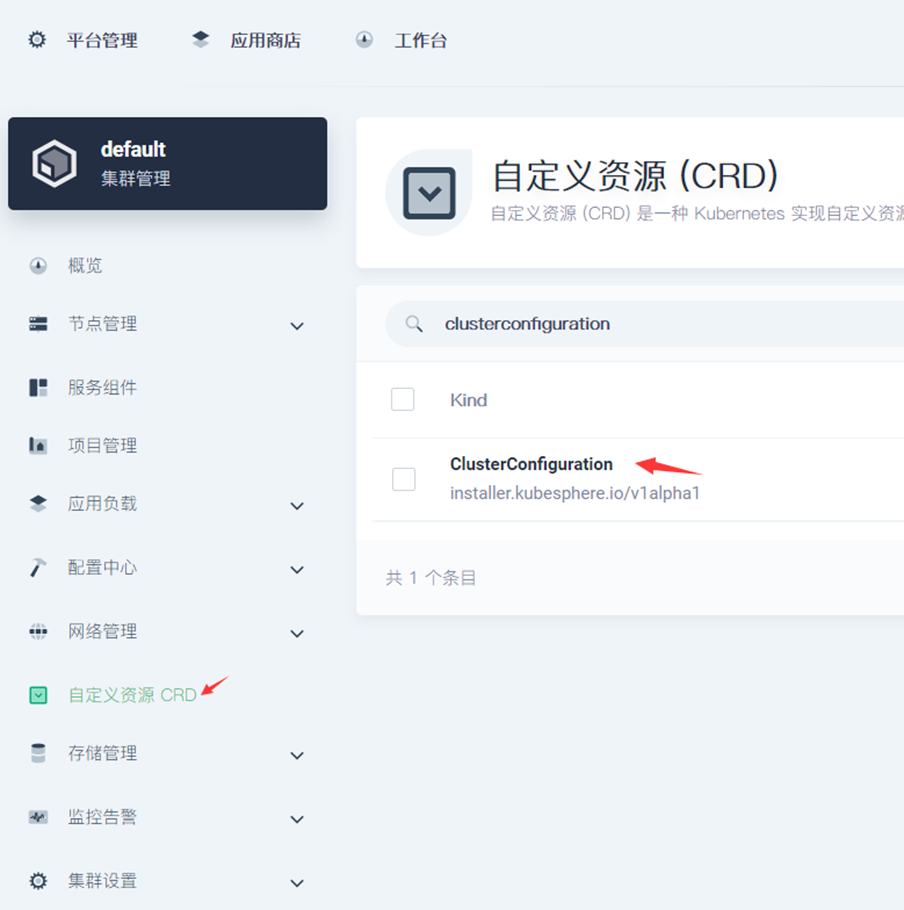

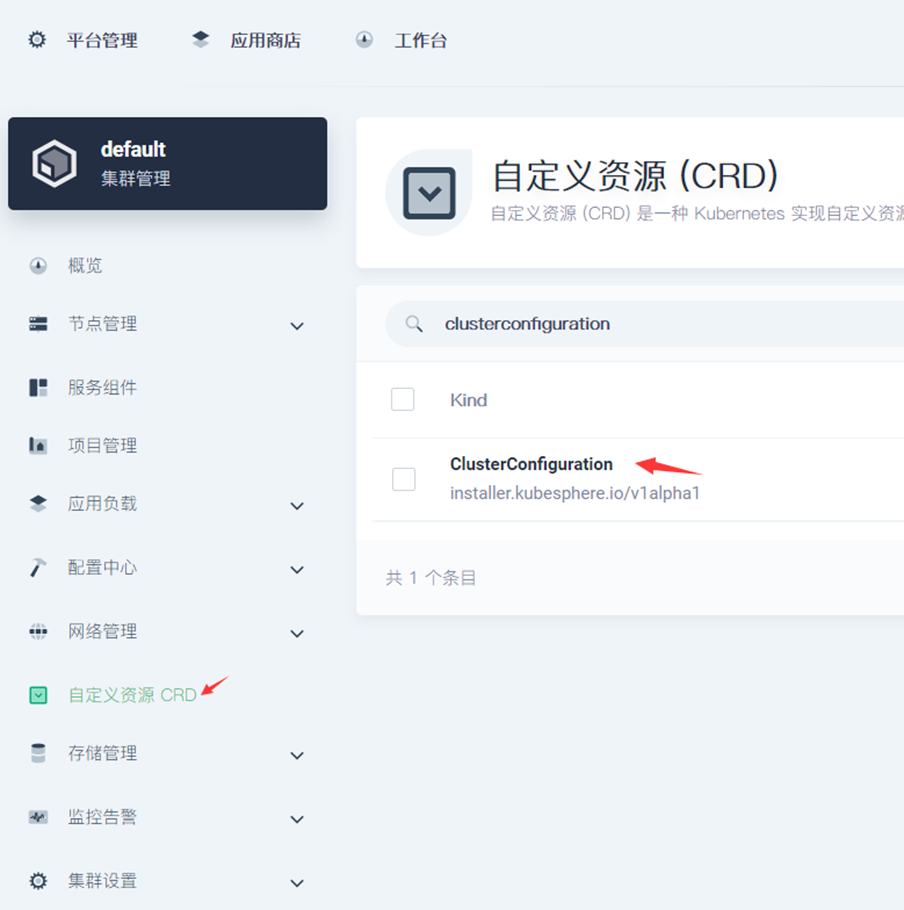

3. Go to **CRDs**, enter `ClusterConfiguration` in the search bar, and then press **Enter** on your keyboard. Click **ClusterConfiguration** to go to its detail page.


4. Click the icon on the right and then select **Edit YAML** to edit `ks-installer`.


5. In the YAML file of `ks-installer`, change the value of `jwtSecret` to the corresponding value shown above and set the value of `clusterRole` to `member`. Click **Update** to save your changes.

```yaml


@@ -164,20 +160,12 @@ You need to deploy KubeSphere on your EKS cluster first. For more information ab

ip-10-0-8-148.cn-north-1.compute.internal Ready

4. Click the icon on the right and then select **Edit YAML** to edit `ks-installer`.


5. In the YAML file of `ks-installer`, change the value of `jwtSecret` to the corresponding value shown above and set the value of `clusterRole` to `member`. Click **Update** to save your changes.

```yaml


@@ -109,20 +105,12 @@ You need to deploy KubeSphere on your GKE cluster first. For more information ab

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InNjOFpIb3RrY3U3bGNRSV9NWV8tSlJzUHJ4Y2xnMDZpY3hhc1BoVy0xTGsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlc3BoZXJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlc3BoZXJlLXRva2VuLXpocmJ3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6Imt1YmVzcGhlcmUiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIyMGFmZGI1Ny01MTBkLTRjZDgtYTAwYS1hNDQzYTViNGM0M2MiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXNwaGVyZS1zeXN0ZW06a3ViZXNwaGVyZSJ9.ic6LaS5rEQ4tXt_lwp7U_C8rioweP-ZdDjlIZq91GOw9d6s5htqSMQfTeVlwTl2Bv04w3M3_pCkvRzMD0lHg3mkhhhP_4VU0LIo4XeYWKvWRoPR2kymLyskAB2Khg29qIPh5ipsOmGL9VOzD52O2eLtt_c6tn-vUDmI_Zw985zH3DHwUYhppGM8uNovHawr8nwZoem27XtxqyBkqXGDD38WANizyvnPBI845YqfYPY5PINPYc9bQBFfgCovqMZajwwhcvPqS6IpG1Qv8TX2lpuJIK0LLjiKaHoATGvHLHdAZxe_zgAC2cT_9Ars3HIN4vzaSX0f-xP--AcRgKVSY9g

```
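A service account token like the one above is typically placed in a new kubeconfig that authenticates with the token directly. This is presumably needed because the default GKE kubeconfig relies on `gcloud`-based authentication, which the host cluster cannot perform. The following shell sketch writes such a file; the function name, the `gke-member` and `kubesphere` entry names, and the example server address are placeholders, and the API server URL and CA data are assumed to come from your existing GKE kubeconfig.

```bash
# Hypothetical helper: write a kubeconfig that authenticates with a bearer
# token. Arguments: output file, API server URL, base64 CA data, token.
write_token_kubeconfig() {
  out=$1 server=$2 ca_data=$3 token=$4
  cat > "$out" <<EOF
apiVersion: v1
kind: Config
clusters:
- name: gke-member
  cluster:
    server: ${server}
    certificate-authority-data: ${ca_data}
users:
- name: kubesphere
  user:
    token: ${token}
contexts:
- name: gke-member
  context:
    cluster: gke-member
    user: kubesphere
current-context: gke-member
EOF
  # The file contains a credential, so keep it readable by the owner only.
  chmod 600 "$out"
}
```

For example, `write_token_kubeconfig gke-member.kubeconfig "https://203.0.113.10" "$CA_DATA" "$TOKEN"`, where `CA_DATA` might be read with `kubectl config view --raw --minify -o jsonpath='{.clusters[0].cluster.certificate-authority-data}'` and the server URL with `{.clusters[0].cluster.server}`. The contents of the resulting file are what you would paste into **Connection Method**.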

-### Step 4: Import the GKE Member Cluster

+### Step 4: Import the GKE member cluster

-1. Log in to the KubeSphere console on your Host Cluster as `admin`. Click **Platform** in the upper-left corner and then select **Cluster Management**. On the **Cluster Management** page, click **Add Cluster**.


+1. Log in to the KubeSphere console on your host cluster as `admin`. Click **Platform** in the upper-left corner and then select **Cluster Management**. On the **Cluster Management** page, click **Add Cluster**.

2. Enter the basic information based on your needs and click **Next**.


+3. In **Connection Method**, select **Direct connection**. Fill in the new kubeconfig file of the GKE member cluster and then click **Create**.

-3. In **Connection Method**, select **Direct Connection**. Fill in the new kubeconfig file of the GKE Member Cluster and then click **Create**.


-4. Wait for cluster initialization to finish.


\ No newline at end of file

+4. Wait for cluster initialization to finish.

\ No newline at end of file
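Because the host cluster and each member cluster must share the same `jwtSecret`, it can help to compare the two values before clicking **Create**. The sketch below is a hypothetical helper, not part of KubeSphere: `jwt_value` extracts the value from the `kubesphere-config` output used in the member cluster preparation steps, and its anchored pattern skips JSON copies of the configuration (such as a `last-applied-configuration` annotation), which is presumably what `grep -v "apiVersion"` filters out in the original command. The kubectl context names `host` and `member` are assumptions.

```bash
# Hypothetical helper: print the jwtSecret value from kubesphere-config YAML
# read on stdin. Only lines that start with `jwtSecret:` (after indentation)
# match, so JSON copies embedded in annotations are ignored.
jwt_value() {
  sed -n 's/^[[:space:]]*jwtSecret:[[:space:]]*"\{0,1\}\([^"[:space:]]*\)"\{0,1\}[[:space:]]*$/\1/p'
}

# Succeeds only when both values are non-empty and identical.
jwt_matches() {
  [ -n "$1" ] && [ "$1" = "$2" ]
}

# Live comparison, run only on request; `host` and `member` are assumed
# kubectl context names.
if [ "${RUN_LIVE:-0}" = 1 ]; then
  h=$(kubectl --context host -n kubesphere-system get cm kubesphere-config -o yaml | jwt_value)
  m=$(kubectl --context member -n kubesphere-system get cm kubesphere-config -o yaml | jwt_value)
  if jwt_matches "$h" "$m"; then echo "jwtSecret matches"; else echo "jwtSecret differs"; fi
fi
```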

diff --git a/content/en/docs/multicluster-management/import-on-prem-k8s/_index.md b/content/en/docs/multicluster-management/import-on-prem-k8s/_index.md

deleted file mode 100644

index 8d0aeb228..000000000

--- a/content/en/docs/multicluster-management/import-on-prem-k8s/_index.md

+++ /dev/null

@@ -1,7 +0,0 @@

----

-linkTitle: "Import On-premises Kubernetes Clusters"

-weight: 5400

-

-_build:

- render: false

----

diff --git a/content/en/docs/multicluster-management/import-on-prem-k8s/import-kubeadm-k8s.md b/content/en/docs/multicluster-management/import-on-prem-k8s/import-kubeadm-k8s.md

deleted file mode 100644

index 9370f4355..000000000

--- a/content/en/docs/multicluster-management/import-on-prem-k8s/import-kubeadm-k8s.md

+++ /dev/null

@@ -1,10 +0,0 @@

----

-title: "Import Kubeadm Kubernetes Cluster"

-keywords: 'kubernetes, kubesphere, multicluster, kubeadm'

-description: 'Learn how to import a Kubernetes cluster created with kubeadm.'

-

-

-weight: 5410

----

-

-TBD

diff --git a/content/en/docs/multicluster-management/introduction/kubefed-in-kubesphere.md b/content/en/docs/multicluster-management/introduction/kubefed-in-kubesphere.md

index ffad56b3c..f4dcc4d82 100644

--- a/content/en/docs/multicluster-management/introduction/kubefed-in-kubesphere.md

+++ b/content/en/docs/multicluster-management/introduction/kubefed-in-kubesphere.md

@@ -1,7 +1,7 @@

---

title: "KubeSphere Federation"

keywords: 'Kubernetes, KubeSphere, federation, multicluster, hybrid-cloud'

-description: 'Understand the fundamental concept of Kubernetes federation in KubeSphere, including M clusters and H clusters.'

+description: 'Understand the fundamental concept of Kubernetes federation in KubeSphere, including member clusters and host clusters.'

linkTitle: "KubeSphere Federation"

weight: 5120

---

@@ -10,11 +10,11 @@ The multi-cluster feature relates to the network connection among multiple clust

## How the Multi-cluster Architecture Works

-Before you use the central control plane of KubeSphere to management multiple clusters, you need to create a Host Cluster, also known as **H** Cluster. The H Cluster, essentially, is a KubeSphere cluster with the multi-cluster feature enabled. It provides you with the control plane for unified management of Member Clusters, also known as **M** Cluster. M Clusters are common KubeSphere clusters without the central control plane. Namely, tenants with necessary permissions (usually cluster administrators) can access the control plane from the H Cluster to manage all M Clusters, such as viewing and editing resources on M Clusters. Conversely, if you access the web console of any M Cluster separately, you cannot see any resources on other clusters.

+Before you use the central control plane of KubeSphere to manage multiple clusters, you need to create a host cluster. The host cluster, essentially, is a KubeSphere cluster with the multi-cluster feature enabled. It provides you with the control plane for unified management of member clusters. Member clusters are common KubeSphere clusters without the central control plane. That is, tenants with the necessary permissions (usually cluster administrators) can access the control plane from the host cluster to manage all member clusters, such as viewing and editing resources on member clusters. Conversely, if you access the web console of any member cluster separately, you cannot see any resources on other clusters.


+There can be only one host cluster, while multiple member clusters can exist at the same time. In a multi-cluster architecture, the network between the host cluster and member clusters can be [connected directly](../../enable-multicluster/direct-connection/) or [through an agent](../../enable-multicluster/agent-connection/). Member clusters can also run in network environments that are completely isolated from each other.

-There can only be one H Cluster while multiple M Clusters can exist at the same time. In a multi-cluster architecture, the network between the H Cluster and M Clusters can be connected directly or through an agent. The network between M Clusters can be set in a completely isolated environment.

+If you are using on-premises Kubernetes clusters built through kubeadm, install KubeSphere on your Kubernetes clusters by referring to [Air-gapped Installation on Kubernetes](../../../installing-on-kubernetes/on-prem-kubernetes/install-ks-on-linux-airgapped/), and then enable KubeSphere multi-cluster management through direct connection or agent connection.

@@ -38,12 +38,12 @@ Before you enable multi-cluster management, make sure you have enough resources

{{< notice note >}}

- The CPU and memory requests and limits all refer to a single replica.

-- After the multi-cluster feature is enabled, tower and controller-manager will be installed on the H Cluster. If you use [agent connection](../../../multicluster-management/enable-multicluster/agent-connection/), only tower is needed for M Clusters. If you use [direct connection](../../../multicluster-management/enable-multicluster/direct-connection/), no additional component is needed for M Clusters.

+- After the multi-cluster feature is enabled, tower and controller-manager will be installed on the host cluster. If you use [agent connection](../../../multicluster-management/enable-multicluster/agent-connection/), only tower is needed for member clusters. If you use [direct connection](../../../multicluster-management/enable-multicluster/direct-connection/), no additional component is needed for member clusters.

{{</ notice >}}

## Use the App Store in a Multi-cluster Architecture

-Different from other components in KubeSphere, the [KubeSphere App Store](../../../pluggable-components/app-store/) serves as a global application pool for all clusters, including H Cluster and M Clusters. You only need to enable the App Store on the H Cluster and you can use functions related to the App Store on M Clusters directly (no matter whether the App Store is enabled on M Clusters or not), such as [app templates](../../../project-user-guide/application/app-template/) and [app repositories](../../../workspace-administration/app-repository/import-helm-repository/).

+Unlike other components in KubeSphere, the [KubeSphere App Store](../../../pluggable-components/app-store/) serves as a global application pool for all clusters, including the host cluster and member clusters. You only need to enable the App Store on the host cluster to use App Store functions on member clusters directly, such as [app templates](../../../project-user-guide/application/app-template/) and [app repositories](../../../workspace-administration/app-repository/import-helm-repository/), regardless of whether the App Store is enabled on the member clusters.

-However, if you only enable the App Store on M Clusters without enabling it on the H Cluster, you will not be able to use the App Store on any cluster in the multi-cluster architecture.

\ No newline at end of file

+However, if you only enable the App Store on member clusters without enabling it on the host cluster, you will not be able to use the App Store on any cluster in the multi-cluster architecture.

\ No newline at end of file

diff --git a/content/en/docs/multicluster-management/unbind-cluster.md b/content/en/docs/multicluster-management/unbind-cluster.md

index b3326402a..9f5dae030 100644

--- a/content/en/docs/multicluster-management/unbind-cluster.md

+++ b/content/en/docs/multicluster-management/unbind-cluster.md

@@ -11,20 +11,16 @@ This tutorial demonstrates how to unbind a cluster from the central control plan

## Prerequisites

- You have enabled multi-cluster management.

-- You need an account granted a role including the authorization of **Cluster Management**. For example, you can log in to the console as `admin` directly or create a new role with the authorization and assign it to an account.

+- You need a user that has been granted a role with the **Cluster Management** authorization. For example, you can log in to the console as `admin` directly, or create a new role with this authorization and assign it to a user.

## Unbind a Cluster

-1. Click **Platform** in the top-left corner and select **Cluster Management**.

+1. Click **Platform** in the upper-left corner and select **Cluster Management**.

-2. On the **Cluster Management** page, click the cluster that you want to remove from the central control plane.


+2. On the **Cluster Management** page, click the cluster that you want to remove from the control plane.

3. Go to **Basic Information** under **Cluster Settings**, check **I confirm I want to unbind the cluster** and click **Unbind**.


{{< notice note >}}

After you unbind the cluster, you can no longer manage it from the control plane, but the Kubernetes resources on the cluster are not deleted.

diff --git a/content/en/docs/pluggable-components/alerting.md b/content/en/docs/pluggable-components/alerting.md

index 0cce528fd..851e365b9 100644

--- a/content/en/docs/pluggable-components/alerting.md

+++ b/content/en/docs/pluggable-components/alerting.md

@@ -6,9 +6,9 @@ linkTitle: "KubeSphere Alerting"

weight: 6600

---

-Alerting is an important building block of observability, closely related to monitoring and logging. The alerting system in KubeSphere, coupled with the proactive failure notification system, allows users to know activities of interest based on alerting policies. When a predefined threshold of a certain metric is reached, an alert will be sent to preconfigured recipients. Therefore, you need to configure the notification method beforehand, including Email, Slack, DingTalk, WeCom and Webhook. With a highly functional alerting and notification system in place, you can quickly identify and resolve potential issues in advance before they affect your business.

+Alerting is an important building block of observability, closely related to monitoring and logging. The alerting system in KubeSphere, coupled with the proactive failure notification system, lets users know about activities of interest based on alerting policies. When a predefined threshold of a certain metric is reached, an alert is sent to preconfigured recipients. Therefore, you need to configure the notification method beforehand, including Email, Slack, DingTalk, WeCom, and Webhook. With a highly functional alerting and notification system in place, you can quickly identify and resolve potential issues before they affect your business.

-## Enable Alerting before Installation

+## Enable Alerting Before Installation

### Installing on Linux

@@ -39,9 +39,9 @@ If you adopt [All-in-One Installation](../../quick-start/all-in-one-on-linux/),

### Installing on Kubernetes

-As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable Alerting first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) file.

+As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable Alerting first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) file.

-1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) and edit it.

+1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) and edit it.

```bash

vi cluster-configuration.yaml

@@ -57,14 +57,14 @@ As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introdu

3. Execute the following commands to start installation:

```bash

- kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

+ kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

```
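The edit to `cluster-configuration.yaml` (presumably switching `enabled` from `false` to `true` under `alerting`) can also be scripted instead of done in `vi`. This sketch assumes the layout of the downloaded file, where `enabled:` is the first key under `alerting:`; `enable_component` is a hypothetical helper, and it writes through a temporary file so it behaves the same with GNU and BSD `sed`.

```bash
# Hypothetical helper: set `enabled: true` in the first `enabled:` line that
# follows the `<component>:` key. Writing through a temporary file avoids the
# differences between GNU and BSD `sed -i` and keeps the file's permissions.
enable_component() {
  file=$1 component=$2
  tmp=$(mktemp) || return 1
  sed "/^[[:space:]]*${component}:/,/enabled:/ s/enabled:[[:space:]]*false/enabled: true/" "$file" > "$tmp" &&
    cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return "$status"
}
```

For example, run `enable_component cluster-configuration.yaml alerting` before the two `kubectl apply` commands. Only the `enabled:` line directly under `alerting:` changes; other components keep their values.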

-## Enable Alerting after Installation

+## Enable Alerting After Installation

-1. Log in to the console as `admin`. Click **Platform** in the top-left corner and select **Cluster Management**.

+1. Log in to the console as `admin`. Click **Platform** in the upper-left corner and select **Cluster Management**.

2. Click **CRDs** and enter `clusterconfiguration` in the search bar. Click the result to view its detail page.

@@ -72,9 +72,9 @@ As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introdu

A Custom Resource Definition (CRD) allows users to create a new type of resource without adding another API server. They can use these resources like any other native Kubernetes objects.

{{</ notice >}}

-3. In **Resource List**, click the icon on the right and then select **Edit YAML** to edit `ks-installer`.
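The console steps edit the `ks-installer` ClusterConfiguration. A possible command-line alternative is a JSON merge patch with `kubectl patch`. This sketch assumes the switch lives at `spec.alerting.enabled`, as in `cluster-configuration.yaml`; `enable_patch` is a hypothetical helper, and the `kubectl` call runs only when `APPLY=1` is set, so the snippet can be sourced safely on a machine without cluster access.

```bash
# Hypothetical helper: build a JSON merge patch that sets
# spec.<component>.enabled to true.
enable_patch() {
  printf '{"spec":{"%s":{"enabled":true}}}' "$1"
}

# Apply the patch to the ks-installer ClusterConfiguration only on request.
if [ "${APPLY:-0}" = 1 ]; then
  kubectl -n kubesphere-system patch clusterconfiguration ks-installer \
    --type merge -p "$(enable_patch alerting)"
fi
```

A merge patch is used because custom resources do not support strategic merge patches; it changes only the `enabled` field and leaves the rest of `ks-installer` untouched.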

-

-

5. In the YAML file of `ks-installer`, change the value of `jwtSecret` to the corresponding value shown above and set the value of `clusterRole` to `member`.

```yaml

@@ -109,20 +105,12 @@ You need to deploy KubeSphere on your GKE cluster first. For more information ab

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InNjOFpIb3RrY3U3bGNRSV9NWV8tSlJzUHJ4Y2xnMDZpY3hhc1BoVy0xTGsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlc3BoZXJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlc3BoZXJlLXRva2VuLXpocmJ3Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6Imt1YmVzcGhlcmUiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIyMGFmZGI1Ny01MTBkLTRjZDgtYTAwYS1hNDQzYTViNGM0M2MiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXNwaGVyZS1zeXN0ZW06a3ViZXNwaGVyZSJ9.ic6LaS5rEQ4tXt_lwp7U_C8rioweP-ZdDjlIZq91GOw9d6s5htqSMQfTeVlwTl2Bv04w3M3_pCkvRzMD0lHg3mkhhhP_4VU0LIo4XeYWKvWRoPR2kymLyskAB2Khg29qIPh5ipsOmGL9VOzD52O2eLtt_c6tn-vUDmI_Zw985zH3DHwUYhppGM8uNovHawr8nwZoem27XtxqyBkqXGDD38WANizyvnPBI845YqfYPY5PINPYc9bQBFfgCovqMZajwwhcvPqS6IpG1Qv8TX2lpuJIK0LLjiKaHoATGvHLHdAZxe_zgAC2cT_9Ars3HIN4vzaSX0f-xP--AcRgKVSY9g

```

-### Step 4: Import the GKE Member Cluster

+### Step 4: Import the GKE member cluster

-1. Log in to the KubeSphere console on your Host Cluster as `admin`. Click **Platform** in the upper-left corner and then select **Cluster Management**. On the **Cluster Management** page, click **Add Cluster**.

-

-

+1. Log in to the KubeSphere console on your host cluster as `admin`. Click **Platform** in the upper-left corner and then select **Cluster Management**. On the **Cluster Management** page, click **Add Cluster**.

2. Enter the basic information based on your needs and click **Next**.

-

+3. In **Connection Method**, select **Direct connection**. Fill in the new kubeconfig file of the GKE member cluster and then click **Create**.

-3. In **Connection Method**, select **Direct Connection**. Fill in the new kubeconfig file of the GKE Member Cluster and then click **Create**.

-

-

-

-4. Wait for cluster initialization to finish.

-

-

\ No newline at end of file

+4. Wait for cluster initialization to finish.

\ No newline at end of file

diff --git a/content/en/docs/multicluster-management/import-on-prem-k8s/_index.md b/content/en/docs/multicluster-management/import-on-prem-k8s/_index.md

deleted file mode 100644

index 8d0aeb228..000000000

--- a/content/en/docs/multicluster-management/import-on-prem-k8s/_index.md

+++ /dev/null

@@ -1,7 +0,0 @@

----

-linkTitle: "Import On-premises Kubernetes Clusters"

-weight: 5400

-

-_build:

- render: false

----

diff --git a/content/en/docs/multicluster-management/import-on-prem-k8s/import-kubeadm-k8s.md b/content/en/docs/multicluster-management/import-on-prem-k8s/import-kubeadm-k8s.md

deleted file mode 100644

index 9370f4355..000000000

--- a/content/en/docs/multicluster-management/import-on-prem-k8s/import-kubeadm-k8s.md

+++ /dev/null

@@ -1,10 +0,0 @@

----

-title: "Import Kubeadm Kubernetes Cluster"

-keywords: 'kubernetes, kubesphere, multicluster, kubeadm'

-description: 'Learn how to import a Kubernetes cluster created with kubeadm.'

-

-

-weight: 5410

----

-

-TBD

diff --git a/content/en/docs/multicluster-management/introduction/kubefed-in-kubesphere.md b/content/en/docs/multicluster-management/introduction/kubefed-in-kubesphere.md

index ffad56b3c..f4dcc4d82 100644

--- a/content/en/docs/multicluster-management/introduction/kubefed-in-kubesphere.md

+++ b/content/en/docs/multicluster-management/introduction/kubefed-in-kubesphere.md

@@ -1,7 +1,7 @@

---

title: "KubeSphere Federation"

keywords: 'Kubernetes, KubeSphere, federation, multicluster, hybrid-cloud'

-description: 'Understand the fundamental concept of Kubernetes federation in KubeSphere, including M clusters and H clusters.'

+description: 'Understand the fundamental concept of Kubernetes federation in KubeSphere, including member clusters and host clusters.'

linkTitle: "KubeSphere Federation"

weight: 5120

---

@@ -10,11 +10,11 @@ The multi-cluster feature relates to the network connection among multiple clust

## How the Multi-cluster Architecture Works

-Before you use the central control plane of KubeSphere to management multiple clusters, you need to create a Host Cluster, also known as **H** Cluster. The H Cluster, essentially, is a KubeSphere cluster with the multi-cluster feature enabled. It provides you with the control plane for unified management of Member Clusters, also known as **M** Cluster. M Clusters are common KubeSphere clusters without the central control plane. Namely, tenants with necessary permissions (usually cluster administrators) can access the control plane from the H Cluster to manage all M Clusters, such as viewing and editing resources on M Clusters. Conversely, if you access the web console of any M Cluster separately, you cannot see any resources on other clusters.

+Before you use the central control plane of KubeSphere to management multiple clusters, you need to create a host cluster, also known as **host** cluster. The host cluster, essentially, is a KubeSphere cluster with the multi-cluster feature enabled. It provides you with the control plane for unified management of member clusters, also known as **member** cluster. Member clusters are common KubeSphere clusters without the central control plane. Namely, tenants with necessary permissions (usually cluster administrators) can access the control plane from the host cluster to manage all member clusters, such as viewing and editing resources on member clusters. Conversely, if you access the web console of any member cluster separately, you cannot see any resources on other clusters.

-

+There can only be one host cluster while multiple member clusters can exist at the same time. In a multi-cluster architecture, the network between the host cluster and member clusters can be [connected directly](../../enable-multicluster/direct-connection/) or [through an agent](../../enable-multicluster/agent-connection/). The network between member clusters can be set in a completely isolated environment.

-There can only be one H Cluster while multiple M Clusters can exist at the same time. In a multi-cluster architecture, the network between the H Cluster and M Clusters can be connected directly or through an agent. The network between M Clusters can be set in a completely isolated environment.

+If you are using on-premises Kubernetes clusters built through kubeadm, install KubeSphere on your Kubernetes clusters by referring to [Air-gapped Installation on Kubernetes](../../../installing-on-kubernetes/on-prem-kubernetes/install-ks-on-linux-airgapped/), and then enable KubeSphere multi-cluster management through direct connection or agent connection.

@@ -38,12 +38,12 @@ Before you enable multi-cluster management, make sure you have enough resources

{{< notice note >}}

- The request and limit of CPU and memory resources all refer to single replica.

-- After the multi-cluster feature is enabled, tower and controller-manager will be installed on the H Cluster. If you use [agent connection](../../../multicluster-management/enable-multicluster/agent-connection/), only tower is needed for M Clusters. If you use [direct connection](../../../multicluster-management/enable-multicluster/direct-connection/), no additional component is needed for M Clusters.

+- After the multi-cluster feature is enabled, tower and controller-manager will be installed on the host cluster. If you use [agent connection](../../../multicluster-management/enable-multicluster/agent-connection/), only tower is needed for member clusters. If you use [direct connection](../../../multicluster-management/enable-multicluster/direct-connection/), no additional component is needed for member clusters.

{{</ notice >}}

## Use the App Store in a Multi-cluster Architecture

-Different from other components in KubeSphere, the [KubeSphere App Store](../../../pluggable-components/app-store/) serves as a global application pool for all clusters, including H Cluster and M Clusters. You only need to enable the App Store on the H Cluster and you can use functions related to the App Store on M Clusters directly (no matter whether the App Store is enabled on M Clusters or not), such as [app templates](../../../project-user-guide/application/app-template/) and [app repositories](../../../workspace-administration/app-repository/import-helm-repository/).

+Unlike other components in KubeSphere, the [KubeSphere App Store](../../../pluggable-components/app-store/) serves as a global application pool for all clusters, including the host cluster and member clusters. You only need to enable the App Store on the host cluster. You can then use App Store functions such as [app templates](../../../project-user-guide/application/app-template/) and [app repositories](../../../workspace-administration/app-repository/import-helm-repository/) on member clusters directly, regardless of whether the App Store is enabled on the member clusters.

-However, if you only enable the App Store on M Clusters without enabling it on the H Cluster, you will not be able to use the App Store on any cluster in the multi-cluster architecture.

\ No newline at end of file

+However, if you only enable the App Store on member clusters without enabling it on the host cluster, you will not be able to use the App Store on any cluster in the multi-cluster architecture.

\ No newline at end of file
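To check this rule from the command line, the minimal sketch below reads two fields of the `ks-installer` ClusterConfiguration on the cluster that your current kubeconfig context points to: `spec.multicluster.clusterRole` and `spec.openpitrix.store.enabled`. It assumes the KubeSphere v3.2.x field layout and the `kubesphere-system` namespace. The function name `ks_app_store_status` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the multi-cluster role and App Store flag of the
# cluster that the current kubeconfig context points to.
# Assumes KubeSphere v3.2.x, where the App Store switch is spec.openpitrix.store.enabled.
ks_app_store_status() {
  local role store
  role=$(kubectl -n kubesphere-system get clusterconfiguration ks-installer \
    -o jsonpath='{.spec.multicluster.clusterRole}') || return 1
  store=$(kubectl -n kubesphere-system get clusterconfiguration ks-installer \
    -o jsonpath='{.spec.openpitrix.store.enabled}') || return 1
  echo "clusterRole=${role:-<unset>} appStore=${store:-false}"
  # The App Store serves all clusters only if it is enabled on the host cluster.
  if [ "$role" = "host" ] && [ "$store" = "true" ]; then
    echo "App Store available to all clusters"
  elif [ "$role" = "host" ]; then
    echo "App Store disabled on the host cluster"
  else
    echo "Not a host cluster; run this against the host cluster"
  fi
}
```

Run it with the host cluster's kubeconfig. Running it on a member cluster only tells you that you are looking at the wrong cluster.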

diff --git a/content/en/docs/multicluster-management/unbind-cluster.md b/content/en/docs/multicluster-management/unbind-cluster.md

index b3326402a..9f5dae030 100644

--- a/content/en/docs/multicluster-management/unbind-cluster.md

+++ b/content/en/docs/multicluster-management/unbind-cluster.md

@@ -11,20 +11,16 @@ This tutorial demonstrates how to unbind a cluster from the central control plan

## Prerequisites

- You have enabled multi-cluster management.

-- You need an account granted a role including the authorization of **Cluster Management**. For example, you can log in to the console as `admin` directly or create a new role with the authorization and assign it to an account.

+- You need a user that has been granted a role with the **Cluster Management** authorization. For example, you can log in to the console as `admin` directly, or create a new role with the authorization and assign it to a user.

## Unbind a Cluster

-1. Click **Platform** in the top-left corner and select **Cluster Management**.

+1. Click **Platform** in the upper-left corner and select **Cluster Management**.

-2. On the **Cluster Management** page, click the cluster that you want to remove from the central control plane.

-

-

+2. On the **Cluster Management** page, click the cluster that you want to remove from the control plane.

3. Go to **Basic Information** under **Cluster Settings**, check **I confirm I want to unbind the cluster** and click **Unbind**.

-

-
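After you unbind a cluster, you can confirm from the host cluster that it is no longer registered. The minimal sketch below assumes that the KubeSphere `Cluster` custom resource (`clusters.cluster.kubesphere.io`) exists on the host cluster and that your kubeconfig points there. The function name `ks_cluster_registered` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if a cluster with the given name is still
# registered on the host cluster, 1 if it is not, and 2 if kubectl fails.
# Assumes the KubeSphere Cluster CRD (clusters.cluster.kubesphere.io).
ks_cluster_registered() {
  local name="$1" names
  names=$(kubectl get clusters.cluster.kubesphere.io \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}') || return 2
  # -x: whole-line match, -F: treat the name as a fixed string
  printf '%s\n' "$names" | grep -qxF -- "$name"
}
```

For example, `ks_cluster_registered my-member || echo "unbound"` prints `unbound` once the cluster is gone. Here `my-member` is a placeholder name.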

{{< notice note >}}

After you unbind the cluster, you cannot manage it from the control plane while Kubernetes resources on the cluster will not be deleted.

diff --git a/content/en/docs/pluggable-components/alerting.md b/content/en/docs/pluggable-components/alerting.md

index 0cce528fd..851e365b9 100644

--- a/content/en/docs/pluggable-components/alerting.md

+++ b/content/en/docs/pluggable-components/alerting.md

@@ -6,9 +6,9 @@ linkTitle: "KubeSphere Alerting"

weight: 6600

---

-Alerting is an important building block of observability, closely related to monitoring and logging. The alerting system in KubeSphere, coupled with the proactive failure notification system, allows users to know activities of interest based on alerting policies. When a predefined threshold of a certain metric is reached, an alert will be sent to preconfigured recipients. Therefore, you need to configure the notification method beforehand, including Email, Slack, DingTalk, WeCom and Webhook. With a highly functional alerting and notification system in place, you can quickly identify and resolve potential issues in advance before they affect your business.

+Alerting is an important building block of observability, closely related to monitoring and logging. The alerting system in KubeSphere, coupled with the proactive failure notification system, notifies users of activities of interest based on alerting policies. When a metric reaches a predefined threshold, an alert is sent to preconfigured recipients. Therefore, you need to configure the notification method beforehand, such as Email, Slack, DingTalk, WeCom, or Webhook. With a highly functional alerting and notification system in place, you can quickly identify and resolve potential issues before they affect your business.

-## Enable Alerting before Installation

+## Enable Alerting Before Installation

### Installing on Linux

@@ -39,9 +39,9 @@ If you adopt [All-in-One Installation](../../quick-start/all-in-one-on-linux/),

### Installing on Kubernetes

-As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable Alerting first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) file.

+As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable Alerting first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) file.

-1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) and edit it.

+1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) and edit it.

```bash

vi cluster-configuration.yaml

@@ -57,14 +57,14 @@ As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introdu

3. Execute the following commands to start installation:

```bash

- kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

+ kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

```
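After you apply the two manifests, the installer runs in a pod in the `kubesphere-system` namespace, and you can follow its logs to watch the installation. The minimal sketch below wraps that log-following step. It assumes the installer pod carries the label `app=ks-install` or `app=ks-installer`. The function name `ks_installer_logs` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: stream the ks-installer logs so you can watch the
# component installation. Assumes the installer pod carries the label
# app=ks-install or app=ks-installer in the kubesphere-system namespace.
ks_installer_logs() {
  local pod
  pod=$(kubectl get pod -n kubesphere-system \
    -l 'app in (ks-install, ks-installer)' \
    -o jsonpath='{.items[0].metadata.name}') || return 1
  if [ -z "$pod" ]; then
    echo "ks-installer pod not found" >&2
    return 1
  fi
  kubectl logs -n kubesphere-system "$pod" -f
}
```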

-## Enable Alerting after Installation

+## Enable Alerting After Installation

-1. Log in to the console as `admin`. Click **Platform** in the top-left corner and select **Cluster Management**.

+1. Log in to the console as `admin`. Click **Platform** in the upper-left corner and select **Cluster Management**.

2. Click **CRDs** and enter `clusterconfiguration` in the search bar. Click the result to view its detail page.

@@ -72,9 +72,9 @@ As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introdu

A Custom Resource Definition (CRD) allows users to create a new type of resources without adding another API server. They can use these resources like any other native Kubernetes objects.

{{}}

-3. In **Resource List**, click  on the right of `ks-installer` and select **Edit YAML**.

+3. In **Custom Resources**, click  on the right of `ks-installer` and select **Edit YAML**.

-4. In this YAML file, navigate to `alerting` and change `false` to `true` for `enabled`. After you finish, click **Update** in the bottom-right corner to save the configuration.

+4. In this YAML file, navigate to `alerting` and change `false` to `true` for `enabled`. After you finish, click **OK** in the lower-right corner to save the configuration.

```yaml

alerting:

@@ -89,14 +89,12 @@ A Custom Resource Definition (CRD) allows users to create a new type of resource

{{< notice note >}}

-You can find the web kubectl tool by clicking  in the bottom-right corner of the console.

+You can find the web kubectl tool by clicking  in the lower-right corner of the console.

{{</ notice >}}
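Steps 3 and 4 can also be done without the console. The minimal sketch below assumes that the `ks-installer` ClusterConfiguration lives in the `kubesphere-system` namespace. It uses a JSON merge patch (`--type merge`) because custom resources do not support strategic merge patches. The helpers `ks_enable_patch` and `ks_enable_component` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a JSON merge patch that sets
# spec.<path>.enabled=true, where <path> is a dot-separated key path
# such as "alerting" or "openpitrix.store".
ks_enable_patch() {
  local path="$1" open="" close="" key
  local IFS='.'   # split $path on dots in the loop below
  for key in spec $path; do
    open+="{\"${key}\":"
    close+="}"
  done
  printf '%s{"enabled":true}%s\n' "$open" "$close"
}

# Hypothetical helper: apply the patch to the ks-installer ClusterConfiguration.
# Custom resources do not support strategic merge patches, hence --type merge.
ks_enable_component() {
  kubectl -n kubesphere-system patch clusterconfiguration ks-installer \
    --type merge -p "$(ks_enable_patch "$1")"
}
```

For example, `ks_enable_component alerting` has the same effect as setting `enabled` to `true` under `alerting` in the YAML editor.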

## Verify the Installation of the Component

If you can see **Alerting Messages** and **Alerting Policies** on the **Cluster Management** page, it means the installation is successful as the two parts won't display until the component is installed.

-

-

diff --git a/content/en/docs/pluggable-components/app-store.md b/content/en/docs/pluggable-components/app-store.md

index 279506b9d..1f8eb9601 100644

--- a/content/en/docs/pluggable-components/app-store.md

+++ b/content/en/docs/pluggable-components/app-store.md

@@ -6,15 +6,13 @@ linkTitle: "KubeSphere App Store"

weight: 6200

---

-As an open-source and app-centric container platform, KubeSphere provides users with a Helm-based App Store for application lifecycle management on the back of [OpenPitrix](https://github.com/openpitrix/openpitrix), an open-source web-based system to package, deploy and manage different types of apps. The KubeSphere App Store allows ISVs, developers and users to upload, test, deploy and release apps with just several clicks in a one-stop shop.

+As an open-source and app-centric container platform, KubeSphere provides users with a Helm-based App Store for application lifecycle management, built on [OpenPitrix](https://github.com/openpitrix/openpitrix), an open-source web-based system to package, deploy, and manage different types of apps. The KubeSphere App Store allows ISVs, developers, and users to upload, test, install, and release apps with just a few clicks in a one-stop shop.

-Internally, the KubeSphere App Store can serve as a place for different teams to share data, middleware, and office applications. Externally, it is conducive to setting industry standards of building and delivery. By default, there are 17 built-in apps in the App Store. After you enable this feature, you can add more apps with app templates.

-

-

+Internally, the KubeSphere App Store can serve as a place for different teams to share data, middleware, and office applications. Externally, it helps establish industry standards for building and delivering applications. After you enable this feature, you can add more apps with app templates.

For more information, see [App Store](../../application-store/).

-## Enable the App Store before Installation

+## Enable the App Store Before Installation

### Installing on Linux

@@ -46,9 +44,9 @@ If you adopt [All-in-One Installation](../../quick-start/all-in-one-on-linux/),

### Installing on Kubernetes

-As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable the KubeSphere App Store first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) file.

+As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable the KubeSphere App Store first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) file.

-1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) and edit it.

+1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) and edit it.

```bash

vi cluster-configuration.yaml

@@ -65,14 +63,14 @@ As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introdu

3. Execute the following commands to start installation:

```bash

- kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

+ kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

```
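If you prefer not to edit the file interactively in `vi` (step 1), a `sed` address range can flip the first `enabled: false` that follows a top-level component key. This minimal sketch assumes the v3.2.1 layout of `cluster-configuration.yaml`, where components are indented two spaces under `spec:` and the App Store switch is nested as `openpitrix.store.enabled`. The function name `ks_enable_in_file` is hypothetical. Review its output before you apply it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print cluster-configuration.yaml with the first
# "enabled: false" after the given top-level component key switched to true.
# Assumes components are indented two spaces under "spec:" (v3.2.1 layout).
ks_enable_in_file() {
  local component="$1" file="$2"
  # The range starts at "  <component>:" and ends at the first following
  # line containing "enabled:"; the substitution only runs inside it.
  sed "/^  ${component}:/,/enabled:/ s/enabled: false/enabled: true/" "$file"
}
```

For example, `ks_enable_in_file openpitrix cluster-configuration.yaml > cluster-configuration.new.yaml` writes an edited copy. You can then compare it with the original and apply the new file in step 3.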

-## Enable the App Store after Installation

+## Enable the App Store After Installation

-1. Log in to the console as `admin`. Click **Platform** in the top-left corner and select **Cluster Management**.

+1. Log in to the console as `admin`. Click **Platform** in the upper-left corner and select **Cluster Management**.

2. Click **CRDs** and enter `clusterconfiguration` in the search bar. Click the result to view its detail page.

@@ -82,9 +80,9 @@ A Custom Resource Definition (CRD) allows users to create a new type of resource

{{</ notice >}}

-3. In **Resource List**, click  on the right of `ks-installer` and select **Edit YAML**.

+3. In **Custom Resources**, click  on the right of `ks-installer` and select **Edit YAML**.

-4. In this YAML file, navigate to `openpitrix` and change `false` to `true` for `enabled`. After you finish, click **Update** in the bottom-right corner to save the configuration.

+4. In this YAML file, navigate to `openpitrix` and change `false` to `true` for `enabled`. After you finish, click **OK** in the lower-right corner to save the configuration.

```yaml

openpitrix:

@@ -100,25 +98,23 @@ A Custom Resource Definition (CRD) allows users to create a new type of resource

{{< notice note >}}

-You can find the web kubectl tool by clicking  in the bottom-right corner of the console.

+You can find the web kubectl tool by clicking  in the lower-right corner of the console.

{{</ notice >}}

## Verify the Installation of the Component

-After you log in to the console, if you can see **App Store** in the top-left corner and 17 built-in apps in it, it means the installation is successful.

-

-

+After you log in to the console, if you can see **App Store** in the upper-left corner and apps in it, the installation is successful.

{{< notice note >}}

-- You can even access the App Store without logging in to the console by visiting ` on the right of `ks-installer` and select **Edit YAML**.

+3. In **Custom Resources**, click  on the right of `ks-installer` and select **Edit YAML**.

-4. In this YAML file, navigate to `auditing` and change `false` to `true` for `enabled`. After you finish, click **Update** in the bottom-right corner to save the configuration.

+4. In this YAML file, navigate to `auditing` and change `false` to `true` for `enabled`. After you finish, click **OK** in the lower-right corner to save the configuration.

```yaml

auditing:

@@ -116,7 +116,7 @@ A Custom Resource Definition (CRD) allows users to create a new type of resource

```

{{< notice note >}}

-By default, Elasticsearch will be installed internally if Auditing is enabled. For a production environment, it is highly recommended that you set the following values in this yaml file if you want to enable Auditing, especially `externalElasticsearchUrl` and `externalElasticsearchPort`. Once you provide the following information, KubeSphere will integrate your external Elasticsearch directly instead of installing an internal one.

+By default, Elasticsearch will be installed internally if Auditing is enabled. For a production environment, it is highly recommended that you set the following values in this YAML file if you want to enable Auditing, especially `externalElasticsearchHost` and `externalElasticsearchPort`. Once you provide the following information, KubeSphere will integrate your external Elasticsearch directly instead of installing an internal one.

{{</ notice >}}

```yaml

@@ -127,7 +127,7 @@ By default, Elasticsearch will be installed internally if Auditing is enabled. F

elasticsearchDataVolumeSize: 20Gi # The volume size of Elasticsearch data nodes.

logMaxAge: 7 # Log retention day in built-in Elasticsearch. It is 7 days by default.

elkPrefix: logstash # The string making up index names. The index name will be formatted as ks- in the bottom-right corner of the console.

+You can find the web kubectl tool by clicking  in the lower-right corner of the console.

{{</ notice >}}

## Verify the Installation of the Component

@@ -148,9 +148,7 @@ You can find the web kubectl tool by clicking  }}

-Verify that you can use the **Auditing Operating** function from the **Toolbox** in the bottom-right corner.

-

-

+Verify that you can use the **Audit Log Search** function from the **Toolbox** in the lower-right corner.

{{}}

diff --git a/content/en/docs/pluggable-components/devops.md b/content/en/docs/pluggable-components/devops.md

index fd06550b4..f8fcc858c 100644

--- a/content/en/docs/pluggable-components/devops.md

+++ b/content/en/docs/pluggable-components/devops.md

@@ -12,7 +12,7 @@ The DevOps System offers an enabling environment for users as apps can be automa

For more information, see [DevOps User Guide](../../devops-user-guide/).

-## Enable DevOps before Installation

+## Enable DevOps Before Installation

### Installing on Linux

@@ -43,9 +43,9 @@ If you adopt [All-in-One Installation](../../quick-start/all-in-one-on-linux/),

### Installing on Kubernetes

-As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable KubeSphere DevOps first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) file.

+As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable KubeSphere DevOps first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) file.

-1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) and edit it.

+1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) and edit it.

```bash

vi cluster-configuration.yaml

@@ -61,14 +61,14 @@ As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introdu

3. Execute the following commands to start installation:

```bash

- kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

+ kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

```

-## Enable DevOps after Installation

+## Enable DevOps After Installation

-1. Log in to the console as `admin`. Click **Platform** in the top-left corner and select **Cluster Management**.

+1. Log in to the console as `admin`. Click **Platform** in the upper-left corner and select **Cluster Management**.

2. Click **CRDs** and enter `clusterconfiguration` in the search bar. Click the result to view its detail page.

@@ -78,9 +78,9 @@ A Custom Resource Definition (CRD) allows users to create a new type of resource

{{</ notice >}}

-3. In **Resource List**, click

}}

-Verify that you can use the **Auditing Operating** function from the **Toolbox** in the bottom-right corner.

-

-

+Verify that you can use the **Audit Log Search** function from the **Toolbox** in the lower-right corner.

{{}}

diff --git a/content/en/docs/pluggable-components/devops.md b/content/en/docs/pluggable-components/devops.md

index fd06550b4..f8fcc858c 100644

--- a/content/en/docs/pluggable-components/devops.md

+++ b/content/en/docs/pluggable-components/devops.md

@@ -12,7 +12,7 @@ The DevOps System offers an enabling environment for users as apps can be automa

For more information, see [DevOps User Guide](../../devops-user-guide/).

-## Enable DevOps before Installation

+## Enable DevOps Before Installation

### Installing on Linux

@@ -43,9 +43,9 @@ If you adopt [All-in-One Installation](../../quick-start/all-in-one-on-linux/),

### Installing on Kubernetes

-As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable KubeSphere DevOps first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) file.

+As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introduction/overview/), you can enable KubeSphere DevOps first in the [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) file.

-1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml) and edit it.

+1. Download the file [cluster-configuration.yaml](https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml) and edit it.

```bash

vi cluster-configuration.yaml

@@ -61,14 +61,14 @@ As you [install KubeSphere on Kubernetes](../../installing-on-kubernetes/introdu

3. Execute the following commands to start installation:

```bash

- kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yaml

+ kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

```

-## Enable DevOps after Installation

+## Enable DevOps After Installation

-1. Log in to the console as `admin`. Click **Platform** in the top-left corner and select **Cluster Management**.

+1. Log in to the console as `admin`. Click **Platform** in the upper-left corner and select **Cluster Management**.

2. Click **CRDs** and enter `clusterconfiguration` in the search bar. Click the result to view its detail page.

@@ -78,9 +78,9 @@ A Custom Resource Definition (CRD) allows users to create a new type of resource

{{</ notice >}}

-3. In **Resource List**, click  on the right of `ks-installer` and select **Edit YAML**.

+3. In **Custom Resources**, click  on the right of `ks-installer` and select **Edit YAML**.

-4. In this YAML file, navigate to `devops` and change `false` to `true` for `enabled`. After you finish, click **Update** in the bottom-right corner to save the configuration.

+4. In this YAML file, navigate to `devops` and change `false` to `true` for `enabled`. After you finish, click **OK** in the lower-right corner to save the configuration.

```yaml

devops:

@@ -95,7 +95,7 @@ A Custom Resource Definition (CRD) allows users to create a new type of resource

{{< notice note >}}

-You can find the web kubectl tool by clicking  in the bottom-right corner of the console.

+You can find the web kubectl tool by clicking  in the lower-right corner of the console.

{{</ notice >}}

@@ -105,9 +105,7 @@ You can find the web kubectl tool by clicking  }}

-Go to **Components** and check the status of **DevOps**. You may see an image as follows:

-

-

+Go to **System Components** and check that all components on the **DevOps** tab page are in the **Healthy** state.

{{</ tab >}}

@@ -123,7 +121,7 @@ The output may look as follows if the component runs successfully:

```bash

NAME READY STATUS RESTARTS AGE

-ks-jenkins-5cbbfbb975-hjnll 1/1 Running 0 40m

+devops-jenkins-5cbbfbb975-hjnll 1/1 Running 0 40m

s2ioperator-0 1/1 Running 0 41m

```

diff --git a/content/en/docs/pluggable-components/events.md b/content/en/docs/pluggable-components/events.md

index edff6744d..ce7927b25 100644

--- a/content/en/docs/pluggable-components/events.md

+++ b/content/en/docs/pluggable-components/events.md

@@ -6,11 +6,11 @@ linkTitle: "KubeSphere Events"

weight: 6500

---

-KubeSphere events allow users to keep track of what is happening inside a cluster, such as node scheduling status and image pulling result. They will be accurately recorded with the specific reason, status and message displayed in the web console. To query events, users can quickly launch the web Toolkit and enter related information in the search bar with different filters (e.g keyword and project) available. Events can also be archived to third-party tools, such as Elasticsearch, Kafka or Fluentd.

+KubeSphere events allow users to keep track of what is happening inside a cluster, such as node scheduling status and image pulling results. They are accurately recorded, with the specific reason, status, and message displayed in the web console. To query events, users can quickly launch the web Toolkit and enter related information in the search bar, with different filters (for example, keyword and project) available. Events can also be archived to third-party tools, such as Elasticsearch, Kafka, or Fluentd.

For more information, see [Event Query](../../toolbox/events-query/).

-## Enable Events before Installation

+## Enable Events Before Installation

### Installing on Linux

@@ -36,7 +36,7 @@ If you adopt [All-in-One Installation](../../quick-start/all-in-one-on-linux/),

```

{{< notice note >}}

-By default, KubeKey will install Elasticsearch internally if Events is enabled. For a production environment, it is highly recommended that you set the following values in `config-sample.yaml` if you want to enable Events, especially `externalElasticsearchUrl` and `externalElasticsearchPort`. Once you provide the following information before installation, KubeKey will integrate your external Elasticsearch directly instead of installing an internal one.

+By default, KubeKey will install Elasticsearch internally if Events is enabled. For a production environment, it is highly recommended that you set the following values in `config-sample.yaml` if you want to enable Events, especially `externalElasticsearchHost` and `externalElasticsearchPort`. Once you provide the following information before installation, KubeKey will integrate your external Elasticsearch directly instead of installing an internal one.

{{</ notice >}}

```yaml

@@ -47,7 +47,7 @@ By default, KubeKey will install Elasticsearch internally if Events is enabled.

elasticsearchDataVolumeSize: 20Gi # The volume size of Elasticsearch data nodes.

logMaxAge: 7 # Log retention day in built-in Elasticsearch. It is 7 days by default.

elkPrefix: logstash # The string making up index names. The index name will be formatted as ks- on the right of `ks-installer` and select **Edit YAML**.

+3. In **Custom Resources**, click  on the right of `ks-installer` and select **Edit YAML**.

-4. In this YAML file, navigate to `events` and change `false` to `true` for `enabled`. After you finish, click **Update** in the bottom-right corner to save the configuration.

+4. In this YAML file, navigate to `events` and change `false` to `true` for `enabled`. After you finish, click **OK** in the lower-right corner to save the configuration.

```yaml

events:

@@ -121,7 +121,7 @@ A Custom Resource Definition (CRD) allows users to create a new type of resource

{{< notice note >}}

-By default, Elasticsearch will be installed internally if Events is enabled. For a production environment, it is highly recommended that you set the following values in this yaml file if you want to enable Events, especially `externalElasticsearchUrl` and `externalElasticsearchPort`. Once you provide the following information, KubeSphere will integrate your external Elasticsearch directly instead of installing an internal one.

+By default, Elasticsearch will be installed internally if Events is enabled. For a production environment, it is highly recommended that you set the following values in this YAML file if you want to enable Events, especially `externalElasticsearchHost` and `externalElasticsearchPort`. Once you provide the following information, KubeSphere will integrate your external Elasticsearch directly instead of installing an internal one.

{{</ notice >}}

```yaml

@@ -132,7 +132,7 @@ By default, Elasticsearch will be installed internally if Events is enabled. For

elasticsearchDataVolumeSize: 20Gi # The volume size of Elasticsearch data nodes.

logMaxAge: 7 # Log retention day in built-in Elasticsearch. It is 7 days by default.

elkPrefix: logstash # The string making up index names. The index name will be formatted as ks-
