mirror of

https://github.com/kubesphere/website.git

synced 2025-12-30 17:52:56 +00:00

fix conflicts

Signed-off-by: FeynmanZhou <pengfeizhou@yunify.com>


commit

e70a7e7cee

|

|

@ -44,7 +44,7 @@ Give a title first before you write a paragraph. It can be grouped into differen

|

|||

- When you submit your md files to GitHub, make sure you add related image files that appear in md files in the pull request as well. Please save your image files in static/images/docs. You can create a folder in the directory to save your images.

|

||||

|

||||

- If you want to add remarks (e.g. put a box on a UI button), use the color **green**. As some screenshot apps do not support picking a specific color code, any color **similar** to #09F709, #00FF00 or #09F738 is acceptable.

|

||||

- Make sure images in your guide match the content. For example, you mention that users need to log in KubeSphere using an account of a role; this means the account that displays in your image is expected to be the one you are talking about. It confuses your readers if the content you are describing is not consistent with the image used.

|

||||

- Make sure images in your guide match the content. For example, you mention that users need to log in to KubeSphere using an account of a role; this means the account that displays in your image is expected to be the one you are talking about. It confuses your readers if the content you are describing is not consistent with the image used.

|

||||

- Recommended: [Xnip](https://xnipapp.com/) for Mac and [Sniptool](https://www.reasyze.com/sniptool/) for Windows.

|

||||

|

||||

|

||||

|

|

@ -122,7 +122,7 @@ kubectl edit svc ks-console -o yaml -n kubesphere-system

|

|||

|

||||

| Do | Don't |

|

||||

| ------------------------------------------------------ | ---------------------------------------------------- |

|

||||

| Log in the console as `admin`. | Log in the console as admin. |

|

||||

| Log in to the console as `admin`. | Log in to the console as admin. |

|

||||

| The account will be assigned the role `users-manager`. | The account will be assigned the role users-manager. |

|

||||

|

||||

### Code Comments

|

||||

|

|

|

|||

|

|

@ -69,7 +69,7 @@ As I will upload individual Helm charts of TiDB later, I need to first download

|

|||

|

||||

Now that you have Helm charts ready, you can upload them to KubeSphere as app templates.

|

||||

|

||||

1. Log in the web console of KubeSphere. As I described in my last blog, you need to create a workspace before you create any resources in it. You can see [the official documentation of KubeSphere](https://kubesphere.io/docs/quick-start/create-workspace-and-project/) to learn how to create a workspace.

|

||||

1. Log in to the web console of KubeSphere. As I described in my last blog, you need to create a workspace before you create any resources in it. You can see [the official documentation of KubeSphere](https://kubesphere.io/docs/quick-start/create-workspace-and-project/) to learn how to create a workspace.

|

||||

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -26,7 +26,7 @@ As you can imagine, the very first thing to consider is to have a Kubernetes clu

|

|||

|

||||

Therefore, I select QingCloud Kubernetes Engine (QKE) to prepare the environment. In fact, you can also use instances on the platform directly and [deploy a highly-available Kubernetes cluster with KubeSphere installed](https://kubesphere.io/docs/installing-on-linux/public-cloud/kubesphere-on-qingcloud-instance/). Here is how I deploy the cluster and TiDB:

|

||||

|

||||

1. Log in the [web console of QingCloud](https://console.qingcloud.com/). Simply select **KubeSphere (QKE)** from the menu and create a Kubernetes cluster with KubeSphere installed. The platform allows you to install different components of KubeSphere. Here, we need to enable [OpenPitrix](https://github.com/openpitrix/openpitrix), which powers the app management feature in KubeSphere.

|

||||

1. Log in to the [web console of QingCloud](https://console.qingcloud.com/). Simply select **KubeSphere (QKE)** from the menu and create a Kubernetes cluster with KubeSphere installed. The platform allows you to install different components of KubeSphere. Here, we need to enable [OpenPitrix](https://github.com/openpitrix/openpitrix), which powers the app management feature in KubeSphere.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

|

|

@ -36,7 +36,7 @@ Therefore, I select QingCloud Kubernetes Engine (QKE) to prepare the environment

|

|||

|

||||

|

||||

|

||||

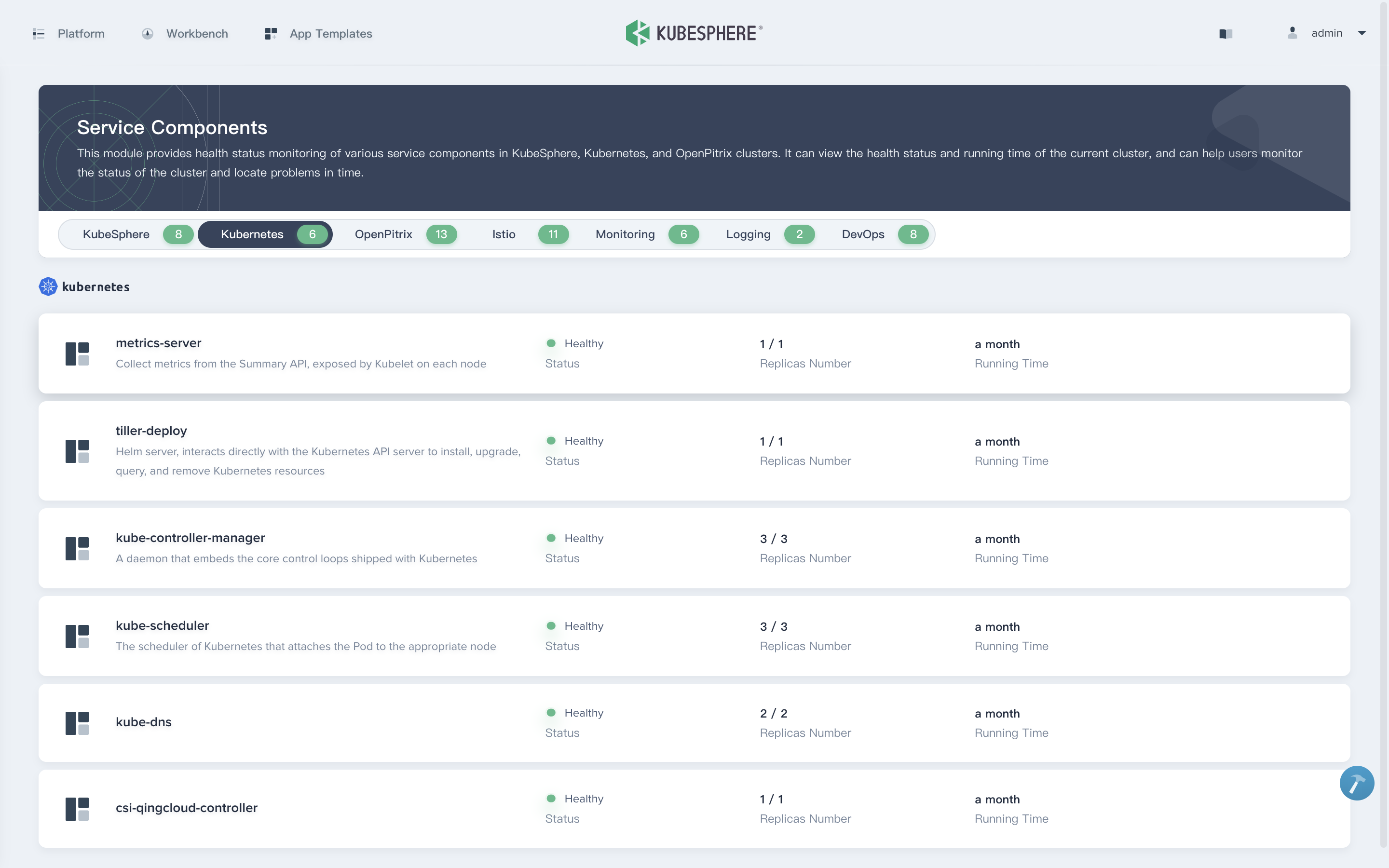

2. The cluster will be up and running in around 10 minutes. In this example, I select 3 working nodes to make sure I have enough resources for the deployment later. You can also customize configurations based on your needs. When the cluster is ready, log in the web console of KubeSphere with the default account and password (`admin/P@88w0rd`). Here is the cluster **Overview** page:

|

||||

2. The cluster will be up and running in around 10 minutes. In this example, I select 3 working nodes to make sure I have enough resources for the deployment later. You can also customize configurations based on your needs. When the cluster is ready, log in to the web console of KubeSphere with the default account and password (`admin/P@88w0rd`). Here is the cluster **Overview** page:

|

||||

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -74,4 +74,5 @@ Three grayscale strategies are provided by KubeSphere based on Istio: blue-green

|

|||

|

||||

## KubeSphere Installation

|

||||

|

||||

KubeSphere can be deployed and run on any infrastructure, including public clouds, private clouds, virtual machines, bare metals and Kubernetes. It can be installed either online or offline. Please refer to [KubeSphere Installation Guide](https://kubesphere.io/docs/installation/intro/) for installation.

|

||||

KubeSphere can be deployed and run on any infrastructure, including public clouds, private clouds, virtual machines, bare metals and Kubernetes. It can be installed either online or offline. For more information, refer to [Installing on Linux](https://kubesphere.io/docs/installing-on-linux/) and [Installing on Kubernetes](https://kubesphere.io/docs/installing-on-kubernetes/).

|

||||

|

||||

|

|

|

|||

|

|

@ -1,7 +1,7 @@

|

|||

---

|

||||

title: "Configure Authentication"

|

||||

keywords: "LDAP, identity provider"

|

||||

description: "How to configure identity provider"

|

||||

description: "How to configure authentication"

|

||||

|

||||

linkTitle: "Configure Authentication"

|

||||

weight: 12200

|

||||

|

|

@ -21,18 +21,19 @@ KubeSphere includes a built-in OAuth server. Users obtain OAuth access tokens to

|

|||

|

||||

As an administrator, you can configure OAuth by editing the ConfigMap to specify an identity provider.

|

||||

|

||||

## Identity Providers

|

||||

|

||||

KubeSphere has an internal account management system.

|

||||

|

||||

You can modify the kubesphere authentication configuration using your desired identity provider by the following command:

|

||||

|

||||

## Authentication Configuration

|

||||

|

||||

KubeSphere has an internal account management system. You can modify the KubeSphere authentication configuration by running the following command:

|

||||

|

||||

*Example Configuration*:

|

||||

|

||||

```bash

|

||||

kubectl -n kubesphere-system edit cm kubesphere-config

|

||||

```

|

||||

|

||||

*Example Configuration*:

|

||||

|

||||

```yaml

|

||||

apiVersion: v1

|

||||

data:

|

||||

|

|

@ -51,7 +52,19 @@ data:

|

|||

...

|

||||

```

|

||||

|

||||

You can define additional authentication configuration in the `identityProviders` section.

|

||||

For the above example:

|

||||

|

||||

| Parameter | Description |
|-----------|-------------|
| authenticateRateLimiterMaxTries | The maximum number of failed login attempts allowed within the period set by `authenticateRateLimiterDuration`. A user who reaches this number is blocked. |
| authenticateRateLimiterDuration | The period in which failed login attempts are counted. A user whose failed login attempts reach `authenticateRateLimiterMaxTries` within this period is blocked for about the same length of time. |
| loginHistoryRetentionPeriod | The retention period of login history. Records older than this period are deleted. |
| maximumClockSkew | Controls the maximum allowed clock skew when performing time-sensitive operations, such as validating the expiration time of a user token. The default value is `10 seconds`. |
| multipleLogin | Whether multiple users are allowed to log in from different locations at the same time. The default value is `true`. |
| jwtSecret | The secret used to sign user tokens. Multi-cluster environments [need to use the same secret](../../multicluster-management/enable-multicluster/direct-connection/#prepare-a-member-cluster). |
| accessTokenMaxAge | Controls the lifetime of access tokens. The default lifetime is 2 hours. Setting `accessTokenMaxAge` to 0 means the token does not expire. It is set to 0 when the cluster role is member. |
| accessTokenInactivityTimeout | The inactivity timeout for tokens. The value is the maximum amount of time allowed between consecutive uses of a token. A token becomes invalid if it is not used within this window, and the user needs to acquire a new token to regain access. |
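
The way `authenticateRateLimiterMaxTries` and `authenticateRateLimiterDuration` work together can be illustrated with a small shell sketch. It is not KubeSphere's implementation: the function name `is_blocked`, the sliding-window interpretation, and the use of Unix timestamps in seconds are assumptions made purely for illustration.

```bash
# Illustrative only; not KubeSphere's implementation.
# Usage: is_blocked MAX_TRIES WINDOW_SECONDS NOW FAILED_TIMESTAMP...
# Succeeds (exit 0) when at least MAX_TRIES failed logins fall inside
# the window of WINDOW_SECONDS that ends at NOW.
is_blocked() {
  max_tries=$1
  window=$2
  now=$3
  shift 3
  recent=0
  for ts in "$@"; do
    # Count only failures that happened inside the window.
    if [ "$ts" -le "$now" ] && [ $((now - ts)) -lt "$window" ]; then
      recent=$((recent + 1))
    fi
  done
  [ "$recent" -ge "$max_tries" ]
}
```

With the example values `10` and `10m0s`, a call would look like `is_blocked 10 600 "$(date +%s)"` followed by the timestamps of the recorded failed attempts.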

|

||||

|

||||

|

||||

After modifying the identity provider configuration, you need to restart the ks-apiserver.

|

||||

|

||||

|

|

@ -59,7 +72,11 @@ After modifying the identity provider configuration, you need to restart the ks-

|

|||

kubectl -n kubesphere-system rollout restart deploy/ks-apiserver

|

||||

```

|

||||

|

||||

## LDAP Authentication

|

||||

## Identity Providers

|

||||

|

||||

You can define additional authentication configuration in the `identityProviders` section.

|

||||

|

||||

### LDAP Authentication

|

||||

|

||||

Set LDAPIdentityProvider in the identityProviders section to validate username and password against an LDAPv3 server using simple bind authentication.

|

||||

|

||||

|

|

@ -70,7 +87,7 @@ There are four parameters common to all identity providers:

|

|||

| Parameter | Description |

|

||||

|-----------|-------------|

|

||||

| name | The name of the identity provider is associated with the user label. |

|

||||

| mappingMethod | Defines how new identities are mapped to users when they log in. |

|

||||

| mappingMethod | The account mapping configuration. You can use different mapping methods, such as:<br/>- `auto`: The default value. The user account will be automatically created and mapped if the login is successful. <br/>- `lookup`: Using this method requires you to manually provision accounts. |

|

||||

|

||||

*Example Configuration Using LDAPIdentityProvider*:

|

||||

|

||||

|

|

|

|||

|

|

@ -0,0 +1,89 @@

|

|||

---

|

||||

title: "OAuth2 Identity Providers"

|

||||

keywords: 'Kubernetes, KubeSphere, OAuth2, Identity Provider'

|

||||

description: 'OAuth2 Identity Provider'

|

||||

linkTitle: "OAuth2 Identity Providers"

|

||||

weight: 12200

|

||||

---

|

||||

|

||||

## Overview

|

||||

|

||||

You can integrate external OAuth2 providers with KubeSphere using the standard OAuth2 protocol. After an account is authenticated by an external OAuth2 server, it can be associated with KubeSphere.

|

||||

|

||||

|

||||

|

||||

## GitHubIdentityProvider

|

||||

|

||||

KubeSphere provides you with an example of configuring GitHubIdentityProvider for OAuth2 authentication.

|

||||

|

||||

### Parameter settings

|

||||

|

||||

To set IdentityProvider parameters, edit the ConfigMap `kubesphere-config` in the namespace `kubesphere-system`.

|

||||

|

||||

1. Execute the following command.

|

||||

|

||||

```bash

|

||||

kubectl -n kubesphere-system edit cm kubesphere-config

|

||||

```

|

||||

|

||||

2. This is an example configuration for your reference.

|

||||

|

||||

```yaml
apiVersion: v1
data:
  kubesphere.yaml: |
    authentication:
      authenticateRateLimiterMaxTries: 10
      authenticateRateLimiterDuration: 10m0s
      loginHistoryRetentionPeriod: 7d
      maximumClockSkew: 10s
      multipleLogin: true
      kubectlImage: kubesphere/kubectl:v1.0.0
      jwtSecret: "jwt secret"
      oauthOptions:
        accessTokenMaxAge: 1h
        accessTokenInactivityTimeout: 30m
        identityProviders:
        - name: github
          type: GitHubIdentityProvider
          mappingMethod: auto
          provider:
            clientID: 'Iv1.547165ce1cf2f590'
            clientSecret: 'c53e80ab92d48ab12f4e7f1f6976d1bdc996e0d7'
            endpoint:
              authURL: 'https://github.com/login/oauth/authorize'
              tokenURL: 'https://github.com/login/oauth/access_token'
            redirectURL: 'https://ks-console/oauth/redirect'
            scopes:
            - user
...
```

|

||||

|

||||

3. Add the configuration block for GitHubIdentityProvider in `authentication.oauthOptions.identityProviders`. See the following table for more information about different fields.

|

||||

|

||||

| Field | Description |
| --------------- | ------------------------------------------------------------ |
| `name` | The unique name of IdentityProvider. |
| `type` | The type of IdentityProvider plugin. GitHubIdentityProvider is a default implementation type. |
| `mappingMethod` | The account mapping configuration. You can use different mapping methods, such as:<br/>- `auto`: The default value. The user account will be automatically created and mapped if the login is successful. <br/>- `lookup`: Using this method requires you to manually provision accounts. <br/>For more information, see [the parameters in GitHub](https://github.com/kubesphere/kubesphere/blob/master/pkg/apiserver/authentication/oauth/oauth_options.go#L37-L44). |
| `clientID` | The OAuth2 client ID. |
| `clientSecret` | The OAuth2 client secret. |
| `authURL` | The OAuth2 authorization endpoint. |
| `tokenURL` | The OAuth2 token endpoint. |
| `redirectURL` | The URL that redirects back to ks-console. |

|

||||

|

||||

4. Restart `ks-apiserver` to update the configuration.

|

||||

|

||||

```bash

|

||||

kubectl -n kubesphere-system rollout restart deploy ks-apiserver

|

||||

```

|

||||

|

||||

5. Access the login page of the KubeSphere console and you can see the option **Log in with GitHub**.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

6. After you log in to the console, the account [can be invited to a workspace](../../workspace-administration/role-and-member-management/) to work in one or more projects.
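
To confirm that the change took effect, one option is to wait for the rollout to finish and then check that the provider appears in the ConfigMap. The following is a minimal sketch, assuming `kubectl` access to the cluster. The helper names `wait_for_apiserver` and `has_github_provider` are hypothetical, not part of KubeSphere.

```bash
# Hypothetical helper: block until the restarted ks-apiserver Deployment is ready.
wait_for_apiserver() {
  kubectl -n kubesphere-system rollout status deploy/ks-apiserver
}

# Hypothetical helper: succeed if the ConfigMap mentions GitHubIdentityProvider.
has_github_provider() {
  kubectl -n kubesphere-system get cm kubesphere-config -o yaml |
    grep -q 'type: GitHubIdentityProvider'
}
```

Running `wait_for_apiserver && has_github_provider && echo configured` after step 4 gives a quick signal before you open the login page.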

|

||||

|

|

@ -1,8 +1,157 @@

|

|||

---

|

||||

title: "Helm Developer Guide"

|

||||

keywords: 'kubernetes, kubesphere'

|

||||

description: ''

|

||||

|

||||

|

||||

keywords: 'Kubernetes, KubeSphere, helm, development'

|

||||

description: 'Helm developer guide'

|

||||

linkTitle: "Helm Developer Guide"

|

||||

weight: 14410

|

||||

---

|

||||

|

||||

You can upload the Helm chart of an app to KubeSphere so that tenants with necessary permissions can deploy it. This tutorial demonstrates how to prepare Helm charts using NGINX as an example.

|

||||

|

||||

## Install Helm

|

||||

|

||||

If you have already installed KubeSphere, then Helm is deployed in your environment. Otherwise, refer to the [Helm documentation](https://helm.sh/docs/intro/install/) to install Helm first.

|

||||

|

||||

## Create a Local Repository

|

||||

|

||||

Execute the following commands to create a repository on your machine.

|

||||

|

||||

```bash

|

||||

mkdir helm-repo

|

||||

```

|

||||

|

||||

```bash

|

||||

cd helm-repo

|

||||

```

|

||||

|

||||

## Create an App

|

||||

|

||||

Use `helm create` to create a folder named `nginx`, which automatically creates YAML templates and directories for your app. Generally, it is not recommended to change the names of files and directories in the top-level directory.

|

||||

|

||||

```bash
$ helm create nginx
$ tree nginx/
nginx/
├── charts
├── Chart.yaml
├── templates
│   ├── deployment.yaml
│   ├── _helpers.tpl
│   ├── ingress.yaml
│   ├── NOTES.txt
│   └── service.yaml
└── values.yaml
```

|

||||

|

||||

`Chart.yaml` is used to define the basic information of the chart, including name, API, and app version. For more information, see [Chart.yaml File](../helm-specification/#chartyaml-file).

|

||||

|

||||

An example of the `Chart.yaml` file:

|

||||

|

||||

```yaml
apiVersion: v1
appVersion: "1.0"
description: A Helm chart for Kubernetes
name: nginx
version: 0.1.0
```

|

||||

|

||||

When you deploy Helm-based apps to Kubernetes, you can edit the `values.yaml` file on the KubeSphere console directly.

|

||||

|

||||

An example of the `values.yaml` file:

|

||||

|

||||

```yaml
# Default values for test.
# This is a YAML-formatted file.
# Declare variables to be passed into your templates.

replicaCount: 1

image:
  repository: nginx
  tag: stable
  pullPolicy: IfNotPresent

nameOverride: ""
fullnameOverride: ""

service:
  type: ClusterIP
  port: 80

ingress:
  enabled: false
  annotations: {}
    # kubernetes.io/ingress.class: nginx
    # kubernetes.io/tls-acme: "true"
  path: /
  hosts:
    - chart-example.local
  tls: []
  #  - secretName: chart-example-tls
  #    hosts:
  #      - chart-example.local

resources: {}
  # We usually recommend not to specify default resources and to leave this as a conscious
  # choice for the user. This also increases chances charts run on environments with little
  # resources, such as Minikube. If you do want to specify resources, uncomment the following
  # lines, adjust them as necessary, and remove the curly braces after 'resources:'.
  # limits:
  #  cpu: 100m
  #  memory: 128Mi
  # requests:
  #  cpu: 100m
  #  memory: 128Mi

nodeSelector: {}

tolerations: []

affinity: {}
```

|

||||

|

||||

Refer to [Helm Specifications](../helm-specification/) to edit files in the `nginx` folder and save them when you finish editing.
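
Before packaging, it can help to check the edited chart for problems. The following is a minimal sketch, assuming Helm is installed and the current directory is `helm-repo`. The function name `validate_chart` is hypothetical, while `helm lint` and `helm template` are standard Helm commands.

```bash
# Hypothetical helper: lint a chart directory, then render its templates locally.
validate_chart() {
  chart_dir=$1
  # helm lint examines the chart for possible issues.
  helm lint "$chart_dir" || return 1
  # helm template renders the manifests locally; the output is discarded here.
  helm template "$chart_dir" > /dev/null
}
```

For the chart in this tutorial, the call would be `validate_chart nginx`.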

|

||||

|

||||

## Create an Index File (Optional)

|

||||

|

||||

To add a repository with an HTTP or HTTPS URL in KubeSphere, you need to upload an `index.yaml` file to the object storage in advance. Use Helm to create the index file by executing the following command in the parent directory of `nginx`.

|

||||

|

||||

```bash

|

||||

helm repo index .

|

||||

```

|

||||

|

||||

```bash

|

||||

$ ls

|

||||

index.yaml nginx

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- If the repository URL is S3-styled, an index file will be created automatically in the object storage when you add apps to the repository.

|

||||

|

||||

- For more information about how to add repositories to KubeSphere, see [Import a Helm Repository](../../../workspace-administration/app-repository/import-helm-repository/).

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

## Package the Chart

|

||||

|

||||

Go to the parent directory of `nginx` and execute the following command to package your chart, which creates a `.tgz` package.

|

||||

|

||||

```bash

|

||||

helm package nginx

|

||||

```

|

||||

|

||||

```bash

|

||||

$ ls

|

||||

nginx nginx-0.1.0.tgz

|

||||

```
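
A packaged chart is a gzip-compressed tar archive, so its contents can be inspected before you upload it. A small sketch follows; the function name `list_chart_files` is hypothetical.

```bash
# Hypothetical helper: list the files inside a packaged chart (.tgz).
list_chart_files() {
  tar -tzf "$1"
}
```

For the package above, `list_chart_files nginx-0.1.0.tgz` should print paths such as `nginx/Chart.yaml` and `nginx/values.yaml`.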

|

||||

|

||||

## Upload Your App

|

||||

|

||||

Now that you have your Helm-based app ready, you can upload it to KubeSphere and test it on the platform.

|

||||

|

||||

## See Also

|

||||

|

||||

[Helm Specifications](../helm-specification/)

|

||||

|

||||

[Import a Helm Repository](../../../workspace-administration/app-repository/import-helm-repository/)

|

||||

|

|

|

|||

|

|

@ -1,8 +1,130 @@

|

|||

---

|

||||

title: "Helm Specification"

|

||||

keywords: 'kubernetes, kubesphere'

|

||||

description: 'Helm Specification'

|

||||

|

||||

|

||||

title: "Helm Specifications"

|

||||

keywords: 'Kubernetes, KubeSphere, Helm, specifications'

|

||||

description: 'Helm Specifications'

|

||||

linkTitle: "Helm Specifications"

|

||||

weight: 14420

|

||||

---

|

||||

|

||||

Helm charts serve as a packaging format. A chart is a collection of files that describe a related set of Kubernetes resources. For more information, see the [Helm documentation](https://helm.sh/docs/topics/charts/).

|

||||

|

||||

## Structure

|

||||

|

||||

All related files of a chart are stored in a directory, which generally contains:

|

||||

|

||||

```text
chartname/
  Chart.yaml          # A YAML file containing basic information about the chart, such as version and name.
  LICENSE             # (Optional) A plain text file containing the license for the chart.
  README.md           # (Optional) The description of the app and how-to guide.
  values.yaml         # The default configuration values for this chart.
  values.schema.json  # (Optional) A JSON Schema for imposing a structure on the values.yaml file.
  charts/             # A directory containing any charts upon which this chart depends.
  crds/               # Custom Resource Definitions.
  templates/          # A directory of templates that will generate valid Kubernetes configuration files with corresponding values provided.
  templates/NOTES.txt # (Optional) A plain text file with usage notes.
```

|

||||

|

||||

## Chart.yaml File

|

||||

|

||||

You must provide the `Chart.yaml` file for a chart. Here is an example of the file with explanations for each field.

|

||||

|

||||

```yaml
apiVersion: (Required) The chart API version.
name: (Required) The name of the chart.
version: (Required) The version, following the SemVer 2 standard.
kubeVersion: (Optional) The compatible Kubernetes version, following the SemVer 2 standard.
description: (Optional) A single-sentence description of the app.
type: (Optional) The type of the chart.
keywords:
  - (Optional) A list of keywords about the app.
home: (Optional) The URL of the app.
sources:
  - (Optional) A list of URLs to source code for this app.
dependencies: (Optional) A list of the chart requirements.
  - name: The name of the chart, such as nginx.
    version: The version of the chart, such as "1.2.3".
    repository: The repository URL ("https://example.com/charts") or alias ("@repo-name").
    condition: (Optional) A yaml path that resolves to a boolean, used for enabling/disabling charts (e.g. subchart1.enabled).
    tags: (Optional)
      - Tags can be used to group charts for enabling/disabling together.
    import-values: (Optional)
      - ImportValues holds the mapping of source values to parent key to be imported. Each item can be a string or pair of child/parent sublist items.
    alias: (Optional) Alias to be used for the chart. It is useful when you have to add the same chart multiple times.
maintainers: (Optional)
  - name: (Required) The maintainer name.
    email: (Optional) The maintainer email.
    url: (Optional) A URL for the maintainer.
icon: (Optional) A URL to an SVG or PNG image to be used as an icon.
appVersion: (Optional) The app version. This needn't be SemVer.
deprecated: (Optional, boolean) Whether this chart is deprecated.
annotations:
  example: (Optional) A list of annotations keyed by name.
```

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- The field `dependencies` is used to define chart dependencies, which were located in a separate file `requirements.yaml` for `v1` charts. For more information, see [Chart Dependencies](https://helm.sh/docs/topics/charts/#chart-dependencies).

|

||||

- The field `type` is used to define the type of chart. Allowed values are `application` and `library`. For more information, see [Chart Types](https://helm.sh/docs/topics/charts/#chart-types).

|

||||

|

||||

{{</ notice >}}
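
The three required fields can be checked mechanically. The following is a rough sketch, not a substitute for `helm lint`. The function name `check_required_fields` is hypothetical, and the check only tests that each key appears at the start of a line.

```bash
# Hypothetical helper: verify that a Chart.yaml declares every required field.
check_required_fields() {
  chart_file=$1
  missing=0
  for field in apiVersion name version; do
    # A top-level key starts at column 0 and is followed by a colon.
    if ! grep -q "^${field}:" "$chart_file"; then
      echo "missing required field: ${field}" >&2
      missing=1
    fi
  done
  [ "$missing" -eq 0 ]
}
```

A call such as `check_required_fields nginx/Chart.yaml` exits with a non-zero status and names each missing field on standard error.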

|

||||

|

||||

## Values.yaml and Templates

|

||||

|

||||

Written in the [Go template language](https://golang.org/pkg/text/template/), Helm chart templates are stored in the `templates` folder of a chart. There are two ways to provide values for the templates:

|

||||

|

||||

1. Make a `values.yaml` file inside of a chart with default values that can be referenced.

|

||||

2. Make a YAML file that contains necessary values and use the file through the command line with `helm install`.

|

||||

|

||||

Here is an example of the template in the `templates` folder.

|

||||

|

||||

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: deis-database
  namespace: deis
  labels:
    app.kubernetes.io/managed-by: deis
spec:
  replicas: 1
  selector:
    app.kubernetes.io/name: deis-database
  template:
    metadata:
      labels:
        app.kubernetes.io/name: deis-database
    spec:
      serviceAccount: deis-database
      containers:
        - name: deis-database
          image: {{.Values.imageRegistry}}/postgres:{{.Values.dockerTag}}
          imagePullPolicy: {{.Values.pullPolicy}}
          ports:
            - containerPort: 5432
          env:
            - name: DATABASE_STORAGE
              value: {{default "minio" .Values.storage}}
```

|

||||

|

||||

The above example defines a ReplicationController template in Kubernetes. It references some values that are defined in `values.yaml`.

|

||||

|

||||

- `imageRegistry`: The Docker image registry.

|

||||

- `dockerTag`: The Docker image tag.

|

||||

- `pullPolicy`: The image pulling policy.

|

||||

- `storage`: The storage backend. It defaults to `minio`.

|

||||

|

||||

An example `values.yaml` file:

|

||||

|

||||

```text
imageRegistry: "quay.io/deis"
dockerTag: "latest"
pullPolicy: "Always"
storage: "s3"
```
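
The second approach, a separate values file passed on the command line, might look like the following sketch. It assumes the Helm 3 argument order (`helm template NAME CHART`). The release name `my-release`, the chart path `./chartname`, the file name `custom-values.yaml`, the override values, and the helper name `render_with_values` are all placeholders.

```bash
# Write an override file; keys not listed here keep the defaults from values.yaml.
cat > custom-values.yaml <<'EOF'
pullPolicy: "IfNotPresent"
storage: "gcs"
EOF

# Hypothetical helper: render the chart locally with the overrides applied.
render_with_values() {
  helm template my-release ./chartname -f "$1"
}
```

Calling `render_with_values custom-values.yaml` prints the rendered manifests, so you can check that `DATABASE_STORAGE` picks up the override. `helm install` accepts the same `-f` flag.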

|

||||

|

||||

## Reference

|

||||

|

||||

[Helm Documentation](https://helm.sh/docs/)

|

||||

|

||||

[Charts](https://helm.sh/docs/topics/charts/)

|

||||

|

|

@ -18,7 +18,7 @@ Using [Redis](https://redis.io/) as an example application, this tutorial demons

|

|||

## Prerequisites

|

||||

|

||||

- You need to enable [KubeSphere App Store (OpenPitrix)](../../pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project and an account (`project-regular`). For more information, see [Create Workspace, Project, Account and Role](../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project and an account (`project-regular`). For more information, see [Create Workspaces, Projects, Accounts and Roles](../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

|

|

@ -26,7 +26,7 @@ Using [Redis](https://redis.io/) as an example application, this tutorial demons

|

|||

|

||||

You need to create two accounts first, one for ISVs (`isv`) and the other (`reviewer`) for app technical reviewers.

|

||||

|

||||

1. Log in the KubeSphere console with the account `admin`. Click **Platform** in the top left corner and select **Access Control**. In **Account Roles**, click **Create**.

|

||||

1. Log in to the KubeSphere console with the account `admin`. Click **Platform** in the top left corner and select **Access Control**. In **Account Roles**, click **Create**.

|

||||

|

||||

|

||||

|

||||

|

|

@ -56,7 +56,7 @@ You need to create two accounts first, one for ISVs (`isv`) and the other (`revi

|

|||

|

||||

### Step 2: Upload and submit an application

|

||||

|

||||

1. Log in KubeSphere as `isv` and go to your workspace. You need to upload the example app Redis to this workspace so that it can be used later. First, download the app [Redis 11.3.4](https://github.com/kubesphere/tutorial/raw/master/tutorial%205%20-%20app-store/redis-11.3.4.tgz) and click **Upload Template** in **App Templates**.

|

||||

1. Log in to KubeSphere as `isv` and go to your workspace. You need to upload the example app Redis to this workspace so that it can be used later. First, download the app [Redis 11.3.4](https://github.com/kubesphere/tutorial/raw/master/tutorial%205%20-%20app-store/redis-11.3.4.tgz) and click **Upload Template** in **App Templates**.

|

||||

|

||||

|

||||

|

||||

|

|

@ -175,7 +175,7 @@ After the app is approved, `isv` can release the Redis application to the App St

|

|||

|

||||

`reviewer` can create multiple categories for different types of applications based on their function and usage. This is similar to setting tags, and categories can be used in the App Store as filters, such as Big Data, Middleware, and IoT.

|

||||

|

||||

1. Log in KubeSphere as `reviewer`. To create a category, go to the **App Store Management** page and click the plus icon in **App Categories**.

|

||||

1. Log in to KubeSphere as `reviewer`. To create a category, go to the **App Store Management** page and click the plus icon in **App Categories**.

|

||||

|

||||

|

||||

|

||||

|

|

@ -205,7 +205,7 @@ After the app is approved, `isv` can release the Redis application to the App St

|

|||

|

||||

To allow workspace users to upgrade apps, you need to add new app versions to KubeSphere first. Follow the steps below to add a new version for the example app.

|

||||

|

||||

1. Log in KubeSphere as `isv` again and navigate to **App Templates**. Click the app Redis in the list.

|

||||

1. Log in to KubeSphere as `isv` again and navigate to **App Templates**. Click the app Redis in the list.

|

||||

|

||||

|

||||

|

||||

|

|

@ -233,7 +233,7 @@ To follow the steps below, you must deploy an app of one of its old versions fir

|

|||

|

||||

{{</ notice >}}

|

||||

|

||||

1. Log in KubeSphere as `project-regular`, navigate to the **Applications** page of the project, and click the app to be upgraded.

|

||||

1. Log in to KubeSphere as `project-regular`, navigate to the **Applications** page of the project, and click the app to be upgraded.

|

||||

|

||||

|

||||

|

||||

|

|

@ -257,7 +257,7 @@ To follow the steps below, you must deploy an app of one of its old versions fir

|

|||

|

||||

You can choose to remove an app entirely from the App Store or suspend a specific app version.

|

||||

|

||||

1. Log in KubeSphere as `reviewer`. Click **Platform** in the top left corner and go to **App Store Management**. On the **App Store** page, click Redis.

|

||||

1. Log in to KubeSphere as `reviewer`. Click **Platform** in the top left corner and go to **App Store Management**. On the **App Store** page, click Redis.

@@ -13,11 +13,11 @@ This tutorial walks you through an example of deploying etcd from the App Store

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](https://kubesphere.io/docs/pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy etcd from App Store

|

||||

### Step 1: Deploy etcd from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -47,11 +47,11 @@ This tutorial walks you through an example of deploying etcd from the App Store

### Step 2: Access etcd Service

|

||||

### Step 2: Access the etcd Service

|

||||

|

||||

After the app is deployed, you can use etcdctl, a command-line tool for interacting with the etcd server, to access etcd directly from the KubeSphere console.

|

||||

|

||||

1. Navigate to **StatefulSets** in **Workloads**, click the service name of etcd.

|

||||

1. Navigate to **StatefulSets** in **Workloads**, and click the service name of etcd.

@@ -12,11 +12,11 @@ This tutorial walks you through an example of deploying Memcached from the App S

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](https://kubesphere.io/docs/pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy Memcached from App Store

|

||||

### Step 1: Deploy Memcached from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -42,7 +42,7 @@ This tutorial walks you through an example of deploying Memcached from the App S

### Step 2: Access Memcached

|

||||

|

||||

1. Navigate to **Services**, click the service name of Memcached.

|

||||

1. Navigate to **Services**, and click the service name of Memcached.

@@ -3,7 +3,6 @@ title: "Deploy MinIO on KubeSphere"

keywords: 'Kubernetes, KubeSphere, MinIO, app-store'

|

||||

description: 'How to deploy MinIO on KubeSphere from the App Store of KubeSphere'

|

||||

linkTitle: "Deploy MinIO on KubeSphere"

|

||||

|

||||

weight: 14240

|

||||

---

|

||||

[MinIO](https://min.io/) object storage is designed for high performance and the S3 API. It is ideal for large, private cloud environments with stringent security requirements and delivers mission-critical availability across a diverse range of workloads.

@@ -13,11 +12,11 @@ This tutorial walks you through an example of deploying MinIO from the App Store

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](../../../pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy MinIO from App Store

|

||||

### Step 1: Deploy MinIO from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -41,7 +40,7 @@ This tutorial walks you through an example of deploying MinIO from the App Store

### Step 2: Access MinIO Browser

|

||||

### Step 2: Access the MinIO Browser

|

||||

|

||||

To access MinIO outside the cluster, you need to expose the app through NodePort first.
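
The tutorial performs this step in the console. As a rough illustration of what exposing an app through NodePort amounts to, the sketch below shows a Service manifest of type `NodePort`. The Service name, the `app: minio` selector label, port `9000`, and the `nodePort` value are all assumptions made for illustration; check the labels and ports of your own MinIO deployment before adapting it.

```yaml
# Hypothetical Service manifest; the name, selector label, and ports are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: minio-nodeport          # hypothetical name
  namespace: demo-project       # the project used in this tutorial
spec:
  type: NodePort                # makes the Service reachable on every node's IP
  selector:
    app: minio                  # assumed Pod label; verify against your MinIO Pods
  ports:
    - name: http
      port: 9000                # assumed MinIO service port
      targetPort: 9000          # assumed container port
      nodePort: 30900           # optional; must be within the cluster's NodePort range (30000-32767 by default)
```

If `nodePort` is omitted, Kubernetes assigns a free port from the configured range, and the app is then reachable at `<NodeIP>:<NodePort>`.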

@@ -13,11 +13,11 @@ This tutorial walks you through an example of deploying MongoDB from the App Sto

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](../../../pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy MongoDB from App Store

|

||||

### Step 1: Deploy MongoDB from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -47,7 +47,7 @@ This tutorial walks you through an example of deploying MongoDB from the App Sto

### Step 2: Access MongoDB Terminal

|

||||

### Step 2: Access the MongoDB Terminal

|

||||

|

||||

1. Go to **Services** and click the service name of MongoDB.

@@ -13,11 +13,11 @@ This tutorial walks you through an example of deploying MySQL from the App Store

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](https://kubesphere.io/docs/pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy MySQL from App Store

|

||||

### Step 1: Deploy MySQL from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -33,7 +33,7 @@ This tutorial walks you through an example of deploying MySQL from the App Store

4. In **App Config**, uncomment the `mysqlRootPassword` field or customize the password. Click **Deploy** to continue.

|

||||

4. In **App Config**, uncomment the `mysqlRootPassword` field and customize the password. Click **Deploy** to continue.
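
As a minimal sketch of the edit described in this step, the fragment below shows the `mysqlRootPassword` field before and after it is uncommented. The value `testing` is assumed here only because a later step logs in with `mysql -uroot -ptesting`; the surrounding layout of the values file is also an assumption, so treat this as an illustration rather than the chart's exact contents.

```yaml
# Before (assumed): the field is commented out in the app's values.
# mysqlRootPassword: testing

# After: the field is uncommented and set to a password of your choice.
# "testing" matches the login command used later in this tutorial.
mysqlRootPassword: testing
```

Whatever value you set here is the one to pass after `-p` when you log in to MySQL as the root user.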

@@ -41,7 +41,7 @@ This tutorial walks you through an example of deploying MySQL from the App Store

### Step 2: Access MySQL Terminal

|

||||

### Step 2: Access the MySQL Terminal

|

||||

|

||||

1. Go to **Workloads** and click the service name of MySQL.

@@ -51,11 +51,11 @@ This tutorial walks you through an example of deploying MySQL from the App Store

3. In the terminal, execute `mysql -uroot -ptesting` to log in MySQL as the root user.

|

||||

3. In the terminal, execute `mysql -uroot -ptesting` to log in to MySQL as the root user.

|

||||

|

||||

|

||||

|

||||

### Step 3: Access MySQL Database outside Cluster

|

||||

### Step 3: Access the MySQL Database outside the Cluster

|

||||

|

||||

To access MySQL outside the cluster, you need to expose the app through NodePort first.

@@ -88,4 +88,3 @@ To access MySQL outside the cluster, you need to expose the app through NodePort

{{</ notice >}}

|

||||

|

||||

6. For more information about MySQL, refer to [the official documentation of MySQL](https://dev.mysql.com/doc/).

@@ -13,11 +13,11 @@ This tutorial walks you through an example of deploying NGINX from the App Store

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](../../../pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy NGINX from App Store

|

||||

### Step 1: Deploy NGINX from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -13,11 +13,11 @@ This tutorial walks you through an example of how to deploy PostgreSQL from the

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](../../../pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy PostgreSQL from App Store

|

||||

### Step 1: Deploy PostgreSQL from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -47,9 +47,9 @@ This tutorial walks you through an example of how to deploy PostgreSQL from the

### Step 2: Access PostgreSQL Database

|

||||

### Step 2: Access the PostgreSQL Database

|

||||

|

||||

To access MySQL outside the cluster, you need to expose the app through NodePort first.

|

||||

To access PostgreSQL outside the cluster, you need to expose the app through NodePort first.

|

||||

|

||||

1. Go to **Services** and click the service name of PostgreSQL.

@@ -2,8 +2,7 @@

title: "Deploy RabbitMQ on KubeSphere"

|

||||

keywords: 'KubeSphere, RabbitMQ, Kubernetes, Installation'

|

||||

description: 'How to deploy RabbitMQ on KubeSphere through App Store'

|

||||

|

||||

link title: "Deploy RabbitMQ"

|

||||

linkTitle: "Deploy RabbitMQ on KubeSphere"

|

||||

weight: 14290

|

||||

---

|

||||

[RabbitMQ](https://www.rabbitmq.com/) is the most widely deployed open-source message broker. It is lightweight and easy to deploy on premises and in the cloud. It supports multiple messaging protocols. RabbitMQ can be deployed in distributed and federated configurations to meet high-scale, high-availability requirements.

@@ -13,11 +12,11 @@ This tutorial walks you through an example of how to deploy RabbitMQ from the Ap

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](https://kubesphere.io/docs/pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy RabbitMQ from App Store

|

||||

### Step 1: Deploy RabbitMQ from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -49,7 +48,7 @@ This tutorial walks you through an example of how to deploy RabbitMQ from the Ap

### Step 2: Access RabbitMQ Dashboard

|

||||

### Step 2: Access the RabbitMQ Dashboard

|

||||

|

||||

To access RabbitMQ outside the cluster, you need to expose the app through NodePort first.

@@ -13,11 +13,11 @@ This tutorial walks you through an example of deploying Redis from the App Store

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](../../../pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account (`project-regular`) for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy Redis from App Store

|

||||

### Step 1: Deploy Redis from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -47,7 +47,7 @@ This tutorial walks you through an example of deploying Redis from the App Store

### Step 2: Access Redis Terminal

|

||||

### Step 2: Access the Redis Terminal

|

||||

|

||||

1. Go to **Services** and click the service name of Redis.

@@ -2,8 +2,7 @@

title: "Deploy Tomcat on KubeSphere"

|

||||

keywords: 'KubeSphere, Kubernetes, Installation, Tomcat'

|

||||

description: 'How to deploy Tomcat on KubeSphere through App Store'

|

||||

|

||||

link title: "Deploy Tomcat"

|

||||

linkTitle: "Deploy Tomcat on KubeSphere"

|

||||

weight: 14292

|

||||

---

|

||||

[Apache Tomcat](https://tomcat.apache.org/index.html) powers numerous large-scale, mission-critical web applications across a diverse range of industries and organizations. Tomcat provides a pure Java HTTP web server environment in which Java code can run.

@@ -13,11 +12,11 @@ This tutorial walks you through an example of deploying Tomcat from the App Stor

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](../../../pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy Tomcat from App Store

|

||||

### Step 1: Deploy Tomcat from the App Store

|

||||

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

@@ -41,7 +40,7 @@ This tutorial walks you through an example of deploying Tomcat from the App Stor

### Step 2: Access Tomcat Terminal

|

||||

### Step 2: Access the Tomcat Terminal

|

||||

|

||||

1. Go to **Services** and click the service name of Tomcat.

@@ -55,9 +54,9 @@ This tutorial walks you through an example of deploying Tomcat from the App Stor

### Step 3: Access Tomcat Project from Browser

|

||||

### Step 3: Access a Tomcat Project from Your Browser

|

||||

|

||||

To access Tomcat projects outside the cluster, you need to expose the app through NodePort first.

|

||||

To access a Tomcat project outside the cluster, you need to expose the app through NodePort first.

|

||||

|

||||

1. Go to **Services** and click the service name of Tomcat.

@@ -14,7 +14,7 @@ This tutorial shows you how to quickly deploy a [GitLab](https://gitlab.com/gitl

## Prerequisites

|

||||

|

||||

- You have enabled [OpenPitrix](/docs/pluggable-components/app-store/).

|

||||

- You have completed the tutorial [Create Workspace, Project, Account and Role](/docs/quick-start/create-workspace-and-project/). The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, we'll work in the project `apps` of the workspace `apps`.

|

||||

- You have completed the tutorial [Create Workspaces, Projects, Accounts and Roles](/docs/quick-start/create-workspace-and-project/). The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, we'll work in the project `apps` of the workspace `apps`.

|

||||

|

||||

## Hands-on Lab

@@ -11,7 +11,7 @@ In addition to monitoring data at the physical resource level, cluster administr

## Prerequisites

|

||||

|

||||

You need an account granted a role including the authorization of **Clusters Management**. For example, you can log in the console as `admin` directly or create a new role with the authorization and assign it to an account.

|

||||

You need an account granted a role including the authorization of **Clusters Management**. For example, you can log in to the console as `admin` directly or create a new role with the authorization and assign it to an account.

|

||||

|

||||

## Resource Usage

@@ -12,13 +12,13 @@ This guide demonstrates how to set cluster visibility.

## Prerequisites

|

||||

* You need to enable the [multi-cluster feature](../../../multicluster-management/).

|

||||

* You need to have a workspace and an account that has the permission to create workspaces, such as `ws-manager`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

* You need to have a workspace and an account that has the permission to create workspaces, such as `ws-manager`. For more information, see [Create Workspaces, Projects, Accounts and Roles](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Set Cluster Visibility

|

||||

|

||||

### Select available clusters when you create a workspace

|

||||

|

||||

1. Log in KubeSphere with an account that has the permission to create a workspace, such as `ws-manager`.

|

||||

1. Log in to KubeSphere with an account that has the permission to create a workspace, such as `ws-manager`.

|

||||

|

||||

2. Click **Platform** in the top left corner and select **Access Control**. In **Workspaces** from the navigation bar, click **Create**.

@@ -44,7 +44,7 @@ Try not to create resources on the host cluster to avoid excessive loads, which

After a workspace is created, you can allocate additional clusters to the workspace through authorization or unbind a cluster from the workspace. Follow the steps below to adjust the visibility of a cluster.

|

||||

|

||||

1. Log in KubeSphere with an account that has the permission to manage clusters, such as `admin`.

|

||||

1. Log in to KubeSphere with an account that has the permission to manage clusters, such as `admin`.

|

||||

|

||||

2. Click **Platform** in the top left corner and select **Clusters Management**. Select a cluster from the list to view cluster information.

@@ -52,12 +52,12 @@ After a workspace is created, you can allocate additional clusters to the worksp

4. You can see the list of authorized workspaces, which means the current cluster is available to resources in all these workspaces.

|

||||

|

||||

|

||||

|

||||

|

||||

5. Click **Edit Visibility** to set the cluster authorization. You can select new workspaces that will be able to use the cluster or unbind it from a workspace.

|

||||

|

||||

|

||||

|

||||

|

||||

### Make a cluster public

|

||||

|

||||

You can check **Set as public cluster** so that all platform users can access the cluster, in which they are able to create and schedule resources.

|

||||

You can check **Set as public cluster** so that platform users can access the cluster, in which they are able to create and schedule resources.

@@ -9,7 +9,7 @@ weight: 8630

## Objective

|

||||

|

||||

This guide demonstrates email notification settings (customized settings supported) for alert policies. You can specify user email addresses to receive alert messages.

|

||||

This guide demonstrates email notification settings (customized settings supported) for alerting policies. You can specify user email addresses to receive alerting messages.

|

||||

|

||||

## Prerequisites

@@ -17,7 +17,7 @@ This guide demonstrates email notification settings (customized settings support

## Hands-on Lab

|

||||

|

||||

1. Log in the web console with one account granted the role `platform-admin`.

|

||||

1. Log in to the web console with one account granted the role `platform-admin`.

|

||||

2. Click **Platform** in the top left corner and select **Clusters Management**.

@@ -10,7 +10,7 @@ KubeSphere provides monitoring of related metrics such as CPU, memory, network,

## Prerequisites

|

||||

|

||||

You need an account granted a role including the authorization of **Clusters Management**. For example, you can log in the console as `admin` directly or create a new role with the authorization and assign it to an account.

|

||||

You need an account granted a role including the authorization of **Clusters Management**. For example, you can log in to the console as `admin` directly or create a new role with the authorization and assign it to an account.

|

||||

|

||||

## Cluster Status Monitoring

@@ -6,17 +6,17 @@ linkTitle: "Alerting Messages (Node Level)"

weight: 8540

|

||||

---

|

||||

|

||||

Alert messages record detailed information of alerts triggered based on alert rules, including monitoring targets, alert policies, recent notifications and comments.

|

||||

Alerting messages record detailed information of alerts triggered based on alert rules, including monitoring targets, alerting policies, recent notifications and comments.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

You have created a node-level alert policy and received alert notifications of it. If it is not ready, please refer to [Alert Policy (Node Level)](../alerting-policy/) to create one first.

|

||||

You have created a node-level alerting policy and received alert notifications of it. If it is not ready, please refer to [Alerting Policy (Node Level)](../alerting-policy/) to create one first.

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Task 1: View alert messages

|

||||

### Task 1: View alerting messages

|

||||

|

||||

1. Log in the console with one account granted the role `platform-admin`.

|

||||

1. Log in to the console with one account granted the role `platform-admin`.

|

||||

|

||||

2. Click **Platform** in the top left corner and select **Clusters Management**.

@@ -24,17 +24,17 @@ You have created a node-level alert policy and received alert notifications of i

3. Select a cluster from the list and enter it. (If you have not enabled the [multi-cluster feature](../../../multicluster-management/), you go directly to the **Overview** page.)

|

||||

|

||||

4. Navigate to **Alerting Messages** under **Monitoring & Alerting**, and you can see alert messages in the list. In the example of [Alert Policy (Node Level)](../alerting-policy/), you set one node as the monitoring target, and its memory utilization rate is higher than the threshold of `50%`, so you can see an alert message of it.

|

||||

4. Navigate to **Alerting Messages** under **Monitoring & Alerting**, and you can see alerting messages in the list. In the example of [Alerting Policy (Node Level)](../alerting-policy/), you set one node as the monitoring target, and its memory utilization rate is higher than the threshold of `50%`, so you can see an alerting message of it.

|

||||

|

||||

|

||||

|

||||

5. Click the alert message to enter the detail page. In **Alerting Detail**, you can see the graph of memory utilization rate of the node over time, which has been continuously higher than the threshold of `50%` set in the alert rule, so the alert was triggered.

|

||||

5. Click the alerting message to enter the detail page. In **Alerting Detail**, you can see the graph of memory utilization rate of the node over time, which has been continuously higher than the threshold of `50%` set in the alert rule, so the alert was triggered.

|

||||

|

||||

|

||||

|

||||

### Task 2: View alert policies

|

||||

### Task 2: View alerting policies

|

||||

|

||||

Switch to **Alerting Policy** to view the alert policy corresponding to this alert message, and you can see the triggering rule of it set in the example of [Alert Policy (Node Level)](../alerting-policy/).

|

||||