mirror of https://github.com/kubesphere/website.git, synced 2025-12-26 00:12:48 +00:00

Merge branch 'kubesphere:master' into master


commit d7cbec9e0c

@@ -66,7 +66,7 @@

.nav {
height:100%;
margin-left: 280px;
margin-left: 250px;
margin-right: 360px;
line-height: 93px;

@@ -392,6 +392,11 @@ weight = 6
name = "用户论坛"
URL = "https://ask.kubesphere.io/forum"

[[languages.zh.menu.main]]
weight = 7
name = "认证"
URL = "https://kubesphere.cloud/certification/"


# [languages.tr]
# weight = 3

@@ -162,7 +162,8 @@ section6:
children:
- icon: /images/home/section6-anchnet.jpg
- icon: /images/home/section6-aviation-industry-corporation-of-china.jpg
- icon: /images/home/section6-aqara.jpg
- icon: /images/case/logo-alphaflow.png
- icon: /images/home/section6-aqara.jpg
- icon: /images/home/section6-bank-of-beijing.jpg
- icon: /images/home/section6-benlai.jpg
- icon: /images/home/section6-china-taiping.jpg

@@ -184,7 +185,7 @@ section6:
- icon: /images/home/section6-webank.jpg
- icon: /images/home/section6-wisdom-world.jpg
- icon: /images/home/section6-yiliu.jpg
- icon: /images/home/section6-zking-insurance.jpg



btnContent: Case Studies

@@ -30,7 +30,7 @@ In Kubernetes clusters, LoadBalancer services can be used to expose backend work

OpenELB is designed to expose LoadBalancer services in non-public-cloud Kubernetes clusters. It provides easy-to-use EIPs and makes IP address pool management easier for users in private environments.
## OpenELB Adopters and Contributors
Currently, OpenELB has been used in production environments by many enterprises, such as BENLAI, Suzhou TV, CVTE, Wisdom World, Jollychic, QingCloud, BAIWANG, Rocketbyte, and more. At the end of 2019, BENLAI has used an earlier version of OpenELB in production. Now, OpenELB has attracted 13 contributors and more than 100 community members.
Currently, OpenELB has been used in production environments by many enterprises, such as BENLAI, Suzhou TV, CVTE, Wisdom World, Jollychic, QingCloud, BAIWANG and more. At the end of 2019, BENLAI has used an earlier version of OpenELB in production. Now, OpenELB has attracted 13 contributors and more than 100 community members.


## Differences Between OpenELB and MetalLB

@@ -74,6 +74,8 @@ section3:
children:
- name: 'msxf'
icon: 'images/case/logo-msxf.png'
- name: 'hshc'
icon: 'images/case/logo-hshc.png'

- name: 'IT Service'
children:

@ -146,7 +146,7 @@ Pipelines include [declarative pipelines](https://www.jenkins.io/doc/book/pipeli

|

|||

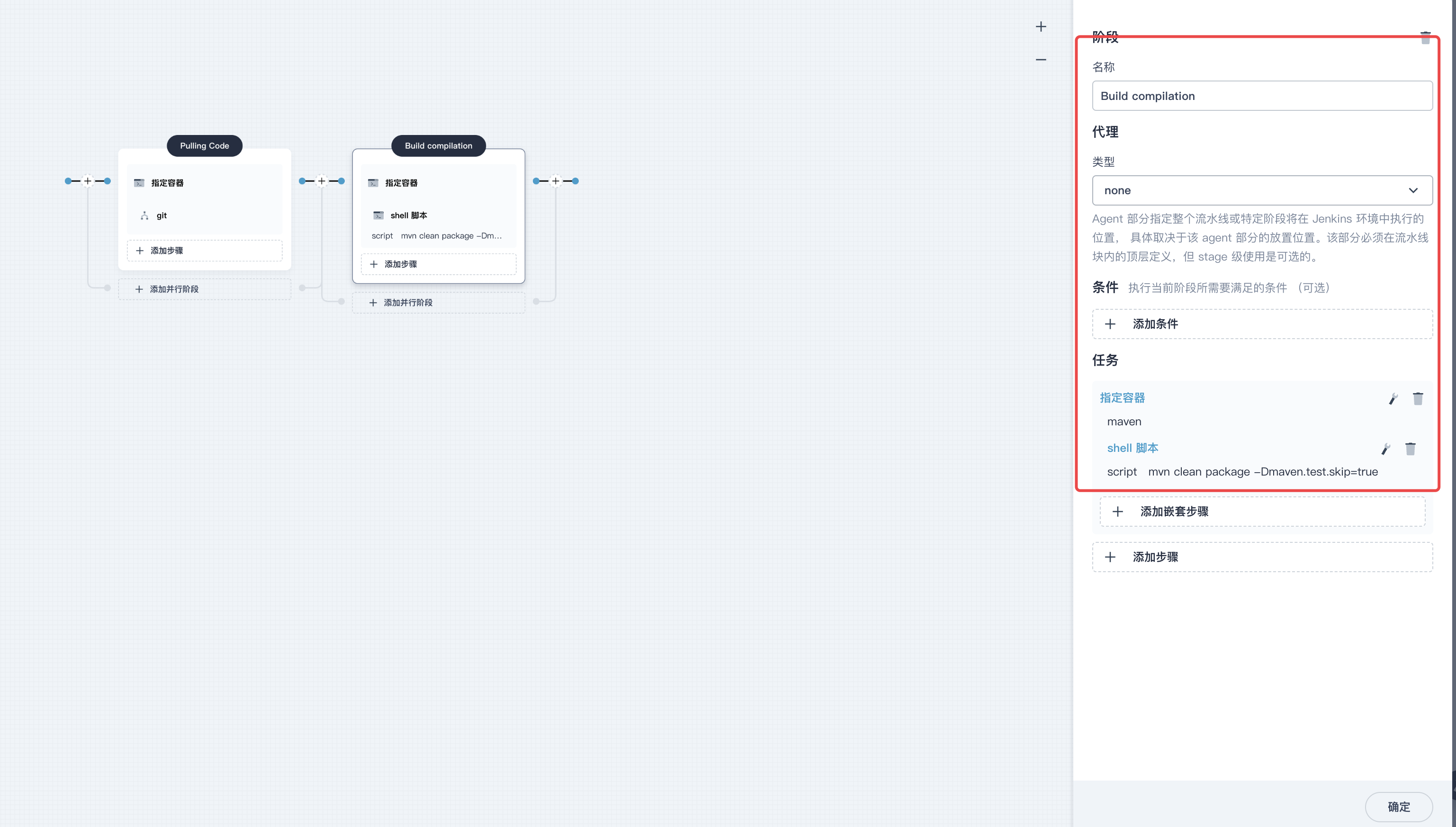

3. Click **Add Nesting Steps** to add a nested step under the `maven` container. Select **shell** from the list and enter the following command in the command line. Click **OK** to save it.

|

||||

|

||||

```shell

|

||||

mvn clean -gs `pwd`/configuration/settings.xml test

|

||||

mvn clean test

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

|

|||

|

|

@@ -40,7 +40,7 @@ See the table below for the role of each cluster.

{{< notice note >}}

These Kubernetes clusters can be hosted across different cloud providers and their Kubernetes versions can also vary. Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.
These Kubernetes clusters can be hosted across different cloud providers and their Kubernetes versions can also vary. Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.

{{</ notice >}}

@@ -146,7 +146,7 @@ Pipelines include [declarative pipelines](https://www.jenkins.io/doc/book/pipeli
3. Click **Add Nesting Steps** to add a nested step under the `maven` container. Select **shell** from the list and enter the following command in the command line. Click **OK** to save it.

```shell
mvn clean -gs `pwd`/configuration/settings.xml test
mvn clean test
```

{{< notice note >}}

@@ -28,7 +28,7 @@ You need to select:

{{< notice note >}}

- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.
- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.
- 2 nodes are included in this example. You can add more nodes based on your own needs, especially in a production environment.
- The machine type Standard/4 GB/2 vCPUs is for minimal installation. If you plan to enable several pluggable components or use the cluster for production, you can upgrade your nodes to a more powerful type (such as CPU-Optimized / 8 GB / 4 vCPUs). It seems that DigitalOcean provisions the control plane nodes based on the type of the worker nodes, and for Standard ones the API server can become unresponsive quite soon.

@@ -79,7 +79,7 @@ Check the installation with `aws --version`.

{{< notice note >}}

- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.
- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.
- 3 nodes are included in this example. You can add more nodes based on your own needs especially in a production environment.
- The machine type t3.medium (2 vCPU, 4GB memory) is for minimal installation. If you want to enable pluggable components or use the cluster for production, please select a machine type with more resources.
- For other settings, you can change them as well based on your own needs or use the default value.

@@ -30,7 +30,7 @@ This guide walks you through the steps of deploying KubeSphere on [Google Kubern

{{< notice note >}}

- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.
- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.
- 3 nodes are included in this example. You can add more nodes based on your own needs especially in a production environment.
- The machine type e2-medium (2 vCPU, 4GB memory) is for minimal installation. If you want to enable pluggable components or use the cluster for production, please select a machine type with more resources.
- For other settings, you can change them as well based on your own needs or use the default value.

@@ -14,7 +14,7 @@ This guide walks you through the steps of deploying KubeSphere on [Huaiwei CCE](

First, create a Kubernetes cluster based on the requirements below.

- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.
- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.
- Ensure the cloud computing network for your Kubernetes cluster works, or use an elastic IP when you use **Auto Create** or **Select Existing**. You can also configure the network after the cluster is created. Refer to [NAT Gateway](https://support.huaweicloud.com/en-us/productdesc-natgateway/en-us_topic_0086739762.html).
- Select `s3.xlarge.2` `4-core|8GB` for nodes and add more if necessary (3 and more nodes are required for a production environment).

@@ -30,7 +30,7 @@ This guide walks you through the steps of deploying KubeSphere on [Oracle Kubern

{{< notice note >}}

- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.
- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.
- It is recommended that you should select **Public** for **Visibility Type**, which will assign a public IP address for every node. The IP address can be used later to access the web console of KubeSphere.
- In Oracle Cloud, a Shape is a template that determines the number of CPUs, amount of memory, and other resources that are allocated to an instance. `VM.Standard.E2.2 (2 CPUs and 16G Memory)` is used in this example. For more information, see [Standard Shapes](https://docs.cloud.oracle.com/en-us/iaas/Content/Compute/References/computeshapes.htm#vmshapes__vm-standard).
- 3 nodes are included in this example. You can add more nodes based on your own needs especially in a production environment.

@@ -8,7 +8,7 @@ weight: 4120

You can install KubeSphere on virtual machines and bare metal with Kubernetes also provisioned. In addition, KubeSphere can also be deployed on cloud-hosted and on-premises Kubernetes clusters as long as your Kubernetes cluster meets the prerequisites below.

- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.
- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.
- Available CPU > 1 Core and Memory > 2 G. Only x86_64 CPUs are supported, and Arm CPUs are not fully supported at present.
- A **default** StorageClass in your Kubernetes cluster is configured; use `kubectl get sc` to verify it.
- The CSR signing feature is activated in kube-apiserver when it is started with the `--cluster-signing-cert-file` and `--cluster-signing-key-file` parameters. See [RKE installation issue](https://github.com/kubesphere/kubesphere/issues/1925#issuecomment-591698309).
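The version requirement in the hunk above could be turned into a quick pre-flight check. The sketch below is only an illustration and is not part of the commit: `k8s_support_level` is a hypothetical helper, and it assumes the second variant of the note (v1.20.x to v1.23.x unmarked, v1.24.x to v1.26.x marked with an asterisk for edge-node caveats).

```shell
# Hypothetical helper (not part of KubeSphere or KubeKey): classifies a
# Kubernetes version string such as "v1.23.10" against the ranges named in
# the note above. The ranges are an assumption taken from that note.
k8s_support_level() {
  version="${1#v}"         # drop the leading "v": 1.23.10
  major="${version%%.*}"   # text before the first dot: 1
  rest="${version#*.}"     # text after the first dot: 23.10
  minor="${rest%%.*}"      # minor number: 23
  case "$major.$minor" in
    1.2[0-3]) echo "supported" ;;
    1.2[4-6]) echo "supported-edge-caveat" ;;   # asterisked versions
    *)        echo "unsupported" ;;
  esac
}

k8s_support_level "v1.23.10"
```

Pass the server version reported by your own cluster; the function only inspects the major and minor components.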

@@ -245,55 +245,18 @@ To access the console, make sure port 30880 is opened in your security group.
### Image list of KubeSphere 3.4

```txt
##k8s-images
kubesphere/kube-apiserver:v1.23.10
kubesphere/kube-controller-manager:v1.23.10
kubesphere/kube-proxy:v1.23.10
kubesphere/kube-scheduler:v1.23.10
kubesphere/kube-apiserver:v1.24.3
kubesphere/kube-controller-manager:v1.24.3
kubesphere/kube-proxy:v1.24.3
kubesphere/kube-scheduler:v1.24.3
kubesphere/kube-apiserver:v1.22.12
kubesphere/kube-controller-manager:v1.22.12
kubesphere/kube-proxy:v1.22.12
kubesphere/kube-scheduler:v1.22.12
kubesphere/kube-apiserver:v1.21.14
kubesphere/kube-controller-manager:v1.21.14
kubesphere/kube-proxy:v1.21.14
kubesphere/kube-scheduler:v1.21.14
kubesphere/pause:3.7
kubesphere/pause:3.6
kubesphere/pause:3.5
kubesphere/pause:3.4.1
coredns/coredns:1.8.0
coredns/coredns:1.8.6
calico/cni:v3.23.2
calico/kube-controllers:v3.23.2
calico/node:v3.23.2
calico/pod2daemon-flexvol:v3.23.2
calico/typha:v3.23.2
kubesphere/flannel:v0.12.0
openebs/provisioner-localpv:2.10.1
openebs/linux-utils:2.10.0
library/haproxy:2.3
kubesphere/nfs-subdir-external-provisioner:v4.0.2
kubesphere/k8s-dns-node-cache:1.15.12
##kubesphere-images
kubesphere/ks-installer:v3.4.0
kubesphere/ks-apiserver:v3.4.0
kubesphere/ks-console:v3.4.0
kubesphere/ks-controller-manager:v3.4.0
kubesphere/ks-upgrade:v3.4.0
kubesphere/kubectl:v1.22.0
kubesphere/kubectl:v1.21.0
kubesphere/kubectl:v1.20.0
kubesphere/kubefed:v0.8.1
kubesphere/tower:v0.2.0
kubesphere/tower:v0.2.1
minio/minio:RELEASE.2019-08-07T01-59-21Z
minio/mc:RELEASE.2019-08-07T23-14-43Z
csiplugin/snapshot-controller:v4.0.0
kubesphere/nginx-ingress-controller:v1.1.0
kubesphere/nginx-ingress-controller:v1.3.1
mirrorgooglecontainers/defaultbackend-amd64:1.4
kubesphere/metrics-server:v0.4.2
redis:5.0.14-alpine

@@ -302,18 +265,18 @@ alpine:3.14
osixia/openldap:1.3.0
kubesphere/netshoot:v1.0
##kubeedge-images
kubeedge/cloudcore:v1.9.2
kubeedge/iptables-manager:v1.9.2
kubesphere/edgeservice:v0.2.0
kubeedge/cloudcore:v1.13.0
kubesphere/iptables-manager:v1.13.0
kubesphere/edgeservice:v0.3.0
##gatekeeper-images
openpolicyagent/gatekeeper:v3.5.2
##openpitrix-images
kubesphere/openpitrix-jobs:v3.4.0
kubesphere/openpitrix-jobs:v3.3.2
##kubesphere-devops-images
kubesphere/devops-apiserver:ks-v3.4.0
kubesphere/devops-controller:ks-v3.4.0
kubesphere/devops-tools:ks-v3.4.0
kubesphere/ks-jenkins:v3.4.0-2.319.1
kubesphere/ks-jenkins:v3.4.0-2.319.3-1
jenkins/inbound-agent:4.10-2
kubesphere/builder-base:v3.2.2
kubesphere/builder-nodejs:v3.2.0

@@ -356,43 +319,46 @@ quay.io/argoproj/argocd-applicationset:v0.4.1
ghcr.io/dexidp/dex:v2.30.2
redis:6.2.6-alpine
##kubesphere-monitoring-images
jimmidyson/configmap-reload:v0.5.0
prom/prometheus:v2.34.0
jimmidyson/configmap-reload:v0.7.1
prom/prometheus:v2.39.1
kubesphere/prometheus-config-reloader:v0.55.1
kubesphere/prometheus-operator:v0.55.1
kubesphere/kube-rbac-proxy:v0.11.0
kubesphere/kube-state-metrics:v2.5.0
kubesphere/kube-state-metrics:v2.6.0
prom/node-exporter:v1.3.1
prom/alertmanager:v0.23.0
thanosio/thanos:v0.25.2
thanosio/thanos:v0.31.0
grafana/grafana:8.3.3
kubesphere/kube-rbac-proxy:v0.8.0
kubesphere/notification-manager-operator:v1.4.0
kubesphere/notification-manager:v1.4.0
kubesphere/kube-rbac-proxy:v0.11.0
kubesphere/notification-manager-operator:v2.3.0
kubesphere/notification-manager:v2.3.0
kubesphere/notification-tenant-sidecar:v3.2.0
##kubesphere-logging-images
kubesphere/elasticsearch-curator:v5.7.6
kubesphere/opensearch-curator:v0.0.5
kubesphere/elasticsearch-oss:6.8.22
kubesphere/fluentbit-operator:v0.13.0
opensearchproject/opensearch:2.6.0
opensearchproject/opensearch-dashboards:2.6.0
kubesphere/fluentbit-operator:v0.14.0
docker:19.03
kubesphere/fluent-bit:v1.8.11
kubesphere/log-sidecar-injector:1.1
kubesphere/fluent-bit:v1.9.4
kubesphere/log-sidecar-injector:v1.2.0
elastic/filebeat:6.7.0
kubesphere/kube-events-operator:v0.4.0
kubesphere/kube-events-exporter:v0.4.0
kubesphere/kube-events-ruler:v0.4.0
kubesphere/kube-events-operator:v0.6.0
kubesphere/kube-events-exporter:v0.6.0
kubesphere/kube-events-ruler:v0.6.0
kubesphere/kube-auditing-operator:v0.2.0
kubesphere/kube-auditing-webhook:v0.2.0
##istio-images
istio/pilot:1.11.1
istio/proxyv2:1.11.1
jaegertracing/jaeger-operator:1.27
jaegertracing/jaeger-agent:1.27
jaegertracing/jaeger-collector:1.27
jaegertracing/jaeger-query:1.27
jaegertracing/jaeger-es-index-cleaner:1.27
kubesphere/kiali-operator:v1.38.1
kubesphere/kiali:v1.38
istio/pilot:1.14.6
istio/proxyv2:1.14.6
jaegertracing/jaeger-operator:1.29
jaegertracing/jaeger-agent:1.29
jaegertracing/jaeger-collector:1.29
jaegertracing/jaeger-query:1.29
jaegertracing/jaeger-es-index-cleaner:1.29
kubesphere/kiali-operator:v1.50.1
kubesphere/kiali:v1.50
##example-images
busybox:1.31.1
nginx:1.14-alpine

@@ -21,7 +21,7 @@ This tutorial demonstrates how to add an edge node to your cluster.
## Prerequisites

- You have enabled [KubeEdge](../../../pluggable-components/kubeedge/).
- To prevent compatability issues, you are advised to install Kubernetes v1.21.x.
- To prevent compatability issues, you are advised to install Kubernetes v1.23.x.
- You have an available node to serve as an edge node. The node can run either Ubuntu (recommended) or CentOS. This tutorial uses Ubuntu 18.04 as an example.
- Edge nodes, unlike Kubernetes cluster nodes, should work in a separate network.

@@ -48,7 +48,7 @@ You must create a load balancer in your environment to listen (also known as lis
Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{</ tab >}}
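Because the KubeKey version is pinned in many places across these files, it may help to build the download command from a single variable. The following is a minimal sketch, not something the commit contains: `kk_download_cmd` is a hypothetical helper that only prints the commands shown in this diff (the `curl` one-liner and the optional `export KKZONE=cn`) so they can be reviewed before being run.

```shell
# Hypothetical helper: prints the KubeKey download command for a given
# version. It does not download anything itself.
kk_download_cmd() {
  version="$1"
  zone="${2:-}"
  case "$version" in
    v[0-9]*.[0-9]*.[0-9]*) ;;   # loosely require a vMAJOR.MINOR.PATCH shape
    *) echo "unexpected version: $version" >&2; return 1 ;;
  esac
  if [ -n "$zone" ]; then
    # Optional zone setting, as in the "export KKZONE=cn" step of the docs.
    printf 'export KKZONE=%s\n' "$zone"
  fi
  printf 'curl -sfL https://get-kk.kubesphere.io | VERSION=%s sh -\n' "$version"
}

kk_download_cmd "v3.0.13"
```

Printing instead of executing keeps the sketch free of network access; whether to pipe the output into a shell is left to the reader.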

@@ -64,7 +64,7 @@ export KKZONE=cn
Run the following command to download KubeKey:

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{< notice note >}}

@@ -97,7 +97,7 @@ Create an example configuration file with default configurations. Here Kubernete

{{< notice note >}}

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).
- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.
- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

@@ -33,7 +33,7 @@ Refer to the following steps to download KubeKey.
Download KubeKey from [its GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or run the following command.

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{</ tab >}}

@@ -49,7 +49,7 @@ export KKZONE=cn
Run the following command to download KubeKey:

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{< notice note >}}

@@ -82,7 +82,7 @@ Create an example configuration file with default configurations. Here Kubernete

{{< notice note >}}

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).
- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).
- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.
- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

@@ -268,7 +268,7 @@ Before you start to create your Kubernetes cluster, make sure you have tested th
Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{</ tab >}}

@@ -284,7 +284,7 @@ export KKZONE=cn
Run the following command to download KubeKey:

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{< notice note >}}

@@ -317,7 +317,7 @@ Create an example configuration file with default configurations. Here Kubernete

{{< notice note >}}

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).
- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.
- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

@@ -28,7 +28,7 @@ In KubeKey v2.1.0, we bring in concepts of manifest and artifact, which provides
Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.10 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{</ tab >}}

@@ -44,7 +44,7 @@ In KubeKey v2.1.0, we bring in concepts of manifest and artifact, which provides
Run the following command to download KubeKey:

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.10 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```
{{</ tab >}}

@@ -38,7 +38,7 @@ With the configuration file in place, you execute the `./kk` command with varied
Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{</ tab >}}

@@ -54,7 +54,7 @@ export KKZONE=cn
Run the following command to download KubeKey:

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{< notice note >}}

@@ -84,6 +84,6 @@ If you want to use KubeKey to install both Kubernetes and KubeSphere 3.4, see th
{{< notice note >}}

- You can also run `./kk version --show-supported-k8s` to see all supported Kubernetes versions that can be installed by KubeKey.
- The Kubernetes versions that can be installed using KubeKey are different from the Kubernetes versions supported by KubeSphere 3.4. If you want to [install KubeSphere 3.4 on an existing Kubernetes cluster](../../../installing-on-kubernetes/introduction/overview/), your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x
- The Kubernetes versions that can be installed using KubeKey are different from the Kubernetes versions supported by KubeSphere 3.4. If you want to [install KubeSphere 3.4 on an existing Kubernetes cluster](../../../installing-on-kubernetes/introduction/overview/), your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatability. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.

{{</ notice >}}

@@ -110,7 +110,7 @@ Follow the step below to download [KubeKey](../kubekey).
Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

```bash
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -
curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -
```

{{</ tab >}}

@ -126,7 +126,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -165,7 +165,7 @@ Command:

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../kubekey/#support-matrix).

|

||||

|

||||

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.

|

||||

- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

|

||||

|

|

|

|||

|

|

@ -32,7 +32,7 @@ Follow the step below to download [KubeKey](../../../installing-on-linux/introdu

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -48,7 +48,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

|

|||

|

|

@ -199,7 +199,7 @@ Follow the step below to download KubeKey.

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -215,7 +215,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -252,7 +252,7 @@ Create a Kubernetes cluster with KubeSphere installed (for example, `--with-kube

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

|

||||

- If you do not add the flag `--with-kubesphere` in the command above, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.

|

||||

- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

|

||||

|

|

|

|||

|

|

@ -300,7 +300,7 @@ Follow the step below to download KubeKey.

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -316,7 +316,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -353,7 +353,7 @@ Create a Kubernetes cluster with KubeSphere installed (for example, `--with-kube

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

|

||||

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.

|

||||

- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

|

||||

|

|

|

|||

|

|

@ -119,7 +119,7 @@ Follow the steps below to download [KubeKey](../../../installing-on-linux/introd

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -135,7 +135,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -170,7 +170,7 @@ chmod +x kk

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

|

||||

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.

|

||||

- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

|

||||

|

|

|

|||

|

|

@ -71,7 +71,7 @@ Follow the steps below to download [KubeKey](../../../installing-on-linux/introd

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -87,7 +87,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -122,7 +122,7 @@ chmod +x kk

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

|

||||

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.

|

||||

- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

|

||||

|

|

|

|||

|

|

@ -73,7 +73,7 @@ Follow the steps below to download [KubeKey](../../../installing-on-linux/introd

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -89,7 +89,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -124,7 +124,7 @@ chmod +x kk

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

|

||||

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.

|

||||

- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

|

||||

|

|

|

|||

|

|

@ -101,7 +101,7 @@ ssh -i .ssh/id_rsa2 -p50200 kubesphere@40.81.5.xx

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -117,7 +117,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -150,7 +150,7 @@ The commands above download the latest release of KubeKey. You can change the ve

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

|

||||

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.

|

||||

- If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

|

||||

|

|

|

|||

|

|

@ -126,7 +126,7 @@ Follow the step below to download KubeKey.

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -142,7 +142,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -175,7 +175,7 @@ Create an example configuration file with default configurations. Here Kubernete

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

|

||||

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed unless you install it using the `addons` field in the configuration file or add this flag again when you use `./kk create cluster` later.

|

||||

|

||||

|

|

|

|||

|

|

@ -145,7 +145,7 @@ Perform the following steps to download KubeKey.

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or run the following command:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -161,7 +161,7 @@ export KKZONE=cn

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

|

@ -202,7 +202,7 @@ To create a Kubernetes cluster with KubeSphere installed, refer to the following

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey installs Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- Recommended Kubernetes versions for KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey installs Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- For all-in-one installation, you do not need to change any configuration.

|

||||

- If you do not add the flag `--with-kubesphere` in the command in this step, KubeSphere will not be deployed. KubeKey will install Kubernetes only. If you add the flag `--with-kubesphere` without specifying a KubeSphere version, the latest version of KubeSphere will be installed.

|

||||

- KubeKey will install [OpenEBS](https://openebs.io/) to provision LocalPV for the development and testing environment by default, which is convenient for new users. For other storage classes, see [Persistent Storage Configurations](../../installing-on-linux/persistent-storage-configurations/understand-persistent-storage/).

|

||||

|

|

|

|||

|

|

@ -11,7 +11,7 @@ In addition to installing KubeSphere on a Linux machine, you can also deploy it

|

|||

|

||||

## Prerequisites

|

||||

|

||||

- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.

|

||||

- To install KubeSphere 3.4 on Kubernetes, your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.

|

||||

- Make sure your machine meets the minimum hardware requirements: CPU > 1 Core, Memory > 2 GB.

|

||||

- A **default** Storage Class in your Kubernetes cluster needs to be configured before the installation.

|

||||

|

||||

|

|

|

|||

|

|

@ -15,7 +15,7 @@ ks-installer is recommended for users whose Kubernetes clusters were not set up

|

|||

- Read [Release Notes for 3.4.0](../../../v3.4/release/release-v340/) carefully.

|

||||

- Back up any important component beforehand.

|

||||

- A Docker registry. You need Harbor or another Docker registry. For more information, see [Prepare a Private Image Registry](../../installing-on-linux/introduction/air-gapped-installation/#step-2-prepare-a-private-image-registry).

|

||||

- Supported Kubernetes versions of KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.

|

||||

- Supported Kubernetes versions of KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.

|

||||

|

||||

## Major Updates

|

||||

|

||||

|

|

|

|||

|

|

@ -9,8 +9,8 @@ Air-gapped upgrade with KubeKey is recommended for users whose KubeSphere and Ku

|

|||

|

||||

## Prerequisites

|

||||

|

||||

- You need to have a KubeSphere cluster running v3.2.x. If your KubeSphere version is v3.1.x or earlier, upgrade to v3.2.x first.

|

||||

- Your Kubernetes version must be v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.

|

||||

- You need to have a KubeSphere cluster running v3.3.x. If your KubeSphere version is v3.2.x or earlier, upgrade to v3.3.x first.

|

||||

- Your Kubernetes version must be v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.

|

||||

- Read [Release Notes for 3.4.0](../../../v3.4/release/release-v340/) carefully.

|

||||

- Back up any important component beforehand.

|

||||

- A Docker registry. You need Harbor or another Docker registry.

|

||||

|

|

@ -65,7 +65,7 @@ KubeKey upgrades Kubernetes from one MINOR version to the next MINOR version unt

|

|||

Download KubeKey from its [GitHub Release Page](https://github.com/kubesphere/kubekey/releases) or use the following command directly.

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

|

||||

{{</ tab >}}

|

||||

|

|

@ -81,7 +81,7 @@ KubeKey upgrades Kubernetes from one MINOR version to the next MINOR version unt

|

|||

Run the following command to download KubeKey:

|

||||

|

||||

```bash

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -

|

||||

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.13 sh -

|

||||

```

|

||||

{{</ tab >}}

|

||||

|

||||

|

|

@ -153,7 +153,7 @@ As you install KubeSphere and Kubernetes on Linux, you need to prepare an image

|

|||

|

||||

{{< notice note >}}

|

||||

|

||||

- You can change the Kubernetes version downloaded based on your needs. Recommended Kubernetes versions for KubeSphere 3.4 are v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

- You can change the Kubernetes version downloaded based on your needs. Recommended Kubernetes versions for KubeSphere 3.4 are v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x. If you do not specify a Kubernetes version, KubeKey will install Kubernetes v1.23.10 by default. For more information about supported Kubernetes versions, see [Support Matrix](../../installing-on-linux/introduction/kubekey/#support-matrix).

|

||||

|

||||

- After you run the script, a folder `kubekey` is automatically created. Note that this folder and `kk` must be placed in the same directory when you create the cluster later.

|

||||

|

||||

|

|

@ -262,7 +262,7 @@ Set `privateRegistry` of your `config-sample.yaml` file:

|

|||

./kk upgrade -f config-sample.yaml

|

||||

```

|

||||

|

||||

To upgrade Kubernetes to a specific version, explicitly provide the version after the flag `--with-kubernetes`. Available versions are v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.

|

||||

To upgrade Kubernetes to a specific version, explicitly provide the version after the flag `--with-kubernetes`. Available versions are v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.

|

||||

|

||||

### Air-gapped upgrade for multi-node clusters

|

||||

|

||||

|

|

@ -346,4 +346,4 @@ Set `privateRegistry` of your `config-sample.yaml` file:

|

|||

./kk upgrade -f config-sample.yaml

|

||||

```

|

||||

|

||||

To upgrade Kubernetes to a specific version, explicitly provide the version after the flag `--with-kubernetes`. Available versions are v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.

|

||||

To upgrade Kubernetes to a specific version, explicitly provide the version after the flag `--with-kubernetes`. Available versions are v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.
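As a rough illustration of passing a version after `--with-kubernetes`, the sketch below wraps the `./kk upgrade -f config-sample.yaml` command shown above. The function name `kk_upgrade` and the `DRY_RUN` variable are hypothetical and not part of KubeKey; the version check only mirrors the v1.20.x to v1.26.x range listed in the text, and by default the function prints the command instead of running it.

```bash
# Hypothetical wrapper (not part of KubeKey): prints the upgrade command
# unless DRY_RUN=0 is set, in which case it runs ./kk from the current directory.
kk_upgrade() {
  k8s_version="$1"
  config="${2:-config-sample.yaml}"

  # Accept only the minor versions listed in the text (v1.20.x to v1.26.x).
  case "$k8s_version" in
    v1.2[0-6].*) ;;
    *) echo "unsupported Kubernetes version: $k8s_version" >&2; return 1 ;;
  esac

  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "./kk upgrade --with-kubernetes $k8s_version -f $config"
  else
    ./kk upgrade --with-kubernetes "$k8s_version" -f "$config"
  fi
}

kk_upgrade v1.23.10
```

Printing the command first makes it easy to review the exact version and configuration file before an upgrade is actually started.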

|

||||

|

|

|

|||

|

|

@ -8,11 +8,11 @@ weight: 7100

|

|||

|

||||

## Make Your Upgrade Plan

|

||||

|

||||

KubeSphere 3.4 is compatible with Kubernetes v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x:

|

||||

KubeSphere 3.4 is compatible with Kubernetes v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x:

|

||||

|

||||

- Before you upgrade your cluster to KubeSphere 3.4, you need to have a KubeSphere cluster running v3.2.x.

- You can choose to only upgrade KubeSphere to 3.4 or upgrade Kubernetes (to a higher version) and KubeSphere (to 3.4) at the same time.

- For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.

- For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.

## Before the Upgrade

{{< notice warning >}}

@@ -10,10 +10,10 @@ ks-installer is recommended for users whose Kubernetes clusters were not set up

## Prerequisites

- You need to have a KubeSphere cluster running v3.2.x. If your KubeSphere version is v3.1.x or earlier, upgrade to v3.2.x first.

- You need to have a KubeSphere cluster running v3.3.x. If your KubeSphere version is v3.2.x or earlier, upgrade to v3.3.x first.

- Read [Release Notes for 3.4.0](../../../v3.4/release/release-v340/) carefully.

- Back up any important component beforehand.

- Supported Kubernetes versions of KubeSphere 3.4: v1.20.x, v1.21.x, * v1.22.x, * v1.23.x, and v1.24.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.21.x.

- Supported Kubernetes versions of KubeSphere 3.4: v1.20.x, v1.21.x, v1.22.x, v1.23.x, * v1.24.x, * v1.25.x, and * v1.26.x. For Kubernetes versions with an asterisk, some features of edge nodes may be unavailable due to incompatibility. Therefore, if you want to use edge nodes, you are advised to install Kubernetes v1.23.x.

## Major Updates

@@ -11,7 +11,7 @@ This tutorial demonstrates how to upgrade your cluster using KubeKey.

## Prerequisites
