mirror of

https://github.com/kubesphere/website.git

synced 2025-12-30 00:52:47 +00:00

commit

91ff0b6abb

|

|

@ -0,0 +1,56 @@

|

|||

---

|

||||

title: "Node Management"

|

||||

keywords: "Kubernetes, KubeSphere, taints, nodes, labels, requests, limits"

|

||||

description: "Monitor node status and learn how to add node labels or taints."

|

||||

|

||||

linkTitle: "Node Management"

|

||||

weight: 8100

|

||||

---

|

||||

|

||||

Kubernetes runs your workloads by placing containers into Pods to run on nodes. A node may be a virtual or physical machine, depending on the cluster. Each node contains the services necessary to run Pods, managed by the control plane. For more information about nodes, see the [official documentation of Kubernetes](https://kubernetes.io/docs/concepts/architecture/nodes/).

|

||||

|

||||

This tutorial demonstrates what a cluster administrator can view and do for nodes within a cluster.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

You need a user with a role that includes the **Cluster Management** authorization. For example, you can log in to the console as `admin` directly or create a new role with the authorization and assign it to a user.

|

||||

|

||||

## Node Status

|

||||

|

||||

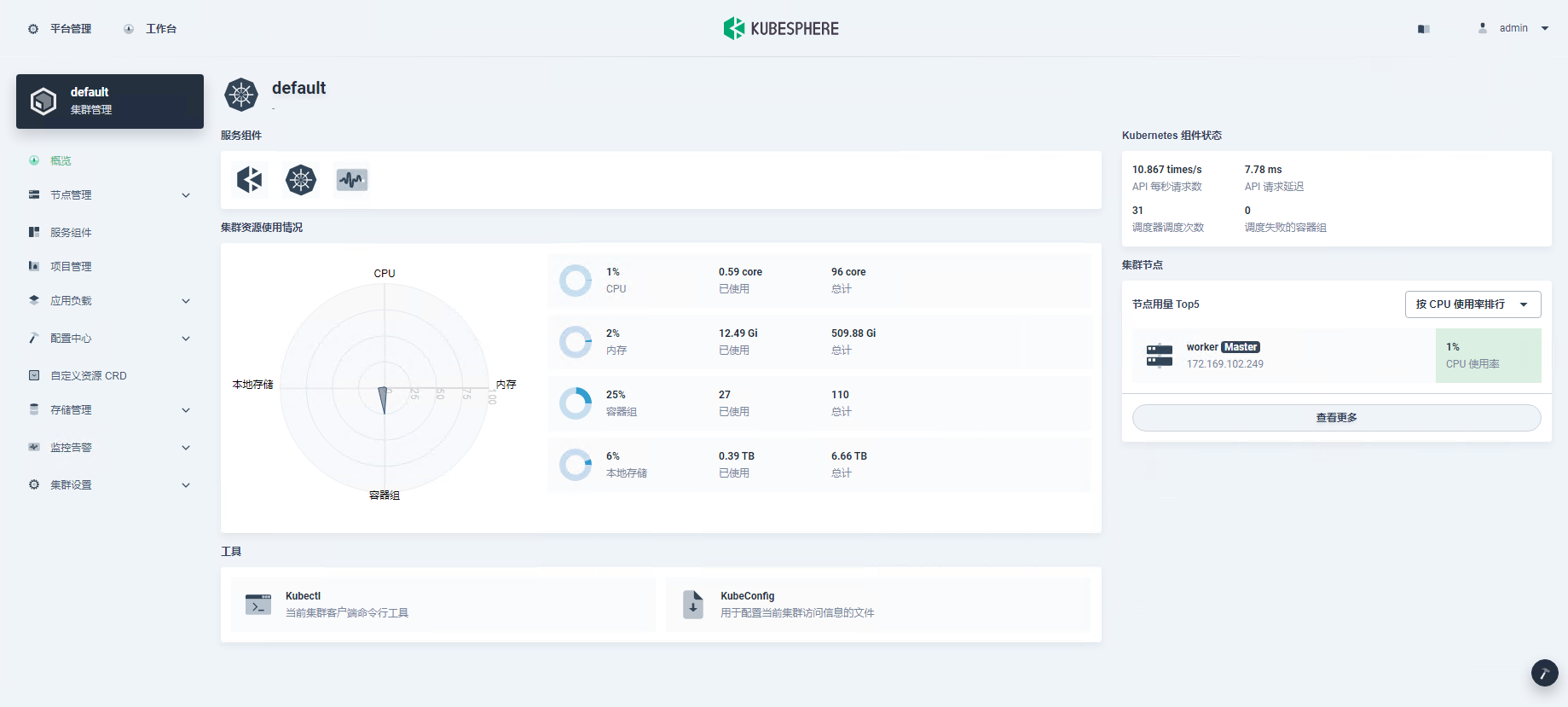

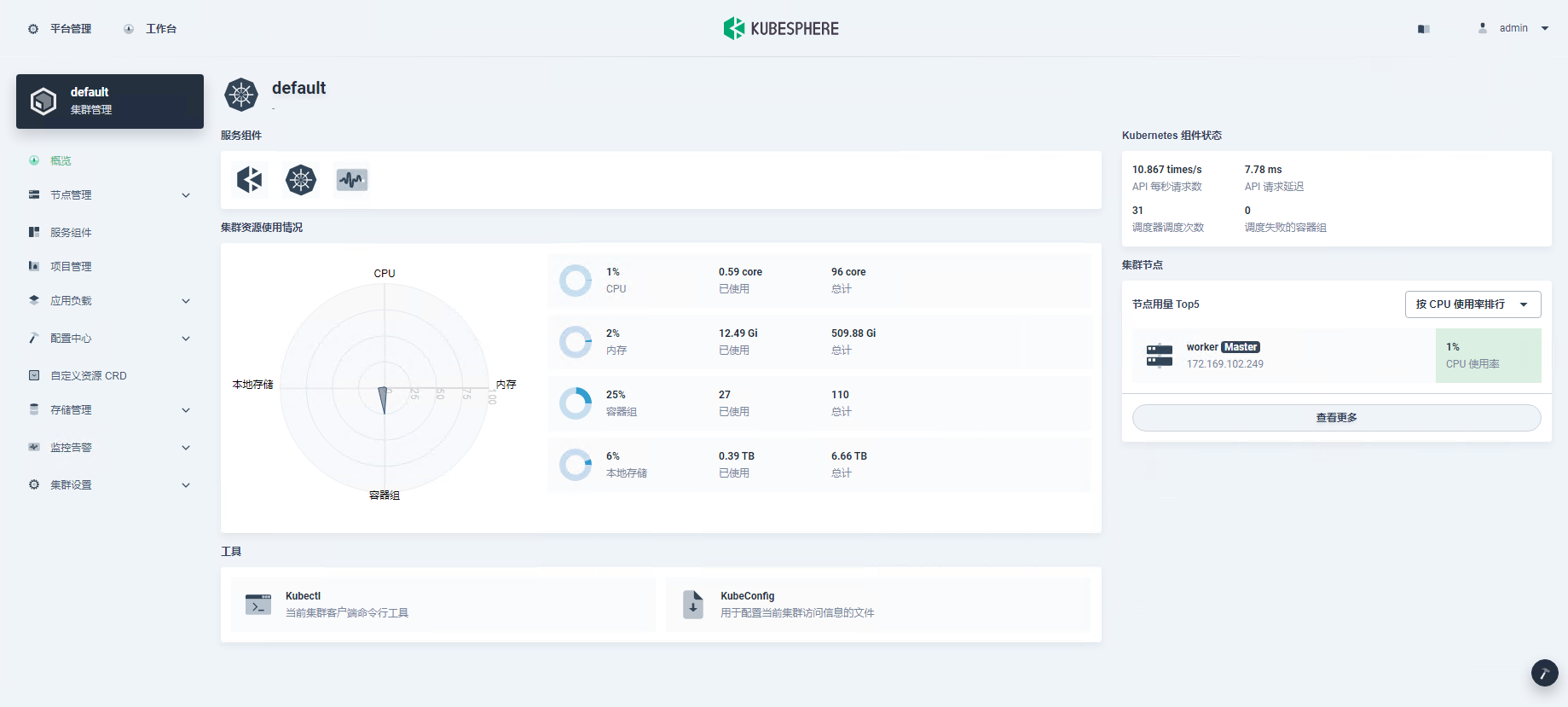

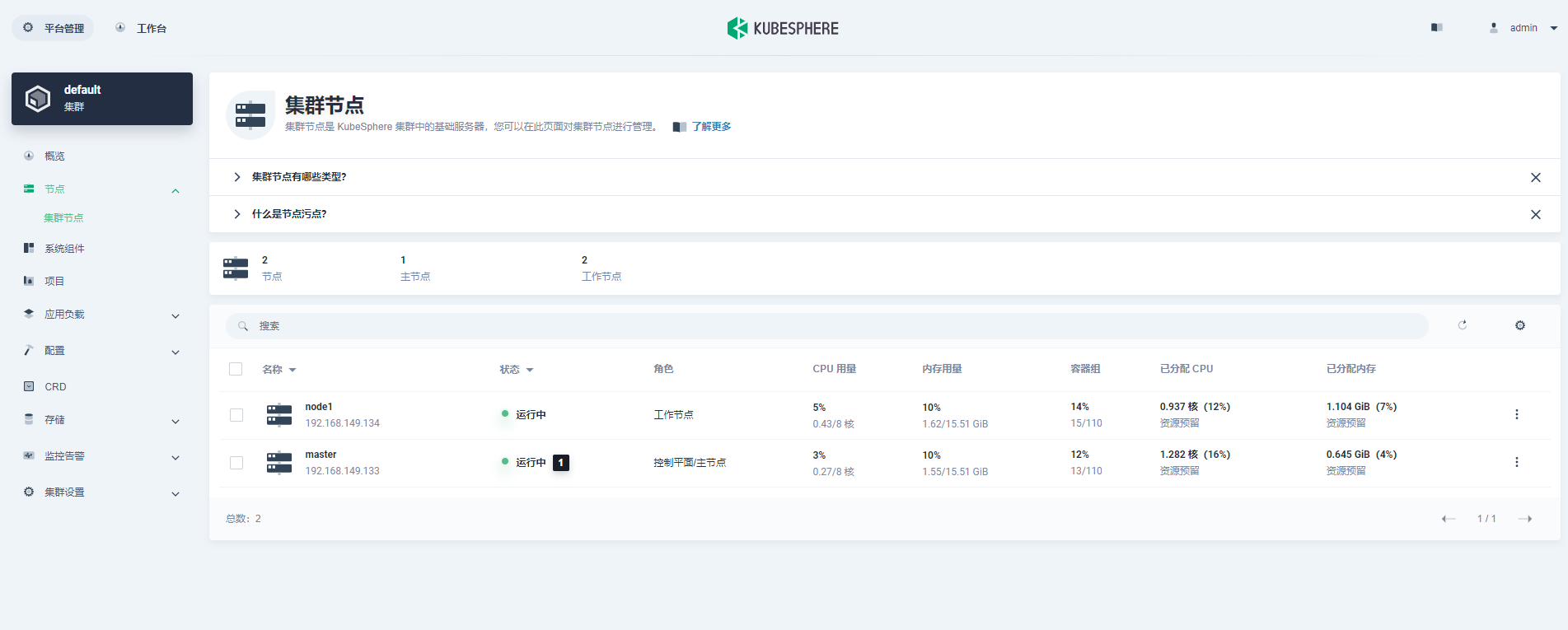

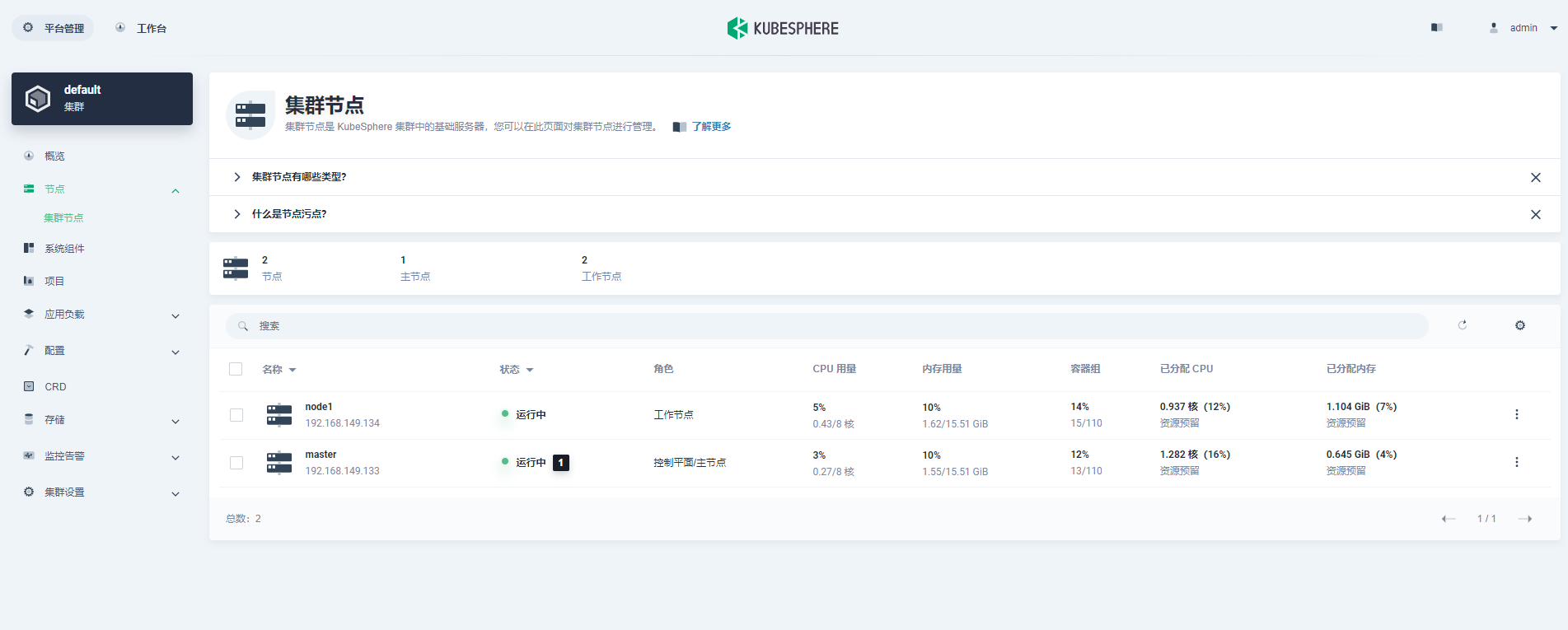

Cluster nodes are only accessible to cluster administrators. Some node metrics are very important to clusters. Therefore, it is the administrator's responsibility to watch over these numbers and make sure nodes are available. Follow the steps below to view node status.

|

||||

|

||||

1. Click **Platform** in the upper-left corner and select **Cluster Management**.

|

||||

|

||||

2. If you have enabled the [multi-cluster feature](../../multicluster-management/) with member clusters imported, you can select a specific cluster to view its nodes. If you have not enabled the feature, skip to the next step.

|

||||

|

||||

3. Choose **Cluster Nodes** under **Nodes**, where you can see detailed information about node status.

|

||||

|

||||

- **Name**: The node name and subnet IP address.

|

||||

- **Status**: The current status of a node, indicating whether a node is available or not.

|

||||

- **Role**: The role of a node, indicating whether a node is a worker or master.

|

||||

- **CPU Usage**: The real-time CPU usage of a node.

|

||||

- **Memory Usage**: The real-time memory usage of a node.

|

||||

- **Pods**: The real-time usage of Pods on a node.

|

||||

- **Allocated CPU**: This metric is calculated based on the total CPU requests of Pods on a node. It represents the amount of CPU reserved for workloads on this node, even if workloads are using fewer CPU resources. This figure is vital to the Kubernetes scheduler (kube-scheduler), which favors nodes with lower allocated CPU resources when scheduling a Pod in most cases. For more details, refer to [Managing Resources for Containers](https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/).

|

||||

- **Allocated Memory**: This metric is calculated based on the total memory requests of Pods on a node. It represents the amount of memory reserved for workloads on this node, even if workloads are using fewer memory resources.

|

||||

|

||||

{{< notice note >}}

|

||||

**CPU Usage** and **Allocated CPU** usually differ, as do **Memory Usage** and **Allocated Memory**, which is normal. As a cluster administrator, you need to watch both metrics instead of just one. It is good practice to set resource requests and limits for each workload to match its real usage. Over-allocating resources can lead to low cluster utilization, while under-allocating may put the cluster under high pressure and leave it unhealthy.

|

||||

{{</ notice >}}
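To make the relationship between Pod requests and the **Allocated CPU** figure concrete, the following sketch sums a set of CPU requests and expresses the total as a percentage of a node's allocatable CPU. The function name, the millicore inputs, and the integer rounding are illustrative assumptions; the console may compute or round the value differently.

```bash
#!/usr/bin/env bash
# Hypothetical helper: percentage of a node's allocatable CPU that is
# reserved by Pod requests. All values are in millicores (1000m = 1 core).
# Usage: allocated_cpu_percent ALLOCATABLE REQUEST [REQUEST...]
allocated_cpu_percent() {
  local allocatable="$1"
  shift
  local total=0 request
  for request in "$@"; do
    total=$((total + request))
  done
  # Integer division: the result is rounded down.
  echo $((total * 100 / allocatable))
}

# Three Pods requesting 500m, 250m, and 1000m on a node with 4000m allocatable.
allocated_cpu_percent 4000 500 250 1000   # prints 43
```

The result depends only on requests, not on actual consumption, which is why it can differ widely from the real-time usage figure.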

|

||||

|

||||

## Node Management

|

||||

|

||||

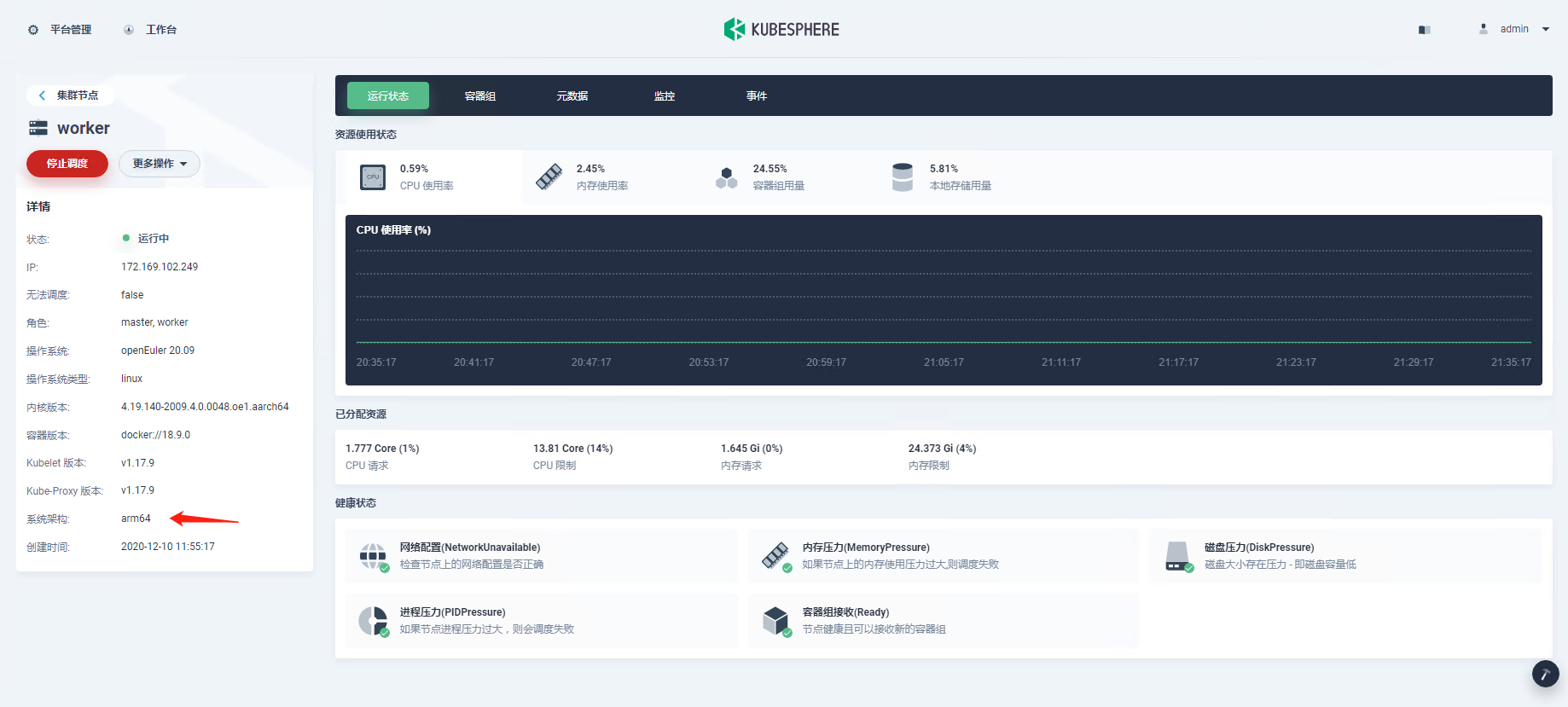

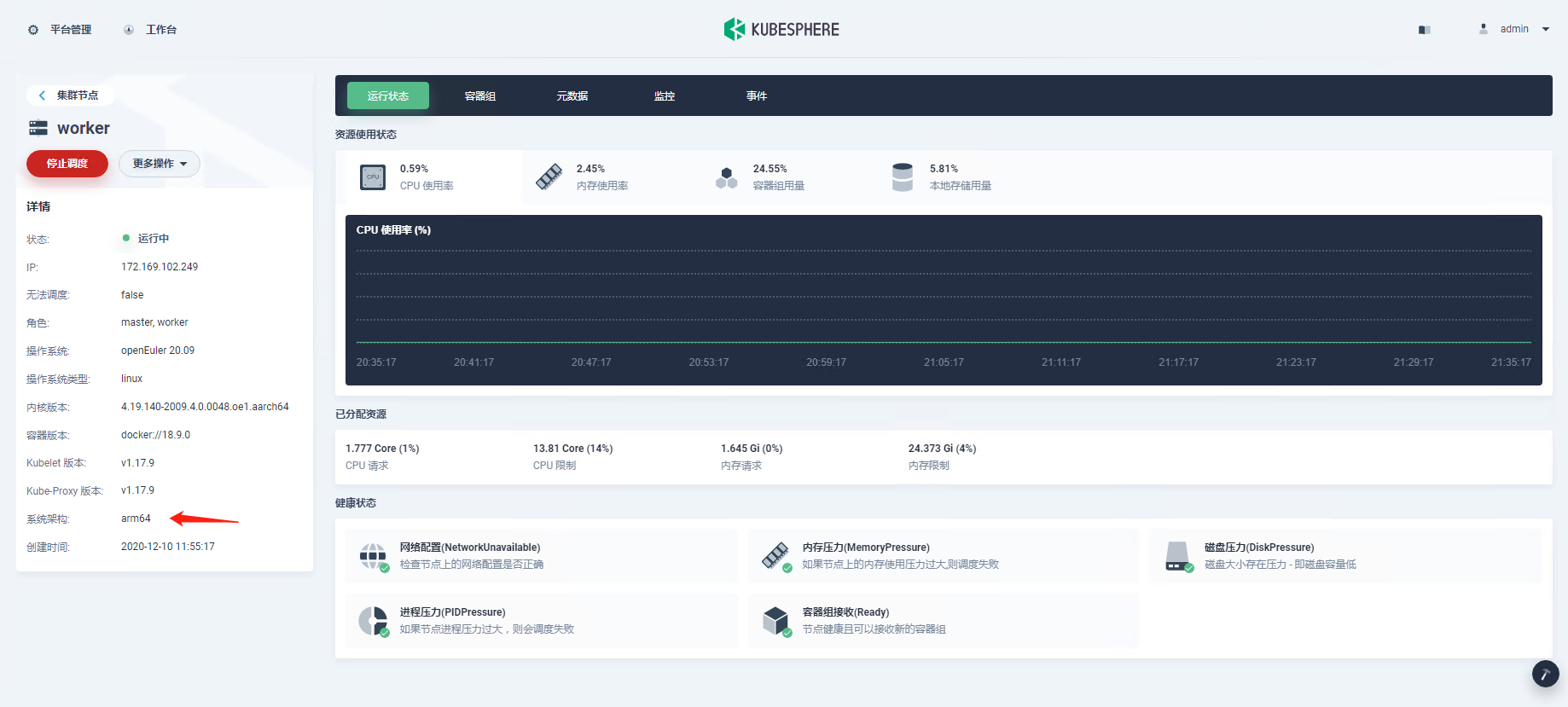

Click a node from the list and you can go to its detail page.

|

||||

|

||||

- **Cordon/Uncordon**: Marking a node as unschedulable is useful during a node reboot or other maintenance. The Kubernetes scheduler does not schedule new Pods to a node that has been marked unschedulable, but existing workloads on the node are not affected. In KubeSphere, you mark a node as unschedulable by clicking **Cordon** on the node detail page. Click the button again (**Uncordon**) to make the node schedulable.

|

||||

- **Labels**: Node labels can be very useful when you want to assign Pods to specific nodes. Label a node first (for example, label GPU nodes with `node-role.kubernetes.io/gpu-node`), and then add the label in **Advanced Settings** [when you create a workload](../../project-user-guide/application-workloads/deployments/#step-5-configure-advanced-settings) so that you can allow Pods to run on GPU nodes explicitly. To add node labels, click **More** and select **Edit Labels**.

|

||||

|

||||

- **Taints**: Taints allow a node to repel a set of Pods. To add or remove taints, go to the node detail page, click **More**, and select **Edit Taints** from the drop-down menu.

|

||||

|

||||

{{< notice note >}}

|

||||

Be careful when you add taints, as they may cause unexpected behavior and make services unavailable. For more information, see [Taints and Tolerations](https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/).

|

||||

{{</ notice >}}
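The console actions above correspond to standard `kubectl` subcommands (`cordon`, `uncordon`, `label`, and `taint`). The sketch below shows one possible sequence. The node name `node1`, the taint `dedicated=gpu:NoSchedule`, and the `run` and `prepare_gpu_node` helpers are assumptions made for illustration. By default the script only prints the commands; set `DRY_RUN=0` to execute them.

```bash
#!/usr/bin/env bash
# Print each command; run it only when DRY_RUN=0 (the default is a dry run).
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

# Hypothetical maintenance flow for one node.
prepare_gpu_node() {
  local node="$1"
  # Stop scheduling new Pods to the node.
  run kubectl cordon "$node"
  # Add the example label from this page with an empty value.
  run kubectl label nodes "$node" node-role.kubernetes.io/gpu-node=
  # Add an example taint (the key and value are assumptions).
  run kubectl taint nodes "$node" dedicated=gpu:NoSchedule
  # Allow scheduling again.
  run kubectl uncordon "$node"
}

prepare_gpu_node node1
```

Pods without a matching toleration are not scheduled to a node that carries a `NoSchedule` taint, so review the workloads that should run there before you apply it.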

|

||||

|

||||

## Add and Remove Nodes

|

||||

|

||||

Currently, you cannot add or remove nodes directly from the KubeSphere console, but you can do it by using [KubeKey](https://github.com/kubesphere/kubekey). For more information, see [Add New Nodes](../../installing-on-linux/cluster-operation/add-new-nodes/) and [Remove Nodes](../../installing-on-linux/cluster-operation/remove-nodes/).

|

||||

|

|

@ -0,0 +1,56 @@

|

|||

---

|

||||

title: "Node Management"

|

||||

keywords: "Kubernetes, KubeSphere, taints, nodes, labels, requests, limits"

|

||||

description: "Monitor node status and learn how to add node labels or taints."

|

||||

|

||||

linkTitle: "Node Management"

|

||||

weight: 8100

|

||||

---

|

||||

|

||||

Kubernetes runs your workloads by placing containers into Pods to run on nodes. A node may be a virtual or physical machine, depending on the cluster. Each node contains the services necessary to run Pods, managed by the control plane. For more information about nodes, see the [official documentation of Kubernetes](https://kubernetes.io/docs/concepts/architecture/nodes/).

|

||||

|

||||

This tutorial demonstrates what a cluster administrator can view and do for nodes within a cluster.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

You need a user with a role that includes the **Cluster Management** authorization. For example, you can log in to the console as `admin` directly or create a new role with the authorization and assign it to a user.

|

||||

|

||||

## Node Status

|

||||

|

||||

Cluster nodes are only accessible to cluster administrators. Some node metrics are very important to clusters. Therefore, it is the administrator's responsibility to watch over these numbers and make sure nodes are available. Follow the steps below to view node status.

|

||||

|

||||

1. Click **Platform** in the upper-left corner and select **Cluster Management**.

|

||||

|

||||

2. If you have enabled the [multi-cluster feature](../../multicluster-management/) with member clusters imported, you can select a specific cluster to view its nodes. If you have not enabled the feature, skip to the next step.

|

||||

|

||||

3. Choose **Cluster Nodes** under **Nodes**, where you can see detailed information about node status.

|

||||

|

||||

- **Name**: The node name and subnet IP address.

|

||||

- **Status**: The current status of a node, indicating whether a node is available or not.

|

||||

- **Role**: The role of a node, indicating whether a node is a worker or the control plane.

|

||||

- **CPU Usage**: The real-time CPU usage of a node.

|

||||

- **Memory Usage**: The real-time memory usage of a node.

|

||||

- **Pods**: The real-time usage of Pods on a node.

|

||||

- **Allocated CPU**: This metric is calculated based on the total CPU requests of Pods on a node. It represents the amount of CPU reserved for workloads on this node, even if workloads are using fewer CPU resources. This figure is vital to the Kubernetes scheduler (kube-scheduler), which favors nodes with lower allocated CPU resources when scheduling a Pod in most cases. For more details, refer to [Managing Resources for Containers](https://kubernetes.io/docs/concepts/configuration/manage-resources-containers/).

|

||||

- **Allocated Memory**: This metric is calculated based on the total memory requests of Pods on a node. It represents the amount of memory reserved for workloads on this node, even if workloads are using fewer memory resources.

|

||||

|

||||

{{< notice note >}}

|

||||

**CPU Usage** and **Allocated CPU** usually differ, as do **Memory Usage** and **Allocated Memory**, which is normal. As a cluster administrator, you need to watch both metrics instead of just one. It is good practice to set resource requests and limits for each workload to match its real usage. Over-allocating resources can lead to low cluster utilization, while under-allocating may put the cluster under high pressure and leave it unhealthy.

|

||||

{{</ notice >}}

|

||||

|

||||

## Node Management

|

||||

|

||||

Click a node from the list and you can go to its detail page.

|

||||

|

||||

- **Cordon/Uncordon**: Marking a node as unschedulable is useful during a node reboot or other maintenance. The Kubernetes scheduler does not schedule new Pods to a node that has been marked unschedulable, but existing workloads on the node are not affected. In KubeSphere, you mark a node as unschedulable by clicking **Cordon** on the node detail page. Click the button again (**Uncordon**) to make the node schedulable.

|

||||

- **Labels**: Node labels can be very useful when you want to assign Pods to specific nodes. Label a node first (for example, label GPU nodes with `node-role.kubernetes.io/gpu-node`), and then add the label in **Advanced Settings** [when you create a workload](../../project-user-guide/application-workloads/deployments/#step-5-configure-advanced-settings) so that you can allow Pods to run on GPU nodes explicitly. To add node labels, click **More** and select **Edit Labels**.

|

||||

|

||||

- **Taints**: Taints allow a node to repel a set of Pods. To add or remove taints, go to the node detail page, click **More**, and select **Edit Taints** from the drop-down menu.

|

||||

|

||||

{{< notice note >}}

|

||||

Be careful when you add taints, as they may cause unexpected behavior and make services unavailable. For more information, see [Taints and Tolerations](https://kubernetes.io/docs/concepts/scheduling-eviction/taint-and-toleration/).

|

||||

{{</ notice >}}

|

||||

|

||||

## Add and Remove Nodes

|

||||

|

||||

Currently, you cannot add or remove nodes directly from the KubeSphere console, but you can do it by using [KubeKey](https://github.com/kubesphere/kubekey). For more information, see [Add New Nodes](../../installing-on-linux/cluster-operation/add-new-nodes/) and [Remove Nodes](../../installing-on-linux/cluster-operation/remove-nodes/).

|

||||

|

|

@ -0,0 +1,74 @@

|

|||

---

|

||||

title: "Cluster Shutdown and Restart"

|

||||

description: "Learn how to gracefully shut down your cluster and restart it."

|

||||

layout: "single"

|

||||

|

||||

linkTitle: "Cluster Shutdown and Restart"

|

||||

weight: 8800

|

||||

|

||||

icon: "/images/docs/docs.svg"

|

||||

---

|

||||

This document describes the process of gracefully shutting down your Kubernetes cluster and how to restart it. You might need to temporarily shut down your cluster for maintenance reasons.

|

||||

|

||||

{{< notice warning >}}

|

||||

Shutting down a cluster is very dangerous. You must fully understand the operation and its consequences. Please make an etcd backup before you proceed.

|

||||

Usually, it is recommended to maintain your nodes one by one instead of restarting the whole cluster.

|

||||

{{</ notice >}}

|

||||

|

||||

## Prerequisites

|

||||

- Take an [etcd backup](https://etcd.io/docs/current/op-guide/recovery/#snapshotting-the-keyspace) prior to shutting down a cluster.

|

||||

- SSH [passwordless login](https://man.openbsd.org/ssh.1#AUTHENTICATION) is set up between hosts.
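As a rough sketch of the backup prerequisite, the script below wraps `etcdctl snapshot save` in a helper that writes a timestamped snapshot file. The endpoint, the certificate directory (kubeadm-style paths are assumed here; clusters created with other installers may store the certificates elsewhere), the default backup directory, and the function names are all assumptions to adjust for your environment.

```bash
#!/usr/bin/env bash
# Assumed values; adjust them to your environment.
ETCD_ENDPOINT="${ETCD_ENDPOINT:-https://127.0.0.1:2379}"
ETCD_CERT_DIR="${ETCD_CERT_DIR:-/etc/kubernetes/pki/etcd}"

# Build a timestamped snapshot path under the given directory.
snapshot_path() {
  local dir="$1"
  echo "${dir%/}/etcd-snapshot-$(date +%Y%m%d-%H%M%S).db"
}

# Save a snapshot of the etcd keyspace to the given directory.
backup_etcd() {
  local target
  target="$(snapshot_path "${1:-/var/backups}")"
  ETCDCTL_API=3 etcdctl \
    --endpoints="$ETCD_ENDPOINT" \
    --cacert="$ETCD_CERT_DIR/ca.crt" \
    --cert="$ETCD_CERT_DIR/server.crt" \
    --key="$ETCD_CERT_DIR/server.key" \
    snapshot save "$target"
}
```

Run `backup_etcd /var/backups` on a machine that can reach etcd and read the certificates, then copy the snapshot file off the cluster before you shut anything down.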

|

||||

|

||||

## Shut Down a Kubernetes Cluster

|

||||

{{< notice tip >}}

|

||||

|

||||

- You must back up your etcd data before you shut down the cluster so that the cluster can be restored if you encounter any issues when restarting it.

|

||||

- The method in this tutorial shuts down a cluster gracefully, but data corruption is still possible.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

### Step 1: Get the node list

|

||||

```bash
nodes=$(kubectl get nodes -o name)
```
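Note that `kubectl get nodes -o name` prints each entry in the form `node/<name>`, so the prefix has to be removed before the value can be used as an SSH hostname. A minimal sketch of that conversion follows; the `node_names` function is a hypothetical helper, and the sample input stands in for real `kubectl` output.

```bash
#!/usr/bin/env bash
# Read `kubectl get nodes -o name` output on stdin and print bare node names.
node_names() {
  local line
  while IFS= read -r line; do
    if [ -n "$line" ]; then
      # Remove the leading "node/" resource prefix, if present.
      echo "${line#node/}"
    fi
  done
}

# Sample input; on a real cluster use: kubectl get nodes -o name | node_names
printf 'node/master\nnode/node1\n' | node_names
```

This also assumes that the node names resolve to reachable hosts from the machine where you run the commands.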

|

||||

### Step 2: Shut down all nodes

|

||||

```bash
# $nodes holds entries such as "node/master", so strip the "node/" prefix
# to get a hostname that SSH can resolve.
for node in $nodes
do
    echo "==== Shut down $node ===="
    ssh "${node#node/}" sudo shutdown -h 1
done
```

|

||||

Then you can shut down other cluster dependencies, such as external storage.

|

||||

|

||||

## Restart a Cluster Gracefully

|

||||

You can restart a cluster gracefully after shutting down the cluster gracefully.

|

||||

|

||||

### Prerequisites

|

||||

You have shut down your cluster gracefully.

|

||||

|

||||

{{< notice tip >}}

|

||||

Usually, a cluster can be used after restarting, but the cluster may be unavailable due to unexpected conditions. For example:

|

||||

|

||||

- etcd data corruption during the shutdown.

|

||||

- Node failures.

|

||||

- Unexpected network errors.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

### Step 1: Check all cluster dependencies' status

|

||||

Ensure all cluster dependencies are ready, such as external storage.

|

||||

### Step 2: Power on cluster machines

|

||||

Wait for the cluster to be up and running, which may take about 10 minutes.

|

||||

### Step 3: Check all master nodes' status

|

||||

Check the status of core components, such as etcd services, and make sure everything is ready.

|

||||

```bash
kubectl get nodes -l node-role.kubernetes.io/master
```

|

||||

|

||||

### Step 4: Check all worker nodes' status

|

||||

```bash
kubectl get nodes -l node-role.kubernetes.io/worker
```
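To spot nodes that have not come back, you can filter the node list on its STATUS column. The sketch below assumes the default column layout of `kubectl get nodes --no-headers` (name first, status second); the `not_ready_nodes` helper and the sample rows are illustrative.

```bash
#!/usr/bin/env bash
# Read `kubectl get nodes --no-headers` output on stdin and print the names
# of nodes whose STATUS column does not start with "Ready".
# A cordoned but healthy node ("Ready,SchedulingDisabled") is not reported.
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Sample input; on a real cluster use:
#   kubectl get nodes --no-headers | not_ready_nodes
printf '%s\n' \
  'master   Ready      control-plane,master   10d   v1.21.5' \
  'node1    NotReady   worker                 10d   v1.21.5' | not_ready_nodes   # prints node1
```

An empty result means every listed node reports a status that starts with `Ready`.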

|

||||

|

||||

If your cluster fails to restart, please try to [restore the etcd cluster](https://etcd.io/docs/current/op-guide/recovery/#restoring-a-cluster).

|

||||

|

|

@ -0,0 +1,74 @@

|

|||

---

|

||||

title: "Cluster Shutdown and Restart"

|

||||

description: "Learn how to gracefully shut down your cluster and restart it."

|

||||

layout: "single"

|

||||

|

||||

linkTitle: "Cluster Shutdown and Restart"

|

||||

weight: 8800

|

||||

|

||||

icon: "/images/docs/docs.svg"

|

||||

---

|

||||

This document describes the process of gracefully shutting down your Kubernetes cluster and how to restart it. You might need to temporarily shut down your cluster for maintenance reasons.

|

||||

|

||||

{{< notice warning >}}

|

||||

Shutting down a cluster is very dangerous. You must fully understand the operation and its consequences. Please make an etcd backup before you proceed.

|

||||

Usually, it is recommended to maintain your nodes one by one instead of restarting the whole cluster.

|

||||

{{</ notice >}}

|

||||

|

||||

## Prerequisites

|

||||

- Take an [etcd backup](https://etcd.io/docs/current/op-guide/recovery/#snapshotting-the-keyspace) prior to shutting down a cluster.

|

||||

- SSH [passwordless login](https://man.openbsd.org/ssh.1#AUTHENTICATION) is set up between hosts.

|

||||

|

||||

## Shut Down a Kubernetes Cluster

|

||||

{{< notice tip >}}

|

||||

|

||||

- You must back up your etcd data before you shut down the cluster so that the cluster can be restored if you encounter any issues when restarting it.

|

||||

- The method in this tutorial shuts down a cluster gracefully, but data corruption is still possible.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

### Step 1: Get the node list

|

||||

```bash
nodes=$(kubectl get nodes -o name)
```

|

||||

### Step 2: Shut down all nodes

|

||||

```bash
# $nodes holds entries such as "node/master", so strip the "node/" prefix
# to get a hostname that SSH can resolve.
for node in $nodes
do
    echo "==== Shut down $node ===="
    ssh "${node#node/}" sudo shutdown -h 1
done
```

|

||||

Then you can shut down other cluster dependencies, such as external storage.

|

||||

|

||||

## Restart a Cluster Gracefully

|

||||

You can restart a cluster gracefully after shutting down the cluster gracefully.

|

||||

|

||||

### Prerequisites

|

||||

You have shut down your cluster gracefully.

|

||||

|

||||

{{< notice tip >}}

|

||||

Usually, a cluster can be used after restarting, but the cluster may be unavailable due to unexpected conditions. For example:

|

||||

|

||||

- etcd data corruption during the shutdown.

|

||||

- Node failures.

|

||||

- Unexpected network errors.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

### Step 1: Check all cluster dependencies' status

|

||||

Ensure all cluster dependencies are ready, such as external storage.

|

||||

### Step 2: Power on cluster machines

|

||||

Wait for the cluster to be up and running, which may take about 10 minutes.

|

||||

### Step 3: Check the status of all control plane components

|

||||

Check the status of core components, such as etcd services, and make sure everything is ready.

|

||||

```bash
kubectl get nodes -l node-role.kubernetes.io/master
```

|

||||

|

||||

### Step 4: Check all worker nodes' status

|

||||

```bash
kubectl get nodes -l node-role.kubernetes.io/worker
```

|

||||

|

||||

If your cluster fails to restart, please try to [restore the etcd cluster](https://etcd.io/docs/current/op-guide/recovery/#restoring-a-cluster).

|

||||

|

|

@ -0,0 +1,40 @@

|

|||

---

|

||||

title: "SSH Connection Failure"

|

||||

keywords: "Installation, SSH, KubeSphere, Kubernetes"

|

||||

description: "Understand why the SSH connection may fail when you use KubeKey to create a cluster."

|

||||

linkTitle: "SSH Connection Failure"

|

||||

Weight: 16600

|

||||

---

|

||||

|

||||

When you use KubeKey to set up a cluster, you create a configuration file which contains necessary host information. Here is an example of the field `hosts`:

|

||||

|

||||

```yaml
spec:
  hosts:
  - {name: master, address: 192.168.0.2, internalAddress: 192.168.0.2, user: ubuntu, password: Testing123}
  - {name: node1, address: 192.168.0.3, internalAddress: 192.168.0.3, user: ubuntu, password: Testing123}
  - {name: node2, address: 192.168.0.4, internalAddress: 192.168.0.4, user: ubuntu, password: Testing123}
```

|

||||

|

||||

Before you start to use the `./kk` command to create your cluster, it is recommended that you test the connection between the taskbox and other instances using SSH.
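One way to run such a test is a small loop that attempts a non-interactive login to every host. In the sketch below, the `check_ssh` function, its input format, and the `SSH_CMD` override are assumptions made for illustration. Because it uses `BatchMode=yes`, it only verifies key-based login; to test a password login like the one in the `hosts` example above, connect interactively with `ssh <user>@<address>` instead.

```bash
#!/usr/bin/env bash
# Try a non-interactive SSH login to each host and report the result.
# Input lines on stdin: "<user> <address> [port]" (the port defaults to 22).
# SSH_CMD can be overridden for testing; it defaults to the real ssh client.
check_ssh() {
  local user address port
  while read -r user address port; do
    if [ -z "$user" ]; then
      continue
    fi
    # -n keeps ssh from consuming the host list on stdin.
    if "${SSH_CMD:-ssh}" -n -o BatchMode=yes -o ConnectTimeout=5 \
         -p "${port:-22}" "$user@$address" true; then
      echo "OK   $address"
    else
      echo "FAIL $address"
    fi
  done
}

# Example usage from the taskbox:
#   printf 'ubuntu 192.168.0.2\nubuntu 192.168.0.3\n' | check_ssh
```

Any host reported as `FAIL` is worth checking by hand before you run `./kk`.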

|

||||

|

||||

## Possible Error Message

|

||||

|

||||

```bash
Failed to connect to xx.xxx.xx.xxx: could not establish connection to xx.xxx.xx.xxx:xx: ssh: handshake failed: ssh: unable to authenticate , attempted methods [none], no supported methods remain node=xx.xxx.xx.xxx
```

|

||||

|

||||

If you see the error message above, verify that:

|

||||

|

||||

- You are using the correct port number. Port `22` is the default SSH port. If your hosts use a different port, add a `port` field after the IP address. For example:

|

||||

|

||||

```yaml
hosts:
- {name: master, address: 192.168.0.2, internalAddress: 192.168.0.2, port: 8022, user: ubuntu, password: Testing123}
```

|

||||

|

||||

- SSH connections are not restricted in `/etc/ssh/sshd_config`. For example, `PasswordAuthentication` should be set to `yes` if you log in with a password.

|

||||

|

||||

- You are using the correct username, password or key. Note that the user must have sudo privileges.

|

||||

|

||||

- Your firewall configurations allow SSH connections.
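The `sshd_config` check in the list above can be scripted. The sketch below reports the first `PasswordAuthentication` value found in a configuration file and falls back to `yes`, the OpenSSH default, when the keyword is absent. The function name is hypothetical, and the check deliberately ignores `Include` files and `Match` blocks, so treat its answer as a hint rather than the effective server setting.

```bash
#!/usr/bin/env bash
# Print the first PasswordAuthentication value in an sshd_config file.
# sshd uses the first value it obtains for a keyword, and keywords are
# case-insensitive. Commented-out lines are skipped because their first
# field starts with "#".
password_auth_setting() {
  local config="${1:-/etc/ssh/sshd_config}"
  awk '
    tolower($1) == "passwordauthentication" { print tolower($2); found = 1; exit }
    END { if (!found) print "yes" }
  ' "$config"
}

# Example usage on a cluster host:
#   password_auth_setting /etc/ssh/sshd_config
```

If the function prints `no` and you rely on password login, change the value to `yes` and restart the SSH service on that host.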

|

||||

|

|

@ -0,0 +1,40 @@

|

|||

---

|

||||

title: "SSH Connection Failure"

|

||||

keywords: "Installation, SSH, KubeSphere, Kubernetes"

|

||||

description: "Understand why the SSH connection may fail when you use KubeKey to create a cluster."

|

||||

linkTitle: "SSH Connection Failure"

|

||||

Weight: 16600

|

||||

---

|

||||

|

||||

When you use KubeKey to set up a cluster, you create a configuration file which contains necessary host information. Here is an example of the field `hosts`:

|

||||

|

||||

```yaml
spec:
  hosts:
  - {name: master, address: 192.168.0.2, internalAddress: 192.168.0.2, user: ubuntu, password: Testing123}
  - {name: node1, address: 192.168.0.3, internalAddress: 192.168.0.3, user: ubuntu, password: Testing123}
  - {name: node2, address: 192.168.0.4, internalAddress: 192.168.0.4, user: ubuntu, password: Testing123}
```

|

||||

|

||||

Before you start to use the `./kk` command to create your cluster, it is recommended that you test the connection between the taskbox and other instances using SSH.

|

||||

|

||||

## Possible Error Message

|

||||

|

||||

```bash
Failed to connect to xx.xxx.xx.xxx: could not establish connection to xx.xxx.xx.xxx:xx: ssh: handshake failed: ssh: unable to authenticate , attempted methods [none], no supported methods remain node=xx.xxx.xx.xxx
```

|

||||

|

||||

If you see the error message above, verify that:

|

||||

|

||||

- You are using the correct port number. Port `22` is the default SSH port. If your hosts use a different port, add a `port` field after the IP address. For example:

|

||||

|

||||

```yaml
hosts:
- {name: master, address: 192.168.0.2, internalAddress: 192.168.0.2, port: 8022, user: ubuntu, password: Testing123}
```

|

||||

|

||||

- SSH connections are not restricted in `/etc/ssh/sshd_config`. For example, `PasswordAuthentication` should be set to `yes` if you log in with a password.

|

||||

|

||||

- You are using the correct username, password or key. Note that the user must have sudo privileges.

|

||||

|

||||

- Your firewall configurations allow SSH connections.

|

||||

|

|

@ -0,0 +1,40 @@

|

|||

---

|

||||

title: "SSH Connection Failure"

|

||||

keywords: "Installation, SSH, KubeSphere, Kubernetes"

|

||||

description: "Understand why the SSH connection may fail when you use KubeKey to create a cluster."

|

||||

linkTitle: "SSH Connection Failure"

|

||||

Weight: 16600

|

||||

---

|

||||

|

||||

When you use KubeKey to set up a cluster, you create a configuration file which contains necessary host information. Here is an example of the field `hosts`:

|

||||

|

||||

```yaml
spec:
  hosts:
  - {name: master, address: 192.168.0.2, internalAddress: 192.168.0.2, user: ubuntu, password: Testing123}
  - {name: node1, address: 192.168.0.3, internalAddress: 192.168.0.3, user: ubuntu, password: Testing123}
  - {name: node2, address: 192.168.0.4, internalAddress: 192.168.0.4, user: ubuntu, password: Testing123}
```

|

||||

|

||||

Before you start to use the `./kk` command to create your cluster, it is recommended that you test the connection between the taskbox and other instances using SSH.

|

||||

|

||||

## Possible Error Message

|

||||

|

||||

```bash
Failed to connect to xx.xxx.xx.xxx: could not establish connection to xx.xxx.xx.xxx:xx: ssh: handshake failed: ssh: unable to authenticate , attempted methods [none], no supported methods remain node=xx.xxx.xx.xxx
```

|

||||

|

||||

If you see the error message above, verify that:

|

||||

|

||||

- You are using the correct port number. Port `22` is the default SSH port. If your hosts use a different port, add a `port` field after the IP address. For example:

|

||||

|

||||

```yaml
hosts:
- {name: master, address: 192.168.0.2, internalAddress: 192.168.0.2, port: 8022, user: ubuntu, password: Testing123}
```

|

||||

|

||||

- SSH connections are not restricted in `/etc/ssh/sshd_config`. For example, `PasswordAuthentication` should be set to `yes` if you log in with a password.

|

||||

|

||||

- You are using the correct username, password or key. Note that the user must have sudo privileges.

|

||||

|

||||

- Your firewall configurations allow SSH connections.

|

||||

|

|

@ -0,0 +1,113 @@

|

|||

---

|

||||

title: "Deploy KubeSphere on DigitalOcean Kubernetes"

|

||||

keywords: 'Kubernetes, KubeSphere, DigitalOcean, Installation'

|

||||

description: 'Learn how to deploy KubeSphere on DigitalOcean.'

|

||||

|

||||

weight: 4230

|

||||

---

|

||||

|

||||

|

||||

|

||||

This guide walks you through the steps of deploying KubeSphere on [DigitalOcean Kubernetes](https://www.digitalocean.com/products/kubernetes/).

|

||||

|

||||

## Prepare a DOKS Cluster

|

||||

|

||||

A Kubernetes cluster in DO is a prerequisite for installing KubeSphere. Go to your [DO account](https://cloud.digitalocean.com/) and refer to the image below to create a cluster from the navigation menu.

|

||||

|

||||

|

||||

|

||||

You need to select:

|

||||

|

||||

1. Kubernetes version (choose a version that your KubeSphere release supports)

|

||||

2. Datacenter region (for example, *Frankfurt*)

|

||||

3. VPC network (for example, *default-fra1*)

|

||||

4. Cluster capacity (for example, 2 standard nodes with 2 vCPUs and 4GB of RAM each)

|

||||

5. A name for the cluster (for example, *kubesphere-3*)

|

||||

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- To install KubeSphere 3.2.1 on Kubernetes, your Kubernetes version must be v1.19.x, v1.20.x, v1.21.x, or v1.22.x (experimental).

|

||||

- 2 nodes are included in this example. You can add more nodes based on your needs, especially in a production environment.

|

||||

- The machine type Standard / 4 GB / 2 vCPUs is for minimal installation. If you plan to enable several pluggable components or use the cluster for production, you can upgrade your nodes to a more powerful type (such as CPU-Optimized / 8 GB / 4 vCPUs). It seems that DigitalOcean provisions the master nodes based on the type of the worker nodes, and for Standard ones the API server can become unresponsive quite soon.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

When the cluster is ready, you can download the config file for kubectl.

|

||||

|

||||

|

||||

|

||||

## Install KubeSphere on DOKS

|

||||

|

||||

Now that the cluster is ready, you can install KubeSphere following the steps below:

|

||||

|

||||

- Install KubeSphere using kubectl. The following commands are only for the default minimal installation.

|

||||

|

||||

```bash
kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml
```

|

||||

|

||||

- Inspect the logs of installation:

|

||||

|

||||

```bash
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
```

|

||||

|

||||

When the installation finishes, you can see the following message:

|

||||

|

||||

```bash
#####################################################
###              Welcome to KubeSphere!           ###
#####################################################

Console: http://10.XXX.XXX.XXX:30880
Account: admin
Password: P@88w0rd

NOTES:
  1. After logging into the console, please check the
     monitoring status of service components in
     the "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are ready.
  2. Please modify the default password after login.

#####################################################
https://kubesphere.io             2020-xx-xx xx:xx:xx
```

|

||||

|

||||

## Access KubeSphere Console

|

||||

|

||||

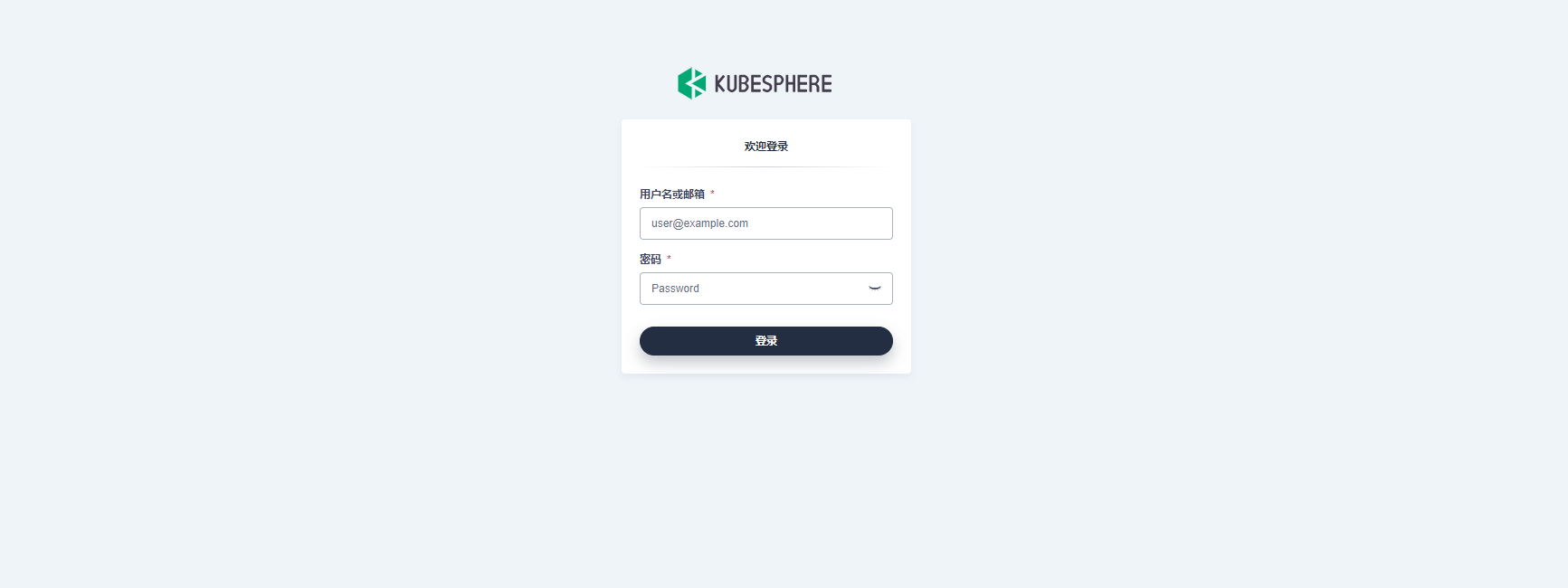

Now that KubeSphere is installed, you can access the web console of KubeSphere by following the steps below.

|

||||

|

||||

- Go to the Kubernetes Dashboard provided by DigitalOcean.

|

||||

|

||||

|

||||

|

||||

- Select the **kubesphere-system** namespace.

|

||||

|

||||

|

||||

|

||||

- In **Services** under **Service**, edit the service **ks-console**.

|

||||

|

||||

|

||||

|

||||

- Change the type from `NodePort` to `LoadBalancer`. Save the file when you finish.

|

||||

|

||||

|

||||

|

||||

- Access the KubeSphere web console using the endpoint generated by DO.

|

||||

|

||||

|

||||

|

||||

{{< notice tip >}}

|

||||

|

||||

Instead of changing the service type to `LoadBalancer`, you can also access KubeSphere console via `NodeIP:NodePort` (service type set to `NodePort`). You need to get the public IP of one of your nodes.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

- Log in to the console with the default account and password (`admin/P@88w0rd`). On the cluster overview page, you can see the dashboard.
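The service type change described above can also be made from the command line with `kubectl patch`. The sketch below builds the patch document for the `ks-console` Service in the `kubesphere-system` namespace. The `service_type_patch` helper is a hypothetical name, and the commented commands assume that your kubectl context points at the DOKS cluster.

```bash
#!/usr/bin/env bash
# Build the JSON patch document that sets a Service's type.
service_type_patch() {
  printf '{"spec":{"type":"%s"}}\n' "$1"
}

# Apply it to the ks-console Service (requires kubectl access to the cluster):
#   kubectl -n kubesphere-system patch svc ks-console -p "$(service_type_patch LoadBalancer)"
# Then look up the external endpoint once the load balancer is provisioned:
#   kubectl -n kubesphere-system get svc ks-console

service_type_patch LoadBalancer   # prints {"spec":{"type":"LoadBalancer"}}
```

Provisioning the load balancer can take a few minutes, during which the external IP is shown as pending.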

|

||||

|

||||

## Enable Pluggable Components (Optional)

|

||||

|

||||

The example above demonstrates the process of a default minimal installation. To enable other components in KubeSphere, see [Enable Pluggable Components](../../../pluggable-components/) for more details.

|

||||

|

|

@ -0,0 +1,113 @@

|

|||

---

|

||||

title: "Deploy KubeSphere on DigitalOcean Kubernetes"

|

||||

keywords: 'Kubernetes, KubeSphere, DigitalOcean, Installation'

|

||||

description: 'Learn how to deploy KubeSphere on DigitalOcean.'

|

||||

|

||||

weight: 4230

|

||||

---

|

||||

|

||||

|

||||

|

||||

This guide walks you through the steps of deploying KubeSphere on [DigitalOcean Kubernetes](https://www.digitalocean.com/products/kubernetes/).

|

||||

|

||||

## Prepare a DOKS Cluster

|

||||

|

||||

A Kubernetes cluster in DO is a prerequisite for installing KubeSphere. Go to your [DO account](https://cloud.digitalocean.com/) and refer to the image below to create a cluster from the navigation menu.

|

||||

|

||||

|

||||

|

||||

You need to select:

|

||||

|

||||

1. Kubernetes version (choose a version that your KubeSphere release supports)

|

||||

2. Datacenter region (for example, *Frankfurt*)

|

||||

3. VPC network (for example, *default-fra1*)

|

||||

4. Cluster capacity (for example, 2 standard nodes with 2 vCPUs and 4GB of RAM each)

|

||||

5. A name for the cluster (for example, *kubesphere-3*)

|

||||

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- To install KubeSphere 3.2.1 on Kubernetes, your Kubernetes version must be v1.19.x, v1.20.x, v1.21.x, or v1.22.x (experimental).

|

||||

- 2 nodes are included in this example. You can add more nodes based on your needs, especially in a production environment.

|

||||

- The machine type Standard / 4 GB / 2 vCPUs is for minimal installation. If you plan to enable several pluggable components or use the cluster for production, you can upgrade your nodes to a more powerful type (such as CPU-Optimized / 8 GB / 4 vCPUs). It seems that DigitalOcean provisions the control plane nodes based on the type of the worker nodes, and for Standard ones the API server can become unresponsive quite soon.

|

||||

|

||||

{{</ notice >}}
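Before you create the cluster, it can help to check a candidate Kubernetes version against the range listed in the note above. The following is a minimal sketch, not part of the installer: the function name `is_supported_k8s_version` is hypothetical, and the range (v1.19.x to v1.22.x) is taken from the note for KubeSphere 3.2.1.

```shell
#!/bin/bash
# Hypothetical helper: returns 0 if the given Kubernetes version string
# (for example, v1.21.5) falls within v1.19.x to v1.22.x, the range that
# the note above lists for KubeSphere 3.2.1.
is_supported_k8s_version() {
    local version major rest minor
    version="${1#v}"        # strip a leading "v" if present
    major="${version%%.*}"  # text before the first dot
    rest="${version#*.}"    # text after the first dot
    minor="${rest%%.*}"     # text before the next dot
    case "$minor" in
        ''|*[!0-9]*) return 1 ;;  # the minor version must be numeric
    esac
    [ "$major" = "1" ] && [ "$minor" -ge 19 ] && [ "$minor" -le 22 ]
}

# Example usage:
# is_supported_k8s_version "v1.21.5" && echo "supported"
```

The example version *1.18.6-do.0* shown in the selection list would not pass this check, so choose a newer release when you install KubeSphere 3.2.1.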

|

||||

|

||||

When the cluster is ready, you can download the config file for kubectl.

|

||||

|

||||

|

||||

|

||||

## Install KubeSphere on DOKS

|

||||

|

||||

Now that the cluster is ready, you can install KubeSphere following the steps below:

|

||||

|

||||

- Install KubeSphere using kubectl. The following commands are only for the default minimal installation.

|

||||

|

||||

```bash
kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/kubesphere-installer.yaml

kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.2.1/cluster-configuration.yaml
```

|

||||

|

||||

- Inspect the logs of installation:

|

||||

|

||||

```bash
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
```

|

||||

|

||||

When the installation finishes, you can see the following message:

|

||||

|

||||

```bash
#####################################################
###              Welcome to KubeSphere!           ###
#####################################################
Console: http://10.XXX.XXX.XXX:30880
Account: admin
Password: P@88w0rd
NOTES:
  1. After logging into the console, please check the
     monitoring status of service components in
     the "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are ready.
  2. Please modify the default password after login.
#####################################################
https://kubesphere.io             2020-xx-xx xx:xx:xx
```

|

||||

|

||||

## Access KubeSphere Console

|

||||

|

||||

Now that KubeSphere is installed, you can access the web console of KubeSphere by following the steps below.

|

||||

|

||||

- Go to the Kubernetes Dashboard provided by DigitalOcean.

|

||||

|

||||

|

||||

|

||||

- Select the **kubesphere-system** namespace.

|

||||

|

||||

|

||||

|

||||

- In **Services** under **Service**, edit the service **ks-console**.

|

||||

|

||||

|

||||

|

||||

- Change the type from `NodePort` to `LoadBalancer`. Save the file when you finish.

|

||||

|

||||

|

||||

|

||||

- Access the KubeSphere web console using the endpoint generated by DO.

|

||||

|

||||

|

||||

|

||||

{{< notice tip >}}

|

||||

|

||||

Instead of changing the service type to `LoadBalancer`, you can also access the KubeSphere console via `NodeIP:NodePort` (with the service type set to `NodePort`). To do so, you need the public IP address of one of your nodes.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

- Log in to the console with the default account and password (`admin/P@88w0rd`). In the cluster overview page, you can see the dashboard.
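The service edit described in the steps above can also be made from the command line if you prefer kubectl to the dashboard. The following is a sketch under the assumption that your kubeconfig points at the DOKS cluster; the function names are hypothetical, while the namespace `kubesphere-system` and the Service `ks-console` come from the steps above.

```shell
#!/bin/bash
# Hypothetical helper: change the ks-console Service from NodePort to LoadBalancer.
expose_console_via_lb() {
    kubectl -n kubesphere-system patch svc ks-console \
        --type merge -p '{"spec":{"type":"LoadBalancer"}}'
}

# Hypothetical helper: print the external IP address assigned to ks-console.
# The output stays empty until the load balancer has been provisioned.
console_external_ip() {
    kubectl -n kubesphere-system get svc ks-console \
        -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Example usage:
# expose_console_via_lb
# console_external_ip
```

Provisioning the load balancer can take a few minutes, so `console_external_ip` may need to be run more than once.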

|

||||

|

||||

## Enable Pluggable Components (Optional)

|

||||

|

||||

The example above demonstrates the process of a default minimal installation. To enable other components in KubeSphere, see [Enable Pluggable Components](../../../pluggable-components/) for more details.

|

||||

|

|

@ -0,0 +1,226 @@

|

|||

---

|

||||

title: "Add Edge Nodes"

|

||||

keywords: 'Kubernetes, KubeSphere, KubeEdge'

|

||||

description: 'Add edge nodes to your cluster.'

|

||||

linkTitle: "Add Edge Nodes"

|

||||

weight: 3630

|

||||

---

|

||||

|

||||

KubeSphere leverages [KubeEdge](https://kubeedge.io/en/) to extend native containerized application orchestration capabilities to hosts at the edge. With separate cloud and edge core modules, KubeEdge provides a complete edge computing solution, although its installation can be complex.

|

||||

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

For more information about different components of KubeEdge, see [the KubeEdge documentation](https://docs.kubeedge.io/en/docs/kubeedge/#components).

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

After an edge node joins your cluster, the native KubeEdge cloud component requires you to manually configure iptables so that you can use commands such as `kubectl logs` and `kubectl exec`. To avoid this manual step, KubeSphere provides an efficient and convenient way to add edge nodes to a Kubernetes cluster: it uses supporting components (for example, EdgeWatcher) to configure iptables automatically.

|

||||

|

||||

|

||||

|

||||

This tutorial demonstrates how to add an edge node to your cluster.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- You have enabled [KubeEdge](../../../pluggable-components/kubeedge/).

|

||||

- You have an available node to serve as an edge node. The node can run either Ubuntu (recommended) or CentOS. This tutorial uses Ubuntu 18.04 as an example.

|

||||

- Edge nodes, unlike Kubernetes cluster nodes, should work in a separate network.

|

||||

|

||||

## Configure an Edge Node

|

||||

|

||||

You need to install a container runtime and configure EdgeMesh on your edge node.

|

||||

|

||||

### Install a container runtime

|

||||

|

||||

[KubeEdge](https://docs.kubeedge.io/en/docs/) supports several container runtimes including Docker, containerd, CRI-O and Virtlet. For more information, see [the KubeEdge documentation](https://docs.kubeedge.io/en/docs/advanced/cri/).

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

If you use Docker as the container runtime for your edge node, Docker v19.3.0 or later must be installed so that KubeSphere can get the Pod metrics of the node.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

### Configure EdgeMesh

|

||||

|

||||

Perform the following steps to configure [EdgeMesh](https://kubeedge.io/en/docs/advanced/edgemesh/) on your edge node.

|

||||

|

||||

1. Edit `/etc/nsswitch.conf`.

|

||||

|

||||

```bash

|

||||

vi /etc/nsswitch.conf

|

||||

```

|

||||

|

||||

2. Add the following content to this file:

|

||||

|

||||

```bash

|

||||

hosts: dns files mdns4_minimal [NOTFOUND=return]

|

||||

```

|

||||

|

||||

3. Save the file and run the following command to enable IP forwarding:

|

||||

|

||||

```bash
echo "net.ipv4.ip_forward = 1" | sudo tee -a /etc/sysctl.conf
```

|

||||

|

||||

4. Verify your modification:

|

||||

|

||||

```bash

|

||||

sudo sysctl -p | grep ip_forward

|

||||

```

|

||||

|

||||

Expected result:

|

||||

|

||||

```bash

|

||||

net.ipv4.ip_forward = 1

|

||||

```
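If you run the IP forwarding step more than once, the same line is appended to the file each time. The sketch below shows one way to make the step idempotent. The function name `ensure_ip_forward` is hypothetical, and the optional file argument exists only so that you can try the function on a scratch file before touching `/etc/sysctl.conf`.

```shell
#!/bin/bash
# Hypothetical helper: append the ip_forward setting to a sysctl file only if
# the file does not already enable it. The path defaults to /etc/sysctl.conf.
ensure_ip_forward() {
    local conf="${1:-/etc/sysctl.conf}"
    if grep -Eq '^[[:space:]]*net\.ipv4\.ip_forward[[:space:]]*=[[:space:]]*1[[:space:]]*$' "$conf" 2>/dev/null; then
        echo "already enabled in $conf"
    else
        echo "net.ipv4.ip_forward = 1" >> "$conf"
        echo "added to $conf"
    fi
}

# Example usage on the edge node (run as root):
# ensure_ip_forward && sysctl -p | grep ip_forward
```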

|

||||

|

||||

## Create Firewall Rules and Port Forwarding Rules

|

||||

|

||||

To make sure edge nodes can successfully talk to your cluster, you must forward ports so that outside traffic can get into your network. Specifically, map each external port to the corresponding internal IP address (master node) and port based on the table below. You also need to create firewall rules to allow traffic to these ports (`10000` to `10004`).

|

||||

|

||||

| Fields              | External Ports | Fields                  | Internal Ports |
| ------------------- | -------------- | ----------------------- | -------------- |
| `cloudhubPort`      | `10000`        | `cloudhubNodePort`      | `30000`        |
| `cloudhubQuicPort`  | `10001`        | `cloudhubQuicNodePort`  | `30001`        |
| `cloudhubHttpsPort` | `10002`        | `cloudhubHttpsNodePort` | `30002`        |
| `cloudstreamPort`   | `10003`        | `cloudstreamNodePort`   | `30003`        |
| `tunnelPort`        | `10004`        | `tunnelNodePort`        | `30004`        |
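
How you create the forwarding rules depends on your gateway or cloud provider. As one illustration, the sketch below prints (but does not run) an iptables DNAT rule for each row of the table, assuming a Linux gateway in front of the cluster. The function name `print_forward_rules` and the IP address in the usage example are hypothetical. The sketch also assumes that the QUIC port carries UDP traffic and that the other ports carry TCP traffic.

```shell
#!/bin/bash
# Hypothetical helper: print one iptables DNAT rule per row of the port table.
# Argument: the internal IP address of the node that exposes ports 30000-30004.
print_forward_rules() {
    local node_ip="$1"
    local offset proto
    for offset in 0 1 2 3 4; do
        proto="tcp"
        # Assumption: cloudhubQuicPort (10001) uses QUIC, which runs over UDP.
        if [ "$offset" -eq 1 ]; then
            proto="udp"
        fi
        echo "iptables -t nat -A PREROUTING -p $proto --dport $((10000 + offset)) -j DNAT --to-destination $node_ip:$((30000 + offset))"
    done
}

# Example usage (hypothetical address); review the output before applying it:
# print_forward_rules 192.168.0.2
```

Printing the rules first lets you review them, or translate them into your provider's firewall settings, before anything changes on the gateway.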

|

||||

|

||||

## Add an Edge Node

|

||||

|

||||

1. Log in to the console as `admin` and click **Platform** in the upper-left corner.

|

||||

|

||||

2. Select **Cluster Management** and navigate to **Edge Nodes** under **Nodes**.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

If you have enabled [multi-cluster management](../../../multicluster-management/), you need to select a cluster first.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

3. Click **Add**. In the dialog that appears, set a node name and enter an internal IP address of your edge node. Click **Validate** to continue.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- The internal IP address is only used for inter-node communication and you do not necessarily need to use the actual internal IP address of the edge node. As long as the IP address is successfully validated, you can use it.

|

||||

- It is recommended that you check the box to add the default taint.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

4. Copy the command automatically created under **Edge Node Configuration Command** and run it on your edge node.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

Make sure `wget` is installed on your edge node before you run the command.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

5. Close the dialog, refresh the page, and the edge node will appear in the list.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

After an edge node is added, if you cannot see CPU and memory resource usage on the **Edge Nodes** page, make sure [Metrics Server](../../../pluggable-components/metrics-server/) 0.4.1 or later is installed in your cluster.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

6. After an edge node joins your cluster, some Pods may be scheduled to it but remain in the `Pending` state on the edge node. Because of the tolerations that some DaemonSets (for example, Calico) have, in the current version (KubeSphere 3.2.1) you need to manually patch some Pods so that they will not be scheduled to the edge node.

|

||||

|

||||

```bash
#!/bin/bash

# Restricts workloads to master/worker nodes (kept for reference; not applied below).
NodeSelectorPatchJson='{"spec":{"template":{"spec":{"nodeSelector":{"node-role.kubernetes.io/master": "","node-role.kubernetes.io/worker": ""}}}}}'

# Keeps workloads off nodes that carry the node-role.kubernetes.io/edge label.
NoSchedulePatchJson='{"spec":{"template":{"spec":{"affinity":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"node-role.kubernetes.io/edge","operator":"DoesNotExist"}]}]}}}}}}}'

# Name of the edge node; override it with the first argument.
edgenode="edgenode"
if [ -n "$1" ]; then
    edgenode="$1"
fi

# Collect the namespace and name of every Pod whose row mentions the edge node.
namespaces=($(kubectl get pods -A -o wide | grep -Ei "$edgenode" | awk '{print $1}'))
pods=($(kubectl get pods -A -o wide | grep -Ei "$edgenode" | awk '{print $2}'))
length=${#namespaces[@]}

for ((i=0; i<length; i++)); do
    ns=${namespaces[$i]}
    pod=${pods[$i]}
    # Find the resource that controls the Pod (for example, DaemonSet/calico-node).
    resources=$(kubectl -n "$ns" describe pod "$pod" | grep "Controlled By" | awk '{print $3}')
    echo "Patching for ns: $ns, resources: $resources"
    kubectl -n "$ns" patch "$resources" --type merge --patch "$NoSchedulePatchJson"
    sleep 1
done
```
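
The script above finds Pods on the edge node by matching the node name in the `kubectl get pods -A -o wide` output, which can also match Pods whose own names contain the same text. As an alternative sketch, the `spec.nodeName` field selector asks the API server for the Pods assigned to one node only. The function name `pods_on_node` and the node name in the usage example are hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: list "namespace name" pairs for the Pods assigned to one
# node, using the spec.nodeName field selector instead of text matching.
pods_on_node() {
    kubectl get pods -A --no-headers \
        --field-selector "spec.nodeName=$1" \
        -o custom-columns=NS:.metadata.namespace,NAME:.metadata.name
}

# Example usage (hypothetical node name):
# pods_on_node edgenode-1
```

Running this after the patch is one way to confirm that the previously pending Pods are no longer assigned to the edge node.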

|

||||

|

||||

## Custom Configurations

|

||||

|

||||

To customize some configurations of an edge node, such as download URL and KubeEdge version, create a [ConfigMap](../../../project-user-guide/configuration/configmaps/) as below:

|

||||

|

||||

```yaml
apiVersion: v1
data:
  region: zh # Download region.
  version: v1.6.1 # The version of KubeEdge to be installed. Allowed values are v1.5.0, v1.6.0, v1.6.1 (default) and v1.6.2.
kind: ConfigMap
metadata:
  name: edge-watcher-config
  namespace: kubeedge
```

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- You can specify `zh` or `en` for the field `region`. `zh` is the default value and the default download link is `https://kubeedge.pek3b.qingstor.com/bin/v1.6.1/$arch/keadm-v1.6.1-linux-$arch.tar.gz`. If you set `region` to `en`, the download link will be `https://github.com/kubesphere/kubeedge/releases/download/v1.6.1-kubesphere/keadm-v1.6.1-linux-amd64.tar.gz`.

|

||||

- The ConfigMap does not affect the configurations of existing edge nodes in your cluster. It only changes the KubeEdge configurations to be used on a new edge node. More specifically, it determines [the command automatically created by KubeSphere mentioned above](#add-an-edge-node), which needs to be executed on the edge node.

|

||||

- While you can change the KubeEdge version to be installed on an edge node, it is recommended that the cloud and edge modules have the same KubeEdge version.

|

||||

|

||||

{{</ notice >}}
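
To make the effect of `region` and `version` concrete, the sketch below builds the download link from the two URL patterns quoted in the note above. It is an illustration only and not how EdgeWatcher is implemented: the function name `keadm_download_url` is hypothetical, and substituting other versions into the patterns is an assumption.

```shell
#!/bin/bash
# Hypothetical helper: print the keadm download link for a region and version,
# following the two URL patterns quoted in the note above.
keadm_download_url() {
    local region="${1:-zh}"
    local version="${2:-v1.6.1}"
    local arch="${3:-amd64}"
    case "$region" in
        zh)
            echo "https://kubeedge.pek3b.qingstor.com/bin/$version/$arch/keadm-$version-linux-$arch.tar.gz"
            ;;
        en)
            # The note above shows only an amd64 link for this region.
            echo "https://github.com/kubesphere/kubeedge/releases/download/$version-kubesphere/keadm-$version-linux-amd64.tar.gz"
            ;;
        *)
            echo "unknown region: $region" >&2
            return 1
            ;;
    esac
}

# Example usage:
# keadm_download_url en v1.6.1
```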

|

||||

|

||||

## Remove an Edge Node

|

||||

|

||||

Before you remove an edge node, delete all your workloads running on it.

|

||||

|

||||

1. On your edge node, run the following commands:

|

||||

|

||||

```bash
./keadm reset
```

```bash
apt remove mosquitto
```

```bash
rm -rf /var/lib/kubeedge /var/lib/edged /etc/kubeedge/ca /etc/kubeedge/certs
```

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

If you cannot delete the tmpfs-mounted folder, restart the node or unmount the folder first.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

2. Run the following command to remove the edge node from your cluster:

|

||||

|

||||

```bash

|

||||

kubectl delete node <edgenode-name>

|

||||

```

|

||||

|

||||

3. To uninstall KubeEdge from your cluster, run the following commands:

|

||||

|

||||

```bash

|

||||

helm uninstall kubeedge -n kubeedge

|

||||

```

|

||||

|

||||

```bash

|

||||

kubectl delete ns kubeedge

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

After the uninstallation, you will not be able to add edge nodes to your cluster.

|

||||

|

||||

{{</ notice >}}

|

||||

|

|

@ -0,0 +1,226 @@

|

|||

---

|

||||

title: "Add Edge Nodes"

|

||||

keywords: 'Kubernetes, KubeSphere, KubeEdge'

|

||||

description: 'Add edge nodes to your cluster.'

|

||||

linkTitle: "Add Edge Nodes"

|

||||

weight: 3630

|

||||

---

|

||||

|

||||

KubeSphere leverages [KubeEdge](https://kubeedge.io/en/) to extend native containerized application orchestration capabilities to hosts at the edge. With separate cloud and edge core modules, KubeEdge provides a complete edge computing solution, although its installation can be complex.

|

||||

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

For more information about different components of KubeEdge, see [the KubeEdge documentation](https://docs.kubeedge.io/en/docs/kubeedge/#components).

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

After an edge node joins your cluster, the native KubeEdge cloud component requires you to manually configure iptables so that you can use commands such as `kubectl logs` and `kubectl exec`. To avoid this manual step, KubeSphere provides an efficient and convenient way to add edge nodes to a Kubernetes cluster: it uses supporting components (for example, EdgeWatcher) to configure iptables automatically.

|

||||

|

||||

|

||||

|

||||

This tutorial demonstrates how to add an edge node to your cluster.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- You have enabled [KubeEdge](../../../pluggable-components/kubeedge/).

|

||||

- You have an available node to serve as an edge node. The node can run either Ubuntu (recommended) or CentOS. This tutorial uses Ubuntu 18.04 as an example.

|

||||

- Edge nodes, unlike Kubernetes cluster nodes, should work in a separate network.

|

||||

|

||||

## Configure an Edge Node

|

||||

|

||||

You need to install a container runtime and configure EdgeMesh on your edge node.

|

||||

|

||||

### Install a container runtime

|

||||

|

||||

[KubeEdge](https://docs.kubeedge.io/en/docs/) supports several container runtimes including Docker, containerd, CRI-O and Virtlet. For more information, see [the KubeEdge documentation](https://docs.kubeedge.io/en/docs/advanced/cri/).

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

If you use Docker as the container runtime for your edge node, Docker v19.3.0 or later must be installed so that KubeSphere can get the Pod metrics of the node.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

### Configure EdgeMesh

|

||||

|

||||

Perform the following steps to configure [EdgeMesh](https://kubeedge.io/en/docs/advanced/edgemesh/) on your edge node.

|

||||

|

||||

1. Edit `/etc/nsswitch.conf`.

|

||||

|

||||

```bash

|

||||

vi /etc/nsswitch.conf

|

||||

```

|

||||

|

||||

2. Add the following content to this file:

|

||||

|

||||

```bash

|

||||

hosts: dns files mdns4_minimal [NOTFOUND=return]

|

||||

```

|

||||

|

||||

3. Save the file and run the following command to enable IP forwarding:

|

||||

|

||||

```bash
echo "net.ipv4.ip_forward = 1" | sudo tee -a /etc/sysctl.conf
```

|

||||

|

||||

4. Verify your modification:

|

||||

|

||||

```bash

|

||||

sudo sysctl -p | grep ip_forward

|

||||

```

|

||||

|

||||

Expected result:

|

||||

|

||||

```bash

|

||||

net.ipv4.ip_forward = 1

|

||||

```

|

||||

|

||||

## Create Firewall Rules and Port Forwarding Rules

|

||||

|

||||

To make sure edge nodes can successfully talk to your cluster, you must forward ports so that outside traffic can get into your network. Specifically, map each external port to the corresponding internal IP address (control plane node) and port based on the table below. You also need to create firewall rules to allow traffic to these ports (`10000` to `10004`).

|

||||

|

||||

| Fields              | External Ports | Fields                  | Internal Ports |
| ------------------- | -------------- | ----------------------- | -------------- |
| `cloudhubPort`      | `10000`        | `cloudhubNodePort`      | `30000`        |
| `cloudhubQuicPort`  | `10001`        | `cloudhubQuicNodePort`  | `30001`        |
| `cloudhubHttpsPort` | `10002`        | `cloudhubHttpsNodePort` | `30002`        |
| `cloudstreamPort`   | `10003`        | `cloudstreamNodePort`   | `30003`        |
| `tunnelPort`        | `10004`        | `tunnelNodePort`        | `30004`        |

|

||||

|

||||

## Add an Edge Node

|

||||

|

||||

1. Log in to the console as `admin` and click **Platform** in the upper-left corner.

|

||||

|

||||

2. Select **Cluster Management** and navigate to **Edge Nodes** under **Nodes**.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

If you have enabled [multi-cluster management](../../../multicluster-management/), you need to select a cluster first.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

3. Click **Add**. In the dialog that appears, set a node name and enter an internal IP address of your edge node. Click **Validate** to continue.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- The internal IP address is only used for inter-node communication and you do not necessarily need to use the actual internal IP address of the edge node. As long as the IP address is successfully validated, you can use it.

|

||||

- It is recommended that you check the box to add the default taint.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

4. Copy the command automatically created under **Edge Node Configuration Command** and run it on your edge node.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

Make sure `wget` is installed on your edge node before you run the command.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

5. Close the dialog, refresh the page, and the edge node will appear in the list.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

After an edge node is added, if you cannot see CPU and memory resource usage on the **Edge Nodes** page, make sure [Metrics Server](../../../pluggable-components/metrics-server/) 0.4.1 or later is installed in your cluster.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

6. After an edge node joins your cluster, some Pods may be scheduled to it but remain in the `Pending` state on the edge node. Because of the tolerations that some DaemonSets (for example, Calico) have, in the current version (KubeSphere 3.2.1) you need to manually patch some Pods so that they will not be scheduled to the edge node.

|

||||

|

||||

```bash
#!/bin/bash

# Restricts workloads to master/worker nodes (kept for reference; not applied below).
NodeSelectorPatchJson='{"spec":{"template":{"spec":{"nodeSelector":{"node-role.kubernetes.io/master": "","node-role.kubernetes.io/worker": ""}}}}}'

# Keeps workloads off nodes that carry the node-role.kubernetes.io/edge label.
NoSchedulePatchJson='{"spec":{"template":{"spec":{"affinity":{"nodeAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{"matchExpressions":[{"key":"node-role.kubernetes.io/edge","operator":"DoesNotExist"}]}]}}}}}}}'

# Name of the edge node; override it with the first argument.
edgenode="edgenode"
if [ -n "$1" ]; then
    edgenode="$1"
fi

# Collect the namespace and name of every Pod whose row mentions the edge node.
namespaces=($(kubectl get pods -A -o wide | grep -Ei "$edgenode" | awk '{print $1}'))
pods=($(kubectl get pods -A -o wide | grep -Ei "$edgenode" | awk '{print $2}'))
length=${#namespaces[@]}

for ((i=0; i<length; i++)); do
    ns=${namespaces[$i]}
    pod=${pods[$i]}
    # Find the resource that controls the Pod (for example, DaemonSet/calico-node).
    resources=$(kubectl -n "$ns" describe pod "$pod" | grep "Controlled By" | awk '{print $3}')
    echo "Patching for ns: $ns, resources: $resources"
    kubectl -n "$ns" patch "$resources" --type merge --patch "$NoSchedulePatchJson"
    sleep 1
done
```

|

||||

|

||||

## Custom Configurations

|

||||

|

||||

To customize some configurations of an edge node, such as download URL and KubeEdge version, create a [ConfigMap](../../../project-user-guide/configuration/configmaps/) as below:

|

||||

|

||||

```yaml
apiVersion: v1
data:
  region: zh # Download region.
  version: v1.6.1 # The version of KubeEdge to be installed. Allowed values are v1.5.0, v1.6.0, v1.6.1 (default) and v1.6.2.
kind: ConfigMap
metadata:
  name: edge-watcher-config
  namespace: kubeedge
```

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- You can specify `zh` or `en` for the field `region`. `zh` is the default value and the default download link is `https://kubeedge.pek3b.qingstor.com/bin/v1.6.1/$arch/keadm-v1.6.1-linux-$arch.tar.gz`. If you set `region` to `en`, the download link will be `https://github.com/kubesphere/kubeedge/releases/download/v1.6.1-kubesphere/keadm-v1.6.1-linux-amd64.tar.gz`.

|

||||

- The ConfigMap does not affect the configurations of existing edge nodes in your cluster. It only changes the KubeEdge configurations to be used on a new edge node. More specifically, it determines [the command automatically created by KubeSphere mentioned above](#add-an-edge-node), which needs to be executed on the edge node.

|

||||

- While you can change the KubeEdge version to be installed on an edge node, it is recommended that the cloud and edge modules have the same KubeEdge version.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

## Remove an Edge Node

|

||||

|

||||

Before you remove an edge node, delete all your workloads running on it.

|

||||

|

||||

1. On your edge node, run the following commands:

|

||||

|

||||

```bash
./keadm reset
```

```bash
apt remove mosquitto
```

```bash
rm -rf /var/lib/kubeedge /var/lib/edged /etc/kubeedge/ca /etc/kubeedge/certs
```

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

If you cannot delete the tmpfs-mounted folder, restart the node or unmount the folder first.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

2. Run the following command to remove the edge node from your cluster:

|

||||

|

||||

```bash

|

||||

kubectl delete node <edgenode-name>

|

||||

```

|

||||

|

||||

3. To uninstall KubeEdge from your cluster, run the following commands:

|

||||

|

||||

```bash

|

||||

helm uninstall kubeedge -n kubeedge

|

||||

```

|

||||

|

||||

```bash

|

||||

kubectl delete ns kubeedge

|

||||

```

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

After the uninstallation, you will not be able to add edge nodes to your cluster.

|

||||

|

||||

{{</ notice >}}

|

||||

|

|

@ -0,0 +1,226 @@

|

|||

---

|

||||

title: "Add Edge Nodes"

|

||||

keywords: 'Kubernetes, KubeSphere, KubeEdge'

|

||||

description: 'Add edge nodes to your cluster.'

|

||||

linkTitle: "Add Edge Nodes"

|

||||

weight: 3630

|

||||

---

|

||||

|

||||

KubeSphere leverages [KubeEdge](https://kubeedge.io/en/) to extend native containerized application orchestration capabilities to hosts at the edge. With separate cloud and edge core modules, KubeEdge provides a complete edge computing solution, although its installation can be complex.

|

||||

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

For more information about different components of KubeEdge, see [the KubeEdge documentation](https://docs.kubeedge.io/en/docs/kubeedge/#components).

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

After an edge node joins your cluster, the native KubeEdge cloud component requires you to manually configure iptables so that you can use commands such as `kubectl logs` and `kubectl exec`. To avoid this manual step, KubeSphere provides an efficient and convenient way to add edge nodes to a Kubernetes cluster: it uses supporting components (for example, EdgeWatcher) to configure iptables automatically.

|

||||

|

||||

|

||||

|

||||

This tutorial demonstrates how to add an edge node to your cluster.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- You have enabled [KubeEdge](../../../pluggable-components/kubeedge/).

|

||||

- You have an available node to serve as an edge node. The node can run either Ubuntu (recommended) or CentOS. This tutorial uses Ubuntu 18.04 as an example.

|

||||

- Edge nodes, unlike Kubernetes cluster nodes, should work in a separate network.

|

||||

|

||||

## Configure an Edge Node

|

||||

|

||||

You need to install a container runtime and configure EdgeMesh on your edge node.

|

||||

|

||||

### Install a container runtime

|

||||

|

||||

[KubeEdge](https://docs.kubeedge.io/en/docs/) supports several container runtimes including Docker, containerd, CRI-O and Virtlet. For more information, see [the KubeEdge documentation](https://docs.kubeedge.io/en/docs/advanced/cri/).

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

If you use Docker as the container runtime for your edge node, Docker v19.3.0 or later must be installed so that KubeSphere can get the Pod metrics of the node.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

### Configure EdgeMesh

|

||||

|

||||

Perform the following steps to configure [EdgeMesh](https://kubeedge.io/en/docs/advanced/edgemesh/) on your edge node.

|

||||

|

||||

1. Edit `/etc/nsswitch.conf`.

|

||||

|

||||

```bash

|

||||

vi /etc/nsswitch.conf

|

||||

```

|

||||

|

||||

2. Add the following content to this file:

|

||||

|

||||

```bash

|

||||

hosts: dns files mdns4_minimal [NOTFOUND=return]

|

||||

```

|

||||

|

||||

3. Save the file and run the following command to enable IP forwarding:

|

||||

|

||||

```bash
echo "net.ipv4.ip_forward = 1" | sudo tee -a /etc/sysctl.conf
```

|

||||

|

||||

4. Verify your modification:

|

||||

|

||||

```bash

|

||||

sudo sysctl -p | grep ip_forward

|

||||

```

|

||||

|

||||

Expected result:

|

||||

|

||||

```bash

|

||||

net.ipv4.ip_forward = 1

|

||||

```

|

||||

|

||||

## Create Firewall Rules and Port Forwarding Rules

|

||||

|

||||

To make sure edge nodes can successfully talk to your cluster, you must forward ports so that outside traffic can get into your network. Specifically, map each external port to the corresponding internal IP address (control plane node) and port based on the table below. You also need to create firewall rules to allow traffic to these ports (`10000` to `10004`).

|

||||

|

||||

| Fields              | External Ports | Fields                  | Internal Ports |
| ------------------- | -------------- | ----------------------- | -------------- |
| `cloudhubPort`      | `10000`        | `cloudhubNodePort`      | `30000`        |
| `cloudhubQuicPort`  | `10001`        | `cloudhubQuicNodePort`  | `30001`        |
| `cloudhubHttpsPort` | `10002`        | `cloudhubHttpsNodePort` | `30002`        |
| `cloudstreamPort`   | `10003`        | `cloudstreamNodePort`   | `30003`        |
| `tunnelPort`        | `10004`        | `tunnelNodePort`        | `30004`        |

|

||||

|

||||

## Add an Edge Node

|

||||

|

||||

1. Log in to the console as `admin` and click **Platform** in the upper-left corner.

|

||||

|

||||

2. Select **Cluster Management** and navigate to **Edge Nodes** under **Nodes**.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

If you have enabled [multi-cluster management](../../../multicluster-management/), you need to select a cluster first.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

3. Click **Add**. In the dialog that appears, set a node name and enter an internal IP address of your edge node. Click **Validate** to continue.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

- The internal IP address is only used for inter-node communication and you do not necessarily need to use the actual internal IP address of the edge node. As long as the IP address is successfully validated, you can use it.

|

||||

- It is recommended that you check the box to add the default taint.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

4. Copy the command automatically created under **Edge Node Configuration Command** and run it on your edge node.

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||