mirror of

https://github.com/kubesphere/website.git

synced 2025-12-30 17:52:56 +00:00

Resolve conflicts

Signed-off-by: kaige <154599029@qq.com>

This commit is contained in:

commit

80d6dbeebb

|

|

@ -388,7 +388,7 @@ section {

|

|||

z-index: 2;

|

||||

width: 20px;

|

||||

height: 20px;

|

||||

background-image: url('/images/docs/copy-code.svg');

|

||||

background-image: url('/images/docs/copy.png');

|

||||

background-repeat: no-repeat;

|

||||

background-size: cover;

|

||||

cursor: pointer;

|

||||

|

|

|

|||

|

|

@ -1 +1,6 @@

|

|||

baseURL = "https://kubesphere.io"

|

||||

|

||||

[params]

|

||||

|

||||

addGoogleAnalytics = true

|

||||

addGoogleTag = true

|

||||

|

|

@ -93,7 +93,7 @@ section4:

|

|||

content: Provide unified authentication with fine-grained roles and three-tier authorization system, and support AD/LDAP authentication

|

||||

|

||||

features:

|

||||

- name: Application Store

|

||||

- name: App Store

|

||||

icon: /images/home/store.svg

|

||||

content: Provide an application store for Helm-based applications, and offer application lifecycle management

|

||||

link: "/docs/pluggable-components/app-store/"

|

||||

|

|

|

|||

|

|

@ -0,0 +1,8 @@

|

|||

---

|

||||

title: KubeSphere Api Documents

|

||||

description: KubeSphere Api Documents

|

||||

keywords: KubeSphere, KubeSphere Documents, Kubernetes

|

||||

|

||||

swaggerUrl: json/crd.json

|

||||

---

|

||||

|

||||

|

|

@ -0,0 +1,7 @@

|

|||

---

|

||||

title: KubeSphere Api Documents

|

||||

description: KubeSphere Api Documents

|

||||

keywords: KubeSphere, KubeSphere Documents, Kubernetes

|

||||

|

||||

swaggerUrl: json/kubesphere.json

|

||||

---

|

||||

|

|

@ -8,13 +8,15 @@ author: 'Willqy, Feynman, Sherlock'

|

|||

snapshot: 'https://ap3.qingstor.com/kubesphere-website/docs/tidb-architecture.png'

|

||||

---

|

||||

|

||||

In a world where Kubernetes has become the de facto standard to build application services that span multiple containers, running a cloud-native distributed database represents an important part of the experience of using Kubernetes. In this connection, [TiDB](https://github.com/pingcap/tidb), as an open-source NewSQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads, has come to my awareness. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability. It strives to provide users with a one-stop database solution that covers OLTP (Online Transactional Processing), OLAP (Online Analytical Processing), and HTAP services. TiDB is suitable for various use cases that require high availability and strong consistency with large-scale data.

|

||||

|

||||

|

||||

In a world where Kubernetes has become the de facto standard to build application services that span multiple containers, running a cloud-native distributed database represents an important part of the experience of using Kubernetes. In this connection, [TiDB](https://github.com/pingcap/tidb), an open-source NewSQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads, has come to my awareness. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability. It strives to provide users with a one-stop database solution that covers OLTP (Online Transactional Processing), OLAP (Online Analytical Processing), and HTAP services. TiDB is suitable for various use cases that require high availability and strong consistency with large-scale data.

|

||||

|

||||

|

||||

|

||||

Among others, [TiDB Operator](https://github.com/pingcap/tidb-operator) is an automatic operation system for TiDB clusters in Kubernetes. It provides a full management life-cycle for TiDB including deployment, upgrades, scaling, backup, fail-over, and configuration changes. With TiDB Operator, TiDB can run seamlessly in Kubernetes clusters deployed on public or private cloud.

|

||||

|

||||

In addition to TiDB, I am also a KubeSphere user. [KubeSphere](https://kubesphere.io/) is a distributed operating system managing cloud-native applications with [Kubernetes](https://kubernetes.io/) as its kernel, providing a plug-and-play architecture for the seamless integration of third-party applications to boost its ecosystem. [KubeSphere can be run anywhere](https://kubesphere.io/docs/introduction/what-is-kubesphere/#run-kubesphere-everywhere) as it is highly pluggable without any hacking into Kubernetes.

|

||||

In addition to TiDB, I am also a KubeSphere user. [KubeSphere](https://kubesphere.io/) is an open-source distributed operating system managing cloud-native applications with [Kubernetes](https://kubernetes.io/) as its kernel, providing a plug-and-play architecture for the seamless integration of third-party applications to boost its ecosystem. [KubeSphere can be run anywhere](https://kubesphere.io/docs/introduction/what-is-kubesphere/#run-kubesphere-everywhere) as it is highly pluggable without any hacking into Kubernetes.

|

||||

|

||||

|

||||

|

||||

|

|

@ -28,15 +30,19 @@ Therefore, I select QingCloud Kubernetes Engine (QKE) to prepare the environment

|

|||

|

||||

1. Log in the [web console of QingCloud](https://console.qingcloud.com/). Simply select KubeSphere (QKE) from the menu and create a Kubernetes cluster with KubeSphere installed. The platform allows you to install different components of KubeSphere. Here, we need to enable [OpenPitrix](https://github.com/openpitrix/openpitrix), which powers the app management feature in KubeSphere.

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

KubeSphere can be installed on any infrastructure. I just use QingCloud Platform as an example. See [KubeSphere Documentation](https://kubesphere.io/docs/) for more details.

|

||||

{{</ notice >}}

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

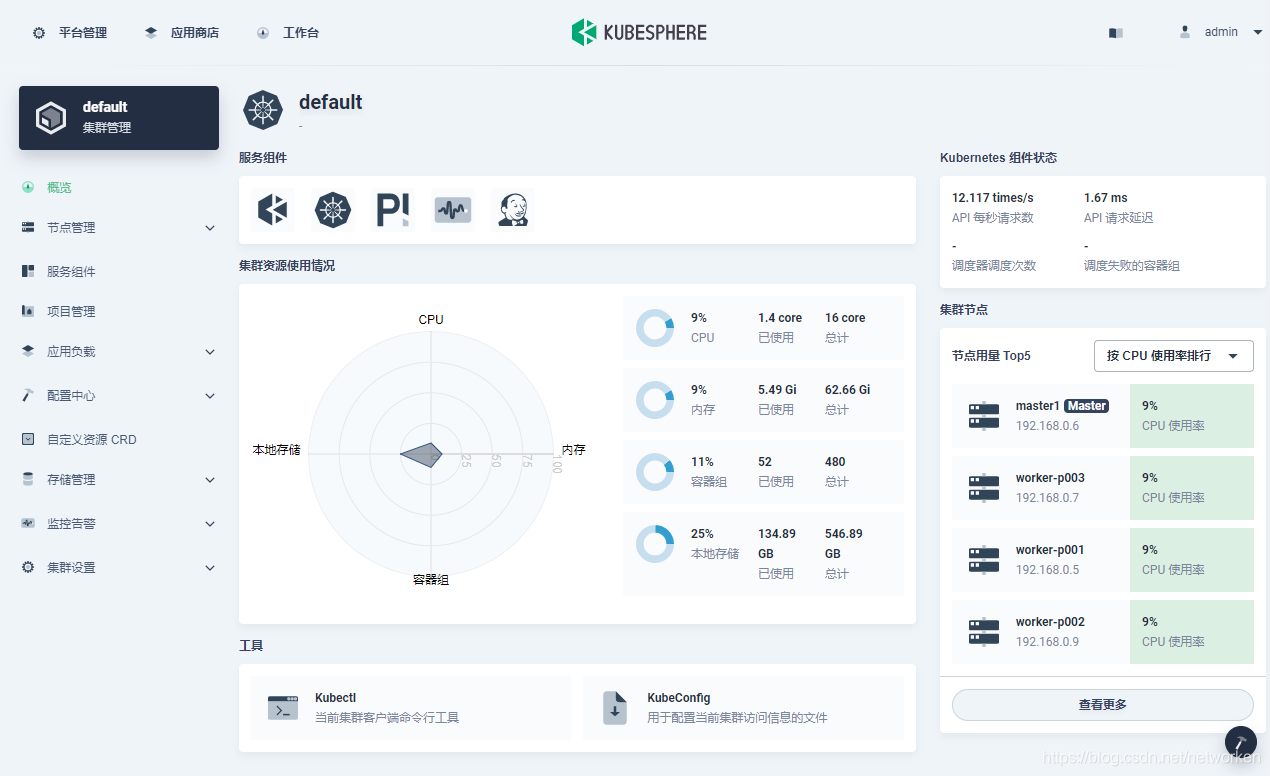

2. The cluster will be up and running in around 10 minutes. In this example, I select 3 working nodes to make sure I have enough resources for the deployment later. You can also customize configurations based on your needs. When the cluster is ready, log in the web console of KubeSphere with the default account and password (`admin/P@88w0rd`). Here is the cluster **Overview** page:

|

||||

|

||||

|

||||

|

||||

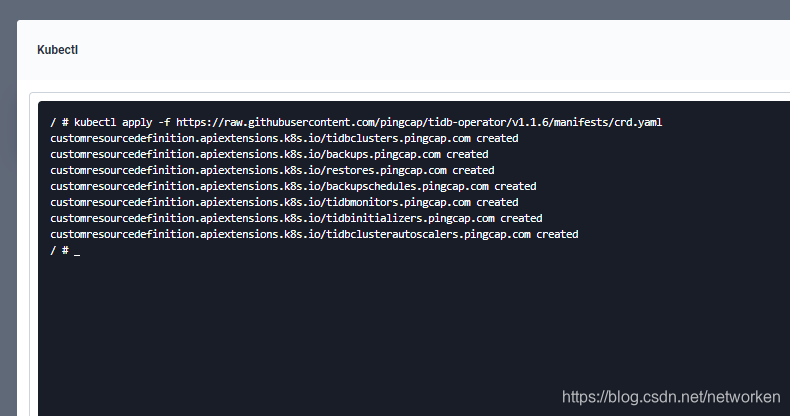

3. Use the built-in tool Kubectl from the Toolkit in the bottom right corner to execute the following command to install TiDB Operator CRD:

|

||||

3. Use the built-in **Web Kubectl** from the Toolkit in the bottom right corner to execute the following command to install TiDB Operator CRD:

|

||||

|

||||

```bash

|

||||

kubectl apply -f https://raw.githubusercontent.com/pingcap/tidb-operator/v1.1.6/manifests/crd.yaml

|

||||

|

|

@ -56,7 +62,7 @@ kubectl apply -f https://raw.githubusercontent.com/pingcap/tidb-operator/v1.1.6/

|

|||

|

||||

|

||||

|

||||

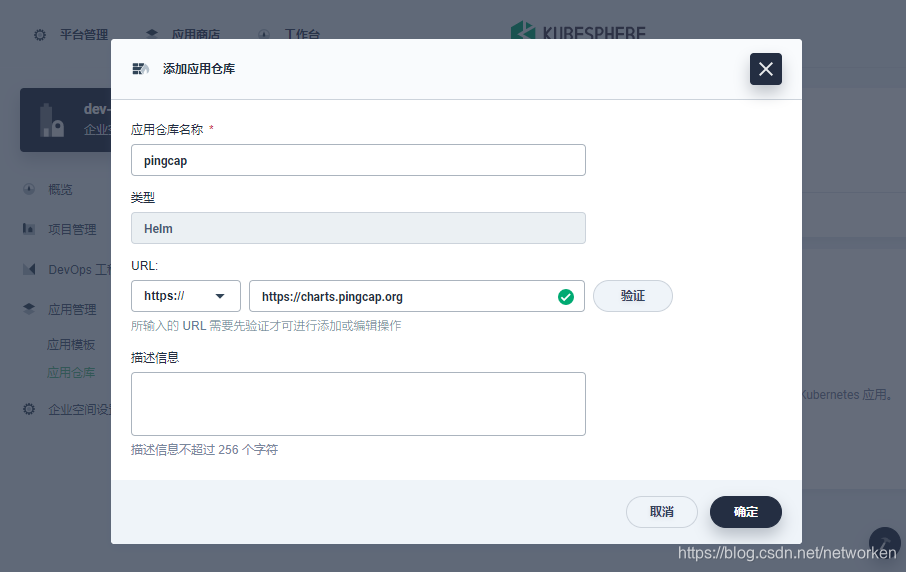

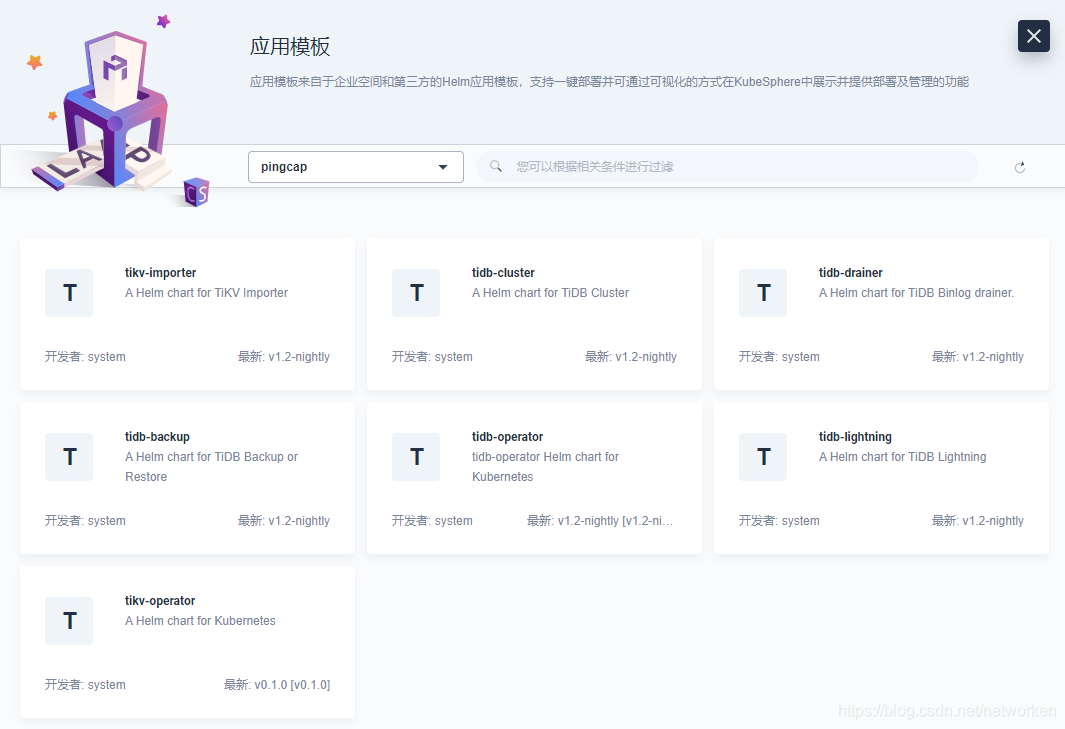

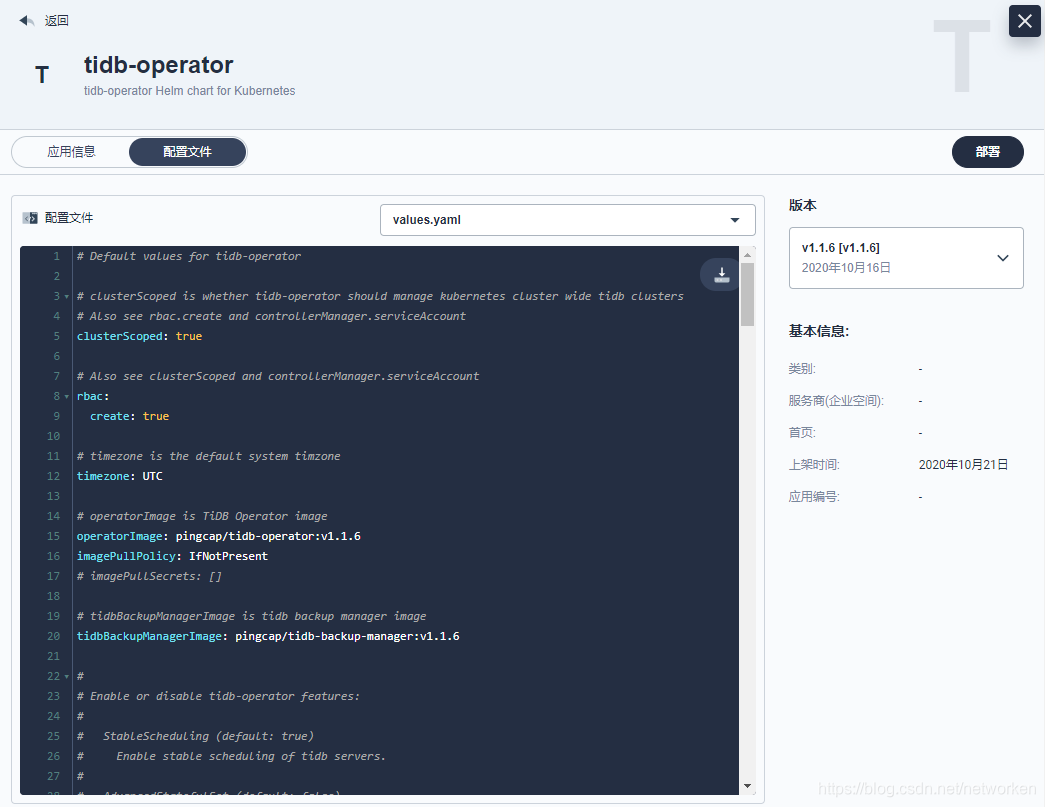

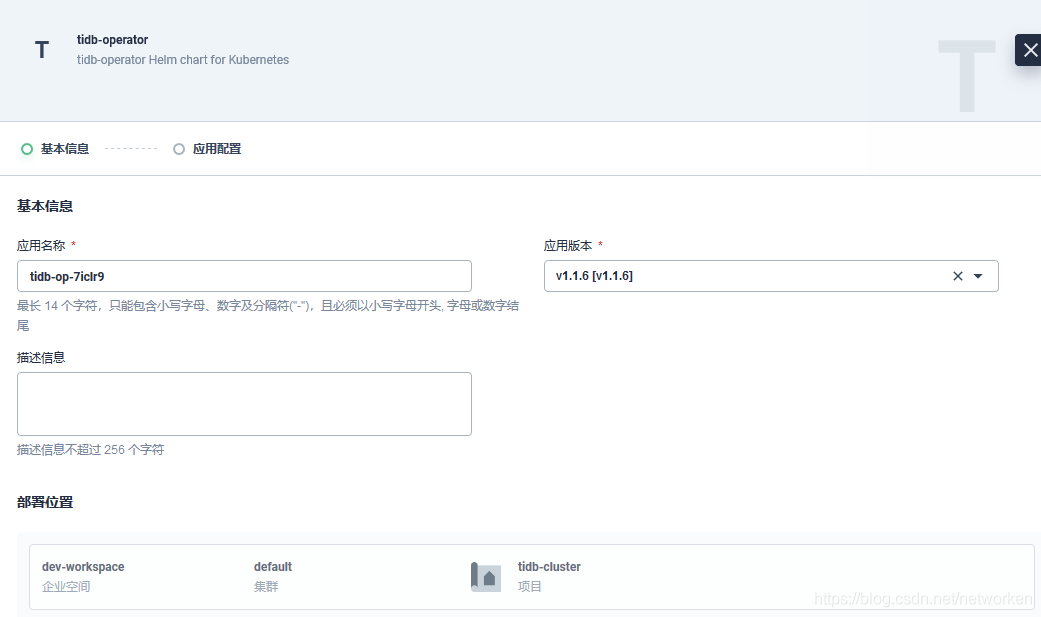

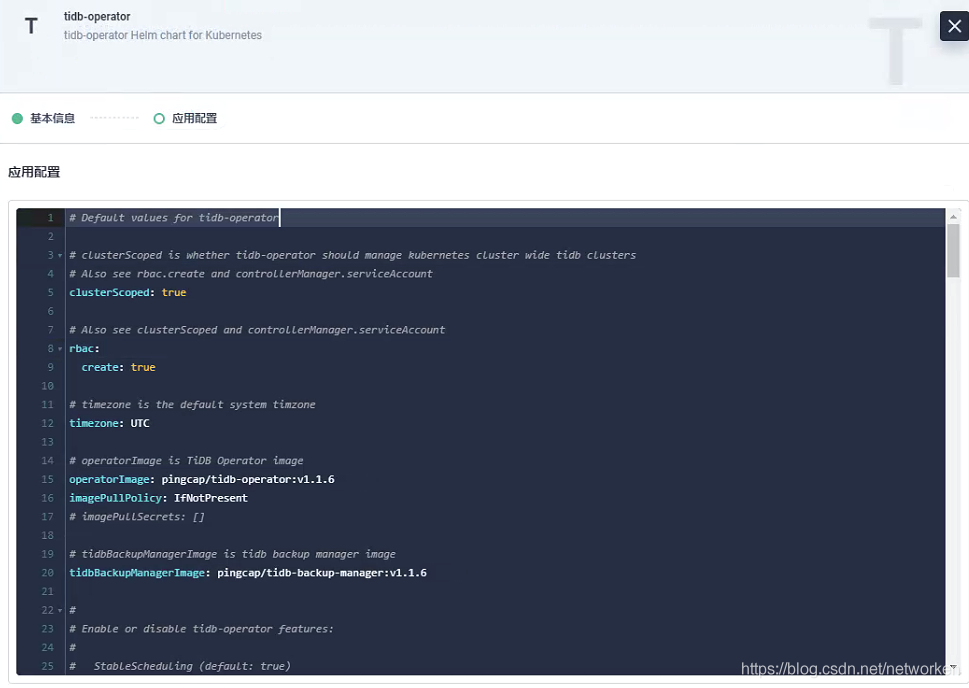

## Deploying tidb-operator

|

||||

## Deploying TiDB-operator

|

||||

|

||||

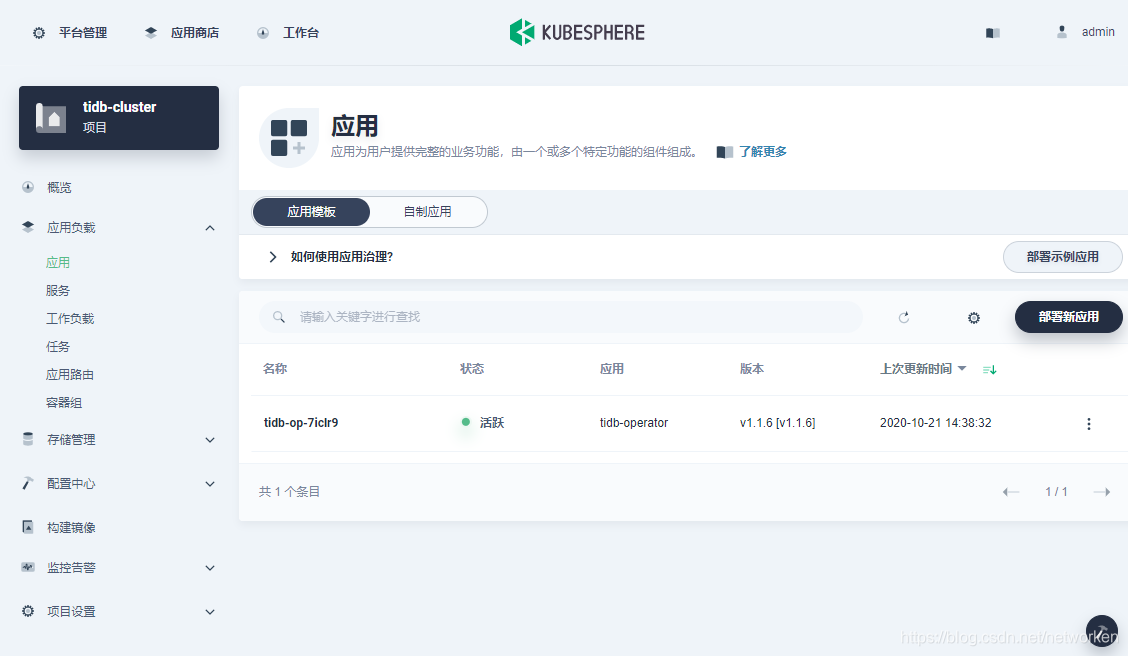

1. Like I mentioned above, we need to create a project (i.e. namespace) first to run the TiBD cluster.

|

||||

|

||||

|

|

@ -94,7 +100,7 @@ kubectl apply -f https://raw.githubusercontent.com/pingcap/tidb-operator/v1.1.6/

|

|||

|

||||

|

||||

|

||||

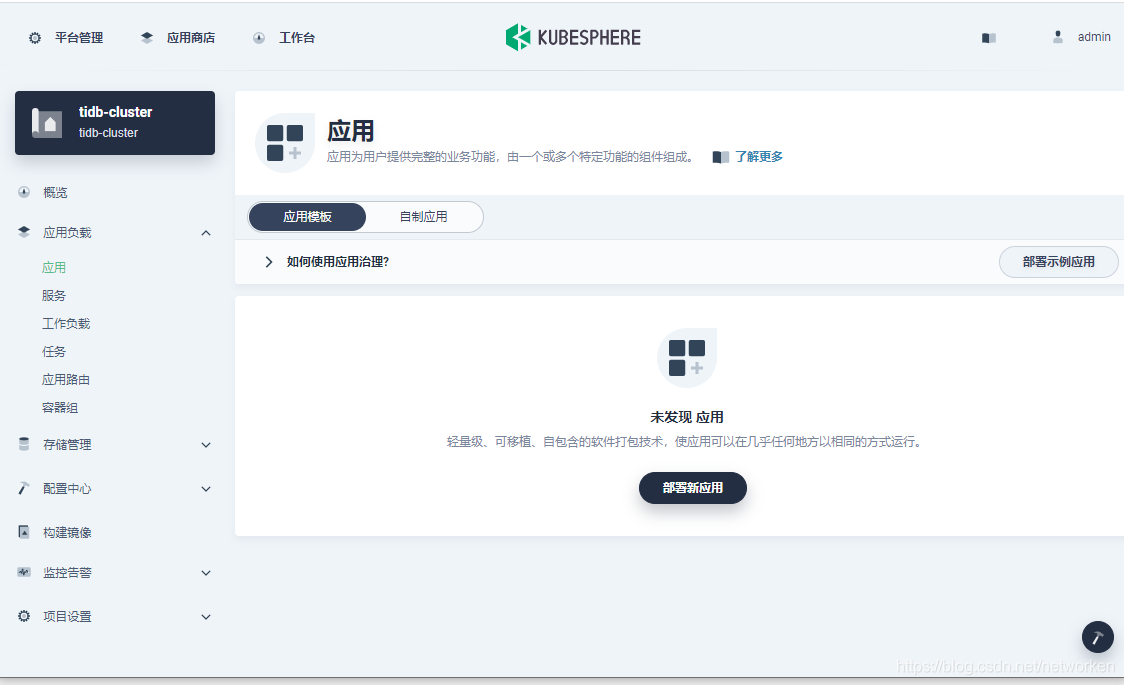

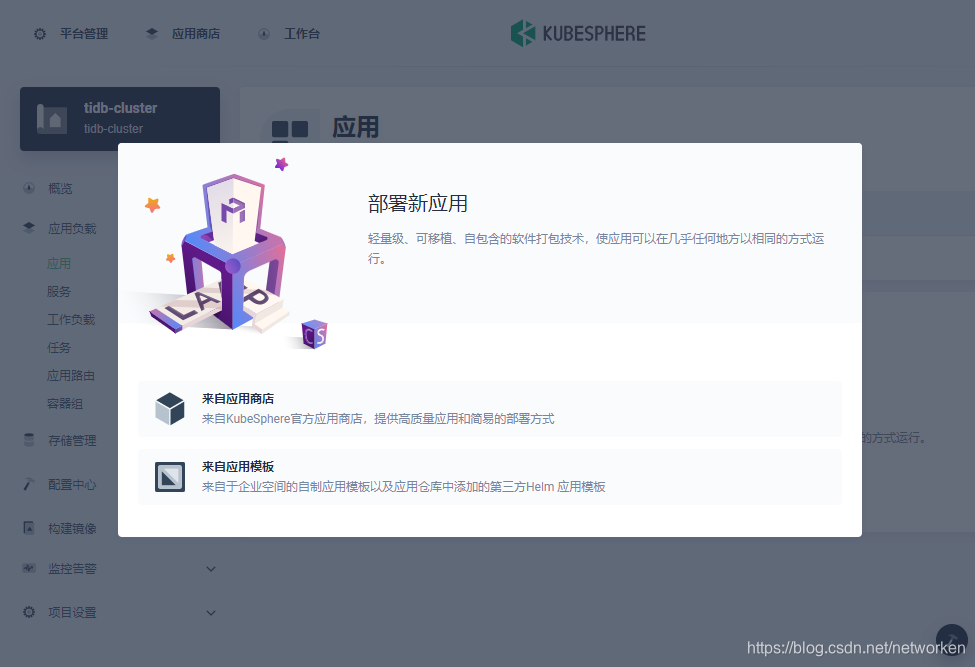

## Deploying tidb-cluster

|

||||

## Deploying TiDB-cluster

|

||||

|

||||

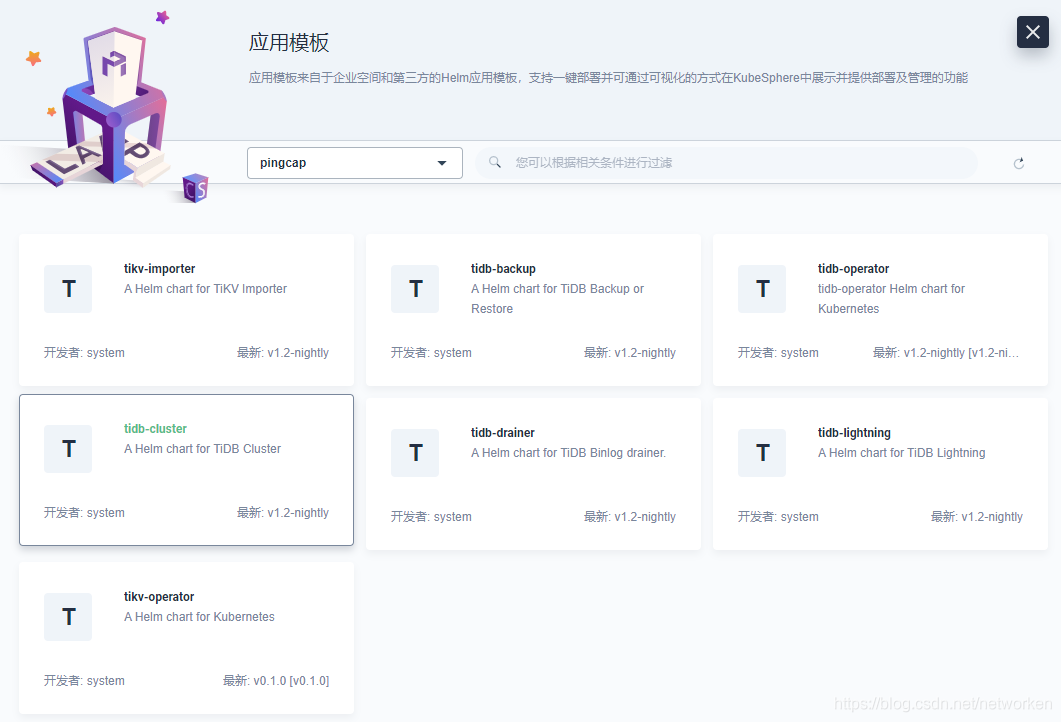

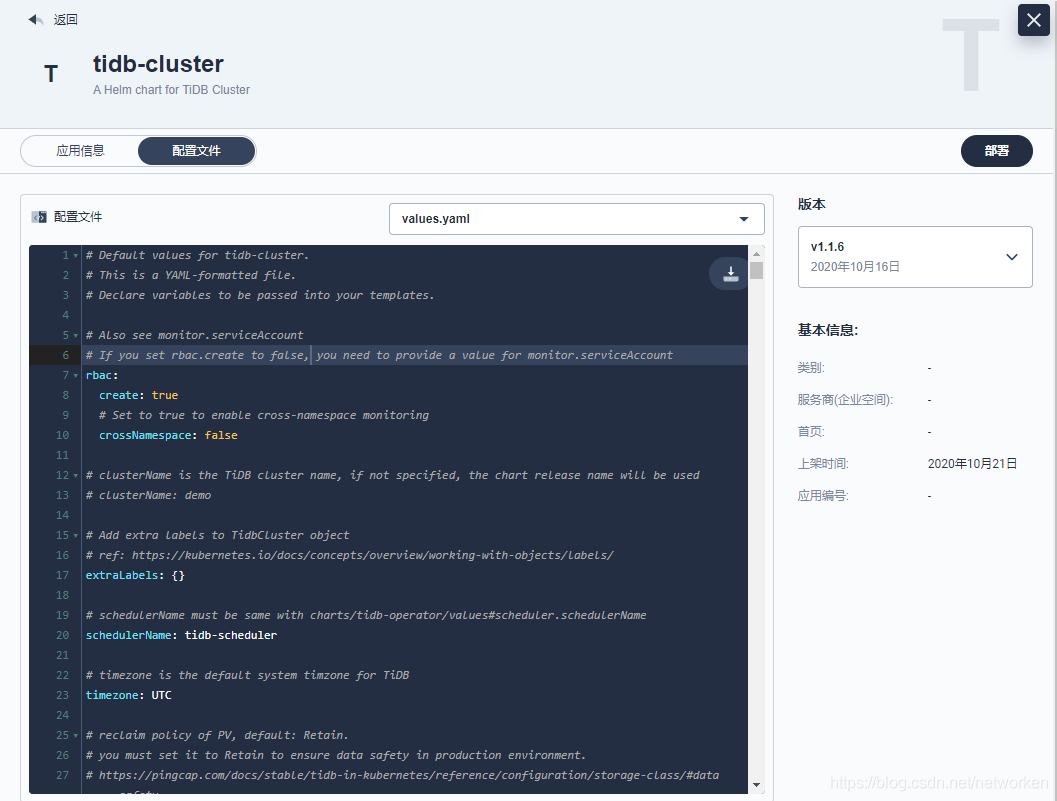

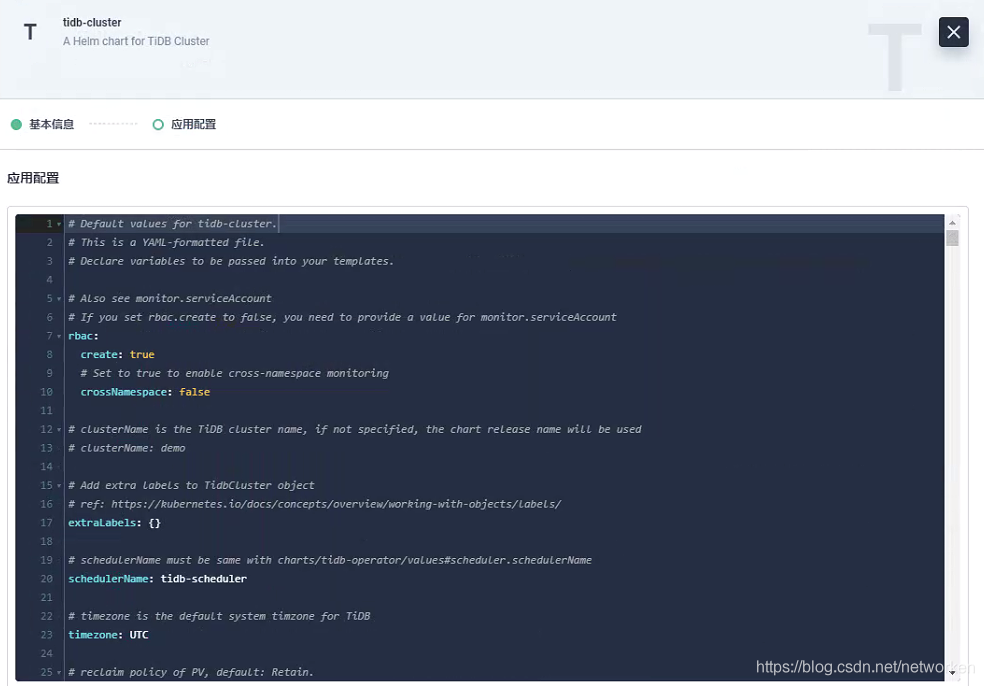

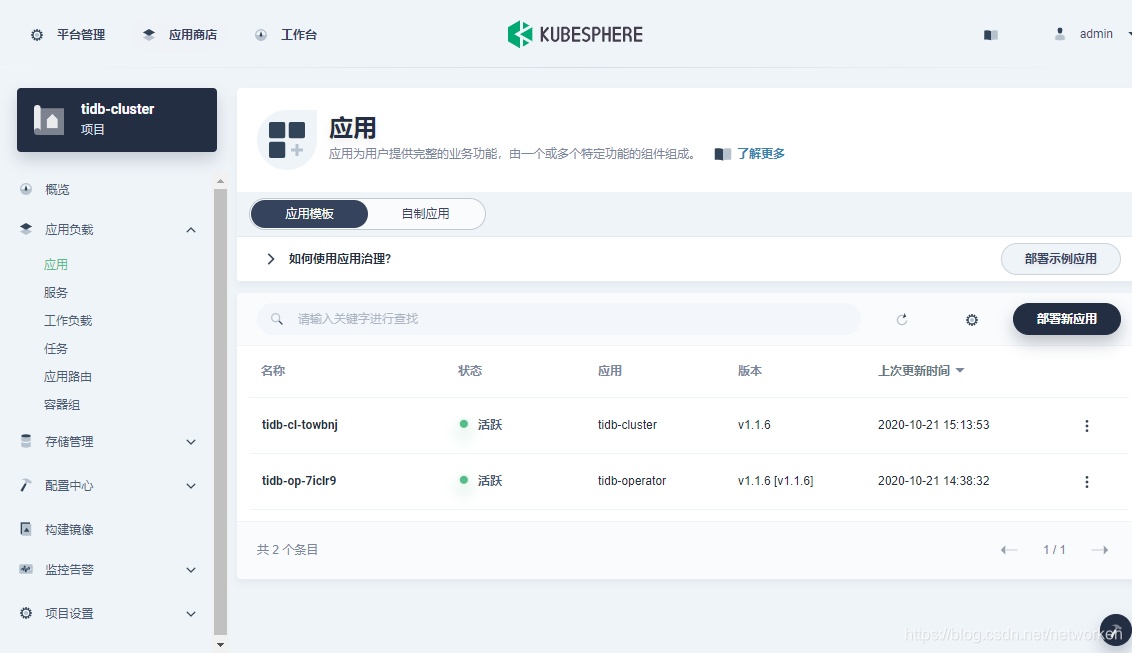

The process of deploying tidb-cluster is basically the same as that of tidb-operator shown above.

|

||||

|

||||

|

|

@ -140,7 +146,7 @@ Now that we have our apps ready, we may need to focus more on observability. Kub

|

|||

|

||||

|

||||

|

||||

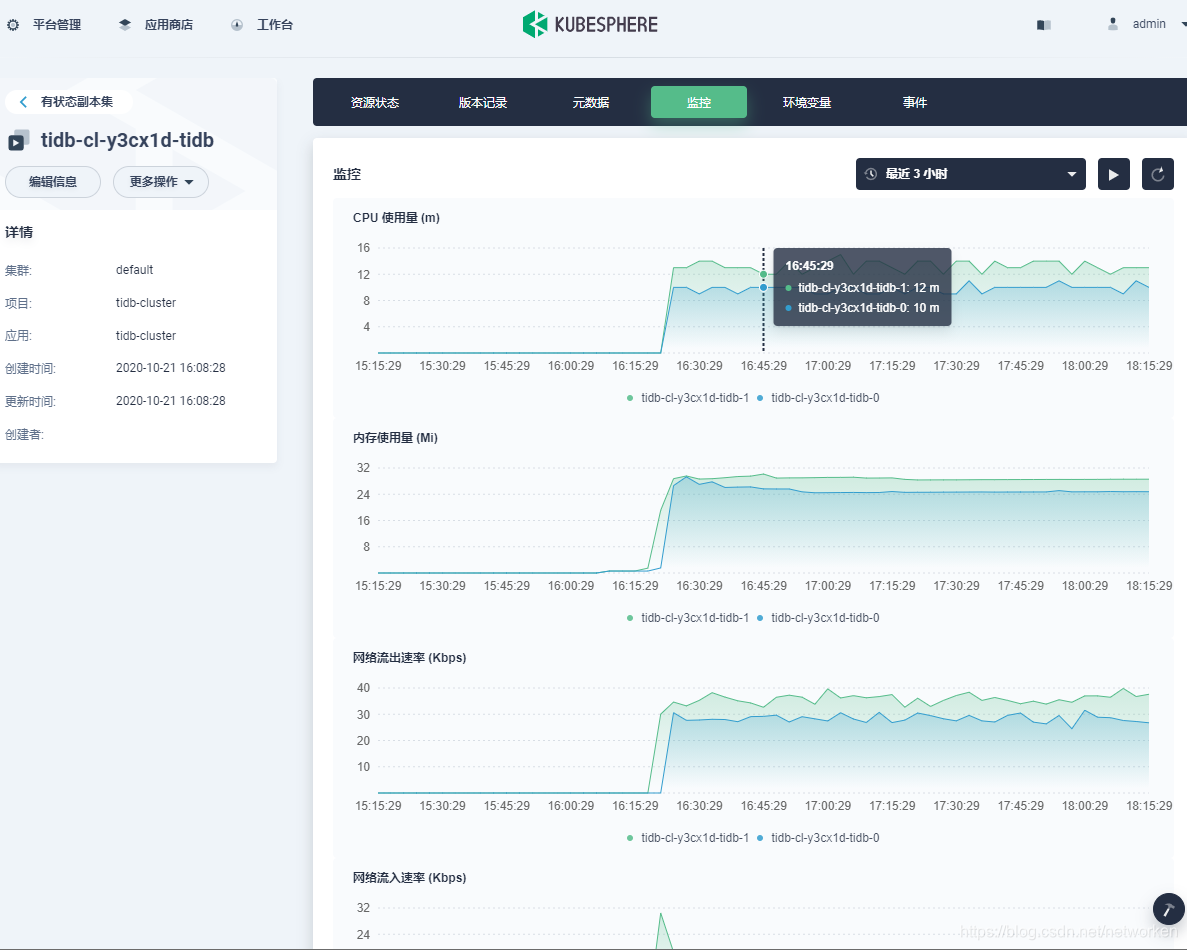

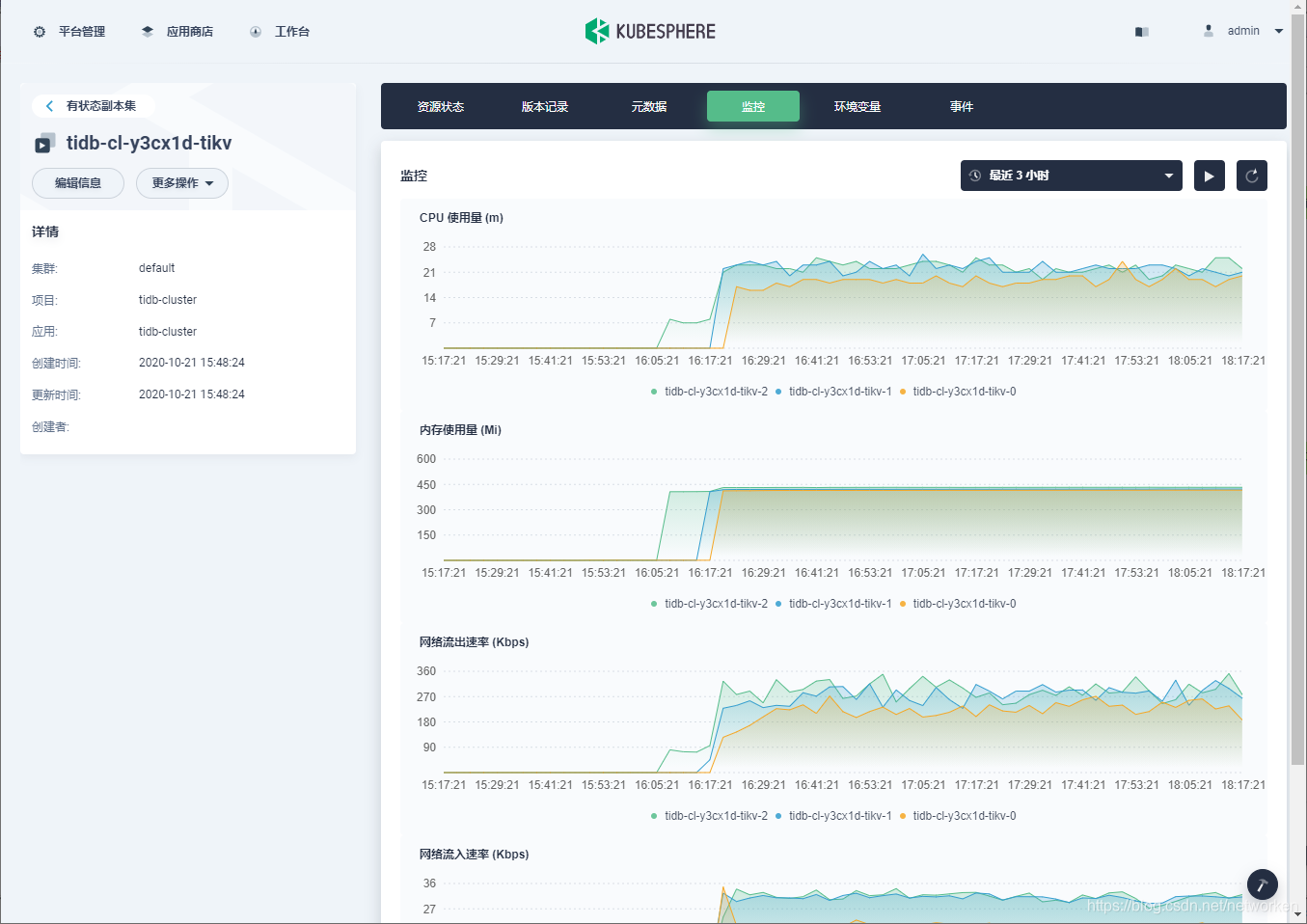

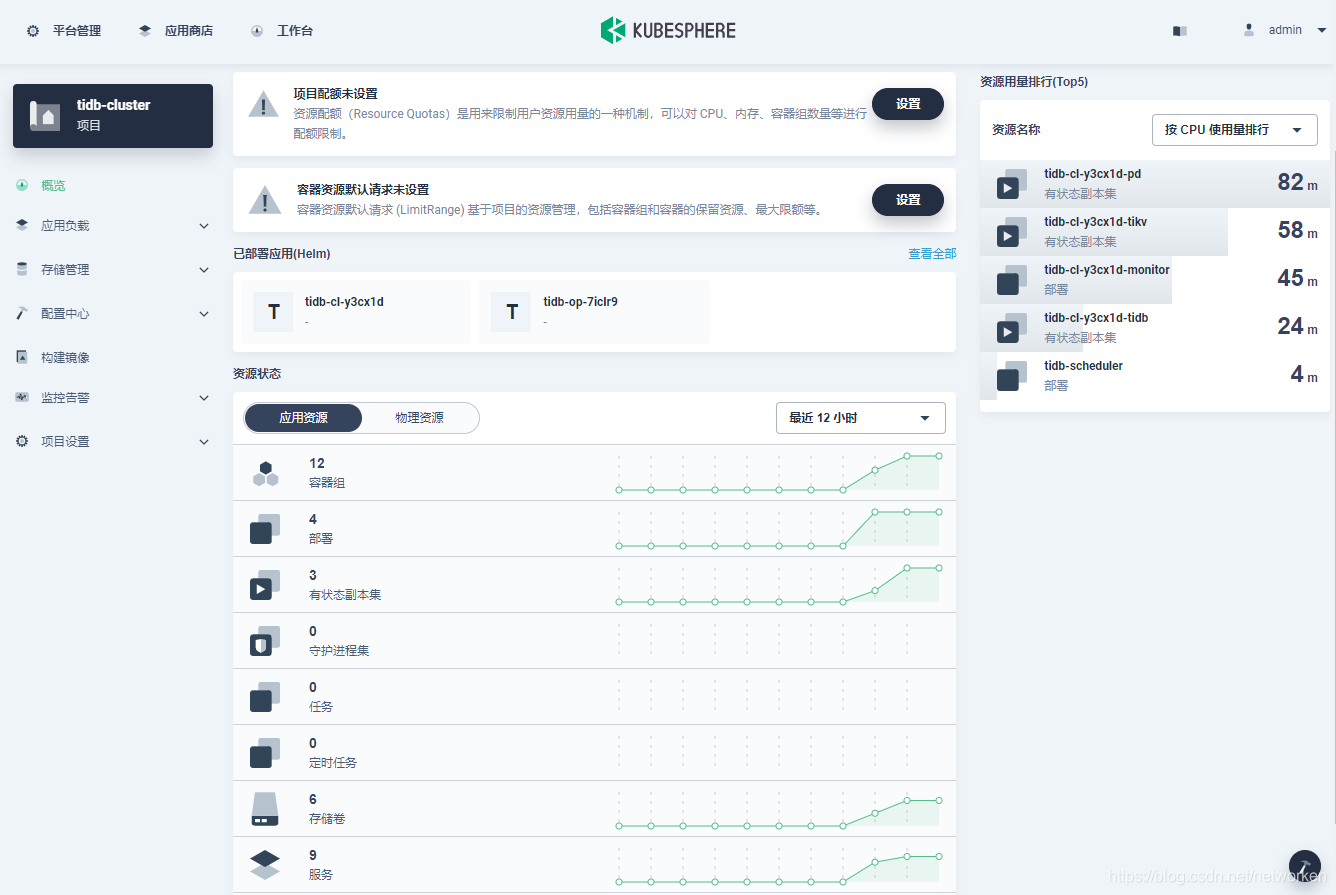

2. tidb, tikv and pd are all stateful applications which can be found in **StatefulSets**. Note that tikv and tidb will be created automatically and it may take a while before displaying in the list.

|

||||

2. TiDB, TiKV and pd are all stateful applications which can be found in **StatefulSets**. Note that TiKV and TiDB will be created automatically and it may take a while before displaying in the list.

|

||||

|

||||

|

||||

|

||||

|

|

@ -152,7 +158,7 @@ Now that we have our apps ready, we may need to focus more on observability. Kub

|

|||

|

||||

|

||||

|

||||

5. Relevant Pods are also listed. As you can see, tidb-cluster contains three pd Pods, two tidb Pods and 3 tikv Pods.

|

||||

5. Relevant Pods are also listed. As you can see, tidb-cluster contains three pd Pods, two TiDB Pods and 3 TiKV Pods.

|

||||

|

||||

|

||||

|

||||

|

|

@ -160,7 +166,7 @@ Now that we have our apps ready, we may need to focus more on observability. Kub

|

|||

|

||||

|

||||

|

||||

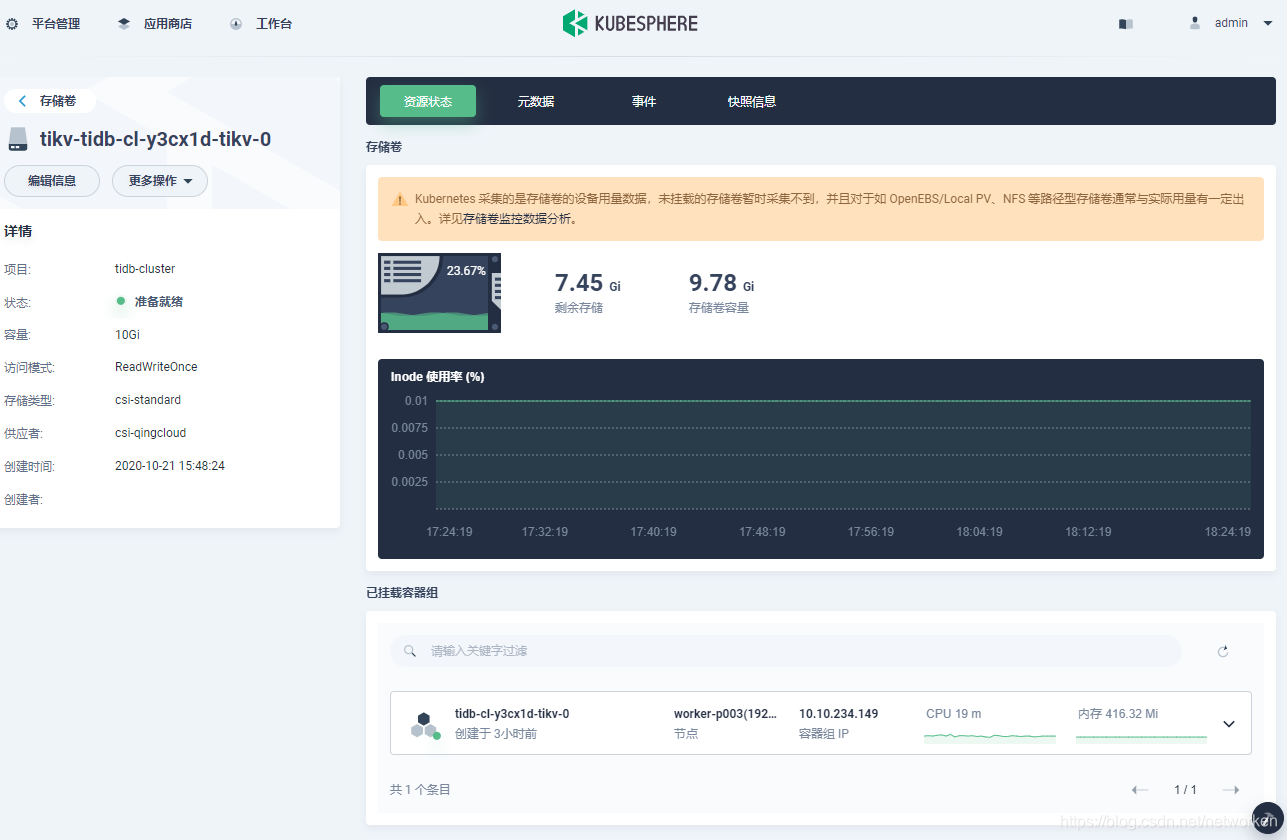

7. Volume usage is also monitored. Here is an example of tikv:

|

||||

7. Volume usage is also monitored. Here is an example of TiKV:

|

||||

|

||||

|

||||

|

||||

|

|

@ -208,7 +214,7 @@ mysql> show databases;

|

|||

mysql>

|

||||

```

|

||||

|

||||

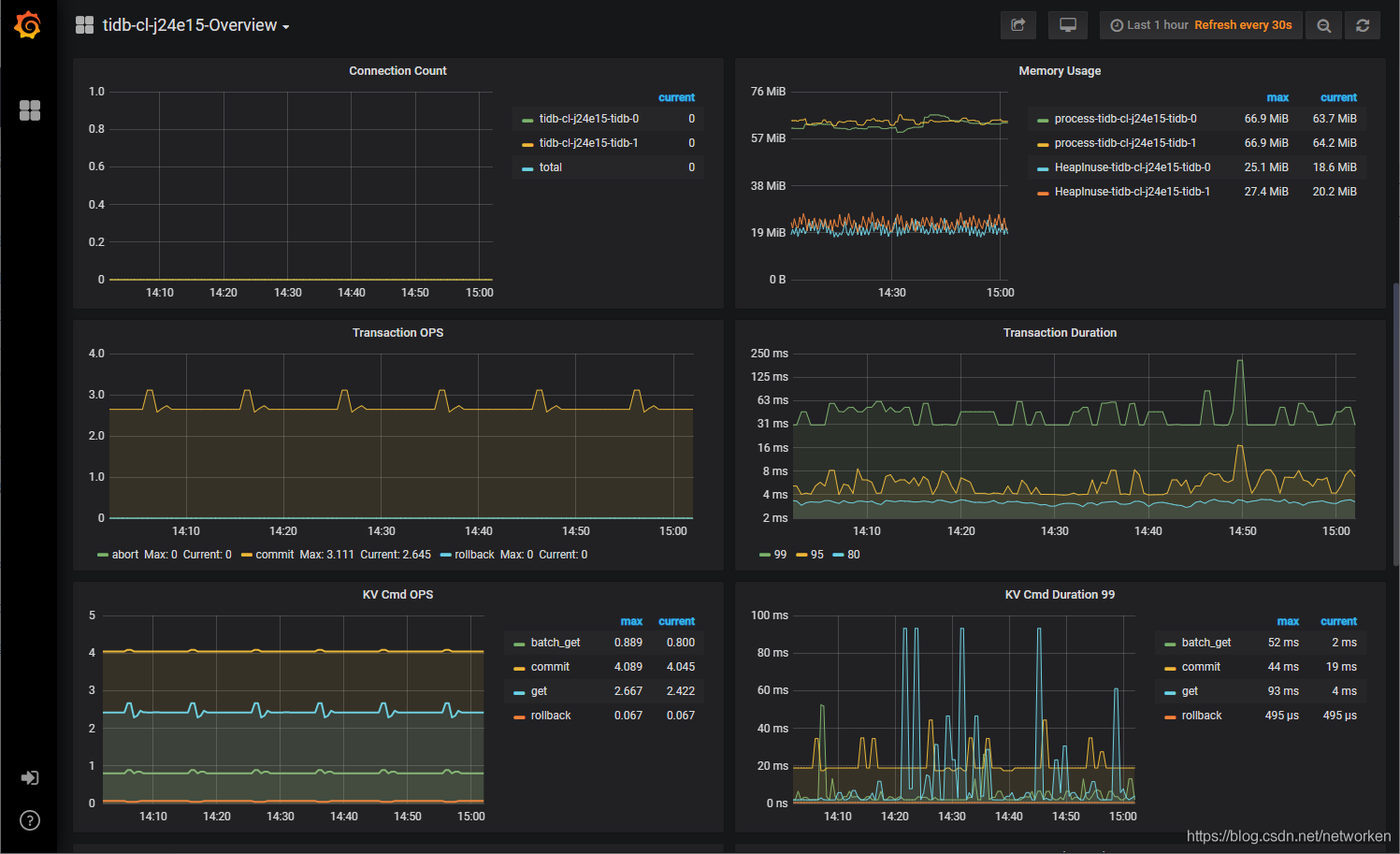

4. Besides, tidb integrates Prometheus and Grafana to monitor performance of the database cluster. As we can see above, Grafana is being exposed through `NodePort`. After you configure necessary port forwarding rules and open its port in security groups on QingCloud Platform, you can access the Grafana UI to view metrics.

|

||||

4. Besides, TiDB integrates Prometheus and Grafana to monitor performance of the database cluster. As we can see above, Grafana is being exposed through `NodePort`. After you configure necessary port forwarding rules and open its port in security groups on QingCloud Platform, you can access the Grafana UI to view metrics.

|

||||

|

||||

|

||||

|

||||

|

|

@ -218,10 +224,12 @@ I hope you guys all have successfully deploy TiDB. Both TiDB and KubeSphere are

|

|||

|

||||

## References

|

||||

|

||||

https://docs.pingcap.com/tidb-in-kubernetes/stable/get-started

|

||||

**KubeSphere GitHub**: https://github.com/kubesphere/kubesphere

|

||||

|

||||

https://docs.pingcap.com/tidb-in-kubernetes/stable/tidb-operator-overview

|

||||

**TiDB GitHub**: https://github.com/pingcap/TiDB

|

||||

|

||||

https://kubesphere.io/docs/introduction/what-is-kubesphere/

|

||||

**TiDB Operator Documentation**: https://docs.pingcap.com/tidb-in-kubernetes/stable/tidb-operator-overview

|

||||

|

||||

https://kubesphere.io/docs/

|

||||

**KubeSphere Introduction**: https://kubesphere.io/docs/introduction/what-is-kubesphere/

|

||||

|

||||

**KubeSphere Documentation**: https://kubesphere.io/docs/

|

||||

|

|

@ -0,0 +1,139 @@

|

|||

---

|

||||

title: 'Kubernetes Multi-cluster Deployment: Federation and KubeSphere'

|

||||

keywords: Kubernetes, KubeSphere, Multi-cluster, Container

|

||||

description: KubeSphere v3.0 supports the management of multiple clusters, isolated management of resources, and federated deployments.

|

||||

tag: 'KubeSphere, Multi-cluster'

|

||||

createTime: '2020-07-20'

|

||||

author: 'Jeff, Feynman, Sherlock'

|

||||

snapshot: 'https://ap3.qingstor.com/kubesphere-website/docs/kubesphere-architecture.png'

|

||||

---

|

||||

|

||||

## Scenarios for Multi-cluster Deployment

|

||||

|

||||

As the container technology and Kubernetes see a surge in popularity among their users, it is not uncommon for enterprises to run multiple clusters for their business. In general, here are the main scenarios where multiple clusters can be adopted.

|

||||

|

||||

### High Availability

|

||||

|

||||

You can deploy workloads on multiple clusters by using a global VIP or DNS to send requests to corresponding backend clusters. When a cluster malfunctions or fails to handle requests, the VIP or DNS records can be transferred to a health cluster.

|

||||

|

||||

|

||||

|

||||

### Low Latency

|

||||

|

||||

When clusters are deployed in various regions, user requests can be forwarded to the nearest cluster, greatly reducing network latency. For example, we have three Kubernetes clusters deployed in New York, Houston and Los Angeles respectively. For users in California, their requests can be forwarded to Los Angeles. This will reduce the network latency due to geographical distance, providing the most consistent user experience possible for users in different areas.

|

||||

|

||||

### Failure Isolation

|

||||

|

||||

Generally, it is much easier for multiple small clusters to isolate failures than a large cluster. In case of outages, network failures, insufficient resources or other possible resulting issues, the failure can be isolated within a certain cluster without spreading to others.

|

||||

|

||||

### Business Isolation

|

||||

|

||||

Although Kubernetes provides namespaces as a solution to app isolation, this method only represents the isolation in logic. This is because different namespaces are connected through the network, which means the issue of resource preemption still exists. To achieve further isolation, you need to create additional network isolation policies or set resource quotas. Using multiple clusters can achieve complete physical isolation that is more secure and reliable than the isolation through namespaces. For example, this is extremely effective when different departments within an enterprise use multiple clusters for the deployment of development, testing or production environments.

|

||||

|

||||

|

||||

|

||||

### Avoid Vendor Lock-in

|

||||

|

||||

Kubernetes has become the de facto standard in container orchestration. Against this backdrop, many enterprises avoid putting all eggs in one basket as they deploy clusters by using services of different cloud providers. That means they can transfer and scale their business anytime between clusters. However, it is not that easy for them to transfer their business in terms of costs, as different cloud providers feature varied Kubernetes services, including storage and network interface.

|

||||

|

||||

## Multi-cluster Deployment

|

||||

|

||||

The application of multi-cluster deployment offers solutions to a variety of problems as we can see from the scenarios above. Nevertheless, it brings more complexity for operation and maintenance. For a single cluster, app deployment and upgrade are quite straightforward as you can directly update yaml of the cluster. For multiple clusters, you can update them one by one, but how can you guarantee the application load status is the same across different clusters? How to implement service discovery among different clusters? How to achieve load balancing across clusters? The answer given by the community is Federation.

|

||||

|

||||

### Federation v1

|

||||

|

||||

|

||||

|

||||

There are two versions of Federation with the original v1 already deprecated. In v1, the general architecture is very similar to that of Kubernetes. As shown above, Federation API Server is used for the management of different clusters. It receives the request to create the deployment of multiple clusters as Federation Controller Manager deploys workloads on each cluster.

|

||||

|

||||

|

||||

|

||||

In terms of API, federated resources are scheduled through annotations, ensuring great compatibility with the original Kubernetes API. As such, the original code can be reused and existing deployment files of users can be easily transferred without any major change. However, this also prevents users from taking further advantage of Federation for API evolution. At the same time, a corresponding controller is needed for each federated resource so that they can be scheduled to different clusters. Originally, Federation only supported a limited number of resource type.

|

||||

|

||||

### Federation v2

|

||||

|

||||

|

||||

|

||||

The community developed Federation v2 (KubeFed) on the basis of v1. KubeFed has defined its own API standards through CRDs while deprecating the annotation method used before. The architecture has changed significantly as well, discarding Federated API Server and etcd that need to be deployed independently. The control plane of KubeFed adopts the popular implementation of CRD + Controller, which can be directly installed on existing Kubernetes clusters without any additional deployment.

|

||||

|

||||

KubeFed mainly defines four resource types:

|

||||

|

||||

- **Cluster Configuration** defines the register information needed for the control plane to add member clusters, including cluster name, APIServer address and the credential to create deployments.

|

||||

|

||||

- **Type Configuration** defines the resource type that KubeFed should handle. Each Type Configuration is a CRD object that contains three configuration items:

|

||||

|

||||

- **Template.** Templates define the representation of a common resource to be handled. If the object does not have a corresponding definition on the cluster where it will be deployed, the deployment will fail. In the following example of FederatedDeployment, the template contains all the information needed to create the deployment.

|

||||

- **Placement**. Placements define the name of the cluster that a resource object will appear in, with two methods available (`clusters` and `clusterSelector`).

|

||||

- **Override**. Overrides define per-cluster, field-level variation that apply to the template, allowing you to customize configurations. In the example below, the number of replicas defined in `template` is 1, while `overrides` shows the replica number of cluster gondor will be 4 when it is deployed instead of 1 in `template`. A subset of the syntax of [Jsonpatch](http://jsonpatch.com/) is achieved in `overrides`, which means, theoretically, all the content in `template` can be overridden.

|

||||

|

||||

|

||||

|

||||

- **Schedule** defines how apps are deployed across clusters, mainly related to ReplicaSets and Deployments. The maximum and minimum number of replicas of the load on a cluster can be defined through Schedule, which is similar to the annotation method in v1.

|

||||

- **MultiClusterDNS** makes it possible for service discovery across clusters. Service discovery across multiple clusters is much more complicated than in a single cluster. ServiceDNSRecord, IngressDNSRecord and DNSEndpoint objects are used in KubeFed to implement service discovery across multiple clusters (DNS needed as well).

|

||||

|

||||

In general, KubeFed has provided solutions to many problems in v1. With CRDs, federated resources can be scaled to a large extent. Basically, all Kubernetes resources can be deployed across multiple clusters, including the CRD resources defined by users themselves.

|

||||

|

||||

However, KubeFed also has some issues to be resolved:

|

||||

|

||||

- **Single point of failure**. The control plane of KubeFed is achieved through CRD + Controller. High availability can be implemented for the controller itself, but the whole control plane will malfunction if Kubernetes it runs on fails. This was also [discussed in the community](https://github.com/kubernetes-sigs/kubefed/issues/636) before. Currently, KubeFed uses the push/reconcile method. When federated resources are created, the controller of the control plane will send the resource object to clusters accordingly. After that, the control plane is not responsible for how the member cluster handles resources. Therefore, existing application workloads will not be affected when KubeFed control plane fails.

|

||||

- **Maturity**. The KubeFed community is not as active as the Kubernetes community. Its iteration cycle is too slow and many features are still in the beta stage.

|

||||

- **Abstraction**. KubeFed defines resources to be managed through Type Configurations. Different Type Configurations only vary in their templates. The advantage is that the logic can be unified so that they can be quickly achieved. In KubeFed, the corresponding Controllers of Type Configuration resources are all created through [templates](https://github.com/kubernetes-sigs/kubefed/blob/master/pkg/controller/federatedtypeconfig/controller.go). That said, the shortcoming is quite obvious as customized features are not supported for special Types. For instance, for a FederatedDeployment object, KubeFed only needs to create a deployment object accordingly based on template and override, which will be deployed on the cluster specified in placement. As for whether the corresponding Pod is created based on the deployment and how the Pod runs, you can only check the information in the related cluster. The community has realized this issue and is working on it. A [proposal](https://github.com/kubernetes-sigs/kubefed/pull/1237) has already been raised.

|

||||

|

||||

## Multi-cluster Feature in KubeSphere

|

||||

|

||||

Resource federation is what the community has proposed to solve the issue of deployments across multiple clusters. For many enterprise users, the deployment of multiple clusters is not necessary. What is more important is that they need to be able to manage the resources across multiple clusters at the same time and in the same place.

|

||||

|

||||

[KubeSphere](https://github.com/kubesphere) supports the management of multiple clusters, isolated management of resources, and federated deployments. In addition, it also features multi-dimensional queries (monitoring, logging, events and auditing) of resources such as clusters and apps, as well as alerts and notifications through various channels. Apps can be deployed on multiple clusters with CI/CD pipelines.

|

||||

|

||||

|

||||

|

||||

KubeSphere 3.0 supports unified management of user access for the multi-cluster feature based on KubeFed, RBAC and Open Policy Agent. With the multi-tenant architecture, it is very convenient for business departments, development teams and Ops teams to manage resources isolatedly in a unified console according to their needs.

|

||||

|

||||

|

||||

|

||||

### Architecture

|

||||

|

||||

|

||||

|

||||

The overall multi-cluster architecture of [KubeSphere](https://kubesphere.io/) is shown above. The cluster where the control plane is located is called Host cluster. The cluster managed by the Host cluster is called Member cluster, which is essentially a Kubernetes cluster with KubeSphere installed. The Host cluster needs to be able to access the kube-apiserver of Member clusters. Besides, there is no requirement for the network connectivity between Member clusters. The Host cluster is independent of the member clusters managed by it, which do not know the existence of the Host cluster. The advantage of the logic is that when the Host cluster malfunctions, Member clusters will not be affected and deployed workloads can continue to run as well.

|

||||

|

||||

In addition, the Host cluster also serves as an entry for API requests. It will forward all resource requests for member clusters to them. In this way, not only can requests be aggregated, but also authentication and authorization can be implemented in a unified fashion.

|

||||

|

||||

### Authorization and Authentication

|

||||

|

||||

It can be seen from the architecture that the Host cluster is responsible for the synchronization of identity and access information of clusters, which is achieved by federated resources of KubeFed. When FederatedUser, FederatedRole, or FederatedRoleBinding is created on the Host cluster, KubeFed will push User, Role, or Rolebinding to Member clusters. Any access change will only be applied to the Host cluster, which will then be synchronized to Member clusters. This is to ensure the integrity of each Member cluster. In this regard, the identity and access data stored in Member clusters enable them to implement authentication and authorization independently without any reliance on the Host cluster. In the multi-cluster architecture of KubeSphere, the Host cluster acts as a resource coordinator instead of a dictator, since it delegates power to Member clusters as much as possible.

|

||||

|

||||

### Cluster Connectivity

|

||||

|

||||

The multi-cluster feature of KubeSphere only entails the access of the Host cluster to the Kubernetes APIServer of Member clusters. There is no requirement for network connectivity at the cluster level. KubeSphere provides two methods for the connection of Host and Member clusters:

|

||||

|

||||

- **Direct connection**. If the kube-apiserver address of Member clusters is accessible on any node of the Host cluster, you can adopt this method. Member clusters only need to provide the cluster kubeconfig. This method applies to most public cloud Kubernetes services or the scenario where the Host cluster and Member clusters are in the same network.

|

||||

|

||||

- **Agent connection**. In case Member clusters are in a private network with the kube-apiserver address unable to be exposed, KubeSphere provides [Tower](https://github.com/kubesphere/tower) for agent connection. Specifically, the Host cluster will run a proxy service. When a new cluster joins, the Host cluster will generate all credential information. Besides, the agent running on Member clusters will connect to the proxy service running on the Host cluster. A reverse proxy will be created after the handshake succeeds. As the kube-apiserver address of Member clusters will change in agent connection, the Host cluster needs to create a new kubeconfig for Member clusters. This is very convenient as the underlying details can be hidden. In either direct connection or agent connection, the control plane is provided with a kubeconfig that can be used directly.

|

||||

|

||||

|

||||

|

||||

### API Forwarding

|

||||

|

||||

In the multi-cluster architecture of KubeSphere, the Host cluster serves as a cluster entry. All API requests are directly sent to the Host cluster first, which will decide where these requests go next. To provide the best compatibility possible with the original API in the multi-cluster environment, the API request whose path begins with `/apis/clusters/{cluster}` will be forwarded to the cluster `{cluster}`, with `/clusters/{cluster}` removed. The advantage is that there is no difference between the request the cluster receives this way with other requests, with no additional configuration or operation needed.

|

||||

|

||||

|

||||

|

||||

For example:

|

||||

|

||||

|

||||

|

||||

The request above will be forwarded to a cluster named rohan and be handled as:

|

||||

|

||||

|

||||

|

||||

## Summary

|

||||

|

||||

The topic of multi-cluster deployment is far more complicated than we think. The fact that the Federation solution provided by the community has not been officially released after two versions is a typical example. As we often put it, there is no Silver Bullet in software engineering. It is impossible for multi-cluster tools such as KubeFed and KubeSphere to solve all the issues. We need to find the solution that best suits us based on the specific business scenario. It is believed that these tools will become more mature over time, which can be applied in more scenarios.

|

||||

|

||||

## References

|

||||

|

||||

1. KubeFed: https://github.com/kubernetes-sigs/kubefed

|

||||

2. KubeSphere Website: https://kubesphere.io/

|

||||

3. Kubernetes Federation Evolution: https://kubernetes.io/blog/2018/12/12/kubernetes-federation-evolution/

|

||||

4. KubeSphere GitHub: https://github.com/kubesphere

|

||||

|

|

@ -15,9 +15,9 @@ section2:

|

|||

icon2: 'images/contribution/37.png'

|

||||

children:

|

||||

- content: 'Download KubeSphere'

|

||||

link: 'https://kubesphere.io/docs/installation/intro/'

|

||||

link: '../../../docs/quick-start/all-in-one-on-linux/'

|

||||

- content: 'Quickstart'

|

||||

link: 'https://kubesphere.io/docs/quick-start/admin-quick-start/'

|

||||

link: '../../../docs/quick-start/create-workspace-and-project/'

|

||||

- content: 'Tutorial Videos'

|

||||

link: '../videos'

|

||||

|

||||

|

|

@ -84,7 +84,7 @@ section3:

|

|||

- name: 'Apps'

|

||||

icon: '/images/contribution/apps.svg'

|

||||

iconActive: '/images/contribution/apps-active.svg'

|

||||

content: 'App charts for the built-in Application Store'

|

||||

content: 'App charts for the built-in App Store'

|

||||

link: 'https://github.com/kubesphere/community/tree/master/sig-apps'

|

||||

linkContent: 'Join SIG - Apps →'

|

||||

children:

|

||||

|

|

@ -92,7 +92,7 @@ section3:

|

|||

- icon: '/images/contribution/calicq2.jpg'

|

||||

- icon: '/images/contribution/calicq3.jpg'

|

||||

|

||||

- name: 'Application Store'

|

||||

- name: 'App Store'

|

||||

icon: '/images/contribution/app-store.svg'

|

||||

iconActive: '/images/contribution/app-store-active.svg'

|

||||

content: 'App Store, App template management'

|

||||

|

|

|

|||

|

|

@ -1,9 +1,9 @@

|

|||

---

|

||||

title: "Accecc Control and Account Management"

|

||||

description: "Accecc Control and Account Management"

|

||||

title: "Access Control and Account Management"

|

||||

description: "Access Control and Account Management"

|

||||

layout: "single"

|

||||

|

||||

linkTitle: "Accecc Control and Account Management"

|

||||

linkTitle: "Access Control and Account Management"

|

||||

weight: 4500

|

||||

|

||||

icon: "/images/docs/docs.svg"

|

||||

|

|

|

|||

|

|

@ -34,10 +34,6 @@ The following metrics have been deprecated and removed.

|

|||

|cluster_workspace_count|

|

||||

|cluster_account_count|

|

||||

|cluster_devops_project_count|

|

||||

|workspace_namespace_count|

|

||||

|workspace_devops_project_count|

|

||||

|workspace_member_count|

|

||||

|workspace_role_count|

|

||||

|coredns_up_sum|

|

||||

|coredns_cache_hits|

|

||||

|coredns_cache_misses|

|

||||

|

|

@ -53,6 +49,15 @@ The following metrics have been deprecated and removed.

|

|||

|prometheus_up_sum|

|

||||

|prometheus_tsdb_head_samples_appended_rate|

|

||||

|

||||

New metrics are introduced in KubeSphere 3.0.0.

|

||||

|

||||

|New Metrics|

|

||||

|---|

|

||||

|kubesphere_workspace_count|

|

||||

|kubesphere_user_count|

|

||||

|kubesphere_cluser_count|

|

||||

|kubesphere_app_template_count|

|

||||

|

||||

## Response Fields

|

||||

|

||||

In KubeSphere 3.0.0, the response fields `metrics_level`, `status` and `errorType` are removed.

|

||||

|

|

|

|||

|

|

@ -1,23 +1,14 @@

|

|||

---

|

||||

title: "Application Store"

|

||||

title: "App Store"

|

||||

description: "Getting started with KubeSphere DevOps project"

|

||||

layout: "single"

|

||||

|

||||

linkTitle: "Application Store"

|

||||

|

||||

linkTitle: "App Store"

|

||||

weight: 4600

|

||||

|

||||

icon: "/images/docs/docs.svg"

|

||||

|

||||

---

|

||||

|

||||

## Installing KubeSphere and Kubernetes on Linux

|

||||

|

||||

In this chapter, we will demonstrate how to use KubeKey to provision a new Kubernetes and KubeSphere cluster based on different infrastructures. Kubekey can help you to quickly build a production-ready cluster architecture on a set of machines from zero to one. It also helps you to easily scale the cluster and install pluggable components on existing architecture.

|

||||

|

||||

## Most Popular Pages

|

||||

|

||||

Below you will find some of the most common and helpful pages from this chapter. We highly recommend you to review them at first.

|

||||

|

||||

{{< popularPage icon="/images/docs/bitmap.jpg" title="Install KubeSphere on AWS EC2" description="Provisioning a new Kubernetes and KubeSphere cluster based on AWS" link="" >}}

|

||||

|

||||

{{< popularPage icon="/images/docs/bitmap.jpg" title="Install KubeSphere on AWS EC2" description="Provisioning a new Kubernetes and KubeSphere cluster based on AWS" link="" >}}

|

||||

TBD

|

||||

|

|

|

|||

|

|

@ -0,0 +1,162 @@

|

|||

---

|

||||

title: "App Lifecycle Management"

|

||||

keywords: 'kubernetes, kubesphere, app-store'

|

||||

description: 'App Lifecycle Management'

|

||||

|

||||

|

||||

weight: 2240

|

||||

---

|

||||

|

||||

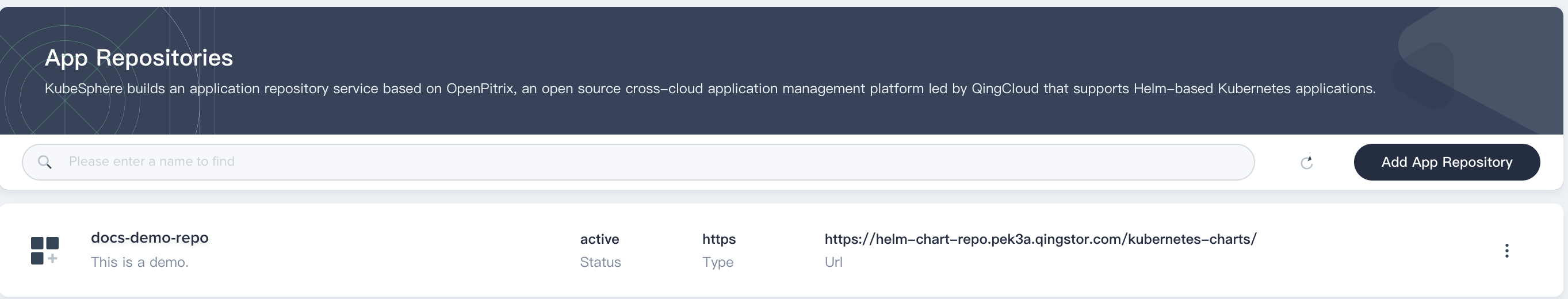

KubeSphere integrates open-source project [OpenPitrix](https://github.com/openpitrix/openpitrix) to set up the App Store which provide the full lifecycle of application management. App Store supports two kinds of application deployment as follows:

|

||||

|

||||

> - **Application template** provides a way for developers and ISVs to share applications with users in a workspace. It also supports importing third-party application repositories within workspace.

|

||||

> - **Composing application** means users can quickly compose multiple microservices into a complete application through the one-stop console.

|

||||

|

||||

|

||||

|

||||

## Objective

|

||||

|

||||

In this tutorial, we will walk you through how to use [EMQ X](https://www.emqx.io/) as a demo application to demonstrate the **global application store** and **application lifecycle management** including upload / submit / review / test / release / upgrade / delete application templates.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- You need to install [App Store (OpenPitrix)](../../pluggable-components/app-store).

|

||||

- You need to create a workspace and a project, see [Create Workspace, Project, Account and Role](../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Create Customized Role and Account

|

||||

|

||||

In this step, we will create two accounts, i.e., `isv` for ISVs and `reviewer` for app technical reviewers.

|

||||

|

||||

1.1. First of all, we need to create a role for app reviewers. Log in KubeSphere console with the account `admin`, go to **Platform → Access Control → Account Roles**, then click **Create** and name it `app-review`, choose **App Templates Management** and **App Templates View** in the authorization settings list, then click **Create**.

|

||||

|

||||

|

||||

|

||||

1.2. Create an account `reviewer`, and grant the role of **app-review** to it.

|

||||

|

||||

1.3. Similarly, create an account `isv`, and grant the role of **platform-regular** to it.

|

||||

|

||||

|

||||

|

||||

1.4. Invite the accounts that we created above to an existing workspace such as `demo-workspace`, and grant them the role of `workspace-admin`.

|

||||

|

||||

### Step 2: Upload and Submit Application

|

||||

|

||||

2.1. Log in KubeSphere with `isv`, enter the workspace. We are going to upload the EMQ X app to this workspace. First please download [EMQ X chart v1.0.0](https://github.com/kubesphere/tutorial/raw/master/tutorial%205%20-%20app-store/emqx-v1.0.0-rc.1.tgz) and click **Upload Template** by choosing **App Templates**.

|

||||

|

||||

> Note we are going to upload a newer version of EMQ X to demo the upgrade feature later on.

|

||||

|

||||

|

||||

|

||||

2.2. Click **Upload**, then click **Upload Helm Chart Package** to upload the chart.

|

||||

|

||||

|

||||

|

||||

2.3. Click **OK**. Now download [EMQ Icon](https://github.com/kubesphere/tutorial/raw/master/tutorial%205%20-%20app-store/emqx-logo.png) and click **Upload icon** to upload App logo. Click **OK** when you are done.

|

||||

|

||||

|

||||

|

||||

2.4. At this point, you will be able to see the status displays `draft`, which means this app is under developing. The uploaded app is visible for all members in the same workspace.

|

||||

|

||||

|

||||

|

||||

2.5. Enter app template detailed page by clicking on EMQ X from the list. You can edit the basic information of this app by clicking **Edit Info**.

|

||||

|

||||

|

||||

|

||||

2.6. You can customize the app's basic information by filling in the table as the following screenshot.

|

||||

|

||||

|

||||

|

||||

2.7. Save your changes, then you can test this application by deploying to Kubernetes. Click on the **Test Deploy** button.

|

||||

|

||||

|

||||

|

||||

2.8. Select cluster and project that you want to deploy into, check app config then click **Deploy**.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

2.9. Wait for a few minutes, then switch to the tab **Deployed Instances**. You will find EMQ X App has been deployed successfully.

|

||||

|

||||

|

||||

|

||||

2.10. At this point, you can click `Submit Review` to submit this application to `reviewer`.

|

||||

|

||||

|

||||

|

||||

2.11. As shown in the following graph, the app status has been changed to `Submitted`. Now app reviewer can review it.

|

||||

|

||||

|

||||

|

||||

### Step 3: Review Application

|

||||

|

||||

3.1. Log out, then use `reviewer` account to log in KubeSphere. Navigate to **Platform → App Store Management → App Review**.

|

||||

|

||||

|

||||

|

||||

3.2. Click **Review** by clicking the vertical three dots at the end of app item in the list, then you start to review the app's basic information, introduction, chart file and updated logs from the pop-up windows.

|

||||

|

||||

|

||||

|

||||

3.3. It is the reviewer's responsibility to judge if the app satisfies the criteria of the Global App Store or not, if yes, then click `Pass`; otherwise, `Reject` it.

|

||||

|

||||

### Step 4: Release Application to Store

|

||||

|

||||

4.1. Log out and switch to use `isv` to log in KubeSphere. Now `isv` can release the EMQ X application to the global application store which means all users in this platform can find and deploy this application.

|

||||

|

||||

4.2. Enter the demo workspace and navigate to the EMQ X app from the template list. Enter the detailed page and expand the version list, then click **Release to Store**, choose **OK** in the pop-up windows.

|

||||

|

||||

|

||||

|

||||

4.3. At this point, EMQ X has been released to application store.

|

||||

|

||||

|

||||

|

||||

4.4. Go to **App Store** in the top menu, you will see the app in the list.

|

||||

|

||||

|

||||

|

||||

4.5. At this point, we can use any role of users to access EMQ X application. Click into the application detailed page to go through its basic information. You can click **Deploy** button to deploy the application to Kubernetes.

|

||||

|

||||

|

||||

|

||||

### Step 5: Create Application Category

|

||||

|

||||

Depending on the business needs, `Reviewer` can create multiple categories for different types of applications. It is similar as tag and can be used in application store to filter applications, e.g. Big data, Middleware, IoT, etc.

|

||||

|

||||

As for EMQ X application, we can create a category and name it `IOT`. First switch back to the user `Reviewer`, go to **Platform → App Store Management → App Categories**

|

||||

|

||||

|

||||

|

||||

Then click **Uncategorized** and find EMQ X, change its category to `IOT` and save it.

|

||||

|

||||

> Note usually reviewer should create necessary categories in advance according to the requirements of the store. Then ISVs categorize their applications as appropriate before submitting for review.

|

||||

|

||||

|

||||

|

||||

### Step 6: Add New Version

|

||||

|

||||

6.1. KubeSphere supports adding new versions of existing applications for users to quickly upgrade. Let's continue to use `isv` account and enter the EMQ X template page in the workspace.

|

||||

|

||||

|

||||

|

||||

6.2. Download [EMQ X v4.0.2](https://github.com/kubesphere/tutorial/raw/master/tutorial%205%20-%20app-store/emqx-v4.0.2.tgz), then click on the **New Version** on the right, upload the package that you just downloaded.

|

||||

|

||||

|

||||

|

||||

6.3. Click **OK** when you upload successfully.

|

||||

|

||||

|

||||

|

||||

6.4. At this point, you can test the new version and submit it to `Reviewer`. This process is similar to the one for the first version.

|

||||

|

||||

|

||||

|

||||

6.5. After you submit the new version, the rest of process regarding review and release are also similar to the first version that we demonstrated above.

|

||||

|

||||

### Step 7: Upgrade

|

||||

|

||||

After the new version has been released to application store, all users can upgrade from this application.

|

||||

|

|

@ -0,0 +1,42 @@

|

|||

---

|

||||

title: "Memcached App"

|

||||

keywords: 'Kubernetes, KubeSphere, Memcached, app-store'

|

||||

description: 'How to use built-in Memcached Object Storage'

|

||||

|

||||

|

||||

weight: 2242

|

||||

---

|

||||

[Memcached](https://memcached.org/) is designed for large data caches. Its API is available for most popular languages. This guide will show you one-click deployment for Memcached in Kubenetes .

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- You have enabled [KubeSphere App Store](../../pluggable-components/app-store)

|

||||

- You have completed the tutorial in [Create Workspace, Project, Account and Role](../../quick-start/create-workspace-and-project/). Now switch to use `project-regular` account to log in and enter into `demo-peoject`.

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Common steps

|

||||

|

||||

1. Choose Memcached template `From App Store`.

|

||||

|

||||

|

||||

|

||||

2. Check app info and click `Deploy` button.

|

||||

|

||||

|

||||

|

||||

3. Select app version and deployment location, then go to **Next → Deploy**

|

||||

|

||||

|

||||

|

||||

4. Wait for a few minutes, then you will see the application memcached showing active on the application list.

|

||||

|

||||

|

||||

|

||||

5. Click into Memcached application, and then enter into its workload page and get the pod IP.

|

||||

|

||||

|

||||

|

||||

6. Because Memcached service type is headless, we should connect it inside cluster with pod IP got previously and default port `11211`.

|

||||

|

||||

|

||||

|

|

@ -0,0 +1,50 @@

|

|||

---

|

||||

title: "PostgreSQL App"

|

||||

keywords: 'Kubernetes, KubeSphere, PostgreSQL, app-store'

|

||||

description: 'How to use built-in PostgreSQL'

|

||||

|

||||

|

||||

weight: 2242

|

||||

---

|

||||

[PostgreSQL](https://www.postgresql.org/) is a powerful, open source object-relational database system which is famous for reliability, feature robustness, and performance. This guide will show you one-click deployment for PostgreSQL in Kubenetes .

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- You have enabled [KubeSphere App Store](../../pluggable-components/app-store)

|

||||

- You have completed the tutorial in [Create Workspace, Project, Account and Role](../../quick-start/create-workspace-and-project/). Now switch to use `project-regular` account to log in and enter into `demo-peoject`.

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Common steps

|

||||

|

||||

1. Choose PostgreSQL template `From App Store`.

|

||||

|

||||

|

||||

|

||||

2. Check app info and click `Deploy` button.

|

||||

|

||||

|

||||

|

||||

3. Select app version and deployment location, then go to **Next → Deploy**

|

||||

|

||||

|

||||

|

||||

4. Wait for a few minutes, then you will see the application postgresql showing active on the application list.

|

||||

|

||||

|

||||

|

||||

5. Click into PostgreSQL application, and then enter into its service page.

|

||||

|

||||

|

||||

|

||||

6. In this page, make sure its deployment and Pod are running, then click **More → Edit Internet Access**, and select **NodePort** in the dropdown list, click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

7.Go to **App Template → Configuration Files** and get rootUsername and rootPassword from `values.yaml`.

|

||||

|

||||

|

||||

|

||||

8. In this step, we can connect PostgreSQL db outside cluster using host: ${Node IP}, port: ${NODEPORT}, with the rootUsername and rootPassword we got previously.

|

||||

|

||||

|

||||

|

|

@ -6,59 +6,78 @@ description: 'How to deploy RabbitMQ on KubeSphere through App Store'

|

|||

link title: "Deploy RabbitMQ"

|

||||

weight: 251

|

||||

---

|

||||

[RabbitMQ](https://www.rabbitmq.com/) is the most widely deployed open source message broker. and it's lightweight and easy to deploy on premises and in the cloud. It supports multiple messaging protocols. RabbitMQ can be deployed in distributed and federated configurations to meet high-scale, high-availability requirements.

|

||||

This tutorial walks you through an example of how to deploy RabbitMQ on KubeSphere.

|

||||

[RabbitMQ](https://www.rabbitmq.com/) is the most widely deployed open-source message broker. It is lightweight and easy to deploy on premises and in the cloud. It supports multiple messaging protocols. RabbitMQ can be deployed in distributed and federated configurations to meet high-scale, high-availability requirements.

|

||||

|

||||

This tutorial walks you through an example of how to deploy RabbitMQ from the App Store of KubeSphere.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](https://kubesphere.io/docs/pluggable-components/app-store/). RabbitMQ will be deployed from the App Store.

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-operator` and work in the project `test-project` in the workspace `test-workspace`.

|

||||

- Please make sure you [enable the OpenPitrix system](https://kubesphere.io/docs/pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy RabbitMQ from App Store

|

||||

|

||||

Please make sure you are landing on the **Overview** page of the project `test-project`.

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

|

||||

|

||||

1. Go to **App Store**.

|

||||

|

||||

|

||||

|

||||

2. Find RabbitMQ and click **Deploy** on the **App Info** page.

|

||||

|

||||

2. Find **RabbitMQ** and click **Deploy**.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

3. Set a name and select an app version. Make sure RabbitMQ is deployed in `demo-project` and click **Next**.

|

||||

|

||||

3. Make sure RabbitMQ is deployed in `test-project` and click **Next**.

|

||||

|

||||

|

||||

|

||||

4. In **App Config**, you can use the default configuration directly or customize the configuration either by specifying fields in a form or editing the YAML file. Record the value of **Root Username** and the value of **Root Password**, which will be used later for login. Click **Deploy** to continue.

|

||||

|

||||

4. Use the default configuration or change the account and password as you want. then click **Deploy**.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

{{< notice tip >}}

|

||||

|

||||

To see the manifest file, toggle the **YAML** switch.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

5. Wait until RabbitMQ is up and running.

|

||||

|

||||

|

||||

|

||||

|

||||

### Step 2: Access RabbitMQ Dashboard

|

||||

|

||||

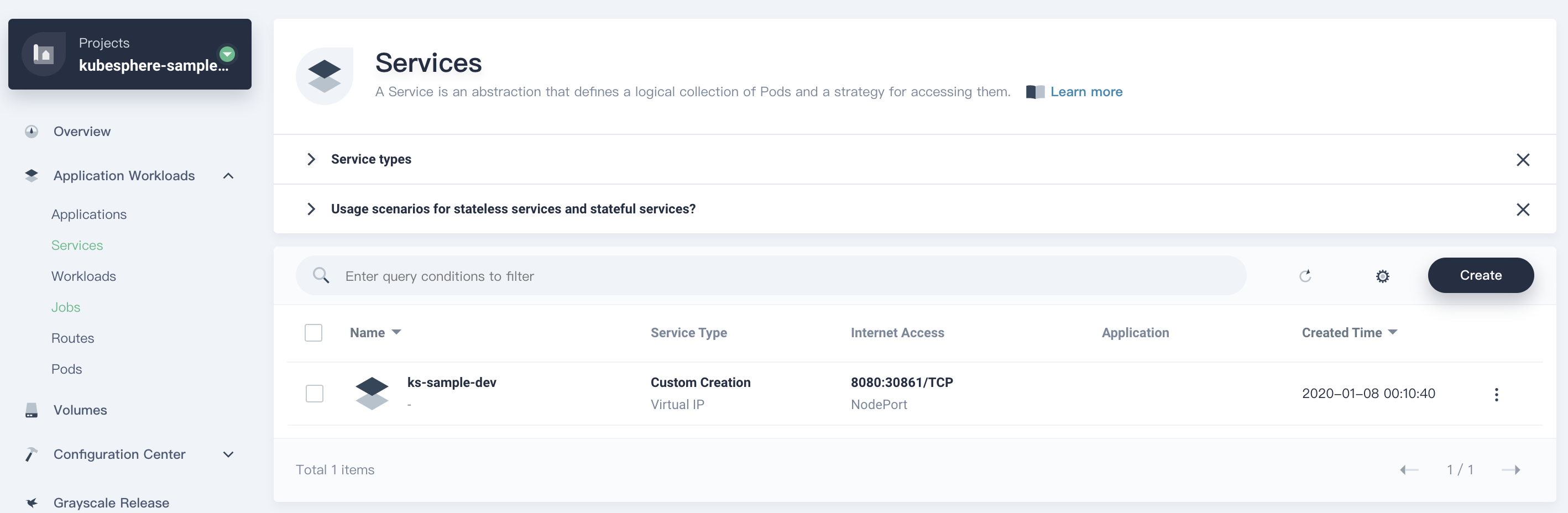

1. Go to **Services**.and click **rabbiitmq-service-name**.

|

||||

To access RabbitMQ outside the cluster, you need to expose the app through NodePort first.

|

||||

|

||||

|

||||

1. Go to **Services** and click the service name of RabbitMQ.

|

||||

|

||||

2. Click **More** and click **Edit Internet Access**.

|

||||

|

||||

|

||||

|

||||

2. Click **More** and select **Edit Internet Access** from the drop-down menu.

|

||||

|

||||

3. Select **NodePort** and click **Ok**. [Learn More](https://v2-1.docs.kubesphere.io/docs/project-setting/project-gateway/)

|

||||

|

||||

|

||||

|

||||

4. Through <font color=green>{$NodeIP} : {$Nodeport}</font> to access RabbitMQ management.

|

||||

|

||||

3. Select **NodePort** for **Access Method** and click **OK**. For more information, see [Project Gateway](../../../project-administration/project-gateway/).

|

||||

|

||||

5. Log in RabbitMQ management.

|

||||

|

||||

|

||||

|

||||

6. If you want to learn more information about RabbitMQ please refer to https://www.rabbitmq.com/documentation.html.

|

||||

4. Under **Service Ports**, you can see ports are exposed.

|

||||

|

||||

|

||||

|

||||

5. Access RabbitMQ **management** through `{$NodeIP}:{$Nodeport}`. Note that the username and password are those you set in **Step 1**.

|

||||

|

||||

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

You may need to open the port in your security groups and configure related port forwarding rules depending on your where your Kubernetes cluster is deployed.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

6. For more information about RabbitMQ, refer to [the official documentation of RabbitMQ](https://www.rabbitmq.com/documentation.html).

|

||||

|

|

@ -6,67 +6,83 @@ description: 'How to deploy Tomcat on KubeSphere through App Store'

|

|||

link title: "Deploy Tomcat"

|

||||

weight: 261

|

||||

---

|

||||

[Apache Tomcat](https://tomcat.apache.org/index.html) software powers numerous large-scale, mission-critical web applications across a diverse range of industries and organizations.

|

||||

This tutorial walks you through an example of how to deploy Tomcat on KubeSphere.

|

||||

[Apache Tomcat](https://tomcat.apache.org/index.html) powers numerous large-scale, mission-critical web applications across a diverse range of industries and organizations. Tomcat provides a pure Java HTTP web server environment in which Java code can run.

|

||||

|

||||

This tutorial walks you through an example of deploying Tomcat from the App Store of KubeSphere.

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- Please make sure you [enable the OpenPitrix system](https://kubesphere.io/docs/pluggable-components/app-store/). Tomcat will be deployed from the App Store.

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-operator` and work in the project `test-project` in the workspace `test-workspace`.

|

||||

- Please make sure you [enable the OpenPitrix system](https://kubesphere.io/docs/pluggable-components/app-store/).

|

||||

- You need to create a workspace, a project, and a user account for this tutorial. The account needs to be a platform regular user and to be invited as the project operator with the `operator` role. In this tutorial, you log in as `project-regular` and work in the project `demo-project` in the workspace `demo-workspace`. For more information, see [Create Workspace, Project, Account and Role](../../../quick-start/create-workspace-and-project/).

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Deploy Tomcat from App Store

|

||||

|

||||

Please make sure you are landing on the **Overview** page of the project `test-project`.

|

||||

1. On the **Overview** page of the project `demo-project`, click **App Store** in the top left corner.

|

||||

|

||||

1. Go to **App Store**.

|

||||

|

||||

|

||||

|

||||

2. Find Tomcat and click **Deploy** on the **App Info** page.

|

||||

|

||||

2. Find **Tomcat** and click **Deploy**.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

3. Set a name and select an app version. Make sure Tomcat is deployed in `demo-project` and click **Next**.

|

||||

|

||||

3. Make sure Tomcat is deployed in `test-project` and click **Next**.

|

||||

|

||||

|

||||

|

||||

4. In **App Config**, you can use the default configuration or customize the configuration by editing the YAML file directly. Click **Deploy** to continue.

|

||||

|

||||

4. Use the default configuration and click **Deploy**.

|

||||

|

||||

|

||||

|

||||

|

||||

5. Wait until Tomcat is up and running.

|

||||

|

||||

|

||||

|

||||

|

||||

### Step 2: Access Tomcat Terminal

|

||||

|

||||

1. Go to **Services** and click **tomcat-service-name**.

|

||||

1. Go to **Services** and click the service name of Tomcat.

|

||||

|

||||

|

||||

|

||||

|

||||

2. Expand pods information and click **terminal**. You can now use the feature.

|

||||

|

||||

|

||||

2. Under **Pods**, expand the menu to see container details, and then click the **Terminal** icon.

|

||||

|

||||

3. You can view the deployed projects in `/usr/local/tomcat/webapps`.

|

||||

|

||||

|

||||

|

||||

### Step 3: Access the Tomcat project in the browser

|

||||

3. You can view deployed projects in `/usr/local/tomcat/webapps`.

|

||||

|

||||

1. Go to **Services** and click **tomcat-service-name**.

|

||||

|

||||

|

||||

2. Click **More** and click **Edit Internet Access**.

|

||||

|

||||

### Step 3: Access Tomcat Project from Browser

|

||||

|

||||

3. Select **NodePort** and click **Ok**. [Learn More](https://v2-1.docs.kubesphere.io/docs/project-setting/project-gateway/)

|

||||

|

||||

To access Tomcat projects outside the cluster, you need to expose the app through NodePort first.

|

||||

|

||||

4. Through <font color=green>{$NodeIP} : {$Nodeport} / {$Project path}</font> to access the tomcat project in browser.

|

||||

|

||||

|

||||

1. Go to **Services** and click the service name of Tomcat.

|

||||

|

||||

5. If you want to learn more information about Tomcat please refer to https://tomcat.apache.org/index.html.

|

||||

|

||||

|

||||

2. Click **More** and select **Edit Internet Access** from the drop-down menu.

|

||||

|

||||

|

||||

|

||||

3. Select **NodePort** for **Access Method** and click **OK**. For more information, see [Project Gateway](https://deploy-preview-492--kubesphere-v3.netlify.app/docs/project-administration/project-gateway/).

|

||||

|

||||

|

||||

|

||||

4. Under **Service Ports**, you can see the port is exposed.

|

||||

|

||||

|

||||

|

||||

5. Access the sample Tomcat project through `{$NodeIP}:{$Nodeport}` in your browser.

|

||||

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

|

||||

You may need to open the port in your security groups and configure related port forwarding rules depending on your where your Kubernetes cluster is deployed.

|

||||

|

||||

{{</ notice >}}

|

||||

|

||||

6. For more information about Tomcat, refer to [the official documentation of Tomcat](https://tomcat.apache.org/index.html).

|

||||

|

|

@ -13,15 +13,19 @@ icon: "/images/docs/docs.svg"

|

|||

|

||||

In KubeSphere, you set a cluster's configuration and configure its features using the interactive web console or the built-in native command-line tool kubectl. As a cluster administrator, you are responsible for a series of tasks, including cordoning and adding labels to nodes, controlling cluster visibility, monitoring cluster status, setting cluster-wide alerting and notification rules, as well as configuring storage and log collection solutions.

|

||||

|

||||

{{< notice note >}}

|

||||

{{< notice note >}}

|

||||

|

||||

Multi-cluster management is not covered in this chapter. For more information about this feature, see [Multi-cluster Management](../multicluster-management/).

|

||||

|

||||

{{</ notice >}}

|

||||

{{</ notice >}}

|

||||

|

||||

## [Persistent Volume and Storage Class](../cluster-administration/persistent-volume-and-storage-class/)

|

||||

|

||||

Learn basic concepts of PVs, PVCs and storage classes, and demonstrates how to manage storage classes and PVCs in KubeSphere.

|

||||

|

||||

## [Node Management](../cluster-administration/nodes/)

|

||||

|

||||

Monitor node status and learn how to add node label or taints.

|

||||

Monitor node status and learn how to add node label or taints.

|

||||

|

||||

## [Cluster Status Monitoring](../cluster-administration/cluster-status-monitoring/)

|

||||

|

||||

|

|

@ -29,7 +33,7 @@ Monitor how a cluster is functioning based on different metrics, including physi

|

|||

|

||||

## [Application Resources Monitoring](../cluster-administration/application-resources-monitoring/)

|

||||

|

||||

Monitor application resources across the cluster, such as the number of Deployments and CPU usage of different projects.

|

||||

Monitor application resources across the cluster, such as the number of Deployments and CPU usage of different projects.

|

||||

|

||||

## Cluster-wide Alerting and Notification

|

||||

|

||||

|

|

@ -73,3 +77,10 @@ Learn how to add Fluentd to receive logs, events or auditing logs.

|

|||

|

||||

Customize your email address settings to receive notifications of any alert.

|

||||

|

||||

## [Customizing Platform Information](../cluster-administration/platform-settings/customize-basic-information/)

|

||||

|

||||

Customize platform settings such as logo, title etc.

|

||||

|

||||

## [Cluster Shutdown and Restart](../cluster-administration/shuting-down-and-restart-cluster-cracefully/)

|

||||

|

||||

Learn how to gracefully shutting down your cluster and how to restart it.

|

||||

|

|

|

|||

|

|

@ -9,7 +9,7 @@ weight: 400

|

|||

|

||||

In addition to monitoring data at the physical resource level, cluster administrators also need to keep a close track of application resources across the platform, such as the number of projects and DevOps projects, as well as the number of workloads and services of a specific type. Application resource monitoring provides a summary of resource usage and application-level trends of the platform.

|

||||

|

||||

## Prerequisites

|

||||

## Prerequisites

|

||||

|

||||

You need an account granted a role including the authorization of **Clusters Management**. For example, you can log in the console as `admin` directly or create a new role with the authorization and assign it to an account.

|

||||

|

||||

|

|

@ -17,25 +17,26 @@ You need an account granted a role including the authorization of **Clusters Man

|

|||

|

||||

1. Click **Platform** in the top left corner and select **Clusters Management**.

|

||||

|

||||

|

||||

|

||||

|

||||

2. If you have enabled the [multi-cluster feature](../../multicluster-management) with member clusters imported, you can select a specific cluster to view its application resources. If you have not enabled the feature, refer to the next step directly.

|

||||

|

||||

|

||||

|

||||

|

||||

3. Choose **Application Resources** under **Monitoring & Alerting** to see the overview of application resource monitoring, including the summary of the usage of all resources in the cluster, as shown in the following figure.

|

||||

|

||||

|

||||

|

||||

|

||||

4. Among them, **Cluster Resources Usage** and **Application Resources Usage** retain the monitoring data of the last 7 days and support custom time range queries.

|

||||

|

||||

|

||||

|

||||

|

||||

5. Click a specific resource to view detailed usage and trends of it during a certain time period, such as **CPU** under **Cluster Resources Usage**. The detail page allows you to view specific monitoring data by project. The highly-interactive dashboard enables users to customize the time range, displaying the exact resource usage at a given time point.

|

||||

|

||||

|

||||

|

||||

|

||||

## Usage Ranking

|

||||

|

||||

**Usage Ranking** supports the sorting of project resource usage, so that platform administrators can understand the resource usage of each project in the current cluster, including **CPU Usage**, **Memory Usage**, **Pod Count**, as well as **Outbound Traffic** and **Inbound Traffic**. You can sort projects in ascending or descending order by one of the indicators in the drop-down list.

|

||||

|

||||

**Usage Ranking** supports the sorting of project resource usage, so that platform administrators can understand the resource usage of each project in the current cluster, including **CPU Usage**, **Memory Usage**, **Pod Count**, as well as **Outbound Traffic** and **Inbound Traffic**. You can sort projects in ascending or descending order by one of the indicators in the drop-down list. This feature is very useful for quickly locating your application (Pod) that is consuming heavy CPU or memory.

|

||||

|

||||

|

||||

|

|

|

|||

|

|

@ -15,23 +15,25 @@ Before adding a log receiver, you need to enable any of the `logging`, `events`

|

|||

|

||||

1. To add a log receiver:

|

||||

|

||||

- Login KubeSphere with an account of ***platform-admin*** role

|

||||

- Click ***Platform*** -> ***Clusters Management***

|

||||

- Select a cluster if multiple clusters exist

|

||||

- Click ***Cluster Settings*** -> ***Log Collections***

|

||||

- Log receivers can be added by clicking ***Add Log Collector***

|

||||

- Login KubeSphere with an account of ***platform-admin*** role

|

||||

- Click ***Platform*** -> ***Clusters Management***

|

||||

- Select a cluster if multiple clusters exist

|

||||

- Click ***Cluster Settings*** -> ***Log Collections***

|

||||

- Log receivers can be added by clicking ***Add Log Collector***

|

||||

|

||||

|

||||

|

||||

|

||||

2. Choose ***Elasticsearch*** and fill in the Elasticsearch service address and port like below:

|

||||

|

||||

|

||||

|

||||

|

||||

3. Elasticsearch appears in the receiver list of ***Log Collections*** page and its status becomes ***Collecting***.

|

||||

|

||||

|

||||

|

||||

|

||||

4. Verify whether Elasticsearch is receiving logs sent from Fluent Bit:

|

||||

|

||||

- Click ***Log Search*** in the ***Toolbox*** in the bottom right corner.

|

||||

- You can search logs in the logging console that appears.

|

||||

- Click ***Log Search*** in the ***Toolbox*** in the bottom right corner.

|

||||

- You can search logs in the logging console that appears.

|

||||

|

||||

You can read [Log Query](../../../../toolbox/log-query/) to learn how to use the tool.

|

||||

|

|

|

|||

|

|

@ -125,31 +125,30 @@ EOF

|

|||

|

||||

1. To add a log receiver:

|

||||

|

||||

- Login KubeSphere with an account of ***platform-admin*** role

|

||||

- Click ***Platform*** -> ***Clusters Management***

|

||||

- Select a cluster if multiple clusters exist

|

||||

- Click ***Cluster Settings*** -> ***Log Collections***

|

||||

- Log receivers can be added by clicking ***Add Log Collector***

|

||||

- Login KubeSphere with an account of ***platform-admin*** role

|

||||

- Click ***Platform*** -> ***Clusters Management***

|

||||

- Select a cluster if multiple clusters exist

|

||||

- Click ***Cluster Settings*** -> ***Log Collections***

|

||||

- Log receivers can be added by clicking ***Add Log Collector***

|

||||

|

||||

|

||||

|

||||

|

||||

2. Choose ***Fluentd*** and fill in the Fluentd service address and port like below:

|

||||

|

||||

|

||||

|

||||

|

||||

3. Fluentd appears in the receiver list of ***Log Collections*** UI and its status shows ***Collecting***.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

4. Verify whether Fluentd is receiving logs sent from Fluent Bit:

|

||||

|

||||

- Click ***Application Workloads*** in the ***Cluster Management*** UI.

|

||||

- Select ***Workloads*** and then select the `default` namespace in the ***Workload*** - ***Deployments*** tab

|

||||

- Click the ***fluentd*** item and then click the ***fluentd-xxxxxxxxx-xxxxx*** pod

|

||||

- Click the ***fluentd*** container

|

||||

- In the ***fluentd*** container page, select the ***Container Logs*** tab

|

||||

- Click ***Application Workloads*** in the ***Cluster Management*** UI.

|

||||

- Select ***Workloads*** and then select the `default` namespace in the ***Workload*** - ***Deployments*** tab

|

||||

- Click the ***fluentd*** item and then click the ***fluentd-xxxxxxxxx-xxxxx*** pod

|

||||

- Click the ***fluentd*** container

|

||||

- In the ***fluentd*** container page, select the ***Container Logs*** tab

|

||||

|

||||

You'll see logs begin to scroll up continuously.

|

||||

You'll see logs begin to scroll up continuously.