Mirror of https://github.com/kubesphere/website.git (synced 2025-12-30 00:52:47 +00:00)

Update overview of install on linux introduction

Signed-off-by: Ray Zhou <ray@yunify.com>

Parent: d13651fbba

Commit: 6744ca08d0

@@ -18,7 +18,7 @@ Users are provided with multiple installation options. Please note not all optio

- [Air-gapped Installation on Linux](../air-gapped-installation): All images of KubeSphere are packaged together, which makes air-gapped installation on Linux machines convenient.
- [High Availability Installation](../ha-configuration/): Install high-availability KubeSphere on multiple nodes for production environments.
- Minimal Packages: Only install the minimum required system components of KubeSphere. The minimum resource requirements are:
  - 2vCPUs
  - 2 CPUs
  - 4GB RAM
  - 40GB Storage
- [Full Packages](../../../pluggable-components/): Install all available system components of KubeSphere, such as DevOps, service mesh, and alerting.

@@ -27,7 +27,7 @@ If you have existing Kubernetes clusters, see [Overview of Installing on Kuberne

## Before Installation

- As images will be pulled and operating systems will be downloaded from the Internet, your environment must have Internet access. Otherwise, you need to use the air-gapped installer instead.
- As images will be downloaded from the Internet, your environment must have Internet access. Otherwise, you need to use the air-gapped installer instead.
- For all-in-one installation, the single node is both the master and the worker.
- For multi-node installation, you need to specify the node roles in the configuration file before installation.
- Your Linux host must have OpenSSH Server installed.

@@ -0,0 +1,180 @@

---
title: "Persistent Volume and Storage Class"
keywords: "storage, volume, pv, pvc, storage class, csi, Ceph RBD, Glusterfs, QingCloud"
description: "Persistent Volume and Storage Class Management"
linkTitle: "Persistent Volume and Storage Class"
weight: 100
---

This tutorial describes the basic concepts of PVs, PVCs and storage classes, and demonstrates how a cluster administrator can manage storage classes and persistent volumes in KubeSphere.

## Introduction

A PersistentVolume (PV) is a piece of storage in the cluster that has been provisioned by an administrator or dynamically provisioned using storage classes. PVs are volume plugins like Volumes, but have a lifecycle independent of any individual Pod that uses the PV. PVs can be provisioned either [statically](https://kubernetes.io/docs/concepts/storage/persistent-volumes/#static) or [dynamically](https://kubernetes.io/docs/concepts/storage/persistent-volumes/#dynamic).

A PersistentVolumeClaim (PVC) is a request for storage by a user. It is similar to a Pod: Pods consume node resources and PVCs consume PV resources.
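To make the PV/PVC relationship concrete, the sketch below pairs a statically provisioned PV with a claim that can bind to it. It is an illustration only: the names `demo-pv` and `demo-static-pvc`, the NFS server address `192.168.0.100`, the export path and the 10Gi size are hypothetical placeholders.

```yaml
# Statically provisioned PV backed by a hypothetical NFS export
apiVersion: v1
kind: PersistentVolume
metadata:
  name: demo-pv                 # hypothetical name
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""          # not managed by any storage class
  nfs:
    server: 192.168.0.100       # hypothetical NFS server
    path: /exports/demo         # hypothetical export path
---
# PVC that requests 10Gi and can bind to a matching class-less PV such as demo-pv
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-static-pvc         # hypothetical name
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ""          # empty string disables dynamic provisioning
  resources:
    requests:
      storage: 10Gi
```

Setting `storageClassName: ""` keeps the claim out of dynamic provisioning, so it can only bind to a PV that has no storage class.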

KubeSphere supports [dynamic volume provisioning](https://kubernetes.io/docs/concepts/storage/dynamic-provisioning/) based on storage classes to create PVs.

A [StorageClass](https://kubernetes.io/docs/concepts/storage/storage-classes) provides a way for administrators to describe the classes of storage they offer. Different classes might map to quality-of-service levels, backup policies, or arbitrary policies determined by the cluster administrators. Each StorageClass has a provisioner that determines which volume plugin is used for provisioning PVs. This field must be specified. For the value to use, read [the official Kubernetes documentation](https://kubernetes.io/docs/concepts/storage/storage-classes/#provisioner) or check with your storage administrator.

The table below summarizes common volume plugins for various provisioners (storage systems).

| Type | Description |
| -------------------- | ------------------------------------------------------------ |
| In-tree | Built-in and run as part of Kubernetes, such as [RBD](https://kubernetes.io/docs/concepts/storage/storage-classes/#ceph-rbd) and [Glusterfs](https://kubernetes.io/docs/concepts/storage/storage-classes/#glusterfs). For more plugins of this kind, see [Provisioner](https://kubernetes.io/docs/concepts/storage/storage-classes/#provisioner). |
| External-provisioner | Deployed independently from Kubernetes, but works like an in-tree plugin, such as [nfs-client](https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client). For more plugins of this kind, see [External Storage](https://github.com/kubernetes-retired/external-storage). |
| CSI | Container Storage Interface, a standard for exposing storage resources to workloads on COs (for example, Kubernetes), such as [QingCloud-csi](https://github.com/yunify/qingcloud-csi) and [Ceph-CSI](https://github.com/ceph/ceph-csi). For more plugins of this kind, see [Drivers](https://kubernetes-csi.github.io/docs/drivers.html). |

## Prerequisites

You need an account granted a role that includes the **Clusters Management** authorization. For example, you can log in to the console as `admin` directly, or create a new role with the authorization and assign it to an account.

## Manage Storage Class

1. Click **Platform** in the top left corner and select **Clusters Management**.

2. If you have enabled the [multi-cluster feature](../../multicluster-management) with member clusters imported, you can select a specific cluster. If you have not enabled the feature, go to the next step directly.

3. On the **Cluster Management** page, navigate to **Storage Classes** under **Storage**, where you can create, update and delete a storage class.

4. To create a storage class, click **Create** and enter the basic information in the pop-up window. When you finish, click **Next**.

5. In KubeSphere, you can create storage classes for `QingCloud-CSI`, `Glusterfs` and `Ceph RBD` directly. You can also create customized storage classes for other storage systems based on your needs. Select a type and click **Next**.

### Common Settings

Some settings are commonly used and shared among storage classes. You can find them as dashboard properties on the console, and they are also indicated by fields or annotations in the StorageClass manifest. You can see the manifest file in YAML format by enabling **Edit Mode** in the top right corner.

Here are descriptions of some commonly used properties in KubeSphere.

| Property | Description |
| :---- | :---- |
| Allow Volume Expansion | Specified by `allowVolumeExpansion` in the manifest. When it is set to `true`, PVs can be configured to be expandable. For more information, see [Allow Volume Expansion](https://kubernetes.io/docs/concepts/storage/storage-classes/#allow-volume-expansion). |
| Reclaiming Policy | Specified by `reclaimPolicy` in the manifest. It can be set to `Delete` (default) or `Retain`. For more information, see [Reclaim Policy](https://kubernetes.io/docs/concepts/storage/storage-classes/#reclaim-policy). |
| Storage System | Specified by `provisioner` in the manifest. It determines which volume plugin is used for provisioning PVs. For more information, see [Provisioner](https://kubernetes.io/docs/concepts/storage/storage-classes/#provisioner). |
| Supported Access Mode | Specified by `metadata.annotations[storageclass.kubesphere.io/supported-access-modes]` in the manifest. It tells KubeSphere which [access modes](https://kubernetes.io/docs/concepts/storage/persistent-volumes/#access-modes) are supported. |
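As a rough illustration of how the properties in the table map onto a manifest, here is a minimal StorageClass sketch. The class name and the `example.com/demo-provisioner` value are hypothetical, and the JSON-array format of the KubeSphere access-mode annotation is an assumption; compare it with the YAML shown in **Edit Mode** on your console.

```yaml
# Sketch of a StorageClass showing where the common settings live.
# The name and provisioner value are hypothetical placeholders.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: demo-storageclass
  annotations:
    # Supported Access Mode (KubeSphere annotation; JSON-array value format is assumed)
    storageclass.kubesphere.io/supported-access-modes: '["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]'
provisioner: example.com/demo-provisioner   # Storage System (hypothetical value)
reclaimPolicy: Delete                       # Reclaiming Policy: Delete or Retain
allowVolumeExpansion: true                  # Allow Volume Expansion
parameters: {}                              # plugin-specific settings go here
```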

For other settings, you need to provide different information for different storage plugins. In the manifest, these settings are always specified under the `parameters` field. They are described in detail in the sections below. You can also refer to [Parameters](https://kubernetes.io/docs/concepts/storage/storage-classes/#parameters) in the official Kubernetes documentation.

### QingCloud CSI

QingCloud CSI is a CSI plugin on Kubernetes for QingCloud volumes. Storage classes of QingCloud CSI can be created on the KubeSphere console.

#### Prerequisites

- QingCloud CSI can be used on both the public cloud and the private cloud of QingCloud. Therefore, make sure KubeSphere has been installed on either of them so that you can use cloud volumes.
- The QingCloud CSI plugin has been installed on your KubeSphere cluster. See [QingCloud-CSI Installation](https://github.com/yunify/qingcloud-csi#installation) for more information.

#### Settings

| Property | Description |
| :---- | :---- |
| type | On the QingCloud platform, 0 represents high performance volumes, 2 represents high capacity volumes, 3 represents super high performance volumes, 5 represents Enterprise Server SAN, 6 represents NeonSan HDD, 100 represents standard volumes, and 200 represents enterprise SSD. |
| maxSize | The volume size upper limit. |
| stepSize | The volume size increment. |
| minSize | The volume size lower limit. |
| fsType | Filesystem type of the volume: ext3, ext4 (default), or xfs. |
| tags | IDs of QingCloud Tag resources, separated by commas. |

For more storage class parameters, see the [QingCloud-CSI user guide](https://github.com/yunify/qingcloud-csi/blob/master/docs/user-guide.md#set-storage-class).
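A QingCloud CSI storage class might look like the following sketch. The provisioner name `disk.csi.qingcloud.com` is an assumption based on recent qingcloud-csi releases, and the class name and size values are illustrative only; confirm them against the QingCloud-CSI user guide for the version you installed.

```yaml
# Sketch of a QingCloud CSI StorageClass (illustrative values)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: csi-qingcloud                  # hypothetical name
provisioner: disk.csi.qingcloud.com    # assumed driver name; check your qingcloud-csi version
parameters:
  type: "0"          # 0 = high performance volume, per the table above
  maxSize: "500"     # illustrative upper limit
  stepSize: "10"     # illustrative increment
  minSize: "10"      # illustrative lower limit
  fsType: "ext4"
reclaimPolicy: Delete
allowVolumeExpansion: true
```

StorageClass `parameters` values are strings, which is why the numbers are quoted.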

### Glusterfs

Glusterfs is an in-tree storage plugin on Kubernetes, which means you do not need to install an additional volume plugin.

#### Prerequisites

The Glusterfs storage system has already been installed. See [GlusterFS Installation Documentation](https://www.gluster.org/install/) for more information.

#### Settings

| Property | Description |
| :---- | :---- |
| resturl | The URL of the Gluster REST service/Heketi service that provisions Gluster volumes on demand. |
| clusterid | The ID of the cluster that Heketi uses when provisioning the volume. |
| restauthenabled | A Gluster REST service authentication boolean that enables authentication to the REST server. |
| restuser | The Glusterfs REST service/Heketi user who has access to create volumes in the Glusterfs Trusted Pool. |
| secretNamespace, secretName | Identify the Secret instance that contains the user password to use when talking to the Gluster REST service. |
| gidMin, gidMax | The minimum and maximum values of the GID range for the StorageClass. |
| volumetype | The volume type and its parameters can be configured with this optional value. |

For more information about StorageClass parameters, see [Glusterfs in Kubernetes Documentation](https://kubernetes.io/docs/concepts/storage/storage-classes/#glusterfs).
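The sketch below, modeled on the Glusterfs example in the Kubernetes documentation, shows how these settings appear under `parameters`. All values (Heketi URL, cluster ID, user, Secret name and namespace, GID range) are hypothetical placeholders for your own environment.

```yaml
# Sketch of an in-tree Glusterfs StorageClass (all values are placeholders)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: glusterfs                       # hypothetical name
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://192.168.0.20:8080"   # hypothetical Heketi endpoint
  clusterid: "your-heketi-cluster-id"   # hypothetical cluster ID
  restauthenabled: "true"
  restuser: "admin"                     # hypothetical Heketi user
  secretNamespace: "kube-system"        # namespace of the Secret holding the password
  secretName: "heketi-secret"           # hypothetical Secret name
  gidMin: "40000"
  gidMax: "50000"
  volumetype: "replicate:3"             # optional: three-way replicated volumes
```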

### Ceph RBD

Ceph RBD is also an in-tree storage plugin on Kubernetes. The volume plugin is already in Kubernetes, but the storage server must be installed before you create the storage class of Ceph RBD.

As **hyperkube** images have been [deprecated since 1.17](https://github.com/kubernetes/kubernetes/pull/85094), in-tree Ceph RBD may not work without **hyperkube**.

Nevertheless, you can use [rbd provisioner](https://github.com/kubernetes-incubator/external-storage/tree/master/ceph/rbd) as a substitute, whose format is the same as in-tree Ceph RBD. The only different parameter is `provisioner` (i.e. **Storage System** on the KubeSphere console). If you want to use rbd-provisioner, the value of `provisioner` must be `ceph.com/rbd` (input this value in **Storage System** in the image below). If you use in-tree Ceph RBD, the value must be `kubernetes.io/rbd`.

#### Prerequisites

- The Ceph server has already been installed. See [Ceph Installation Documentation](https://docs.ceph.com/en/latest/install/) for more information.
- Install the plugin if you choose to use rbd-provisioner. Community developers provide [charts for rbd provisioner](https://github.com/kubesphere/helm-charts/tree/master/src/test/rbd-provisioner) that you can use to install rbd-provisioner with Helm.

#### Settings

| Property | Description |
| :---- | :---- |
| monitors | The Ceph monitors, comma delimited. |
| adminId | The Ceph client ID that is capable of creating images in the pool. |
| adminSecretName | The Secret name for `adminId`. |
| adminSecretNamespace | The namespace for `adminSecretName`. |
| pool | The Ceph RBD pool. |
| userId | The Ceph client ID that is used to map the RBD image. |
| userSecretName | The name of the Ceph Secret for `userId` to map the RBD image. |
| userSecretNamespace | The namespace for `userSecretName`. |
| fsType | The fsType that is supported by Kubernetes. |
| imageFormat | The Ceph RBD image format, `1` or `2`. |
| imageFeatures | This parameter is optional and should only be used if you set `imageFormat` to `2`. |

For more information about StorageClass parameters, see [Ceph RBD in Kubernetes Documentation](https://kubernetes.io/docs/concepts/storage/storage-classes/#ceph-rbd).
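For illustration, a Ceph RBD storage class using rbd-provisioner might look like the sketch below, which follows the parameter layout of the Kubernetes Ceph RBD example. The monitor address, client IDs, pool name and Secret names are hypothetical; change `provisioner` to `kubernetes.io/rbd` only if you use in-tree Ceph RBD.

```yaml
# Sketch of a Ceph RBD StorageClass served by rbd-provisioner (placeholder values)
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rbd                            # hypothetical name
provisioner: ceph.com/rbd              # kubernetes.io/rbd for in-tree Ceph RBD
parameters:
  monitors: 192.168.0.10:6789          # hypothetical monitor list, comma delimited
  adminId: admin                       # client allowed to create images in the pool
  adminSecretName: ceph-secret         # hypothetical Secret for adminId
  adminSecretNamespace: kube-system
  pool: kube                           # hypothetical RBD pool
  userId: kube                         # client used to map the image
  userSecretName: ceph-secret-user     # hypothetical Secret for userId
  userSecretNamespace: default
  fsType: ext4
  imageFormat: "2"
  imageFeatures: "layering"            # only valid with imageFormat "2"
```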

### Custom Storage Class

You can create custom storage classes for your storage systems if they are not directly supported by KubeSphere. The following example shows you how to create a storage class for NFS on the KubeSphere console.

#### NFS Introduction

NFS (Network File System) is widely used on Kubernetes with the external-provisioner volume plugin [nfs-client](https://github.com/kubernetes-retired/external-storage/tree/master/nfs-client). You can create the storage class of nfs-client by clicking **Custom** in the image below.

#### Prerequisites

- An available NFS server.
- The volume plugin nfs-client has already been installed. Community developers provide [charts for nfs-client](https://github.com/kubesphere/helm-charts/tree/master/src/main/nfs-client-provisioner) that you can use to install nfs-client with Helm.

#### Common Settings

| Property | Description |
| :---- | :---- |
| Storage System | Specified by `provisioner` in the manifest. If you install nfs-client with the [charts for nfs-client](https://github.com/kubesphere/helm-charts/tree/master/src/main/nfs-client-provisioner), it can be `cluster.local/nfs-client-nfs-client-provisioner`. |
| Allow Volume Expansion | Specified by `allowVolumeExpansion` in the manifest. Select `No`. |
| Reclaiming Policy | Specified by `reclaimPolicy` in the manifest. The value is `Delete` by default. |
| Supported Access Mode | Specified by `metadata.annotations[storageclass.kubesphere.io/supported-access-modes]` in the manifest. `ReadWriteOnce`, `ReadOnlyMany` and `ReadWriteMany` are all selected by default. |

#### Parameters

| Key | Description | Value |
| :---- | :---- | :---- |
| archiveOnDelete | Whether to archive the PVC data instead of deleting it when the PVC is deleted | `true` |
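Putting the common settings and the parameter together, the resulting nfs-client storage class might look roughly like this. The class name `nfs-client` is hypothetical, the provisioner value assumes the chart's default naming described above, and the access-mode annotation format is an assumption.

```yaml
# Sketch of a custom nfs-client StorageClass
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client                     # hypothetical name
  annotations:
    # annotation value format assumed; compare with Edit Mode on the console
    storageclass.kubesphere.io/supported-access-modes: '["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]'
provisioner: cluster.local/nfs-client-nfs-client-provisioner
parameters:
  archiveOnDelete: "true"              # keep an archived copy of the data on PVC deletion
reclaimPolicy: Delete
allowVolumeExpansion: false
```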

## Manage Volumes

Once the storage class is created, you can create volumes with it. You can list, create, update and delete volumes in **Volumes** under **Storage** on the KubeSphere console. For more details, see [Volume Management](../../project-user-guide/storage/volumes/).
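If you prefer to work with manifests instead of the console, a PVC that asks a storage class for a dynamically provisioned volume could be sketched as follows. The claim name `demo-pvc`, the namespace, the size, and the storage class name `nfs-client` are hypothetical; use the name of a storage class that exists in your cluster.

```yaml
# PVC that triggers dynamic provisioning through a named storage class
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc                 # hypothetical name
  namespace: default             # hypothetical namespace
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: nfs-client   # hypothetical; must match an existing storage class
  resources:
    requests:
      storage: 5Gi
```

Once the claim is created, the storage class's provisioner creates a matching PV and binds it to the claim.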

@@ -0,0 +1,192 @@

---
title: "High Availability Configurations"
keywords: 'KubeSphere, Kubernetes, HA, high availability, installation, configuration'
description: 'How to configure a high-availability Kubernetes cluster.'
linkTitle: "High Availability Configurations"
weight: 2130
---

You can set up a single-master Kubernetes cluster with KubeSphere installed based on the tutorial of [Multi-node Installation](../multioverview/). Single-master clusters may be sufficient for development and testing in most cases. For a production environment, however, you need to consider the high availability of the cluster. If key components (for example, kube-apiserver, kube-scheduler, and kube-controller-manager) are all running on the same master node, Kubernetes and KubeSphere will be unavailable once the master node goes down. Therefore, you need to set up a high-availability cluster by provisioning load balancers with multiple master nodes. You can use any cloud load balancer or hardware load balancer (for example, F5). Alternatively, you can use Keepalived with [HAProxy](https://www.haproxy.com/), or Nginx, to create high-availability clusters.

This tutorial demonstrates the general configurations of a high-availability cluster as you install KubeSphere on Linux.

## Architecture

Make sure you have prepared six Linux machines before you begin, with three of them serving as master nodes and the other three as worker nodes. The following image shows details of these machines, including their private IP addresses and roles. For more information about system and network requirements, see [Multi-node Installation](../multioverview/#step-1-prepare-linux-hosts).

## Configure a Load Balancer

You must create a load balancer in your environment to listen on key ports (the listening rules are known as listeners on some cloud platforms). The following table lists the recommended ports to listen on.

| Service | Protocol | Port |
| ---------- | -------- | ----- |
| apiserver | TCP | 6443 |
| ks-console | TCP | 30880 |
| http | TCP | 80 |
| https | TCP | 443 |

{{< notice note >}}

- Make sure your load balancer listens on at least the apiserver port.
- Depending on where your cluster is deployed, you may need to open ports in your security group so that external traffic is not blocked. For more information, see [Port Requirements](../port-firewall/).
- You can configure both internal and external load balancers on some cloud platforms. After assigning a public IP address to the external load balancer, you can use the IP address to access the cluster.
- For specific steps to configure load balancers on major public cloud platforms, see “Installing on Public Cloud”.

{{</ notice >}}

## Download KubeKey

[KubeKey](https://github.com/kubesphere/kubekey) is the next-gen installer which provides an easy, fast and flexible way to install Kubernetes and KubeSphere. Follow the steps below to download KubeKey.

{{< tabs >}}

{{< tab "For users with good network connections to GitHub" >}}

Download KubeKey from the [GitHub Release Page](https://github.com/kubesphere/kubekey/releases/tag/v1.0.0) or use the following command directly.

```bash
wget https://github.com/kubesphere/kubekey/releases/download/v1.0.0/kubekey-v1.0.0-linux-amd64.tar.gz -O - | tar -xz
```

{{</ tab >}}

{{< tab "For users with poor network connections to GitHub" >}}

Download KubeKey using the following command:

```bash
wget -c https://kubesphere.io/download/kubekey-v1.0.0-linux-amd64.tar.gz -O - | tar -xz
```

{{</ tab >}}

{{</ tabs >}}

Make `kk` executable:

```bash
chmod +x kk
```

Create an example configuration file with default configurations. Here Kubernetes v1.17.9 is used as an example.

```bash
./kk create config --with-kubesphere v3.0.0 --with-kubernetes v1.17.9
```

{{< notice note >}}

Kubernetes versions that have been fully tested with KubeSphere: v1.15.12, v1.16.13, v1.17.9 (default), and v1.18.6.

{{</ notice >}}

## Deploy KubeSphere and Kubernetes

After you run the commands above, a configuration file `config-sample.yaml` will be created. Edit the file to add machine information, configure the load balancer and more.

{{< notice note >}}

The file name may be different if you customize it.

{{</ notice >}}

### config-sample.yaml Example

```yaml
spec:
  hosts:
  - {name: master1, address: 192.168.0.2, internalAddress: 192.168.0.2, user: ubuntu, password: Testing123}
  - {name: master2, address: 192.168.0.3, internalAddress: 192.168.0.3, user: ubuntu, password: Testing123}
  - {name: master3, address: 192.168.0.4, internalAddress: 192.168.0.4, user: ubuntu, password: Testing123}
  - {name: node1, address: 192.168.0.5, internalAddress: 192.168.0.5, user: ubuntu, password: Testing123}
  - {name: node2, address: 192.168.0.6, internalAddress: 192.168.0.6, user: ubuntu, password: Testing123}
  - {name: node3, address: 192.168.0.7, internalAddress: 192.168.0.7, user: ubuntu, password: Testing123}
  roleGroups:
    etcd:
    - master1
    - master2
    - master3
    master:
    - master1
    - master2
    - master3
    worker:
    - node1
    - node2
    - node3
```

For more information about different fields in this configuration file, see [Kubernetes Cluster Configurations](../vars/) and [Multi-node Installation](../multioverview/#2-edit-the-configuration-file).

### Configure the Load Balancer

```yaml
## Public LB config example
## apiserver_loadbalancer_domain_name: "lb.kubesphere.local"
  controlPlaneEndpoint:
    domain: lb.kubesphere.local
    address: "192.168.0.xx"
    port: "6443"
```

{{< notice note >}}

- The address and port should be indented by two spaces under `controlPlaneEndpoint` in `config-sample.yaml`.
- In most cases, you need to provide the **private IP address** of the load balancer for the field `address`. However, different cloud providers may have different configurations for load balancers. For example, if you configure a Server Load Balancer (SLB) on Alibaba Cloud, the platform assigns a public IP address to the SLB, which means you need to specify the public IP address for the field `address`.
- The domain name of the load balancer is `lb.kubesphere.local` by default for internal access. If you need to change the domain name, uncomment the line and modify it.

{{</ notice >}}

### Persistent Storage Plugin Configurations

For a production environment, you need to prepare persistent storage and configure the storage plugin (for example, CSI) in `config-sample.yaml` to define which storage service you want to use. For more information, see [Persistent Storage Configurations](../storage-configuration/).
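As a hedged sketch, KubeKey's `config-sample.yaml` can declare a storage plugin as an add-on under `spec`. The field names below (`addons`, `sources.chart`, `valuesFile`) reflect our understanding of KubeKey v1.x and should be checked against the KubeKey documentation for your version; the values file path is hypothetical.

```yaml
spec:
  # ... hosts, roleGroups and controlPlaneEndpoint as shown above ...
  addons:
  - name: nfs-client
    namespace: kube-system
    sources:
      chart:
        name: nfs-client-provisioner
        repo: https://charts.kubesphere.io/main
        valuesFile: /home/ubuntu/nfs-client.yaml   # hypothetical path to your chart values
```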

|

||||

|

||||

### Enable Pluggable Components (Optional)

|

||||

|

||||

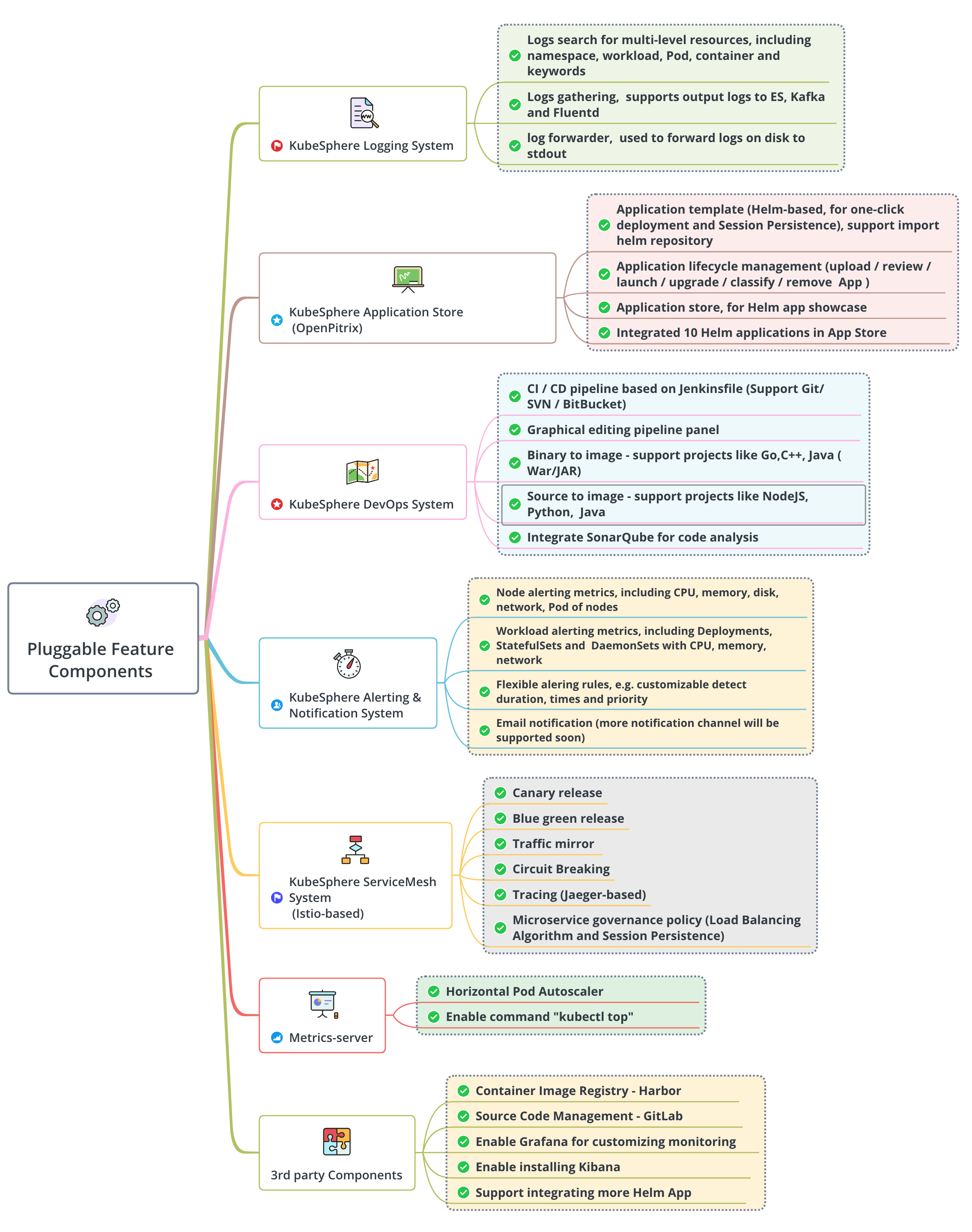

KubeSphere has decoupled some core feature components since v2.1.0. These components are designed to be pluggable which means you can enable them either before or after installation. By default, KubeSphere will be installed with the minimal package if you do not enable them.

|

||||

|

||||

You can enable any of them according to your demands. It is highly recommended that you install these pluggable components to discover the full-stack features and capabilities provided by KubeSphere. Make sure your machines have sufficient CPU and memory before enabling them. See [Enable Pluggable Components](../../../pluggable-components/) for details.
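The file generated by `./kk create config --with-kubesphere` also contains a `ClusterConfiguration` section, where components are switched on by setting `enabled: true`. The excerpt below is a sketch: only three components are shown, and the exact field set may differ between KubeSphere versions, so edit the generated file rather than copying this one.

```yaml
---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.0.0
spec:
  # ... other generated fields omitted ...
  alerting:
    enabled: true      # enable the alerting component
  devops:
    enabled: true      # enable the DevOps component
  servicemesh:
    enabled: true      # enable the service mesh component
```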

### Start Installation

After you complete the configuration, you can execute the following command to start the installation:

```bash
./kk create cluster -f config-sample.yaml
```

### Verify Installation

1. Run the following command to inspect the installation logs.

```bash
kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f
```

2. When you see the following message, your HA cluster has been successfully created.

```bash
#####################################################
### Welcome to KubeSphere! ###
#####################################################

Console: http://192.168.0.3:30880
Account: admin
Password: P@88w0rd

NOTES:
  1. After logging into the console, please check the
     monitoring status of service components in
     the "Cluster Management". If any service is not
     ready, please wait patiently until all components
     are ready.
  2. Please modify the default password after login.

#####################################################
https://kubesphere.io 2020-xx-xx xx:xx:xx
#####################################################
```

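@@ -1,113 +1,79 @@

---
title: "Overview"
keywords: 'Kubernetes, KubeSphere, Linux, Installation'
description: 'Overview of Installing KubeSphere on Linux'
description: 'Introduces the various ways to install KubeSphere on Linux'
linkTitle: "Overview"
weight: 2110
---

For installation on Linux, KubeSphere can be installed both in the cloud and in on-premises environments, such as AWS EC2, Azure VM and bare metal. Users can install KubeSphere on Linux hosts while provisioning new Kubernetes clusters. The installation process is simple and friendly. Meanwhile, KubeSphere provides not only the online installer, [KubeKey](https://github.com/kubesphere/kubekey), but also an air-gapped installation solution for environments without Internet access.

For installation on Linux, KubeSphere can be installed both in the cloud and in on-premises environments, such as AWS EC2, Azure VM and bare metal. Users can install brand-new Kubernetes and KubeSphere clusters on Linux hosts, and the installation process is simple and friendly. Meanwhile, KubeSphere provides not only an online installation tool, namely [KubeKey](https://github.com/kubesphere/kubekey), but also an air-gapped installation solution for environments without Internet access.

As an open-source project on [GitHub](https://github.com/kubesphere), KubeSphere is a gathering place for thousands of community users. Many of them run KubeSphere in production environments.

As an open-source project on [GitHub](https://github.com/kubesphere), KubeSphere is home to thousands of community users, many of whom already run KubeSphere in production environments.

Users are provided with multiple installation options. Please note that not all options are mutually exclusive. For example, you can deploy KubeSphere with minimal packages on multiple nodes in an air-gapped environment.

KubeSphere has multiple installation options. Please note that these options are not mutually exclusive. For example, you can deploy KubeSphere in minimal mode on multiple nodes in an air-gapped environment.

- [All-in-One](../../../quick-start/all-in-one-on-linux/): Install KubeSphere on a single node. It is only for users to quickly get familiar with KubeSphere.
- [Multi-Node](../multioverview/): Install KubeSphere on multiple nodes. It is for testing or development.
- [Install KubeSphere on Air-gapped Linux](../air-gapped-installation): All images of KubeSphere are packaged, which makes air-gapped installation on Linux convenient.
- High Availability Installation: Install high-availability KubeSphere on multiple nodes for production environments.
- Minimal Packages: Only install the minimum required system components of KubeSphere. The minimum resource requirements are:
  - 2vCPUs
- [All-in-One](../../../quick-start/all-in-one-on-linux/): Install KubeSphere on a single node, only for users to quickly get familiar with KubeSphere.
- [Multi-node Installation](../multioverview/): Install single-master KubeSphere on multiple nodes, for testing or development.
- [Air-gapped Installation](../air-gapped-installation/): Package all KubeSphere images for installation in air-gapped environments without an Internet connection.
- [High Availability Installation](../ha-configuration/): Install KubeSphere with highly available master nodes on multiple nodes, for production environments.
- Minimal Installation: Only install the minimum required system components of KubeSphere. The minimum resource requirements are:
  - 2 CPUs
  - 4GB RAM
  - 40GB Storage
- [Full Packages](../complete-installation): Install all available system components of KubeSphere, such as DevOps, ServiceMesh and alerting.
- [Full Installation](../../../pluggable-components/): Install all pluggable system components of KubeSphere, such as DevOps, ServiceMesh and alerting.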

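For installing on Kubernetes, see Overview of Installing on Kubernetes.

For installing on Kubernetes, see [Overview of Installing KubeSphere on Kubernetes](../../../installing-on-kubernetes/introduction/overview/).

## Before Installation

- As images and operating systems will be downloaded from the Internet, your environment must have Internet access. Otherwise, you need to use the air-gapped installer instead.
- For All-in-One installation, the only node is the master node and the worker node.
- As images need to be downloaded from the Internet, your environment must have Internet access. Otherwise, use air-gapped installation instead.
- For All-in-One installation, the only node is both the master node and the worker node.
- For multi-node installation, you need to specify the node roles in the configuration file before installation.
- Your Linux host must have OpenSSH Server installed.
- Check the [port requirements](../port-firewall) before installation.
- The Linux host must have OpenSSH Server installed.
- Check the [port requirements](../port-firewall) before installation.

## KubeKey

KubeKey is developed in Go and represents a brand-new installation tool that can replace the previously used Ansible-based installer. KubeKey provides users with flexible installation options, as they can install KubeSphere and Kubernetes separately or install them at once, which is both convenient and efficient.

KubeKey is developed in Go and is a brand-new installation tool that can replace the previously used Ansible-based installer. KubeKey provides users with flexible installation options: it can install KubeSphere and Kubernetes either separately or together in one go, which is both convenient and efficient.

Three scenarios for using KubeKey:

Three use cases of KubeKey:

- Install Kubernetes only;
- Install Kubernetes and KubeSphere together with one command;
- Install Kubernetes first, and then deploy KubeSphere on it using [ks-installer](https://github.com/kubesphere/ks-installer).

{{< notice note >}}

If you already have a Kubernetes cluster, refer to [Installing on Kubernetes](../../../installing-on-kubernetes/).

{{</ notice >}}

- Install Kubernetes only;
- Install Kubernetes and KubeSphere at the same time with one command;
- Install Kubernetes first, and then deploy KubeSphere using [ks-installer](https://github.com/kubesphere/ks-installer).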

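## Quick Installation for Development and Testing

Since v2.1.0, KubeSphere has decoupled some components. By default, KubeKey only installs the necessary components, as this approach features fast installation and minimal resource consumption. If you want to enable enhanced pluggable features, see [Overview of Pluggable Components](../../../pluggable-components/) for details.

Since v2.1.0, KubeSphere has adopted a loosely coupled architecture. By default, KubeKey only installs the necessary components, so the installation is fast and consumes minimal resources. If you want to enable enhanced pluggable features, see [Overview of Pluggable Components](../../../pluggable-components/) for details.

Quick installation of KubeSphere is only for development or testing, as it uses local volumes for storage by default. For a production installation, see “HA Cluster Configuration”.

Quick installation of KubeSphere is only for development or testing, as by default it uses [local volumes](https://kubernetes.io/docs/concepts/storage/volumes/#local) of [OpenEBS](https://openebs.io/) as the storage type. For installation in a production environment, see [High Availability Configurations](../ha-configuration/).

- **All-in-one**. This means a hassle-free single-node installation with just one command.
- **Multi-node**. It allows you to install KubeSphere on multiple instances using the default storage class (local volumes), which means it is not necessary to install a storage server (for example, Ceph and GlusterFS).

{{< notice note >}}

For air-gapped installation, see [Air-gapped Installation of KubeSphere on Linux](../air-gapped-installation/).

{{</ notice >}}

## Install HA KubeSphere on Linux

KubeKey allows users to install highly available clusters for production. Users need to configure load balancers and persistent storage services in advance.

- [Persistent Volume Configuration](../storage-configuration): By default, KubeKey uses [local volumes](https://kubernetes.io/docs/concepts/storage/volumes/#local) based on [OpenEBS](https://openebs.io/) to provide dynamic provisioning for storage services in Kubernetes clusters. This is convenient for quickly installing a test environment. In a production environment, you must set up a storage server. For details, see [Persistent Storage Configuration](../storage-configuration).
- [Load Balancer Configuration for HA Installation](../master-ha): Before starting a multi-node installation in a production environment, you need to configure a load balancer. Cloud load balancers, Nginx and `HAproxy + Keepalived` can all be used for the installation.

For more information, see HA Cluster Configuration. You can also find specific steps for HA installation on major cloud providers in “Installing on Public Cloud”.

- **All-in-one**: a zero-configuration, single-node installation with just one command.
- **Multi-node installation**: install KubeSphere on multiple machines using the default storage class (local volumes), without installing a separate storage service (for example, Ceph and GlusterFS).

## Overview of Pluggable Components

Since v2.1.0, KubeSphere has decoupled some core feature components. These components are designed to be pluggable, which means you can enable them both before and after installation. By default, KubeKey does not install these pluggable components. For more information, see [Enable Pluggable Components](../../../pluggable-components/).

As mentioned above, since v2.1.0, KubeSphere has adopted a loosely coupled architecture. Some system features are designed as pluggable components, which you can enable before or after installation. By default, KubeKey does not install these pluggable components. For more information, see [Enable Pluggable Components](../../../pluggable-components/).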

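## Storage Configuration Instructions

The following links explain how to configure different types of persistent storage services. For detailed instructions on how to configure storage classes in KubeSphere, see [Storage Configuration Instruction](../storage-configuration).

- [NFS](https://kubernetes.io/docs/concepts/storage/volumes/#nfs)
- [GlusterFS](https://www.gluster.org/)
- [Ceph RBD](https://ceph.com/)
- [QingCloud Block Storage](https://docs.qingcloud.com/product/storage/volume/)
- [QingStor NeonSAN](https://docs.qingcloud.com/product/storage/volume/super_high_performance_shared_volume/)

KubeSphere storage can be configured before installation or added after installation. KubeSphere supports both open-source storage products, such as Ceph and GlusterFS, and commercial storage solutions. For detailed instructions on how to configure storage classes before installing KubeSphere, see [Persistent Storage Configurations](../storage-configuration). For how to add storage classes after installing KubeSphere, see [Add New Storage Classes](../../../cluster-administration/persistent-volume-and-storage-class/).

## Cluster Operations

### Add New Nodes

With KubeKey, you can increase the number of nodes after installation to meet higher resource demands, especially in production environments. For more information, see [Add New Nodes](../../../installing-on-linux/cluster-operation/add-new-nodes/).

As workloads increase, a cluster often needs more nodes, especially in production environments. With KubeKey, you can easily scale out the number of nodes. For more information, see [Add New Nodes](../../../installing-on-linux/cluster-operation/add-new-nodes/).

### Remove Nodes

You need to drain a node before you remove it. For more information, see [Remove Nodes](../../cluster-operation/remove-nodes).

### Add New Storage Classes

KubeKey allows you to set new storage classes after installation. You can set different storage classes for KubeSphere itself and your workloads.

For more information, see Add New Storage Classes.

Similarly, when workloads decrease, you often need to remove nodes to save resources. First drain the workloads from the node, and then remove it. For more information, see [Remove Nodes](../../cluster-operation/remove-nodes).

## Uninstalling

Uninstalling KubeSphere means removing it from your machines, which is irreversible. Please proceed with caution.

Uninstalling KubeSphere and Kubernetes means deleting the entire cluster, which is irreversible. Please proceed with caution.

For more information, see [Uninstalling](../../../installing-on-linux/uninstalling/uninstalling-kubesphere-and-kubernetes/).

For more information, see [Uninstalling KubeSphere and Kubernetes](../../../installing-on-linux/uninstalling/uninstalling-kubesphere-and-kubernetes/).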
