mirror of https://github.com/kubesphere/website.git (synced 2025-12-26 00:12:48 +00:00)

update download link for v3.0.0, sync /en to /zh

Signed-off-by: FeynmanZhou <pengfeizhou@yunify.com>

parent 246110d956
commit 6369605927

@@ -71,8 +71,8 @@ For how to set up or cancel a default StorageClass, refer to Kubernetes official

Use [ks-installer](https://github.com/kubesphere/ks-installer) to deploy KubeSphere on an existing Kubernetes cluster. It is recommended that you start with a minimal installation.

```bash
$ kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml
$ kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml
$ kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml
$ kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml
```
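This commit swaps the `master` branch for the `v3.0.0` tag in every ks-installer URL. One way to keep both manifests on the same ref is to build the URLs from a single value. The following is a minimal sketch; `ks_manifest_url` and `ks_apply_commands` are hypothetical helpers, not part of ks-installer, and they assume the manifests stay under `deploy/` in the repository, as in the URLs above.

```shell
# Hypothetical helpers: derive both manifest URLs from one ref so that
# kubesphere-installer.yaml and cluster-configuration.yaml cannot drift apart.
KS_INSTALLER_RAW="https://raw.githubusercontent.com/kubesphere/ks-installer"

# Print the raw URL of one manifest under deploy/ for the given ref.
ks_manifest_url() {
  local ref="$1" manifest="$2"   # ref: a tag such as v3.0.0, or a branch
  printf '%s/%s/deploy/%s\n' "$KS_INSTALLER_RAW" "$ref" "$manifest"
}

# Print (not run) the two apply commands, installer first.
ks_apply_commands() {
  local manifest
  for manifest in kubesphere-installer.yaml cluster-configuration.yaml; do
    printf 'kubectl apply -f %s\n' "$(ks_manifest_url "$1" "$manifest")"
  done
}
```

Running `ks_apply_commands v3.0.0` only prints the two `kubectl apply` commands; piping that output to `sh` would execute them against your current kubeconfig context. Printing first makes it easy to review which ref is about to be installed.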

@@ -69,11 +69,11 @@ All the other Resources will be placed in MC_KubeSphereRG_KuberSphereCluster_wes

## Deploy KubeSphere on AKS

To start deploying KubeSphere, use the following command.

```bash
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml
```

Download cluster-configuration.yaml as shown below to customize the configuration. You can also enable pluggable components by setting the `enabled` property to `true` in this file.

```bash
wget https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml
wget https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml
```
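On AKS, `metrics-server` is already present, so the `enabled` flag under `metrics_server` in the downloaded file has to be set to `false`. If you prefer not to edit the file by hand, a sed range address can make that one change. This is a minimal sketch that assumes the first `enabled:` line after the `metrics_server:` key belongs to that key and that `metrics_server:` appears only once in the file; `disable_metrics_server` is a hypothetical name.

```shell
# Sketch: set `enabled: false` under `metrics_server:` in a local
# cluster-configuration.yaml (assumes the layout of the downloaded file).
disable_metrics_server() {
  local file="${1:-cluster-configuration.yaml}" tmp status=0
  tmp="$(mktemp)" || return 1
  # Range address: from the `metrics_server:` line through the next line
  # containing `enabled:`; the substitution only applies inside that range.
  sed '/metrics_server:/,/enabled:/ s/enabled: true/enabled: false/' "$file" > "$tmp" \
    && cat "$tmp" > "$file" || status=1   # `cat >` keeps the file's permissions
  rm -f "$tmp"
  return "$status"
}
```

Writing through a temporary file avoids `sed -i`, whose syntax differs between GNU and BSD sed.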

As `metrics-server` is already installed on AKS, you need to disable the component in the cluster-configuration.yaml file by changing `true` to `false` for `enabled`.

```bash

@@ -44,7 +44,7 @@ Now that the cluster is ready, you can install KubeSphere following this steps:

- Install KubeSphere using kubectl. The following command is only for the default minimal installation.

```bash
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml
```

- Create a local cluster-configuration.yaml.

@@ -53,7 +53,7 @@ Now that the cluster is ready, you can install KubeSphere following this steps:

vi cluster-configuration.yaml
```

- Copy all the content in this [file](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) and paste it into your local cluster-configuration.yaml.
- Copy all the content in this [file](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) and paste it into your local cluster-configuration.yaml.

- Save the file when you finish. Execute the following command to start the installation:

@@ -1,5 +1,5 @@

---
title: "Deploy KubeSphere on EKS"
title: "Deploy KubeSphere on AWS EKS"
keywords: 'Kubernetes, KubeSphere, EKS, Installation'
description: 'How to install KubeSphere on EKS'

@@ -71,14 +71,14 @@ When your cluster provisioning is complete (usually between 10 and 15 minutes),

- Configure the node group.

{{< notice note >}}

- Supported Kubernetes versions for KubeSphere 3.0.0: 1.15.x, 1.16.x, 1.17.x, 1.18.x.
- Ubuntu is used for the operating system here as an example. For more information on supported systems, see Overview.
- 3 nodes are included in this example. You can add more nodes based on your own needs, especially in a production environment.
- The machine type t3.medium (2 vCPU, 4 GB memory) is for minimal installation. If you want to enable pluggable components or use the cluster for production, select a machine type with more resources.
- You can change other settings as needed or keep the default values.

{{</ notice >}}
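Before installing, you can check a cluster's version string against the 1.15.x to 1.18.x range listed in the note. The sketch below parses strings such as `v1.17.9` or a provider-suffixed `v1.18.8-eks-7c9bda` (an illustrative value, not taken from this guide); `is_supported_k8s_for_ks300` is a hypothetical name, and the range is hard-coded from the note rather than read from KubeSphere.

```shell
# Sketch: succeed only for Kubernetes 1.15.x-1.18.x, the range the note
# lists for KubeSphere 3.0.0.
is_supported_k8s_for_ks300() {
  local ver="${1#v}"           # drop an optional leading "v"
  local major="${ver%%.*}"     # text before the first dot
  local rest="${ver#*.}"       # text after the first dot
  local minor="${rest%%.*}"    # text up to the second dot
  case "$major.$minor" in
    1.15|1.16|1.17|1.18) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `is_supported_k8s_for_ks300 v1.19.3` returns non-zero. On a running cluster you could pass in the server version reported by `kubectl version`.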

- When the EKS cluster is ready, you can connect to the cluster with kubectl.

## Configure kubectl

@@ -111,13 +111,13 @@ For more information, see the help page with the aws eks update-kubeconfig help

- Install KubeSphere using kubectl. The following command is only for the default minimal installation.

```bash
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml
```

- Create a local cluster-configuration.yaml.

```shell
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml
```

@@ -165,9 +165,8 @@ kubectl get svc -nkubesphere-system

- Log in to the console with the default account and password (`admin/P@88w0rd`). On the cluster overview page, you can see the dashboard as shown in the following image.

## Enable Pluggable Components (Optional)

The example above demonstrates the process of a default minimal installation. To enable other components in KubeSphere, see Enable Pluggable Components for more details.
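In v3.0.0, each pluggable component is switched on through an `enabled` flag in cluster-configuration.yaml. A minimal sketch of building a JSON merge patch for those flags follows. It assumes each component is a key directly under `spec` (for example `devops` or `openpitrix`); `ks_enable_patch` is a hypothetical helper, not part of KubeSphere.

```shell
# Sketch: build a JSON merge patch that sets spec.<component>.enabled=true
# for every component name passed as an argument.
ks_enable_patch() {
  local body="" comp
  for comp in "$@"; do
    # Append "<comp>":{"enabled":true}, comma-separated after the first.
    body="${body:+$body,}\"$comp\":{\"enabled\":true}"
  done
  printf '{"spec":{%s}}\n' "$body"
}
```

Assuming the installer's configuration object is the `ks-installer` ClusterConfiguration in the `kubesphere-system` namespace (check this against the Enable Pluggable Components guide), the patch could be applied with `kubectl -n kubesphere-system patch clusterconfiguration ks-installer --type merge -p "$(ks_enable_patch devops)"`. Generating the JSON in a function keeps the quoting out of the command line.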

@@ -48,7 +48,7 @@ This guide walks you through the steps of deploying KubeSphere on [Google Kubern

- Install KubeSphere using kubectl. The following command is only for the default minimal installation.

```bash
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml
```

- Create a local cluster-configuration.yaml.

@@ -57,7 +57,7 @@ kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/maste

vi cluster-configuration.yaml
```

- Copy all the content in this [file](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) and paste it into your local cluster-configuration.yaml. Navigate to `metrics_server`, and change `true` to `false` for `enabled`.
- Copy all the content in this [file](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) and paste it into your local cluster-configuration.yaml. Navigate to `metrics_server`, and change `true` to `false` for `enabled`.

@@ -68,11 +68,11 @@ If you do not copy and execute the command above, you cannot proceed with the st

- Install KubeSphere using kubectl. The following command is only for the default minimal installation.

```bash
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml
```

```bash
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml
```

- Inspect the installation logs:

@@ -26,16 +26,16 @@ After you make sure your existing Kubernetes cluster meets all the requirements,

- Execute the following commands to start the installation:

```bash
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml
```

```bash
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml
kubectl apply -f https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml
```

{{< notice note >}}

If your server has trouble accessing GitHub, you can copy the content of [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml) and [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) into two local files. You can then run `kubectl apply -f` on the local files to install KubeSphere.

If your server has trouble accessing GitHub, you can copy the content of [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml) and [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) into two local files. You can then run `kubectl apply -f` on the local files to install KubeSphere.

{{</ notice >}}

@@ -47,7 +47,7 @@ kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=

{{< notice tip >}}

In some environments, the installation may stop because of issues related to `metrics_server`, since some cloud providers already install metrics server on their platforms. In this case, manually create a local cluster-configuration.yaml file (copy the [content](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) into it). In this file, disable `metrics_server` by changing `true` to `false` for `enabled`, and run `kubectl apply -f cluster-configuration.yaml` to apply it.

In some environments, the installation may stop because of issues related to `metrics_server`, since some cloud providers already install metrics server on their platforms. In this case, manually create a local cluster-configuration.yaml file (copy the [content](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) into it). In this file, disable `metrics_server` by changing `true` to `false` for `enabled`, and run `kubectl apply -f cluster-configuration.yaml` to apply it.

{{</ notice >}}

@@ -7,218 +7,4 @@ description: 'How to install KubeSphere on air-gapped Linux machines'

weight: 2240
---

The air-gapped installation is almost the same as the online installation, except that it creates a local registry to host the Docker images. This guide demonstrates how to install KubeSphere and Kubernetes in an air-gapped environment.

> Note: The dependencies in different operating systems may cause unexpected problems. If you encounter any installation problems in an air-gapped environment, please describe your OS information and error logs on [GitHub](https://github.com/kubesphere/kubesphere/issues).

## Prerequisites

|

||||

|

||||

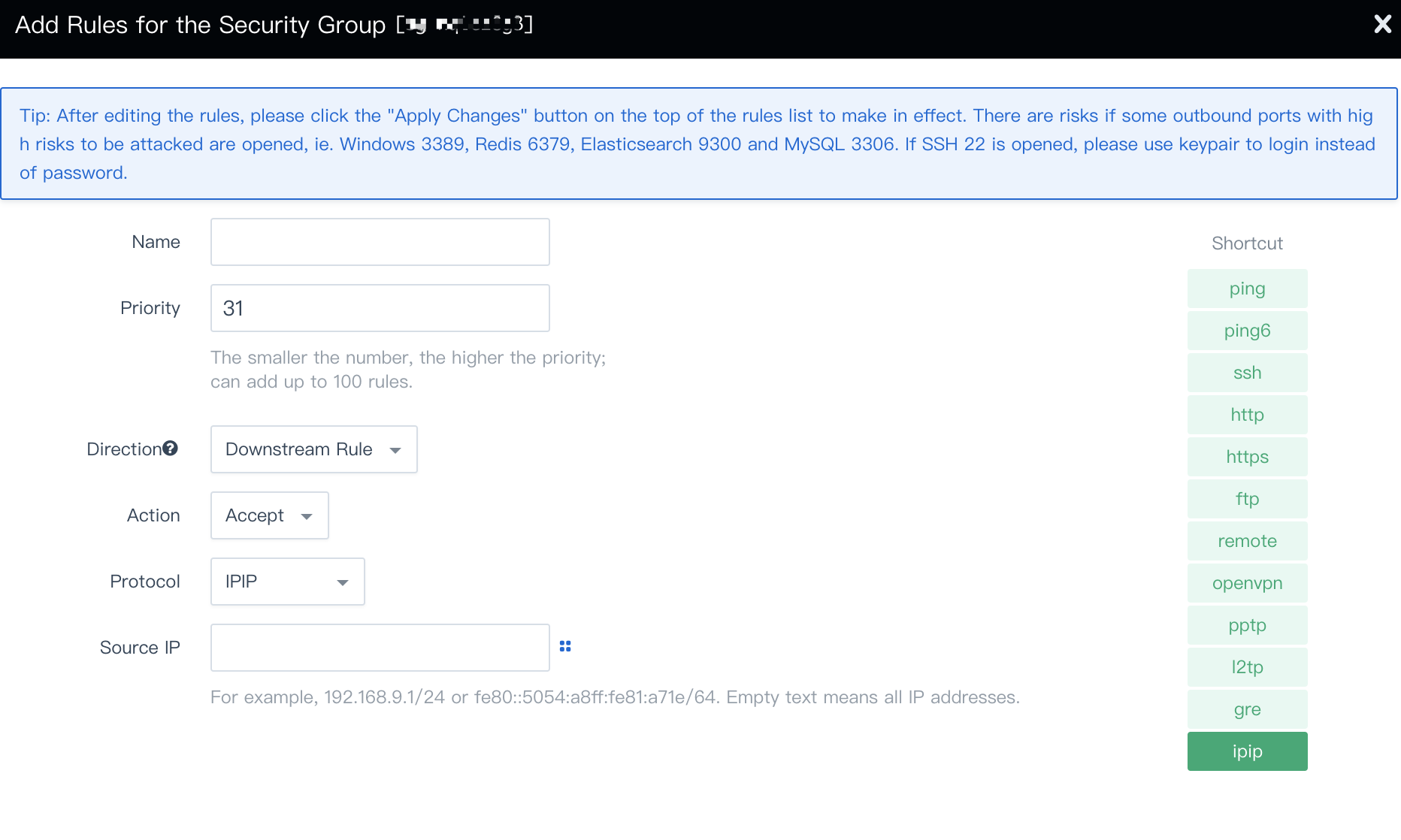

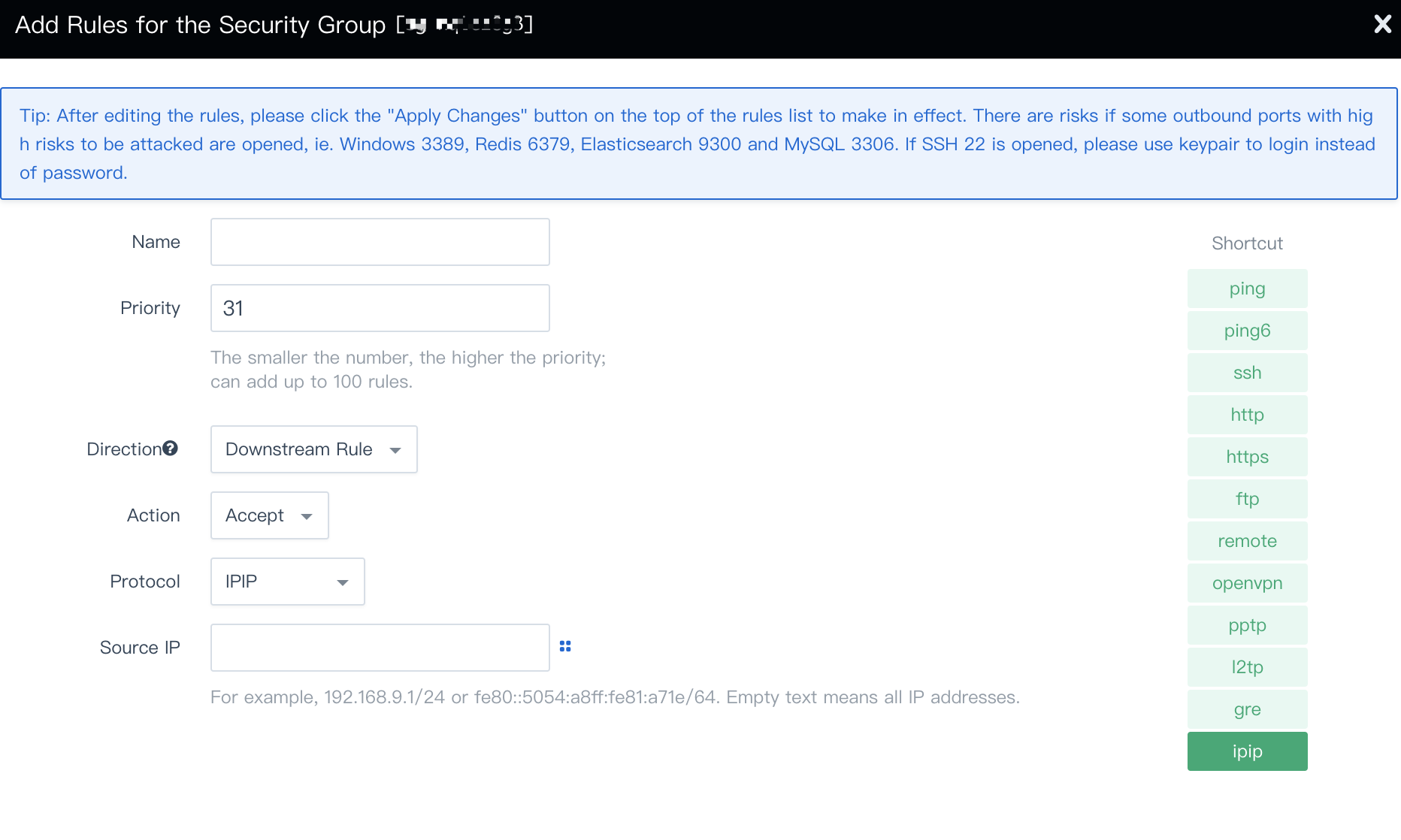

- If your machine is behind a firewall, you need to open the ports by following the document [Ports Requirements](../port-firewall) for more information.

|

||||

> - Installer will use `/var/lib/docker` as the default directory where all Docker related files, including the images, are stored. We recommend you to add additional storage to a disk with at least 100G mounted at `/var/lib/docker` and `/mnt/registry` respectively, use the [fdisk](https://www.computerhope.com/unix/fdisk.htm) command for reference.

|

||||

- Installer uses [Local volume](https://kubernetes.io/docs/concepts/storage/volumes/#local) based on [OpenEBS](https://openebs.io/) to provide storage service with dynamic provisioning. It is convenient for testing and development. For production, please [configure supported persistent storage service](../storage-configuration) and prepare [high availability configuration](../master-ha) before installation.

|

||||

- Since the air-gapped machines cannot connect to apt or yum source, please use clean Linux machine to avoid this problem.

|

||||

|

||||

## Step 1: Prepare Linux Hosts

The following describes the hardware and operating system requirements. To get started with multi-node installation, prepare at least three hosts that meet these requirements.

- Supported OSes: CentOS 7.4 ~ 7.7 (64-bit), Ubuntu 16.04.5/16.04.6/18.04.1/18.04.2/18.04.3 LTS (64-bit).
- Time synchronization is required across all nodes; otherwise, the installation may fail.
- For `Ubuntu 16.04`, `16.04.5` is recommended.
- If you are using `Ubuntu 18.04`, you need to use the `root` user.
- Ensure that each node has at least 100 GB of disk space.
- CPU and memory in total across all machines: 2 cores and 4 GB for a minimal installation; 8 cores and 16 GB for a complete installation.

The following section introduces multi-node installation with an example of three hosts, with `master` serving as the taskbox that executes the installation. The cluster consists of one Master and two Nodes.

> Note: KubeSphere supports the high-availability configuration of the Masters and Etcd nodes. Please refer to [Creating High Availability KubeSphere Cluster](../master-ha) for instructions.

| Host IP | Host Name | Role |
| --- | --- | --- |
| 192.168.0.1 | master | master, etcd |
| 192.168.0.2 | node1 | node |
| 192.168.0.3 | node2 | node |

### Cluster Architecture

#### Single Master, Single Etcd, Two Nodes

## Step 2: Download Installer Package

Download `KubeSphere 2.1.1` to your taskbox machine, then unpack it and go to the folder `conf`.

```bash
curl -L https://kubesphere.io/download/offline/latest > kubesphere-all-offline-v2.1.1.tar.gz \
&& tar -zxf kubesphere-all-offline-v2.1.1.tar.gz && cd kubesphere-all-offline-v2.1.1/conf
```

## Step 3: Configure Host Template

> This step is only for multi-node installation; you can skip it if you choose all-in-one installation.

Refer to the following sample to configure all hosts in `hosts.ini`. It is recommended to install KubeSphere as the root user. The following is an example configuration for `CentOS 7.5` using the root user. Do not manually wrap any line in the file.

> Note:
>
> - If you use a non-root user with sudo access to install KubeSphere, refer to the example block that is commented out in `conf/hosts.ini`.
> - If the `root` user of the taskbox machine cannot establish SSH connections with the other machines, refer to the `non-root` user example at the top of `conf/hosts.ini`, but it is still recommended to switch to the `root` user when executing `install.sh`.
> - master, node1 and node2 are the host names of each node, and all host names should be in lowercase.

### hosts.ini

```ini
[all]
master ansible_connection=local ip=192.168.0.1
node1 ansible_host=192.168.0.2 ip=192.168.0.2 ansible_ssh_pass=PASSWORD
node2 ansible_host=192.168.0.3 ip=192.168.0.3 ansible_ssh_pass=PASSWORD

[local-registry]
master

[kube-master]
master

[kube-node]
node1
node2

[etcd]
master

[k8s-cluster:children]
kube-node
kube-master
```

> Note:
>
> - Replace each node's information, such as IP and password, with real values in the group `[all]`. The master node is the taskbox, so you do not need to add a password field for it.
> - The installer uses one node as the local registry for Docker images; it defaults to "master" in the group `[local-registry]`.
> - The "master" node also takes the role of master and etcd, so "master" is listed under both the group `[kube-master]` and the group `[etcd]`.
> - "node1" and "node2" both serve the role of `Node`, so they are listed under the group `[kube-node]`.
>
> Parameters Specification:
>
> - `ansible_connection`: Connection type to the host; "local" in the example above means a local connection.
> - `ansible_host`: The name of the host to be connected.
> - `ip`: The IP address of the host to be connected.
> - `ansible_user`: The default SSH user name to use.
> - `ansible_become_pass`: Allows you to set the privilege escalation password.
> - `ansible_ssh_pass`: The password of the host to be connected using root.
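Step 3 requires all host names to be lowercase, so it can help to scan `hosts.ini` before running `install.sh`. The following is a minimal sketch that only checks the `[all]` section and assumes one host per line with the host name first, as in the sample above; `uppercase_hosts` is a hypothetical name.

```shell
# Sketch: print host names in the [all] section of hosts.ini that contain
# uppercase letters. Section headers toggle the in_all flag; blank lines and
# lines starting with # or ; are skipped.
uppercase_hosts() {
  awk '
    /^\[/ { in_all = ($0 == "[all]"); next }
    in_all && NF && $1 !~ /^[#;]/ && $1 ~ /[A-Z]/ { print $1 }
  ' "${1:-hosts.ini}"
}
```

An empty result means every host name in `[all]` is already lowercase. The `[A-Z]` range is used instead of `[[:upper:]]` because some older awk implementations, such as early mawk releases, lack POSIX character classes.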

## Step 4: Enable All Components

> This step is for a complete installation. You can skip it if you choose a minimal installation.

Edit `conf/common.yaml` and set the following values, which are `false` by default, to `true`.

```yaml
# LOGGING CONFIGURATION
# logging is an optional component when installing KubeSphere, and
# Kubernetes builtin logging APIs will be used if logging_enabled is set to false.
# Builtin logging only provides limited functions, so recommend to enable logging.
logging_enabled: true # Whether to install logging system
elasticsearch_master_replica: 1 # total number of master nodes, it's not allowed to use even number
elasticsearch_data_replica: 2 # total number of data nodes
elasticsearch_volume_size: 20Gi # Elasticsearch volume size
log_max_age: 7 # Log retention time in built-in Elasticsearch, it is 7 days by default.
elk_prefix: logstash # the string making up index names. The index name will be formatted as ks-<elk_prefix>-log
kibana_enabled: false # Whether to install built-in Kibana
#external_es_url: SHOULD_BE_REPLACED # External Elasticsearch address, KubeSphere supports integration with Elasticsearch outside the cluster, which can reduce resource consumption.
#external_es_port: SHOULD_BE_REPLACED # External Elasticsearch service port

#DevOps Configuration
devops_enabled: true # Whether to install built-in DevOps system (Supports CI/CD pipeline, Source/Binary to image)
jenkins_memory_lim: 8Gi # Jenkins memory limit, it is 8 Gi by default
jenkins_memory_req: 4Gi # Jenkins memory request, it is 4 Gi by default
jenkins_volume_size: 8Gi # Jenkins volume size, it is 8 Gi by default
jenkinsJavaOpts_Xms: 3g # Following three are JVM parameters
jenkinsJavaOpts_Xmx: 6g
jenkinsJavaOpts_MaxRAM: 8g
sonarqube_enabled: true # Whether to install built-in SonarQube
#sonar_server_url: SHOULD_BE_REPLACED # External SonarQube address, KubeSphere supports integration with SonarQube outside the cluster, which can reduce resource consumption.
#sonar_server_token: SHOULD_BE_REPLACED # SonarQube token

# Following components are all optional for KubeSphere,
# Which could be turned on to install it before installation or later by updating its value to true
openpitrix_enabled: true # KubeSphere application store
metrics_server_enabled: true # For KubeSphere HPA to use
servicemesh_enabled: true # KubeSphere service mesh system(Istio-based)
notification_enabled: true # KubeSphere notification system
alerting_enabled: true # KubeSphere alerting system
```
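The comment on `elasticsearch_master_replica` says the value must not be even. A small pre-flight check can read it back from `conf/common.yaml`. This sketch assumes the key sits on its own `key: value` line with an optional trailing `#` comment, as shown above; `check_es_master_replica` is a hypothetical name.

```shell
# Sketch: read elasticsearch_master_replica from common.yaml and fail if it
# is missing or even.
check_es_master_replica() {
  local n
  n="$(awk '/^ *elasticsearch_master_replica:/ {
          line = $0
          p = index(line, "#"); if (p) line = substr(line, 1, p - 1)  # drop comment
          sub(/^[^:]*:/, "", line)   # drop the key
          gsub(/[^0-9]/, "", line)   # keep only the digits of the value
          print line; exit
        }' "${1:-conf/common.yaml}")"
  if [ -z "$n" ]; then
    echo "elasticsearch_master_replica not found" >&2
    return 1
  fi
  if [ $((n % 2)) -eq 0 ]; then
    echo "elasticsearch_master_replica=$n is even; use an odd number" >&2
    return 1
  fi
  echo "elasticsearch_master_replica=$n"
}
```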

## Step 5: Install KubeSphere on Linux Machines

> Note:
>
> - Generally, you can install KubeSphere without any modification; it starts with a minimal installation by default.
> - If you want to enable pluggable components, modify common.yaml and refer to [Enable Pluggable Components Installation](../pluggable-components) for instructions.
> - The installer uses [Local volume](https://kubernetes.io/docs/concepts/storage/volumes/#local) based on [OpenEBS](https://openebs.io/) to provide storage service with dynamic provisioning. For production environments, please [configure a supported persistent storage service](../storage-configuration) before installation.
> - Since the default subnet for Cluster IPs is 10.233.0.0/18 and the default subnet for Pod IPs is 10.233.64.0/18, node IPs must not fall within either range. You can modify the default subnets `kube_service_addresses` or `kube_pods_subnet` in the file `conf/common.yaml` to avoid conflicts.
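To confirm that no node IP falls inside the default Service (10.233.0.0/18) or Pod (10.233.64.0/18) ranges, you can compare each address against both CIDR blocks. The following is a minimal sketch for IPv4 only, without input validation; `ip_to_int` and `ip_in_cidr` are hypothetical helper names.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  # Split the address on dots into $1..$4 (left unquoted on purpose).
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if IPv4 address $1 lies inside CIDR block $2 (e.g. 10.233.0.0/18).
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  # Network mask with the top `bits` bits set, kept within 32 bits.
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

For the sample hosts, `ip_in_cidr 192.168.0.1 10.233.0.0/18` and `ip_in_cidr 192.168.0.1 10.233.64.0/18` both return non-zero, so 192.168.0.1 does not conflict with either default range.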

**1.** Go to the `scripts` folder and execute `install.sh` as the `root` user:

```bash
cd ../scripts
./install.sh
```

**2.** Type `2` to select multi-node mode and start the installation. The installer asks whether you have set up a persistent storage service. Type `yes`, since this example uses local volume.

```bash
################################################
KubeSphere Installer Menu
################################################
* 1) All-in-one
* 2) Multi-node
* 3) Quit
################################################
https://kubesphere.io/ 2020-02-24
################################################
Please input an option: 2
```

**3.** Verify the multi-node installation:

**(1).** If `install.sh` returns "Successful" when it completes, congratulations! You are ready to go.

```bash
successsful!
#####################################################
### Welcome to KubeSphere! ###
#####################################################

Console: http://192.168.0.1:30880
Account: admin
Password: P@88w0rd

NOTE:Please modify the default password after login.
#####################################################
```

> Note: The information above is saved in a log file that you can view by following the [guide](../verify-components).

**(2).** You can use the default account and password `admin / P@88w0rd` to log in to the console at `http://{$IP}:30880` and take a tour of KubeSphere. Please change the default password after logging in.

<font color=red>Note: After logging in to the console, please verify the monitoring status of service components in "Cluster Status". If any service is not ready, please wait patiently until all components are up and running.</font>

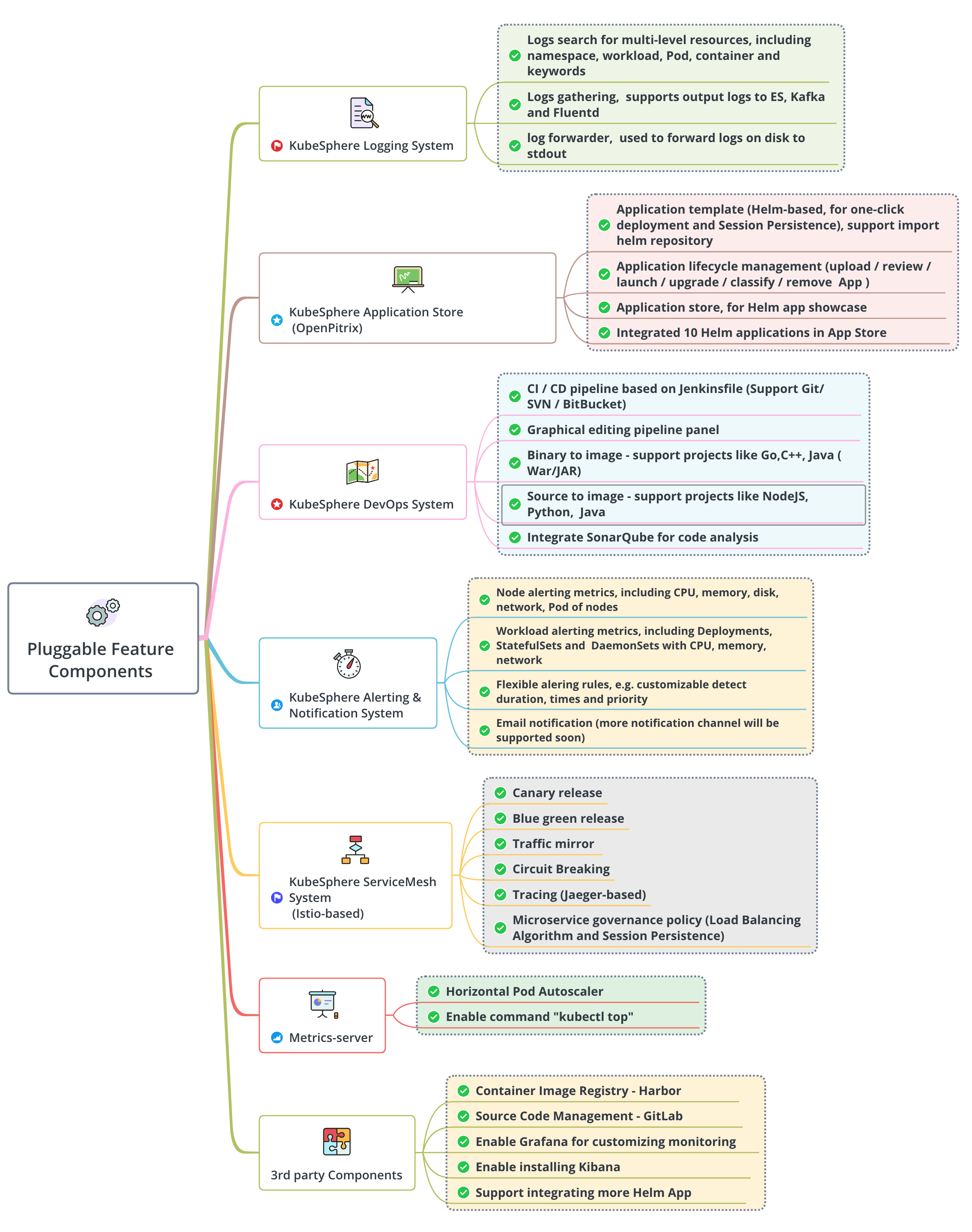

## Enable Pluggable Components

|

||||

|

||||

If you already have set up minimal installation, you still can edit the ConfigMap of ks-installer using the following command. Please make sure there is enough resource in your machines, see [Pluggable Components Overview](/en/installation/pluggable-components/).

|

||||

|

||||

```bash

|

||||

kubectl edit cm -n kubesphere-system ks-installer

|

||||

```

|

||||

|

||||

## FAQ

|

||||

|

||||

If you have further questions please do not hesitate to raise issues on [GitHub](https://github.com/kubesphere/kubesphere/issues).

|

||||

TBD

|

||||

|

|

|

|||

|

|

@@ -49,7 +49,7 @@ Please see the requirements for hardware and operating system shown below. To ge

The path `/var/lib/docker` is mainly used to store container data and gradually grows in size during use and operation. In a production environment, it is recommended that `/var/lib/docker` be mounted on a separate drive.

{{</ notice >}}
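Before installing, you might check whether `/var/lib/docker` really sits on its own drive. The sketch below compares the device number of the directory with that of its parent. It assumes GNU `stat -c %d`; `is_separate_mount` is a hypothetical name, it is not meant for `/` itself, and a bind mount from the same filesystem would not be detected by this comparison.

```shell
# Sketch: succeed if a directory is on a different filesystem (device)
# than its parent directory, i.e. it has its own mount.
is_separate_mount() {
  local path="$1" dev parent_dev
  [ -d "$path" ] || return 1          # a missing directory cannot be mounted
  dev="$(stat -c %d "$path")" || return 1
  parent_dev="$(stat -c %d "$(dirname "$path")")" || return 1
  [ "$dev" != "$parent_dev" ]
}
```

For example: `is_separate_mount /var/lib/docker || echo "/var/lib/docker shares a filesystem with /var/lib"`.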

### Node Requirements

@@ -81,49 +81,44 @@ This example includes three hosts as below with the master node serving as the t

## Step 2: Download KubeKey

As shown below, you can either download the binary file or build the binary from source code.

Download the binary file as shown below.

Download the Installer for KubeSphere v3.0.0.

{{< tabs >}}

{{< tab "Download Binary" >}}
{{< tab "For users with poor network to GitHub" >}}

Execute the following command:

For users in China, you can download the installer using this link.

```bash
curl -O -k https://kubernetes.pek3b.qingstor.com/tools/kubekey/kk
wget https://kubesphere.io/kubekey/releases/v1.0.0
```

{{</ tab >}}

{{< tab "For users with good network to GitHub" >}}

For users with good network to GitHub, you can download it from [GitHub Release Page](https://github.com/kubesphere/kubekey/releases/tag/v1.0.0) or use the following link directly.

```bash
wget https://github.com/kubesphere/kubekey/releases/download/v1.0.0/kubekey-v1.0.0-linux-amd64.tar.gz
```

{{</ tab >}}

{{</ tabs >}}

Unpack it.

```bash
tar -zxvf v1.0.0
```

Make `kk` executable:

```bash
chmod +x kk
```

{{</ tab >}}

{{< tab "Build Binary from Source Code" >}}

Execute the following commands one by one:

```bash
git clone https://github.com/kubesphere/kubekey.git
```

```bash
cd kubekey
```

```bash
./build.sh
```

Note:

- Docker needs to be installed before building.
- If you have problems accessing `https://proxy.golang.org/`, execute `build.sh -p` instead.

{{</ tab >}}

{{</ tabs >}}

## Step 3: Create a Cluster

For multi-node installation, you need to create a cluster by specifying a configuration file.

@@ -133,7 +128,7 @@ For multi-node installation, you need to create a cluster by specifying a config

Command:

```bash
./kk create config [--with-kubernetes version] [--with-storage plugins] [--with-kubesphere version] [(-f | --file) path]
./kk create config [--with-kubernetes version] [--with-kubesphere version] [(-f | --file) path]
```

{{< notice info >}}

@@ -150,7 +145,7 @@ Here are some examples for your reference:

./kk create config [-f ~/myfolder/abc.yaml]
```

- You can customize the storage plugins (supported: LocalPV, NFS Client, Ceph RBD, and GlusterFS). You can also specify multiple plugins separated by commas. Note that the first one you add becomes the default storage class.

- You can customize the persistent storage plugins (e.g. NFS Client, Ceph RBD, and GlusterFS) in `config-sample.yaml`.

```bash
./kk create config --with-storage localVolume

@@ -158,9 +153,9 @@ Here are some examples for your reference:

{{< notice note >}}

KubeKey will install [OpenEBS](https://openebs.io/) to provision LocalPV for development and testing environments by default, which is convenient for new users. For production, please use NFS/Ceph/GlusterFS or commercial products as persistent storage solutions, and install [relevant clients](https://github.com/kubesphere/kubekey/blob/master/docs/storage-client.md) on all nodes. For this example of multi-node installation, we will use the default storage class (local volume). For more information, see HA Cluster Configuration and Storage Class Configuration.

KubeKey will install [OpenEBS](https://openebs.io/) to provision [LocalPV](https://kubernetes.io/docs/concepts/storage/volumes/#local) for development and testing environments by default, which is convenient for new users. For this example of multi-node installation, we will use the default storage class (local volume). For production, please use NFS/Ceph/GlusterFS/CSI or commercial products as persistent storage solutions; you need to specify them in `addons` of `config-sample.yaml`. See [Persistent Storage Configuration](../storage-configuration).

{{</ notice >}}

- You can specify a KubeSphere version that you want to install (e.g. `--with-kubesphere v3.0.0`).

@@ -223,7 +218,7 @@ hosts:

#### controlPlaneEndpoint (for HA installation only)

`controlPlaneEndpoint` allows you to define an external load balancer for an HA cluster. You need to prepare and configure an external load balancer if and only if you need to install more than 3 master nodes. Please note that the address and port should be indented by two spaces in `config-sample.yaml`, and the `address` should be the VIP. See KubeSphere on QingCloud Instance for more information.

`controlPlaneEndpoint` allows you to define an external load balancer for an HA cluster. You need to prepare and configure an external load balancer if and only if you need to install more than 3 master nodes. Please note that the address and port should be indented by two spaces in `config-sample.yaml`, and the `address` should be the VIP. See HA Configuration for details.

{{< notice tip >}}

@@ -244,7 +239,7 @@ When you finish editing, save the file.

If you use a different file name, replace `config-sample.yaml` above with your own file name.

{{</ notice >}}

{{</ notice >}}

The whole installation process may take 10-20 minutes, depending on your machine and network.

@@ -265,7 +260,7 @@ NOTES:

1. After logging into the console, please check the
monitoring status of service components in
the "Cluster Management". If any service is not
ready, please wait patiently until all components
ready, please wait patiently until all components
are ready.
2. Please modify the default password after login.

@@ -280,7 +275,7 @@ Now, you will be able to access the web console of KubeSphere at `http://{IP}:30

Depending on your cloud provider's platform, you may need to forward the source port to the intranet port of the intranet IP to access the console. Also make sure that port 30880 is open in the security group.

{{</ notice >}}

{{</ notice >}}

@@ -301,4 +296,4 @@ echo 'source <(kubectl completion bash)' >>~/.bashrc

kubectl completion bash >/etc/bash_completion.d/kubectl

```
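Running the `echo 'source <(kubectl completion bash)' >>~/.bashrc` step from the hunk header more than once appends duplicate lines to `~/.bashrc`. A minimal sketch of an idempotent variant, assuming a POSIX shell and grep with `-x`/`-F` support; `append_once` is a hypothetical helper, not part of kubectl:

```bash
# append_once FILE LINE
# Hypothetical helper: appends LINE to FILE only if FILE does not already
# contain exactly that line, so re-running a setup step is harmless.
append_once() {
  file="$1"
  line="$2"
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), --: end of options
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}

# Example (not executed here):
#   append_once ~/.bashrc 'source <(kubectl completion bash)'
```

The check uses a fixed-string, whole-line match so that characters such as `<` and `(` in the completion line are not interpreted as a pattern.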

Detailed information can be found [here](https://kubernetes.io/docs/tasks/tools/install-kubectl/#enabling-shell-autocompletion).

Detailed information can be found [here](https://kubernetes.io/docs/tasks/tools/install-kubectl/#enabling-shell-autocompletion).

@@ -80,7 +80,10 @@ In the Ready to complete page, you review the configuration selections that you

## Keepalived+Haproxy

## Install a Load Balancer using Keepalived and Haproxy (Optional)

For a production environment, you need to prepare an external load balancer. If you do not have one, you can install it using Keepalived and Haproxy. If you are provisioning a development or testing environment, skip this section.

### Yum Install

host lb-0(10.10.71.77) and host lb-1(10.10.71.66)
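The `keepalived.conf` files for lb-0 and lb-1 differ only in `router_id`, `state`, `priority` and the unicast addresses. A minimal sketch that renders the `vrrp_instance` block for each host from one template, using the values shown in this guide (VIP `10.10.71.67/24`, interface `ens192`, `virtual_router_id 60`). The `global_defs` and `vrrp_script chk_haproxy` blocks are not rendered; the `unicast_peer` section and lb-1's `unicast_src_ip 10.10.71.66` are assumptions inferred from the host addresses, and `render_vrrp_instance` is a hypothetical helper:

```bash
# render_vrrp_instance HOST
# Hypothetical helper: prints the vrrp_instance block for lb-0 (MASTER)
# or lb-1 (BACKUP). unicast_peer and lb-1's source IP are assumptions.
render_vrrp_instance() {
  local state priority src peer
  case "$1" in
    lb-0) state=MASTER; priority=100; src=10.10.71.77; peer=10.10.71.66 ;;
    lb-1) state=BACKUP; priority=90;  src=10.10.71.66; peer=10.10.71.77 ;;
    *) echo "unknown host: $1" >&2; return 1 ;;
  esac
  cat <<EOF
vrrp_instance haproxy-vip {
  state ${state}
  priority ${priority}
  interface ens192
  virtual_router_id 60
  advert_int 1
  authentication {
    auth_type PASS
    auth_pass 1111
  }
  unicast_src_ip ${src}
  unicast_peer {
    ${peer}
  }
  virtual_ipaddress {
    10.10.71.67/24
  }
  track_script {
    chk_haproxy
  }
}
EOF
}
```

For example, `render_vrrp_instance lb-1` prints the BACKUP variant; review the output against your own network before merging it into `/etc/keepalived/keepalived.conf`.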

@@ -159,7 +162,7 @@ global_defs {

notification_email {
}
smtp_connect_timeout 30
router_id LVS_DEVEL01
router_id LVS_DEVEL01
vrrp_skip_check_adv_addr
vrrp_garp_interval 0
vrrp_gna_interval 0

@@ -173,10 +176,10 @@ vrrp_instance haproxy-vip {

state MASTER
priority 100
interface ens192
virtual_router_id 60
advert_int 1
virtual_router_id 60
advert_int 1
authentication {
auth_type PASS
auth_type PASS
auth_pass 1111
}
unicast_src_ip 10.10.71.77

@@ -185,7 +188,7 @@ vrrp_instance haproxy-vip {

}
virtual_ipaddress {
#vip
10.10.71.67/24
10.10.71.67/24
}
track_script {
chk_haproxy

@@ -198,7 +201,7 @@ remarks haproxy 66 lb-1-10.10.71.66 (/etc/keepalived/keepalived.conf)

global_defs {
notification_email {
}
router_id LVS_DEVEL02
router_id LVS_DEVEL02
vrrp_skip_check_adv_addr
vrrp_garp_interval 0
vrrp_gna_interval 0

@@ -209,7 +212,7 @@ vrrp_script chk_haproxy {

weight 2
}
vrrp_instance haproxy-vip {
state BACKUP
state BACKUP
priority 90
interface ens192
virtual_router_id 60

@@ -223,7 +226,7 @@ vrrp_instance haproxy-vip {

10.10.71.77
}
virtual_ipaddress {
10.10.71.67/24
10.10.71.67/24
}
track_script {
chk_haproxy

@@ -243,7 +246,7 @@ systemctl start keepalived

Use `ip a s` to view the VIP binding status of each LB node:

```bash
ip a s
ip a s

```
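Reading the `ip a s` output by eye works for two nodes; a minimal sketch that turns the check into an exit status, assuming the VIP shows up as an `inet <VIP>/<prefix>` line as in standard iproute2 output. `holds_vip` is a hypothetical helper:

```bash
# holds_vip VIP
# Hypothetical helper: reads `ip a s` output on stdin and succeeds if it
# contains an "inet VIP/" entry, i.e. this node currently holds the VIP.
holds_vip() {
  # Fixed-string match; the leading "inet " and trailing "/" keep
  # 10.10.71.6 from matching 10.10.71.67 and vice versa.
  grep -qF -- "inet ${1}/"
}

# Example (not executed here):
#   ip a s | holds_vip 10.10.71.67 && echo "VIP is on $(hostname)"
```

Running it on both lb-0 and lb-1 before and after `systemctl stop haproxy` shows which node holds the VIP at each point, which is the drift check described in this guide.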

Stop Haproxy on the VIP node: `systemctl stop haproxy`

@@ -255,7 +258,7 @@ systemctl stop haproxy

Use `ip a s` again to check the VIP binding of each LB node and whether the VIP drifts:

```bash
ip a s
ip a s
```

Or use the `systemctl status -l keepalived` command to view the status:

@@ -264,31 +267,67 @@ Or use `systemctl status -l keepalived` command to view

systemctl status -l keepalived
```

## Get the Installer Executable File

Download Binary

Download the installer for KubeSphere v3.0.0.

{{< tabs >}}

{{< tab "For users with poor network to GitHub" >}}

For users in China, you can download the installer using the following link.

```bash
wget https://kubesphere.io/kubekey/releases/v1.0.0
```

{{</ tab >}}

{{< tab "For users with good network to GitHub" >}}

If you have good network access to GitHub, you can download KubeKey from the [GitHub Release Page](https://github.com/kubesphere/kubekey/releases/tag/v1.0.0) or use the following link directly.

```bash
wget https://github.com/kubesphere/kubekey/releases/download/v1.0.0/kubekey-v1.0.0-linux-amd64.tar.gz
```

{{</ tab >}}

{{</ tabs >}}
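The `tar -zxvf v1.0.0` step that follows assumes the file came from the kubesphere.io link, which wget saves as `v1.0.0`; the GitHub asset is saved as `kubekey-v1.0.0-linux-amd64.tar.gz` instead. A minimal sketch that keeps each download URL together with its local file name; `kubekey_download` is a hypothetical helper, not part of KubeKey:

```bash
# kubekey_download SOURCE
# Hypothetical helper: prints "<url> <archive-file-name>" for KubeKey v1.0.0,
# using the two download links from the tabs above.
#   cn     -> kubesphere.io link (wget saves it as "v1.0.0")
#   github -> GitHub release asset
kubekey_download() {
  case "$1" in
    cn)
      echo "https://kubesphere.io/kubekey/releases/v1.0.0 v1.0.0" ;;
    github)
      echo "https://github.com/kubesphere/kubekey/releases/download/v1.0.0/kubekey-v1.0.0-linux-amd64.tar.gz kubekey-v1.0.0-linux-amd64.tar.gz" ;;
    *)
      echo "usage: kubekey_download cn|github" >&2
      return 1 ;;
  esac
}

# Example (not executed here):
#   set -- $(kubekey_download github)
#   wget "$1" && tar -zxvf "$2" && chmod +x kk
```

Whichever link you use, pass the name wget actually saved to `tar -zxvf`.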

Unzip it.

```bash
tar -zxvf v1.0.0
```

Make `kk` executable:

```bash
curl -O -k https://kubernetes.pek3b.qingstor.com/tools/kubekey/kk
chmod +x kk
```

## Create a Multi-Node Cluster

## Create a Multi-node Cluster

The advanced installation gives you more control to customize parameters or create a multi-node cluster. Specifically, you create a cluster by specifying a configuration file.

### With KubeKey, you can install Kubernetes and KubeSphere

With KubeKey, you can install Kubernetes and KubeSphere

Create a Kubernetes cluster with KubeSphere installed (e.g. `--with-kubesphere v3.0.0`):

```bash
./kk create config --with-kubesphere v3.0.0 -f ~/config-sample.yaml
./kk create config --with-kubernetes v1.17.9 --with-kubesphere v3.0.0 -f ~/config-sample.yaml
```

#### Modify the file config-sample.yaml according to your environment

vi ~/config-sample.yaml

> The following Kubernetes versions have been fully tested with KubeSphere:
> - v1.15: v1.15.12
> - v1.16: v1.16.13
> - v1.17: v1.17.9 (default)
> - v1.18: v1.18.6

Modify the file config-sample.yaml according to your environment:

```bash
vi config-sample.yaml
```

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha1

@@ -308,7 +347,7 @@ spec:

- master1
- master2
- master3
master:
master:
- master1
- master2
- master3

@@ -446,7 +485,7 @@ NOTES:

1. After logging into the console, please check the
monitoring status of service components in
the "Cluster Management". If any service is not
ready, please wait patiently until all components
ready, please wait patiently until all components
are ready.
2. Please modify the default password after login.
#####################################################

@@ -462,4 +501,3 @@ You will be able to use default account and password `admin / P@88w0rd` to log i

#### Enable Pluggable Components (Optional)

The example above demonstrates the process of a default minimal installation. To enable other components in KubeSphere, see [Enable Pluggable Components](https://github.com/kubesphere/ks-installer#enable-pluggable-components) for more details.

@@ -10,7 +10,7 @@ Technically, you can either install, administer, and manage Kubernetes yourself

## Introduction

In this tutorial, we will use two key features of Azure virtual machines (VMs):

In this tutorial, we will use two key features of Azure virtual machines (VMs):

- Virtual Machine Scale Sets: Azure VMSS lets you create and manage a group of load-balanced VMs. The number of VM instances can automatically increase or decrease in response to demand or a defined schedule (the Kubernetes Autoscaler is available but not covered in this tutorial; see [autoscaler](https://github.com/kubernetes/autoscaler/tree/master/cluster-autoscaler/cloudprovider/azure) for more details), which makes VMSS a good fit for the Worker Nodes.
- Availability sets: An availability set is a logical grouping of VMs within a datacenter that are automatically distributed across fault domains. This approach limits the impact of potential physical hardware failures, network outages, or power interruptions. All the Master and ETCD VMs will be placed in an availability set to meet our high availability goals.

@@ -88,8 +88,38 @@ ssh -i .ssh/id_rsa2 -p50200 kubesphere@40.81.5.xx

1. First, download KubeKey and generate a configuration file to customize the installation as follows.

{{< tabs >}}

{{< tab "For users with poor network to GitHub" >}}

For users in China, you can download the installer using the following link.

```bash
wget https://kubesphere.io/kubekey/releases/v1.0.0
```

curl -O -k https://kubernetes.pek3b.qingstor.com/tools/kubekey/kk

{{</ tab >}}

{{< tab "For users with good network to GitHub" >}}

If you have good network access to GitHub, you can download KubeKey from the [GitHub Release Page](https://github.com/kubesphere/kubekey/releases/tag/v1.0.0) or use the following link directly.

```bash
wget https://github.com/kubesphere/kubekey/releases/download/v1.0.0/kubekey-v1.0.0-linux-amd64.tar.gz
```

{{</ tab >}}

{{</ tabs >}}

Unzip it.

```bash
tar -zxvf v1.0.0

```
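Before extracting, you can list the archive to confirm that it really is the KubeKey tarball rather than, for example, an HTML error page saved under the same name. A minimal sketch, assuming the archive stores the `kk` binary at its top level (as the following `chmod +x kk` step implies); `archive_has_kk` is a hypothetical helper:

```bash
# archive_has_kk ARCHIVE
# Hypothetical helper: succeeds if the gzip-compressed tarball ARCHIVE
# lists a top-level entry named "kk" (or "./kk").
archive_has_kk() {
  # -t lists entries without extracting; -z handles gzip compression.
  # Errors (missing or non-gzip file) are silenced and make grep fail.
  tar -tzf "$1" 2>/dev/null | grep -qx -e 'kk' -e './kk'
}

# Example (not executed here):
#   archive_has_kk v1.0.0 && tar -zxvf v1.0.0
```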

Make `kk` executable:

```bash
chmod +x kk
```

@@ -98,7 +128,7 @@ chmod +x kk

```
./kk create config --with-kubesphere v3.0.0 --with-kubernetes v1.17.9
```

> Kubernetes Versions

> The following Kubernetes versions have been fully tested with KubeSphere:
> - v1.15: v1.15.12
> - v1.16: v1.16.13
> - v1.17: v1.17.9 (default)

@@ -208,4 +238,3 @@ Since we are using self-hosted Kubernetes solutions on Azure, So the Load Balanc

2. Create an Inbound Security rule to allow Internet access in the Network Security Group.

@@ -28,7 +28,7 @@ This example prepares six machines of **Ubuntu 16.04.6**. We will create two loa

The Kubernetes document [Options for Highly Available topology](https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/ha-topology/) explains that there are two options for configuring the topology of a highly available (HA) Kubernetes cluster: stacked etcd topology and external etcd topology. You should carefully consider the advantages and disadvantages of each topology before setting up an HA cluster according to [this document](https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/ha-topology/). In this guide, we use the stacked etcd topology to bootstrap an HA cluster for demonstration purposes.

{{</ notice >}}

{{</ notice >}}

## Install HA Cluster

@ -61,7 +61,7 @@ Click Submit to continue.

|

|||

|

||||

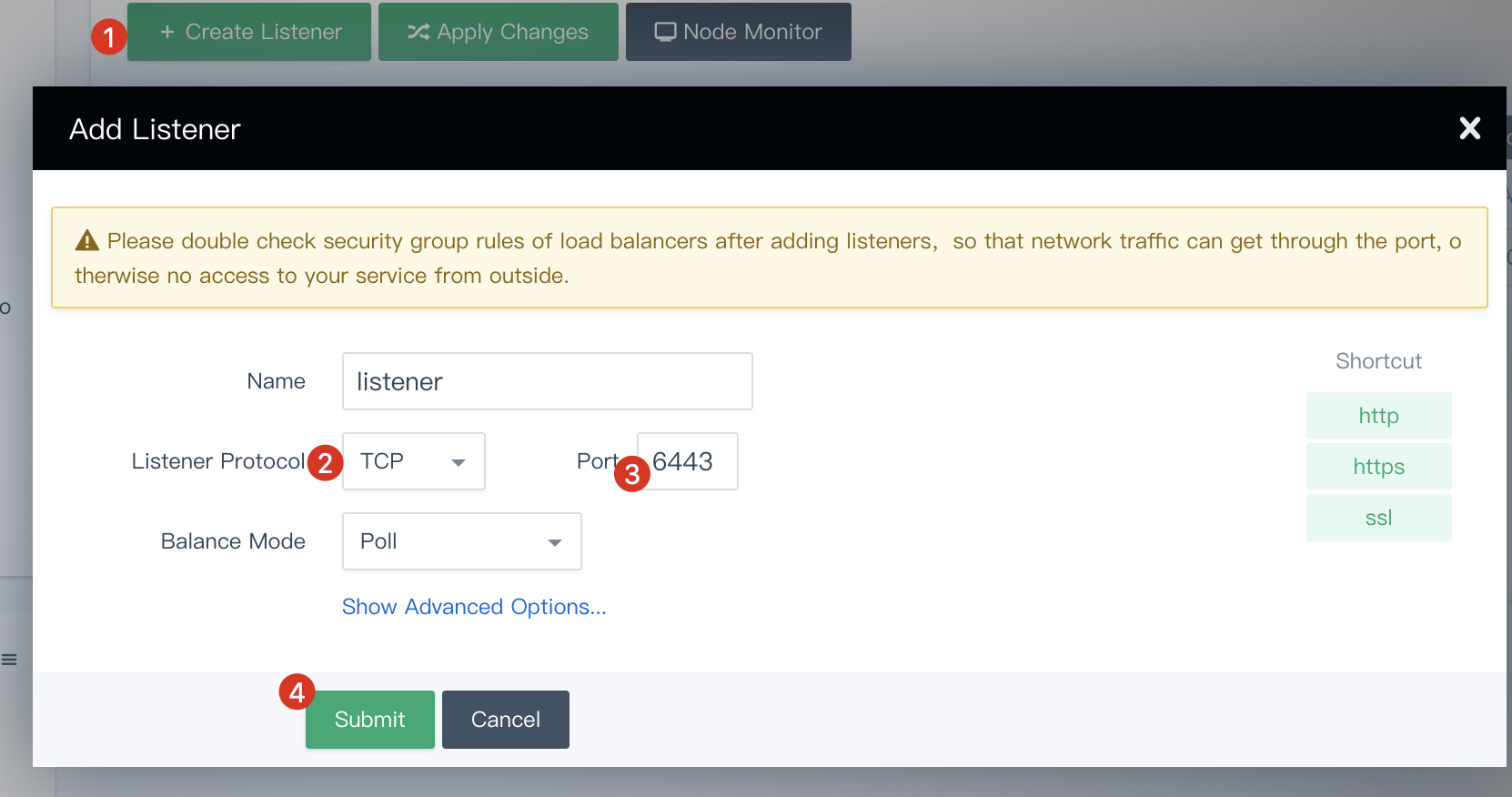

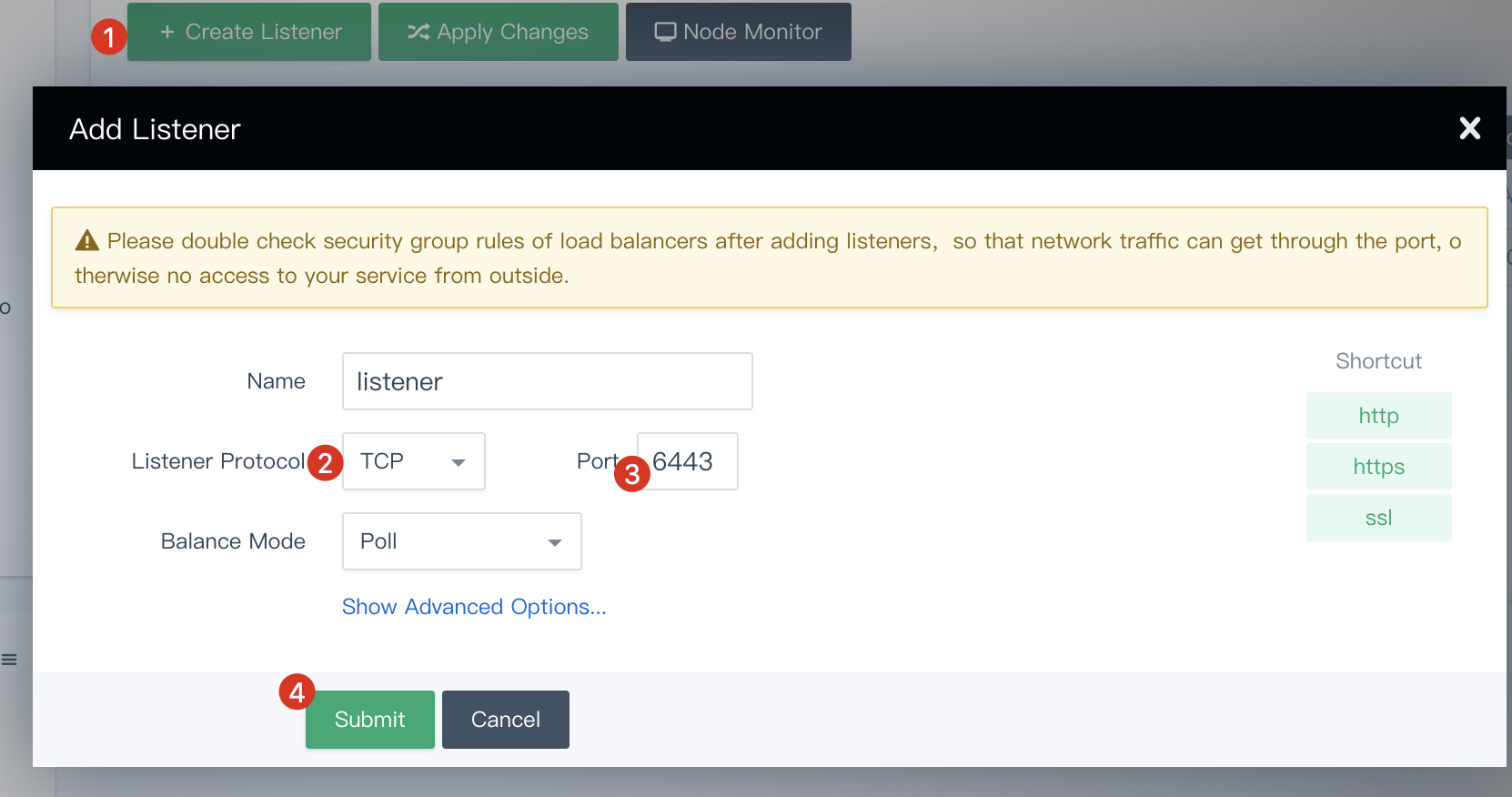

After you create the listener, please check the firewall rules of the load balancer. Make sure that the port `6443` has been added to the firewall rules and the external traffic can pass through `6443`. Otherwise, the installation will fail. If you are using QingCloud platform, you can find the information in **Security Groups** under **Security**.

|

||||

|

||||

{{</ notice >}}

|

||||

{{</ notice >}}
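To check from another machine whether traffic actually reaches a listener port such as `6443` (or `30880` later on), a minimal sketch of a TCP probe, assuming Bash built with `/dev/tcp` support and the coreutils `timeout` command; `port_open` is a hypothetical helper. Before the installation finishes, nothing behind the load balancer listens on `6443` yet, so a failed probe at that stage does not by itself mean the firewall rule is wrong.

```bash
# port_open HOST PORT [TIMEOUT_SECONDS]
# Hypothetical helper: succeeds if a TCP connection to HOST:PORT can be
# opened within the timeout (default 3 seconds).
port_open() {
  # Bash opens a TCP socket when redirecting to /dev/tcp/HOST/PORT;
  # a failed `exec` redirection makes the inner shell exit non-zero.
  timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}

# Example (not executed here; replace the placeholder with your address):
#   port_open <load-balancer-ip> 6443 && echo "6443 reachable"
```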

|

||||

|

||||

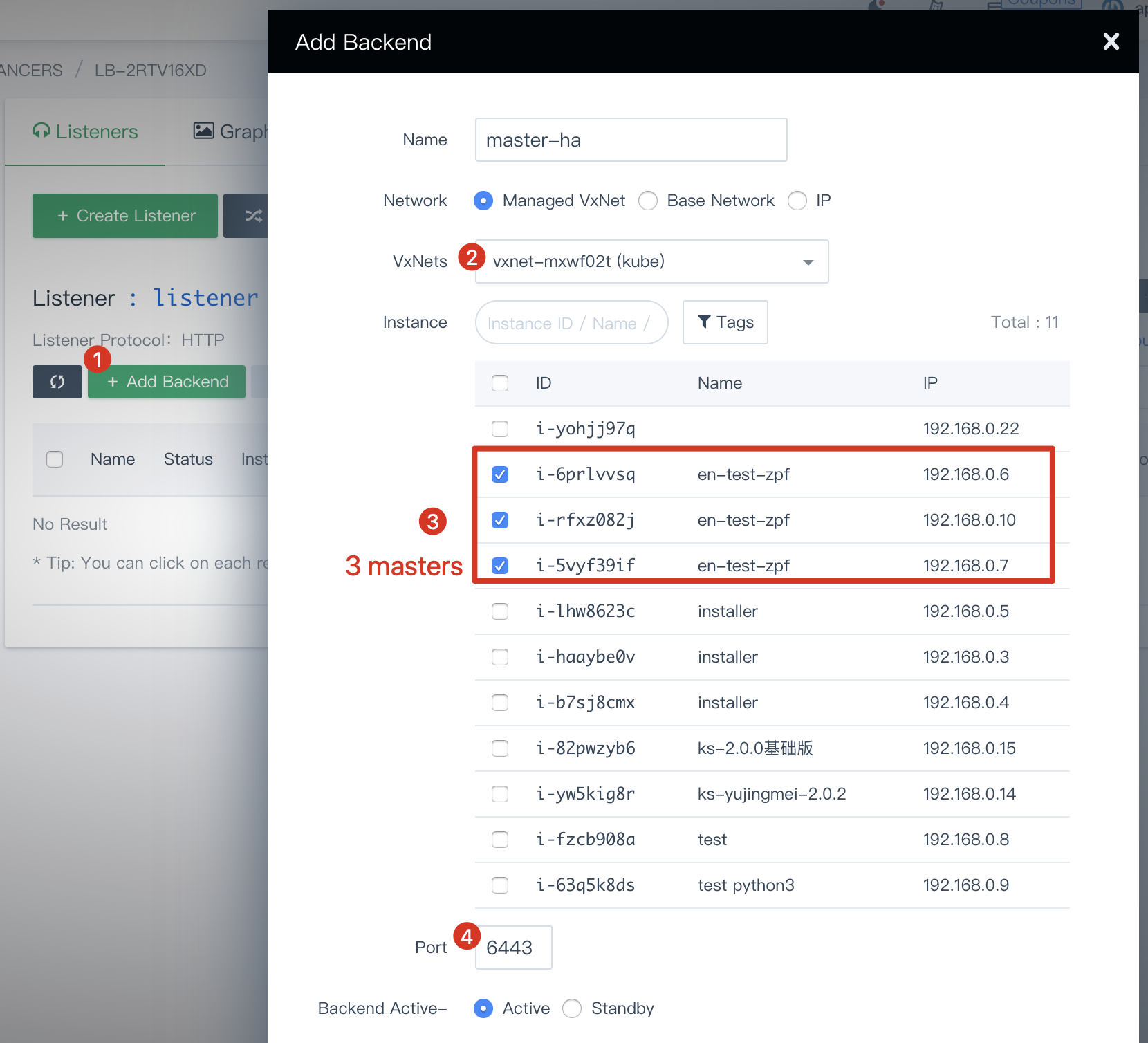

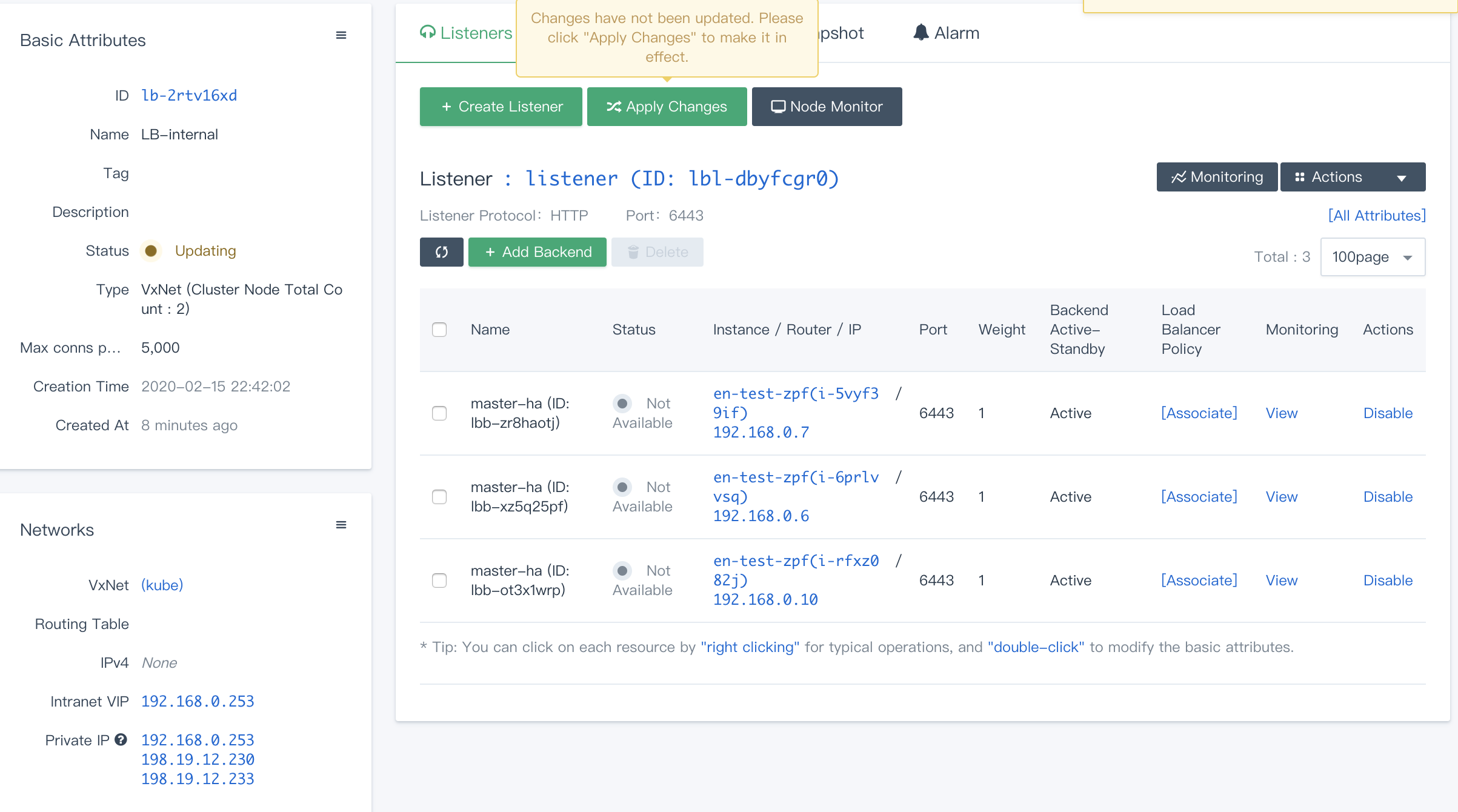

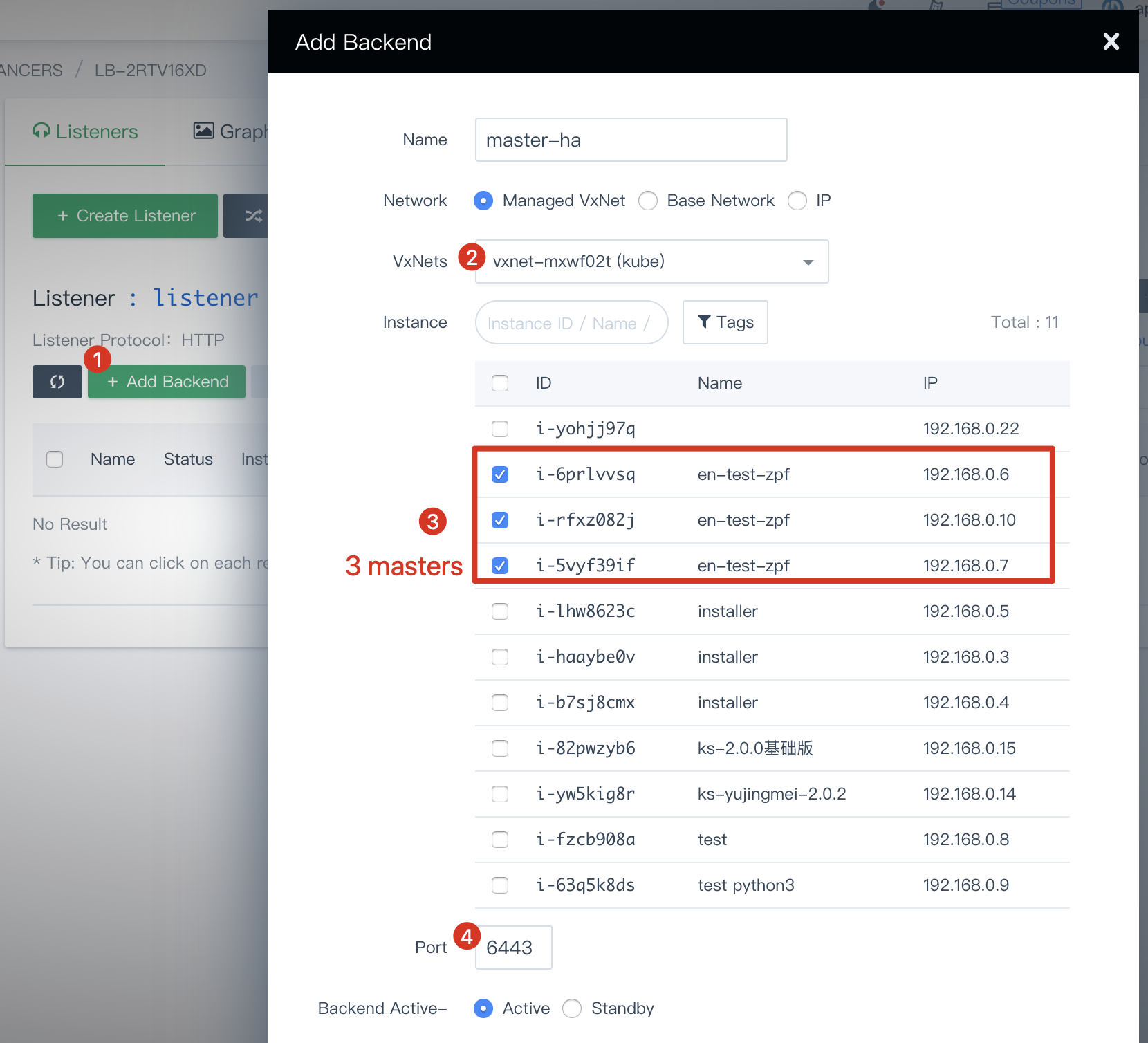

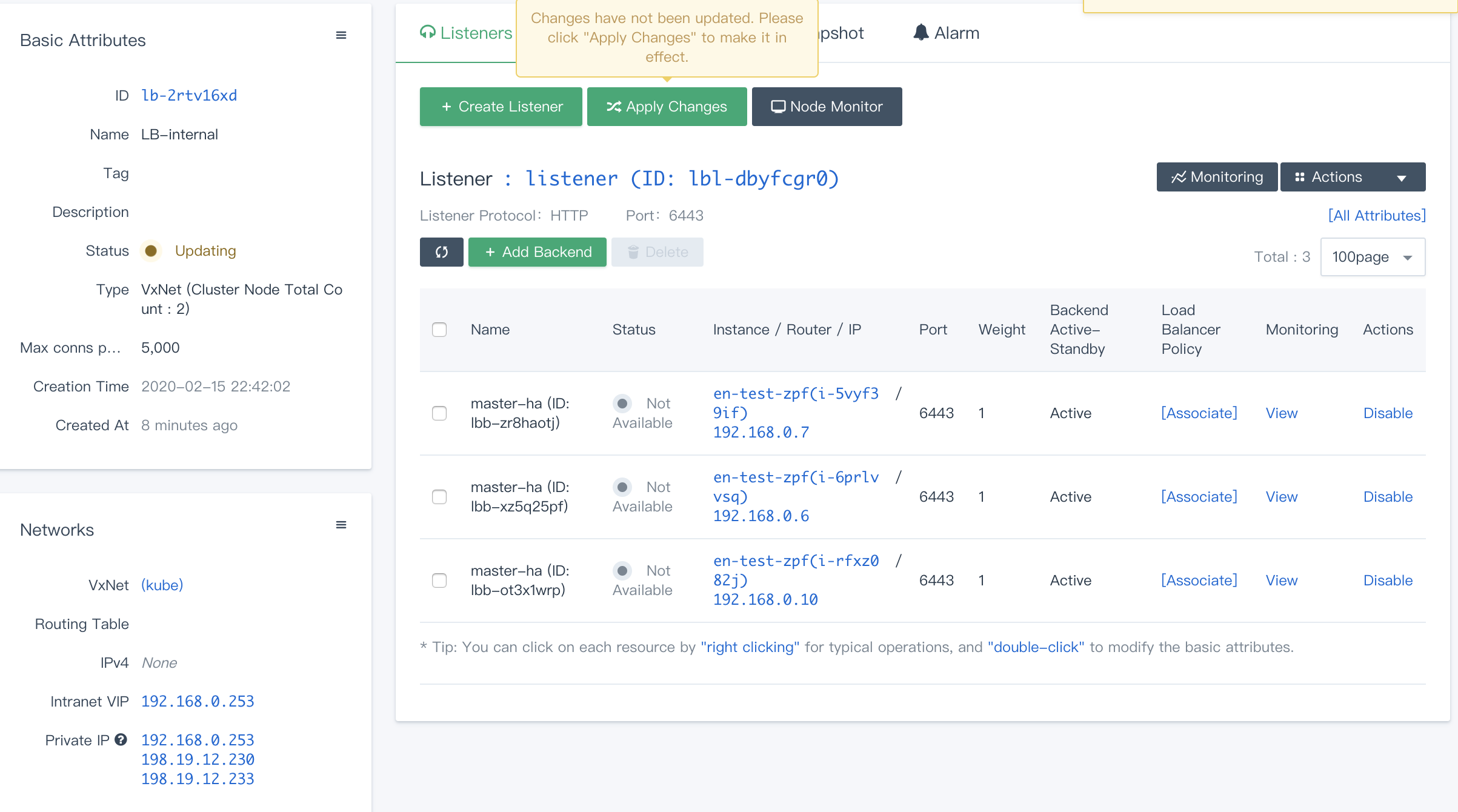

4. Click **Add Backend**, and choose the VxNet you just selected (in this example, it is `pn`). Click the button **Advanced Search**, choose the three master nodes, and set the port to `6443` which is the default secure port of api-server.

|

||||

|

||||

|

|

@ -75,7 +75,7 @@ Click **Submit** when you finish.

|

|||

|

||||

The status of all masters might show `Not Available` after you added them as backends. This is normal since the port `6443` of api-server is not active on master nodes yet. The status will change to `Active` and the port of api-server will be exposed after the installation finishes, which means the internal load balancer you configured works as expected.

|

||||

|

||||

{{</ notice >}}

|

||||

{{</ notice >}}

|

||||

|

||||

|

||||

|

||||

|

|

@@ -89,7 +89,7 @@ You need to create an EIP in advance. To create an EIP, go to **Elastic IPs** un

Two elastic IPs are needed for this whole tutorial, one for the VPC network and the other for the external load balancer created in this step. You cannot associate the same EIP with the VPC network and the load balancer at the same time.

{{</ notice >}}

{{</ notice >}}

6. Similarly, create an external load balancer, but do not select a VxNet for the Network field. Bind the EIP that you created to this load balancer by clicking **Add IPv4**.

@@ -101,7 +101,7 @@ Two elastic IPs are needed for this whole tutorial, one for the VPC network and

After you create the listener, check the firewall rules of the load balancer. Make sure that port `30880` has been added to the firewall rules and that external traffic can pass through `30880`. Otherwise, the installation will fail. If you are using the QingCloud platform, you can find this information in **Security Groups** under **Security**.

{{</ notice >}}

{{</ notice >}}

@@ -117,22 +117,47 @@ Click **Submit** when you finish.

[KubeKey](https://github.com/kubesphere/kubekey) is the next-generation installer for installing Kubernetes and KubeSphere v3.0.0 quickly, flexibly, and easily.

1. Download KubeKey and generate a configuration file to customize the installation as follows.

{{< tabs >}}

{{< tab "For users with poor network to GitHub" >}}

For users in China, you can download the installer using the following link.

```bash
curl -O -k https://kubernetes.pek3b.qingstor.com/tools/kubekey/kk
wget https://kubesphere.io/kubekey/releases/v1.0.0
```

{{</ tab >}}

{{< tab "For users with good network to GitHub" >}}

If you have good network access to GitHub, you can download KubeKey from the [GitHub Release Page](https://github.com/kubesphere/kubekey/releases/tag/v1.0.0) or use the following link directly.

```bash
wget https://github.com/kubesphere/kubekey/releases/download/v1.0.0/kubekey-v1.0.0-linux-amd64.tar.gz
```

{{</ tab >}}

{{</ tabs >}}

Unzip it.

```bash
tar -zxvf v1.0.0
```

Make `kk` executable:

```bash
chmod +x kk
```

2. Then create an example configuration file with default configurations. Here we use Kubernetes v1.17.9 as an example.

Then create an example configuration file with default configurations. Here we use Kubernetes v1.17.9 as an example.

```bash
./kk create config --with-kubesphere v3.0.0 --with-kubernetes v1.17.9
```

> Tip: These Kubernetes versions have been fully tested with KubeSphere: *v1.15.12*, *v1.16.13*, *v1.17.9* (default), *v1.18.6*.
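To catch a typo in `--with-kubernetes` before generating the configuration, a small guard can check the requested version against the tested list. A minimal sketch; `is_tested_k8s_version` is a hypothetical helper, and its list is copied from the tip above, so it needs updating for other KubeSphere releases:

```bash
# is_tested_k8s_version VERSION
# Hypothetical helper: succeeds only for the Kubernetes versions listed
# as fully tested with KubeSphere v3.0.0.
is_tested_k8s_version() {
  case "$1" in
    v1.15.12|v1.16.13|v1.17.9|v1.18.6) return 0 ;;
    *) return 1 ;;
  esac
}

# Example (not executed here):
#   K8S=v1.17.9
#   is_tested_k8s_version "$K8S" &&
#     ./kk create config --with-kubesphere v3.0.0 --with-kubernetes "$K8S"
```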

### Cluster Node Planning

@@ -195,7 +220,7 @@ In addition to the node information, you need to provide the load balancer infor

- The address and port should be indented by two spaces in `config-sample.yaml`, and the address should be the VIP.
- The domain name of the load balancer is `lb.kubesphere.local` by default for internal access. If you need to change the domain name, uncomment and modify it.

{{</ notice >}}

{{</ notice >}}
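A minimal sketch that prints the `controlPlaneEndpoint` section with the two-space indentation and default domain described above; `render_control_plane_endpoint` is a hypothetical helper. The section sits under `spec:` in `config-sample.yaml`, so indent the whole printed block one more level when you paste it there:

```bash
# render_control_plane_endpoint VIP [PORT] [DOMAIN]
# Hypothetical helper: prints a controlPlaneEndpoint section whose keys are
# indented by two spaces. Defaults: api-server port 6443 and the default
# internal domain lb.kubesphere.local.
render_control_plane_endpoint() {
  local vip="$1"
  local port="${2:-6443}"
  local domain="${3:-lb.kubesphere.local}"
  cat <<EOF
controlPlaneEndpoint:
  domain: ${domain}
  address: "${vip}"
  port: ${port}
EOF
}
```

For example, `render_control_plane_endpoint 10.10.71.67` uses the VIP from the Keepalived example; substitute the VIP of your own load balancer.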

After that, you can enable any components you need by following **Enable Pluggable Components** and start your HA cluster installation.

@@ -211,7 +236,7 @@ As we mentioned in the prerequisites, considering data persistence in a producti

For testing or development, you can skip this part. KubeKey will use the integrated OpenEBS to provision LocalPV as the storage service directly.

{{</ notice >}}

{{</ notice >}}

**Available Storage Plugins & Clients**

@@ -12,7 +12,7 @@ weight: 2343

You have already installed at least two KubeSphere clusters. If not, refer to [Installing on Linux](../../../installing-on-linux) or [Installing on Kubernetes](../../../installing-on-kubernetes).

{{< notice note >}}

Multi-cluster management requires KubeSphere to be installed on the target clusters. If you have an existing cluster, install a minimal KubeSphere on it as an agent. See [Installing Minimal KubeSphere on Kubernetes](../../../installing-on-kubernetes/minimal-kubesphere-on-k8s) for details.

Multi-cluster management requires KubeSphere to be installed on the target clusters. If you have an existing cluster, install a minimal KubeSphere on it as an agent. See [Installing Minimal KubeSphere on Kubernetes](../../installing-on-kubernetes/minimal-kubesphere-on-k8s) for details.

{{</ notice >}}

## Agent Connection

@@ -12,7 +12,7 @@ weight: 2340

You have already installed at least two KubeSphere clusters. If not, refer to [Installing on Linux](../../../installing-on-linux) or [Installing on Kubernetes](../../../installing-on-kubernetes).

{{< notice note >}}

Multi-cluster management requires KubeSphere to be installed on the target clusters. If you have an existing cluster, install a minimal KubeSphere on it as an agent. See [Installing Minimal KubeSphere on Kubernetes](../../../installing-on-kubernetes/minimal-kubesphere-on-k8s) for details.

Multi-cluster management requires KubeSphere to be installed on the target clusters. If you have an existing cluster, install a minimal KubeSphere on it as an agent. See [Installing Minimal KubeSphere on Kubernetes](../../installing-on-kubernetes/minimal-kubesphere-on-k8s) for details.

{{</ notice >}}

## Direct Connection

@@ -50,15 +50,15 @@ openpitrix:

### **Installing on Kubernetes**

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) for cluster setting. If you want to install App Store, do not use `kubectl apply -f` directly for this file.

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) for cluster setting. If you want to install App Store, do not use `kubectl apply -f` directly for this file.
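This commit replaces the `master` branch in the ks-installer download links with the `v3.0.0` tag, so the downloaded files no longer change as the branch moves. If you keep scripts or notes with the old links, a minimal sketch of the same substitution with `sed`; `pin_ks_installer_ref` is a hypothetical helper, and it only touches URLs under `kubesphere/ks-installer`:

```bash
# pin_ks_installer_ref [REF]
# Hypothetical helper: reads text on stdin and rewrites ks-installer URLs
# that point at the "master" branch so they point at REF (default v3.0.0).
pin_ks_installer_ref() {
  ref="${1:-v3.0.0}"
  # "#" is used as the sed delimiter because the pattern contains "/".
  sed "s#/kubesphere/ks-installer/master/#/kubesphere/ks-installer/${ref}/#g"
}

# Example (not executed here):
#   echo https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml |
#     pin_ks_installer_ref | xargs wget
```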

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml). After that, to enable the App Store, create a local file `cluster-configuration.yaml`.

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml). After that, to enable the App Store, create a local file `cluster-configuration.yaml`.

```bash
vi cluster-configuration.yaml
```

2. Copy all the content in the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) and paste it into the local file you just created.

2. Copy all the content in the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) and paste it into the local file you just created.

3. In this local `cluster-configuration.yaml` file, navigate to `openpitrix` and enable the App Store by changing `false` to `true` for `enabled`. Save the file after you finish.

```bash

@@ -64,15 +64,15 @@ es: # Storage backend for logging, tracing, events and auditing.

### **Installing on Kubernetes**

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) for cluster setting. If you want to install Auditing, do not use `kubectl apply -f` directly for this file.

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) for cluster setting. If you want to install Auditing, do not use `kubectl apply -f` directly for this file.
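Step 3 of this procedure is a one-line change per component. A minimal sketch that makes the same edit non-interactively with awk, assuming the layout of `cluster-configuration.yaml` in which each component key (such as `auditing:`) is directly followed by its own `enabled:` line; `enable_component` is a hypothetical helper that writes the result to stdout so you can review it before applying:

```bash
# enable_component COMPONENT FILE
# Hypothetical helper: prints FILE with "enabled: false" switched to
# "enabled: true" for the first enabled: line after the COMPONENT: key.
enable_component() {
  awk -v key="$1" '
    # Entering the block of the requested component.
    $1 == (key ":") { inblock = 1; print; next }
    # First enabled: line inside that block: flip false to true in place,
    # keeping the original indentation and any trailing comment.
    inblock && $1 == "enabled:" {
      sub(/enabled:[ \t]*false/, "enabled: true")
      inblock = 0
    }
    { print }
  ' "$2"
}

# Example (not executed here):
#   enable_component auditing cluster-configuration.yaml > cluster-configuration.new.yaml
```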

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml). After that, to enable Auditing, create a local file `cluster-configuration.yaml`.

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml). After that, to enable Auditing, create a local file `cluster-configuration.yaml`.

```bash
vi cluster-configuration.yaml
```

2. Copy all the content in the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) and paste it into the local file you just created.

2. Copy all the content in the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) and paste it into the local file you just created.

3. In this local `cluster-configuration.yaml` file, navigate to `auditing` and enable Auditing by changing `false` to `true` for `enabled`. Save the file after you finish.

```bash

@@ -48,15 +48,15 @@ devops:

### **Installing on Kubernetes**

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) for cluster setting. If you want to install DevOps, do not use `kubectl apply -f` directly for this file.

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) for cluster setting. If you want to install DevOps, do not use `kubectl apply -f` directly for this file.

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml). After that, to enable DevOps, create a local file `cluster-configuration.yaml`.

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml). After that, to enable DevOps, create a local file `cluster-configuration.yaml`.

```bash
vi cluster-configuration.yaml
```

2. Copy all the content in the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) and paste it into the local file you just created.

2. Copy all the content in the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) and paste it into the local file you just created.

3. In this local `cluster-configuration.yaml` file, navigate to `devops` and enable DevOps by changing `false` to `true` for `enabled`. Save the file after you finish.

```bash

@@ -63,15 +63,15 @@ es: # Storage backend for logging, tracing, events and auditing.

### **Installing on Kubernetes**

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) for cluster setting. If you want to install Logging, do not use `kubectl apply -f` directly for this file.

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) for cluster setting. If you want to install Logging, do not use `kubectl apply -f` directly for this file.

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml). After that, to enable Logging, create a local file `cluster-configuration.yaml`.

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml). After that, to enable Logging, create a local file `cluster-configuration.yaml`.

```bash
vi cluster-configuration.yaml
```

2. Copy all the content in the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) and paste it into the local file you just created.

2. Copy all the content in the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) and paste it into the local file you just created.

3. In this local `cluster-configuration.yaml` file, navigate to `logging` and enable Logging by changing `false` to `true` for `enabled`. Save the file after you finish.

```bash

@@ -46,15 +46,15 @@ servicemesh:

### **Installing on Kubernetes**

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/cluster-configuration.yaml) for cluster setting. If you want to install Service Mesh, do not use `kubectl apply -f` directly for this file.

When you install KubeSphere on Kubernetes, you need to download the file [cluster-configuration.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/cluster-configuration.yaml) for cluster setting. If you want to install Service Mesh, do not use `kubectl apply -f` directly for this file.

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/master/deploy/kubesphere-installer.yaml). After that, to enable Service Mesh, create a local file `cluster-configuration.yaml`.

1. In the tutorial [Installing KubeSphere on Kubernetes](https://kubesphere-v3.netlify.app/docs/installing-on-kubernetes/introduction/overview/), you first run `kubectl apply -f` for the file [kubesphere-installer.yaml](https://raw.githubusercontent.com/kubesphere/ks-installer/v3.0.0/deploy/kubesphere-installer.yaml). After that, to enable Service Mesh, create a local file `cluster-configuration.yaml`.

```bash
vi cluster-configuration.yaml
```