add devops

Signed-off-by: shaowenchen <mail@chenshaowen.com>

|

|

@ -4,7 +4,7 @@ description: "Getting started with KubeSphere DevOps project"

|

|||

layout: "single"

|

||||

|

||||

linkTitle: "DevOps User Guide"

|

||||

weight: 4400

|

||||

weight: 4000

|

||||

|

||||

icon: "/images/docs/docs.svg"

|

||||

|

||||

|

|

|

|||

|

|

@ -1,7 +0,0 @@

|

|||

---

|

||||

linkTitle: "DevOps Administration"

|

||||

weight: 2200

|

||||

|

||||

_build:

|

||||

render: false

|

||||

---

|

||||

|

|

@ -0,0 +1,7 @@

|

|||

---

|

||||

linkTitle: "Examples"

|

||||

weight: 400

|

||||

|

||||

_build:

|

||||

render: false

|

||||

---

|

||||

|

|

@ -0,0 +1,7 @@

|

|||

---

|

||||

linkTitle: "How to integrate"

|

||||

weight: 300

|

||||

|

||||

_build:

|

||||

render: false

|

||||

---

|

||||

|

|

@ -0,0 +1,157 @@

|

|||

---

|

||||

title: "How to integrate SonarQube in Pipeline"

|

||||

keywords: 'kubernetes, docker, devops, jenkins, sonarqube'

|

||||

description: ''

|

||||

linkTitle: "Integrate SonarQube in Pipeline"

|

||||

weight: 200

|

||||

---

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- You need to [enable KubeSphere DevOps System](../../../../docs/pluggable-components/devops/).

|

||||

|

||||

## Install SonarQube Server (Optional, If You Don't Have One)

|

||||

|

||||

Execute the following command to install SonarQube Server:

|

||||

|

||||

```bash
helm upgrade --install sonarqube sonarqube --repo https://charts.kubesphere.io/main -n kubesphere-devops-system --create-namespace --set service.type=NodePort
```

|

||||

|

||||

You will get this prompt:

|

||||

|

||||

|

||||

|

||||

## Get the Address of the SonarQube Console

|

||||

|

||||

Execute the following commands to get the SonarQube NodePort. In this example, the port returned is `31331`:

|

||||

|

||||

```bash
export NODE_PORT=$(kubectl get --namespace kubesphere-devops-system -o jsonpath="{.spec.ports[0].nodePort}" services sonarqube-sonarqube)
export NODE_IP=$(kubectl get nodes --namespace kubesphere-devops-system -o jsonpath="{.items[0].status.addresses[0].address}")
echo http://$NODE_IP:$NODE_PORT
```
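Before pasting the address into a browser, it can be worth sanity-checking the two values. The following is a minimal sketch, not part of KubeSphere: the function name `console_url` is hypothetical, and the check assumes the cluster uses the default Kubernetes NodePort range (30000-32767).

```shell
# Build the console URL from a node IP and a NodePort.
# Assumption: the cluster uses the default NodePort range 30000-32767.
console_url() {
  ip=$1
  port=$2
  # Reject empty or non-numeric ports (for example, when kubectl returned nothing).
  case $port in
    ''|*[!0-9]*) echo "invalid port: $port" >&2; return 1 ;;
  esac
  if [ "$port" -lt 30000 ] || [ "$port" -gt 32767 ]; then
    echo "port $port is outside the default NodePort range" >&2
    return 1
  fi
  printf 'http://%s:%s\n' "$ip" "$port"
}

# Sample values taken from this guide; replace them with $NODE_IP and $NODE_PORT.
console_url 192.168.6.11 31331
```

In practice you would call `console_url "$NODE_IP" "$NODE_PORT"` after running the `kubectl` commands above.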

|

||||

|

||||

## Configuration of SonarQube Server

|

||||

|

||||

### Access SonarQube Console

|

||||

|

||||

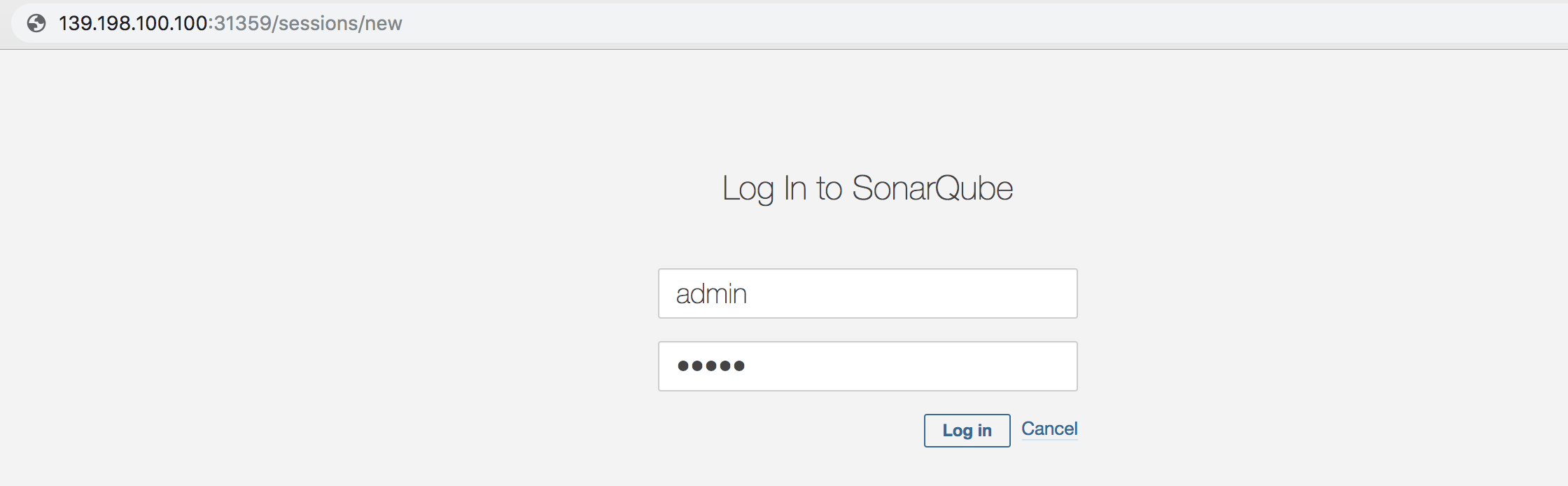

Now access the SonarQube console at `http://{$Node IP}:{$NodePort}` in your browser and log in with the default account `admin / admin`.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

{{< notice note >}}

|

||||

Make sure you have set up necessary port forwarding rules and open the port to access SonarQube in your security groups.

|

||||

{{</ notice >}}

|

||||

|

||||

### Create SonarQube Admin Token

|

||||

|

||||

1. Click `My Account` to go to your personal page.

|

||||

|

||||

|

||||

|

||||

2. Click `Security` and input a token name, such as kubesphere.

|

||||

|

||||

|

||||

|

||||

3. Click `Generate` and copy the token.

|

||||

|

||||

|

||||

|

||||

### Create a Webhook Server

|

||||

|

||||

Execute the following commands to get the address of the SonarQube webhook:

|

||||

|

||||

```bash
export NODE_PORT=$(kubectl get --namespace kubesphere-devops-system -o jsonpath="{.spec.ports[0].nodePort}" services ks-jenkins)
export NODE_IP=$(kubectl get nodes --namespace kubesphere-devops-system -o jsonpath="{.items[0].status.addresses[0].address}")
echo http://$NODE_IP:$NODE_PORT/sonarqube-webhook/
```

|

||||

|

||||

Click `Administration → Configuration → Webhooks` in turn to create a webhook.

|

||||

|

||||

|

||||

|

||||

Enter a name and the Jenkins console URL obtained above (the address ending in `/sonarqube-webhook/`).

|

||||

|

||||

|

||||

|

||||

## Configuration of KubeSphere

|

||||

|

||||

### Add Configuration of SonarQube to ks-installer

|

||||

|

||||

```bash
kubectl edit cc -n kubesphere-system ks-installer
```

|

||||

|

||||

Add `externalSonarUrl` and `externalSonarToken`, then save the change.
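As a rough sketch of what this edit might look like, the shell function below prints a YAML fragment containing the two keys. Only the key names `externalSonarUrl` and `externalSonarToken` come from this guide; the nesting under `devops.sonarqube`, the function name `sonar_fragment`, and the sample URL and token are assumptions for illustration, so check the layout against your own `ks-installer` resource before copying anything.

```shell
# Print a YAML fragment for the ks-installer resource.
# Assumption: the two keys live under devops.sonarqube inside the spec;
# verify this against your own resource before pasting.
sonar_fragment() {
  url=$1    # SonarQube console address, e.g. http://<node-ip>:<node-port>
  token=$2  # admin token generated in the SonarQube console
  cat <<EOF
devops:
  sonarqube:
    externalSonarUrl: $url
    externalSonarToken: $token
EOF
}

# Hypothetical values for illustration only.
sonar_fragment "http://192.168.6.11:31331" "<your-sonarqube-admin-token>"
```

Printing the fragment first, rather than patching the resource directly, keeps the token out of shell history for `kubectl` and lets you review the indentation before editing.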

|

||||

|

||||

|

||||

|

||||

### Add SonarQube Server to Jenkins

|

||||

|

||||

Execute the following command to get the address of Jenkins.

|

||||

|

||||

```bash
export NODE_PORT=$(kubectl get --namespace kubesphere-devops-system -o jsonpath="{.spec.ports[0].nodePort}" services ks-jenkins)
export NODE_IP=$(kubectl get nodes --namespace kubesphere-devops-system -o jsonpath="{.items[0].status.addresses[0].address}")
echo http://$NODE_IP:$NODE_PORT
```

|

||||

|

||||

On the Jenkins UI, you also need to add the SonarQube server.

|

||||

|

||||

|

||||

|

||||

### Add sonarqubeURL to KubeSphere Console

|

||||

|

||||

To jump to the SonarQube page directly from the KubeSphere console, you need to configure the following:

|

||||

|

||||

```bash
kubectl edit cm -n kubesphere-system ks-console-config
```

|

||||

|

||||

Add the following:

|

||||

|

||||

```yaml
client:
  devops:
    sonarqubeURL: http://192.168.6.11:31331
```

|

||||

|

||||

### Restart Services for the Changes to Take Effect

|

||||

|

||||

```bash
kubectl -n kubesphere-system rollout restart deploy ks-apiserver
```

```bash
kubectl -n kubesphere-system rollout restart deploy ks-console
```

|

||||

|

||||

## Create a SonarQube Token for a New Project

|

||||

|

||||

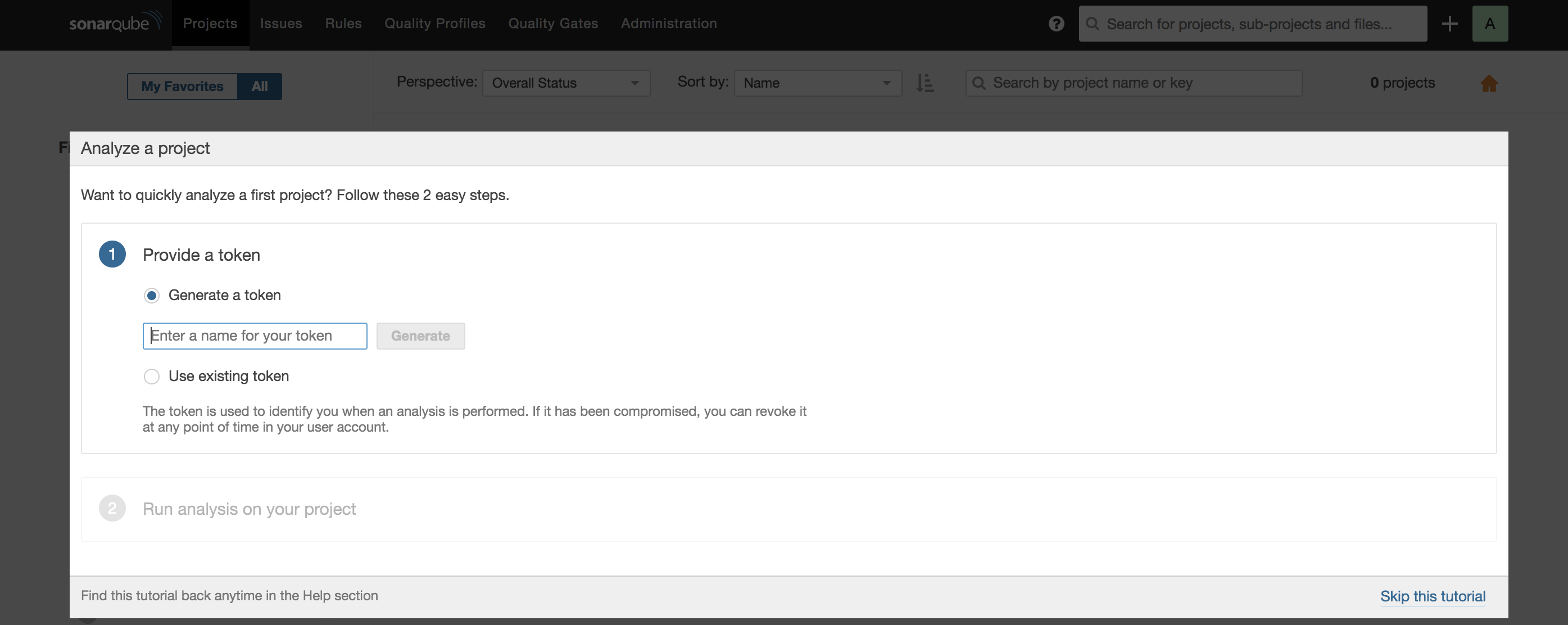

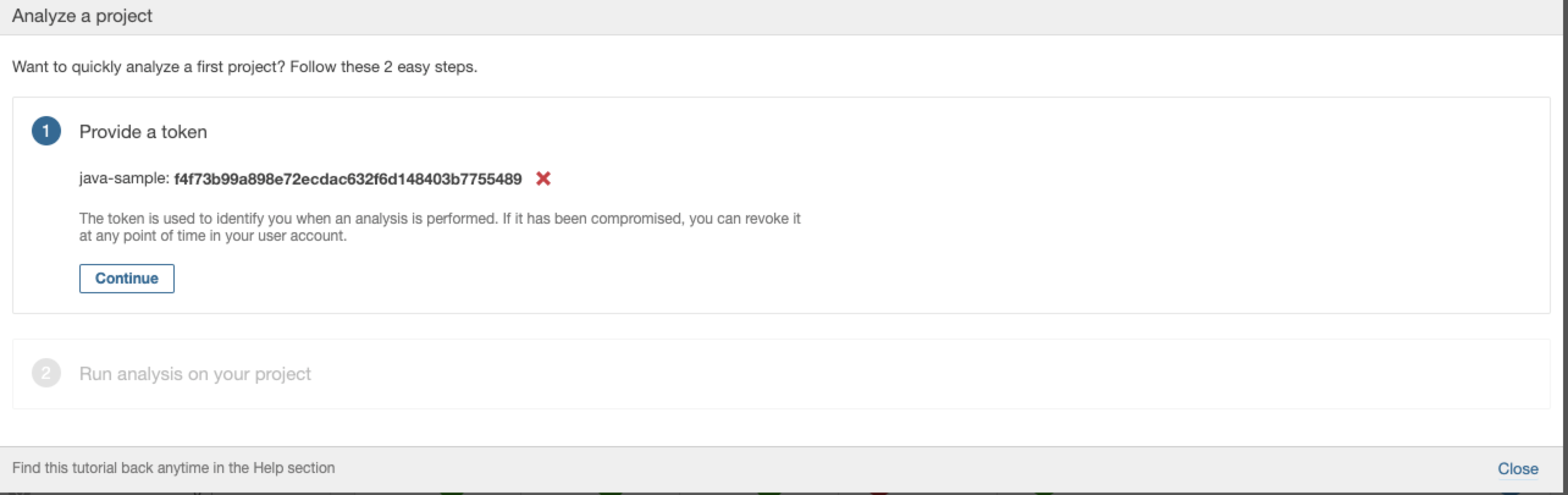

1. Click **Create new project** then a pop-up page **Analyze a project** shows up.

|

||||

|

||||

|

||||

|

||||

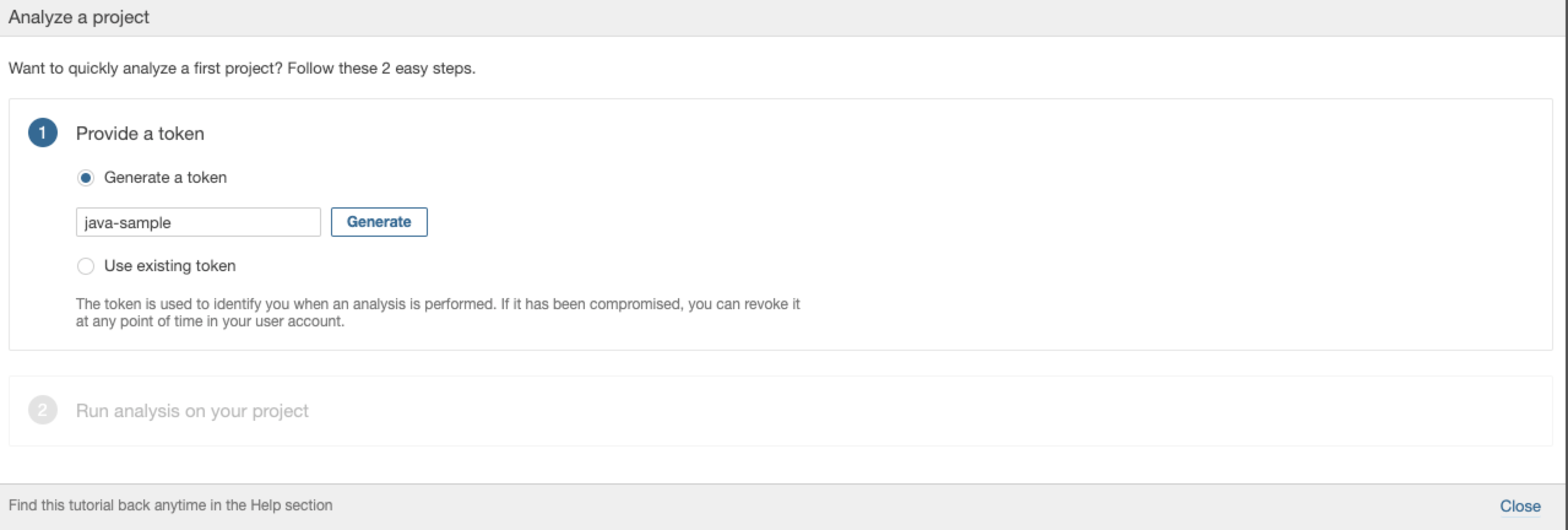

2. Enter a project name like `java-sample`, then click **Generate**.

|

||||

|

||||

|

||||

|

||||

3. The token has now been generated. Click **Continue**.

|

||||

|

||||

|

||||

|

||||

4. Choose **Java** and keep the default build tool `Maven`. Note that you only need to copy the highlighted serial number.

|

||||

|

||||

|

||||

|

||||

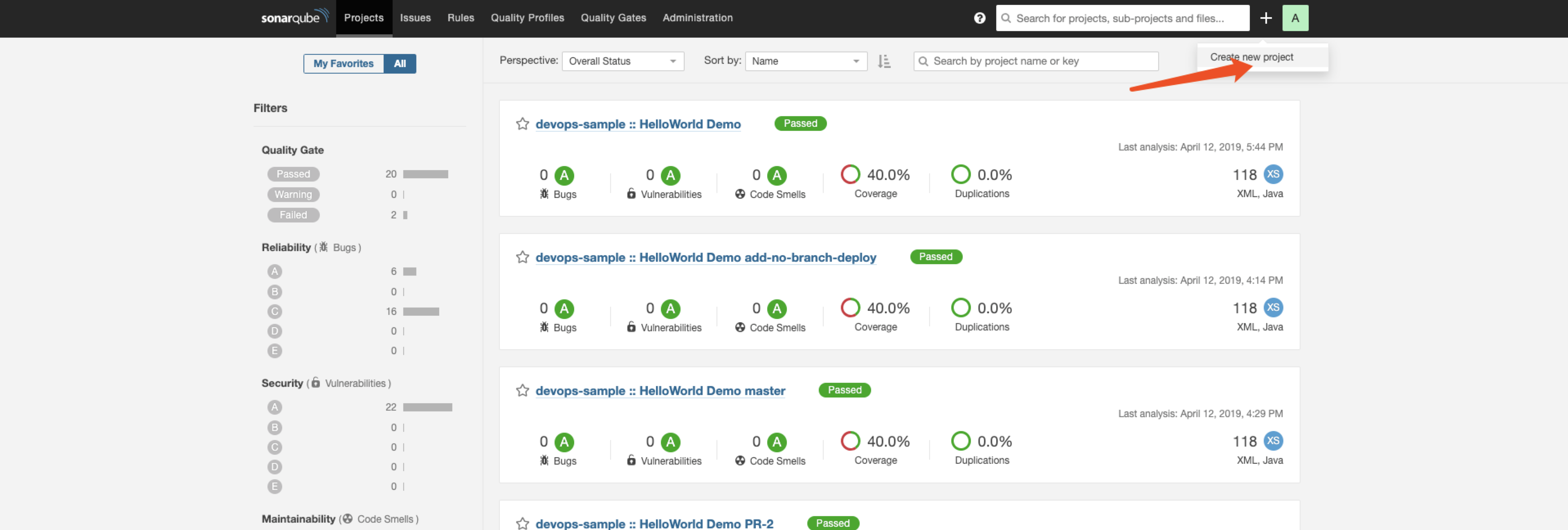

## View the Results on KubeSphere Console

|

||||

|

||||

Please refer to [Create a Pipeline - using Graphical Editing Panel](../../how-to-use/create-a-pipeline-using-graphical-editing-panel) or [Create a pipeline using jenkinsfile](../../how-to-use/create-a-pipeline-using-jenkinsfile) for configuration in the project.

|

||||

|

||||

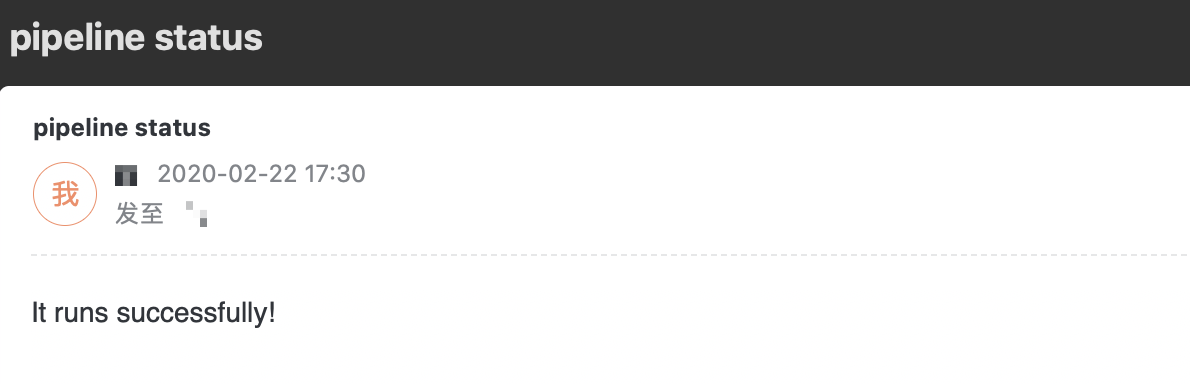

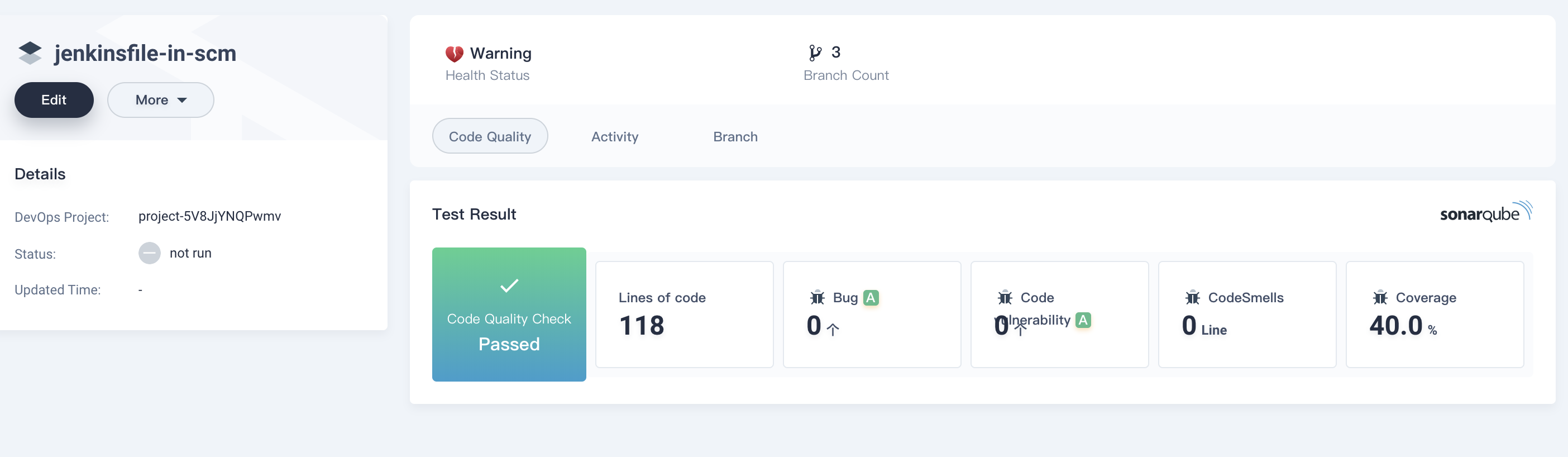

After the pipeline runs successfully, you will get:

|

||||

|

||||

|

||||

|

|

@ -0,0 +1,7 @@

|

|||

---

|

||||

linkTitle: "How to use"

|

||||

weight: 200

|

||||

|

||||

_build:

|

||||

render: false

|

||||

---

|

||||

|

|

@ -0,0 +1,136 @@

|

|||

---

|

||||

title: "Choose Jenkins Agent"

|

||||

keywords: 'kubernetes, docker, devops, jenkins, agent'

|

||||

description: ''

|

||||

---

|

||||

|

||||

## Intro

|

||||

|

||||

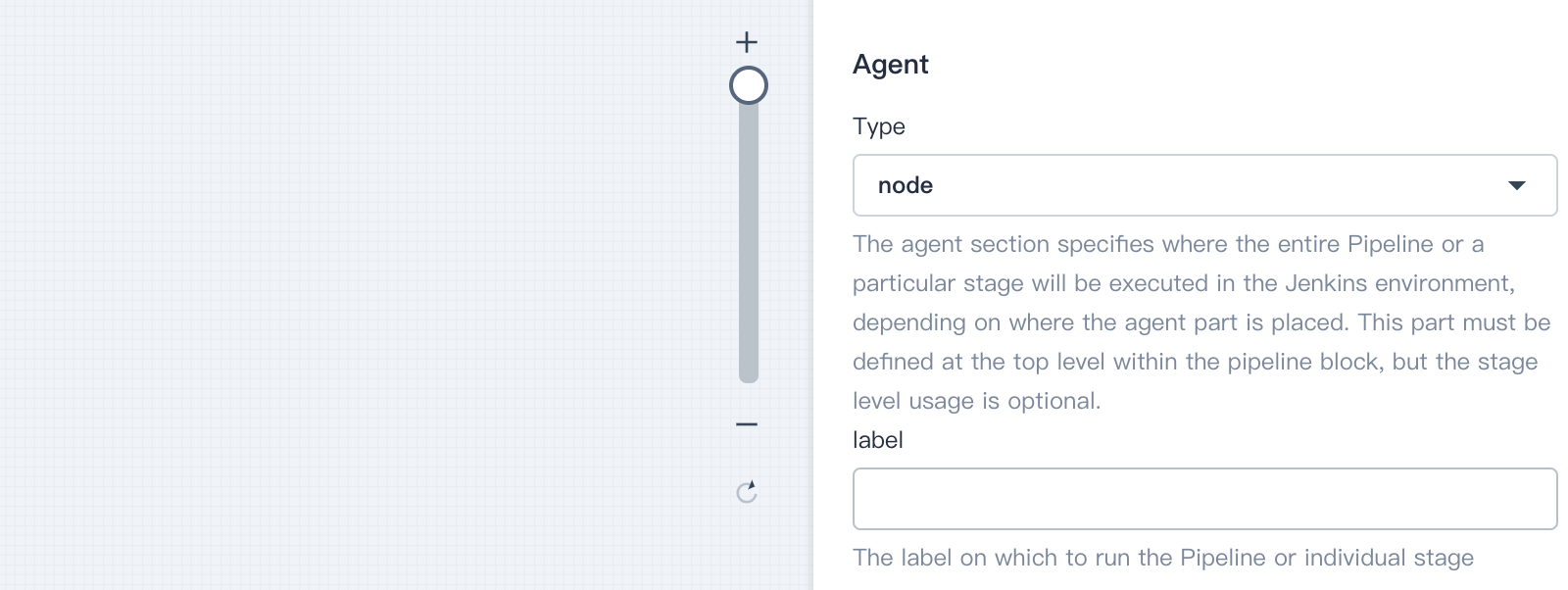

The agent section specifies where the entire Pipeline, or a specific stage, will execute in the Jenkins environment depending on where the agent section is placed. The section must be defined at the top-level inside the pipeline block, but stage-level usage is optional.

|

||||

|

||||

## Built-in podTemplate

|

||||

|

||||

A podTemplate is a template of a pod that is used to create agents. Users can define a podTemplate to use with the Kubernetes plugin.

When creating a pipeline, each Pod contains at least the jnlp container, which the Jenkins Master uses to communicate with the Jenkins Agent. In addition, users can add containers to the podTemplate to meet their own needs. Users can also supply their own Pod YAML to control the runtime flexibly, and switch between containers with the `container` command.

|

||||

|

||||

```groovy
pipeline {
  agent {
    kubernetes {
      //cloud 'kubernetes'
      label 'mypod'
      yaml """
apiVersion: v1
kind: Pod
spec:
  containers:
  - name: maven
    image: maven:3.3.9-jdk-8-alpine
    command: ['cat']
    tty: true
"""
    }
  }
  stages {
    stage('Run maven') {
      steps {
        container('maven') {
          sh 'mvn -version'
        }
      }
    }
  }
}
```

|

||||

|

||||

At the same time, to reduce the learning cost for users, we have built in some podTemplates so that users can avoid writing YAML files.

In the current version we have built in 4 types of podTemplates, i.e. `base`, `nodejs`, `maven`, and `go`, each of which provides an isolated Docker environment in the Pod.

You can use a built-in podTemplate by specifying the Agent's label. For example, to use the nodejs podTemplate, you can specify the label as `nodejs` when creating the Pipeline, as shown in the example below.

|

||||

|

||||

|

||||

|

||||

```groovy
pipeline {
  agent {
    node {
      label 'nodejs'
    }
  }

  stages {
    stage('nodejs hello') {
      steps {
        container('nodejs') {
          sh 'yarn -v'
          sh 'node -v'
          sh 'docker version'
          sh 'docker images'
        }
      }
    }
  }
}
```

|

||||

|

||||

|

||||

|

||||

### podTemplate base

|

||||

|

||||

| Name | Type / Version |
| --- | --- |
| Jenkins Agent Label | base |
| Container Name | base |
| OS | centos-7 |
| Docker | 18.06.0 |
| Helm | 2.11.0 |
| Kubectl | Stable release |
| Built-in Software | unzip, which, make, wget, zip, bzip2, git |

|

||||

|

||||

|

||||

### podTemplate nodejs

|

||||

|

||||

| Name | Type / Version |
| --- | --- |
| Jenkins Agent Label | nodejs |
| Container Name | nodejs |
| OS | centos-7 |
| Node | 9.11.2 |
| Yarn | 1.3.2 |
| Docker | 18.06.0 |
| Helm | 2.11.0 |
| Kubectl | Stable release |
| Built-in Software | unzip, which, make, wget, zip, bzip2, git |

|

||||

|

||||

|

||||

### podTemplate maven

|

||||

|

||||

| Name | Type / Version |
| --- | --- |
| Jenkins Agent Label | maven |
| Container Name | maven |
| OS | centos-7 |
| JDK | openjdk-1.8.0 |
| Maven | 3.5.3 |
| Docker | 18.06.0 |
| Helm | 2.11.0 |
| Kubectl | Stable release |
| Built-in Software | unzip, which, make, wget, zip, bzip2, git |

|

||||

|

||||

|

||||

### podTemplate go

|

||||

|

||||

| Name | Type / Version |
| --- | --- |
| Jenkins Agent Label | go |
| Container Name | go |
| OS | centos-7 |
| Go | 1.11 |
| GOPATH | /home/jenkins/go |
| GOROOT | /usr/local/go |
| Docker | 18.06.0 |
| Helm | 2.11.0 |
| Kubectl | Stable release |
| Built-in Software | unzip, which, make, wget, zip, bzip2, git |

|

||||

|

|

@ -0,0 +1,344 @@

|

|||

---

|

||||

title: "Create a Pipeline - using Graphical Editing Panel"

|

||||

keywords: 'KubeSphere, kubernetes, docker, jenkins, cicd, graphical pipeline'

|

||||

description: 'Create a Pipeline - using Graphical Editing Panel'

|

||||

|

||||

linkTitle: 'Create a Pipeline - using Graphical Editing Panel'

|

||||

weight: 300

|

||||

---

|

||||

|

||||

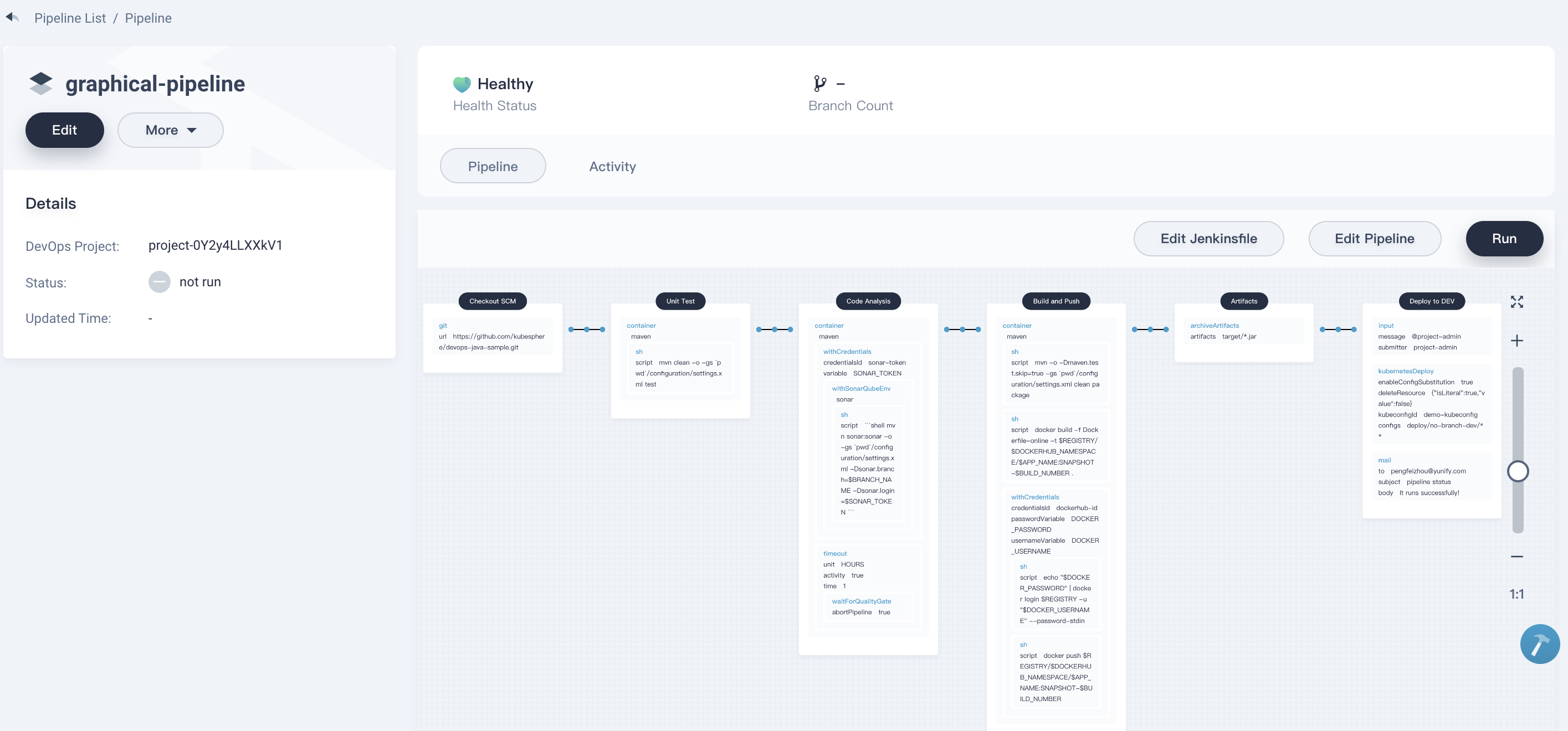

We are going to show how to create a CI/CD pipeline without Jenkinsfile by visually editing the workflow through KubeSphere console.

|

||||

|

||||

## Objective

|

||||

|

||||

We will use the graphical editing panel in the KubeSphere console to create a pipeline that automates the build process and releases the sample project to a Kubernetes development environment. If you have tried the Jenkinsfile-based pipeline, the build steps in this tutorial are easy to understand. The sample project in this tutorial is the same as this [demo](https://github.com/kubesphere/devops-java-sample/tree/sonarqube).

|

||||

|

||||

## Prerequisites

|

||||

|

||||

- You need to [enable KubeSphere DevOps System](../../../../docs/pluggable-components/devops/).

|

||||

- You need to create a [DockerHub](http://www.dockerhub.com/) account.

|

||||

- You need to create a workspace, a DevOps project, and a **project-regular** user account, and this account needs to be invited into the DevOps project as the role of maintainer.

|

||||

- Configure email server for notification in pipeline, please refer to [Set Email Server for KubeSphere Pipeline](../../how-to-use/jenkins-email/).

|

||||

- Set CI dedicated node for building pipeline, please refer to [Set CI Node for Dependency Cache](../../set-ci-node/).

|

||||

- You need to install and configure SonarQube. Please refer to [How to integrate SonarQube in Pipeline](../../../how-to-integrate/sonarqube/). Alternatively, you can skip this part, in which case there will be no **Code Analysis** stage below.

|

||||

|

||||

## Pipeline Overview

|

||||

|

||||

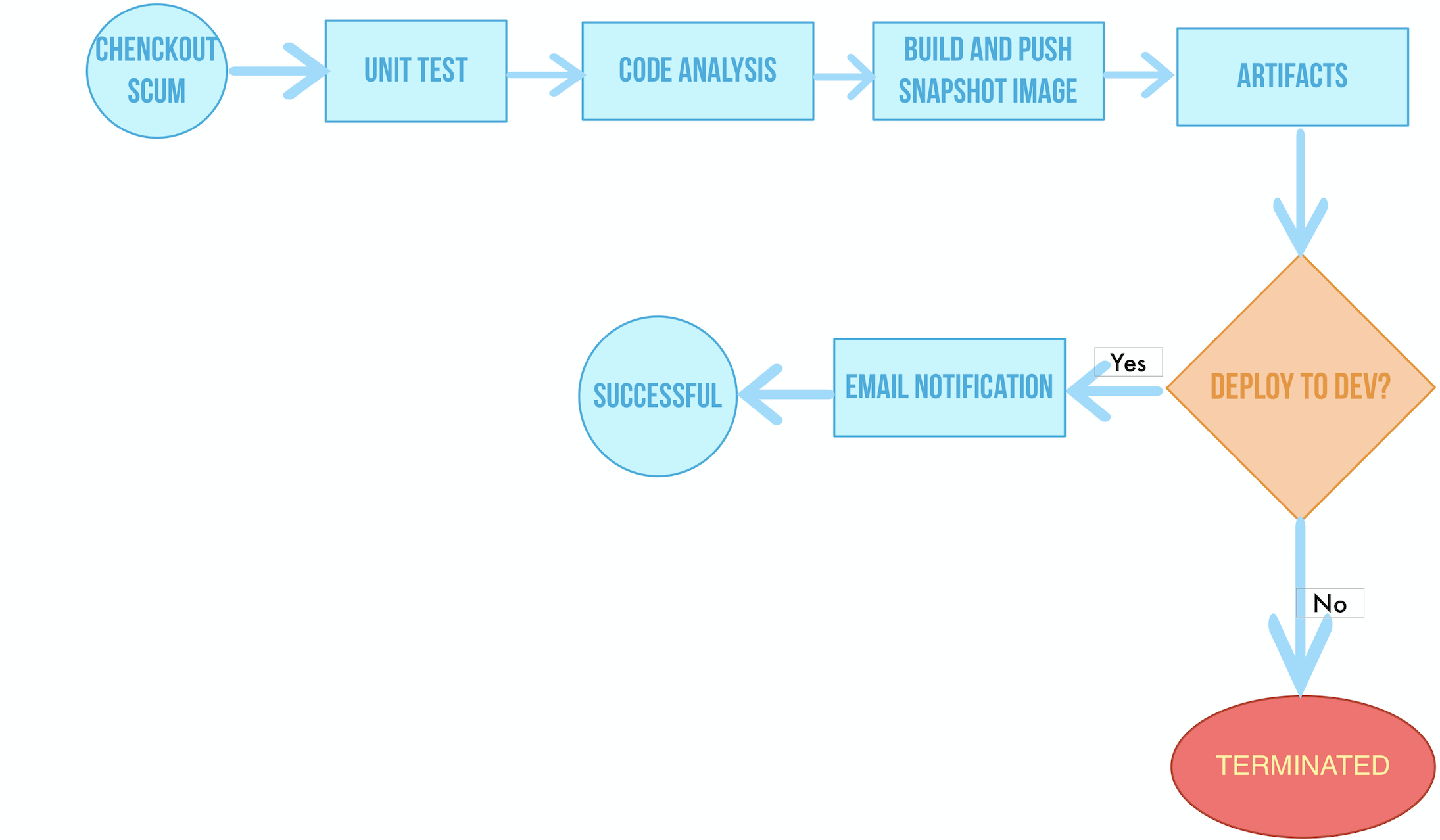

The sample pipeline includes the following six stages.

|

||||

|

||||

|

||||

|

||||

> To elaborate on every stage:
>
> - **Stage 1. Checkout SCM**: Pull the GitHub repository code;
> - **Stage 2. Unit test**: The pipeline continues to the next stage only if the unit test passes;
> - **Stage 3. Code Analysis**: Configure SonarQube for static code quality check and analysis;
> - **Stage 4. Build and Push**: Build the image and push it to DockerHub with the tag `SNAPSHOT-$BUILD_NUMBER`, where `$BUILD_NUMBER` is the serial number of the run in the pipeline's activity list;
> - **Stage 5. Artifacts**: Generate the artifact (jar package) and save it;
> - **Stage 6. Deploy to DEV**: Deploy the project to the development environment. This stage requires an approval. An email will be sent after the deployment is successful.

|

||||

|

||||

## Hands-on Lab

|

||||

|

||||

### Step 1: Create Credentials

|

||||

|

||||

We need to create **three** credentials, for DockerHub, Kubernetes, and SonarQube respectively. If you have finished the last lab [Create a Jenkinsfile-based Pipeline for Spring Boot Project](../devops-online#step-1-create-credentials), you have already created these credentials. Otherwise, please refer to [create credentials](../devops-online#step-1-create-credentials) to create the credentials used in the pipeline.

|

||||

|

||||

|

||||

|

||||

### Step 2: Create Project

|

||||

|

||||

The sample pipeline deploys the [sample](https://github.com/kubesphere/devops-java-sample) to a Kubernetes namespace, so we need to create a project in KubeSphere. If you have not finished the last lab, please refer to the [step](../create-a-pipeline-using-jenkinsfile/#step-3-create-projects) to create a project named `kubesphere-sample-dev` using the account `project-admin`, then invite the account `project-regular` into this project and assign it the role of `operator`.

|

||||

|

||||

### Step 3: Create Pipeline

|

||||

|

||||

Follow the steps below to create a pipeline using graphical editing panel.

|

||||

|

||||

#### Fill in the basic information

|

||||

|

||||

3.1. In the DevOps project, select the **Pipeline** on the left and click **Create**.

|

||||

|

||||

|

||||

|

||||

3.2. In the pop-up window, name it `graphical-pipeline`, click **Next**.

|

||||

|

||||

#### Advanced Settings

|

||||

|

||||

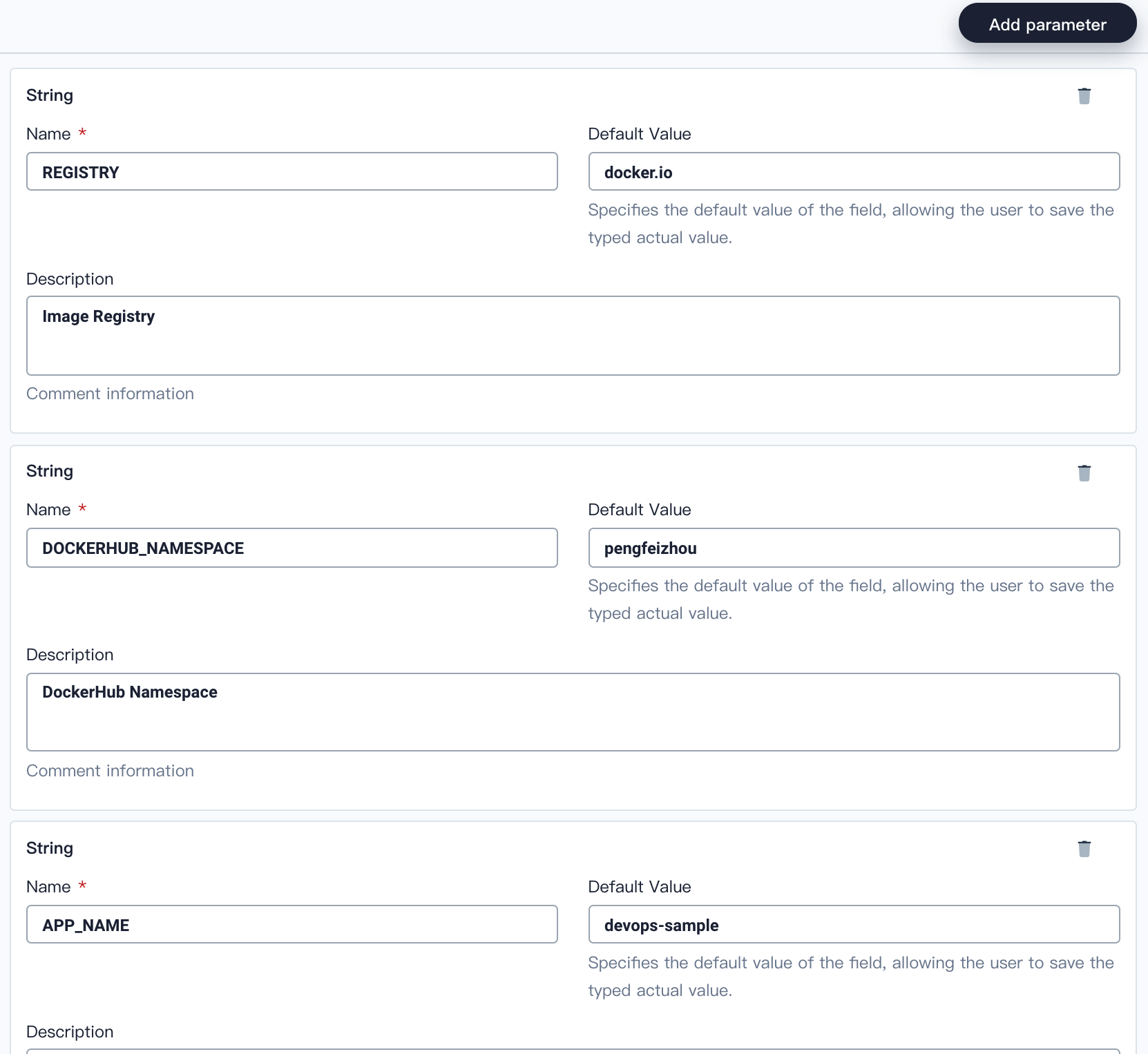

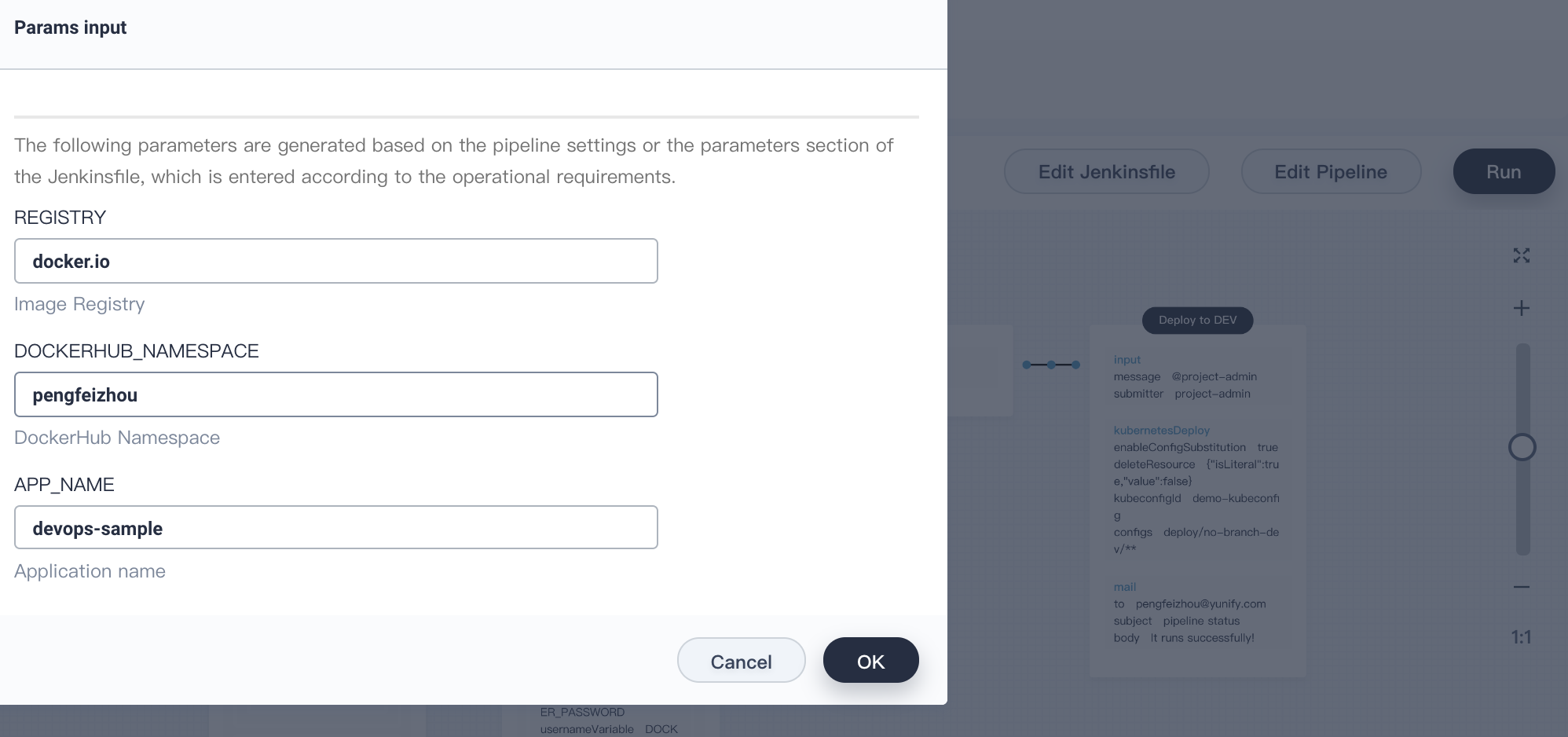

3.3. Keep clicking **Add Parameter** to add **three** string parameters as follows. These parameters will be used in the Docker command of the pipeline. Click **Create** when you are done.

|

||||

|

||||

| Parameter Type | Name | Default Value | Description |
| --- | --- | --- | --- |
| String | REGISTRY | The sample repository address is `docker.io`. | Image Registry |
| String | DOCKERHUB_NAMESPACE | Fill in your DockerHub account, which can also be the Organization name under the account. | DockerHub Namespace |
| String | APP_NAME | Fill in the application name `devops-sample`. | Application Name |

|

||||

|

||||

|

||||

|

||||

### Step 4: Edit Pipeline

|

||||

|

||||

This pipeline consists of six stages. We will demonstrate the steps and tasks in each stage.

|

||||

|

||||

#### Stage I: Pull Source Code (Checkout SCM)

|

||||

|

||||

The graphical editing panel includes two areas: the **canvas** on the left and the **content** area on the right. After you create a pipeline in the panel, it generates the Jenkinsfile automatically, which is much more user-friendly for developers.

|

||||

|

||||

> Note: Pipeline includes `scripted pipeline` and `declarative pipeline`, and the panel supports `declarative pipeline`. For pipeline syntax, see [Jenkins Documentation](https://jenkins.io/doc/book/pipeline/syntax/).

|

||||

|

||||

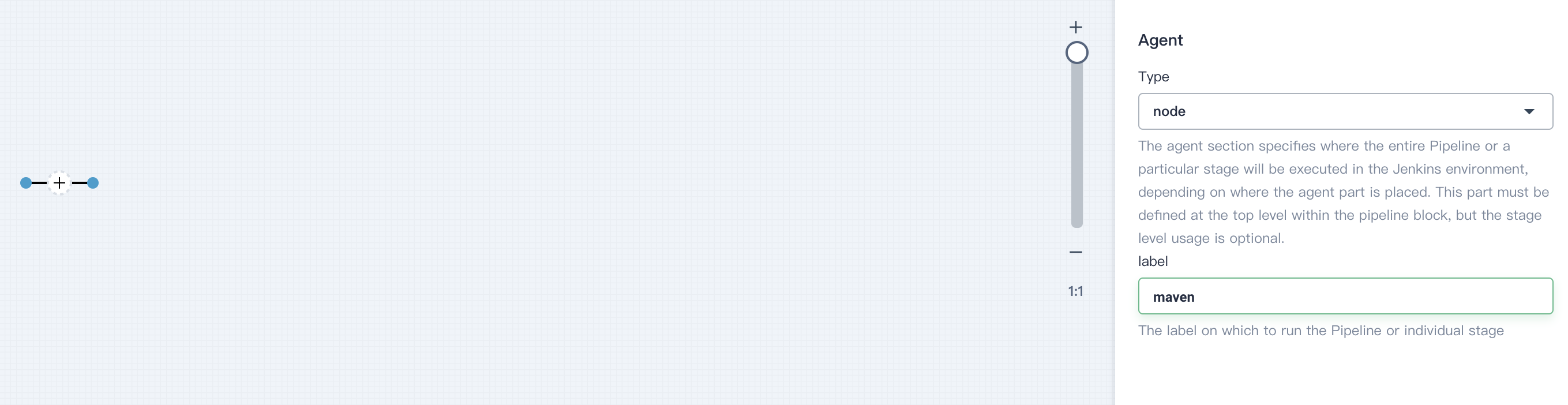

4.1.1. As follows, select **node** from the drop-down list of **agent type** in the content area, input `maven` in the label.

|

||||

|

||||

> Note: The agent is used to define execution environment. The agent directive tells Jenkins where and how to execute the pipeline or a specific stage. Please refer to [Jenkins Agent](https://jenkins.io/doc/pipeline/tour/agents/) for further information.

|

||||

|

||||

|

||||

|

||||

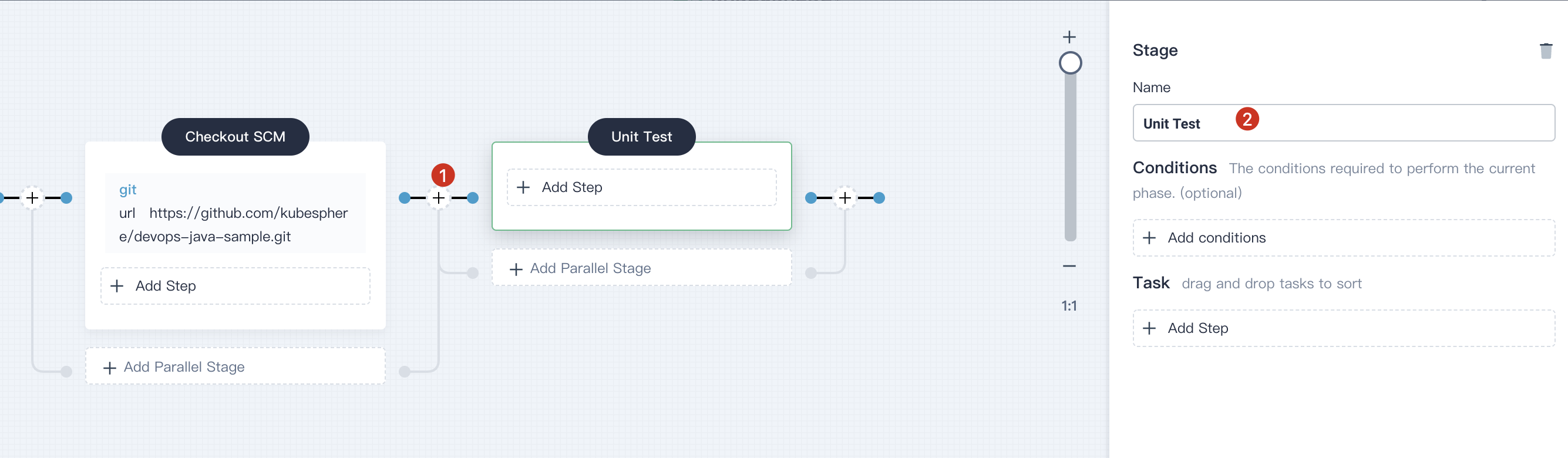

4.1.2. In the canvas area, click the **+** button to add a stage. Click the box with title `No Name` that encloses the box **Add Step**, name it `Checkout SCM` in the content area on the right of the panel.

|

||||

|

||||

|

||||

|

||||

4.1.3. Click **Add Step**. Select **git** from the content area. For now, fill in the pop-up window as follows:

|

||||

|

||||

- Url: Input the GitHub repository URL `https://github.com/kubesphere/devops-java-sample.git`. Please replace the URL with your own repository.
- Branch: Input `sonarqube`. If you don't want to use Code Analysis, you can input `master` or ignore it.
- Credential ID: Leave it blank, as it is only needed for a private repository.

|

||||

|

||||

When you are done, click **OK** to save it and you will see the first stage created.

|

||||

|

||||

|

||||

|

||||

#### Stage II: Unit Test

|

||||

|

||||

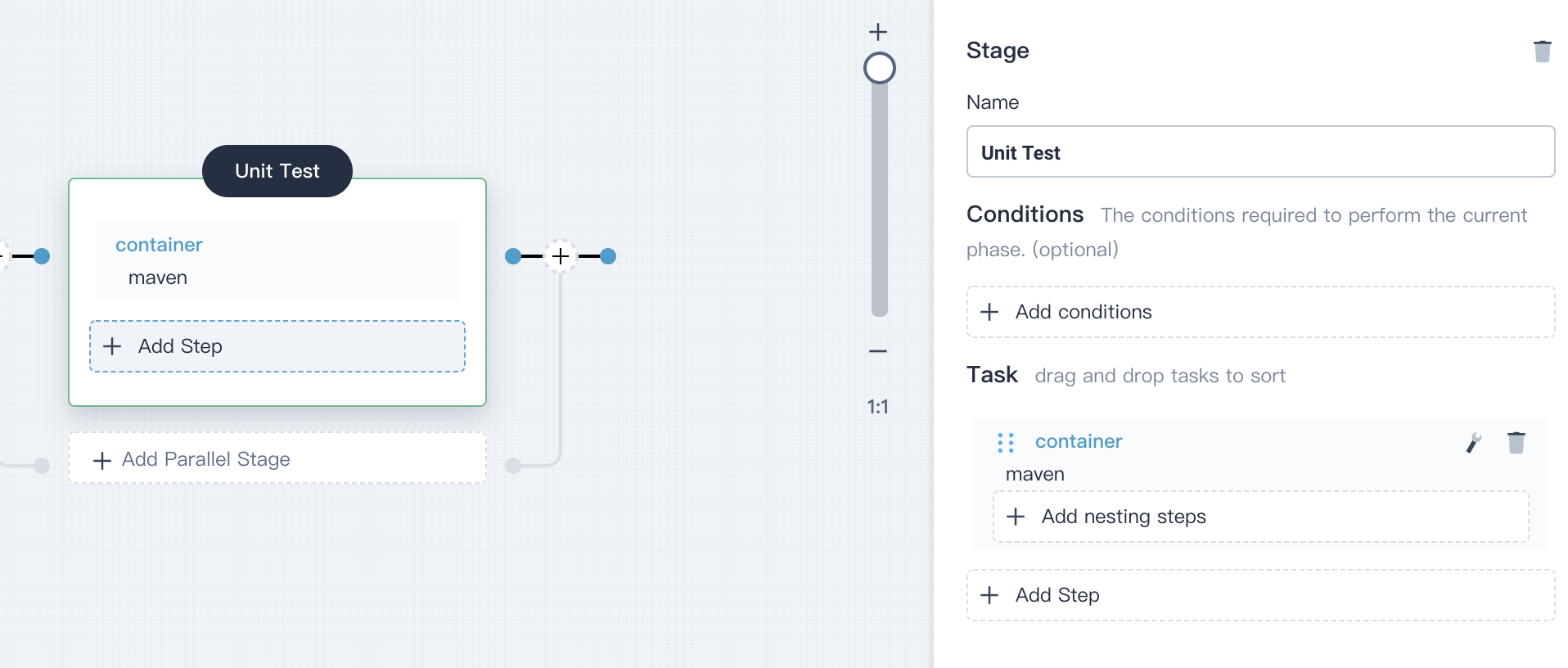

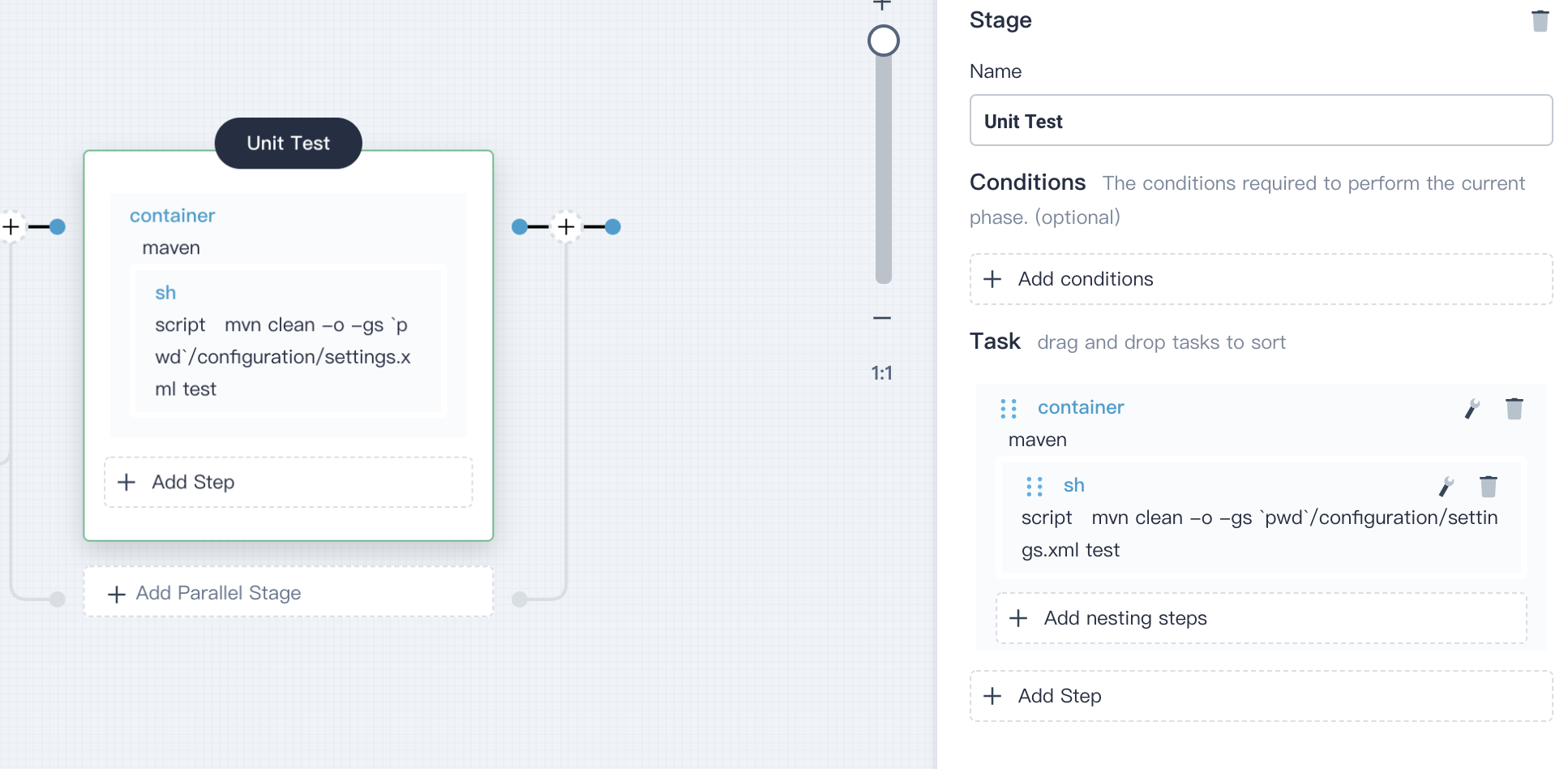

4.2.1. Click **+** on the right of the stage **Checkout SCM** to add another stage for performing a unit test in the container, name it `Unit Test`.

|

||||

|

||||

|

||||

|

||||

4.2.2. Click **Add Step** and select **container**, name it `maven`, then click **OK**.

|

||||

|

||||

|

||||

|

||||

4.2.3. In the content area, click **Add nesting steps** in the `maven` container created above to add a nested step. Then select **shell** and enter the following command in the pop-up window:

|

||||

|

||||

```bash
mvn clean -o -gs `pwd`/configuration/settings.xml test
```

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

#### Stage III: Code Analysis

|

||||

|

||||

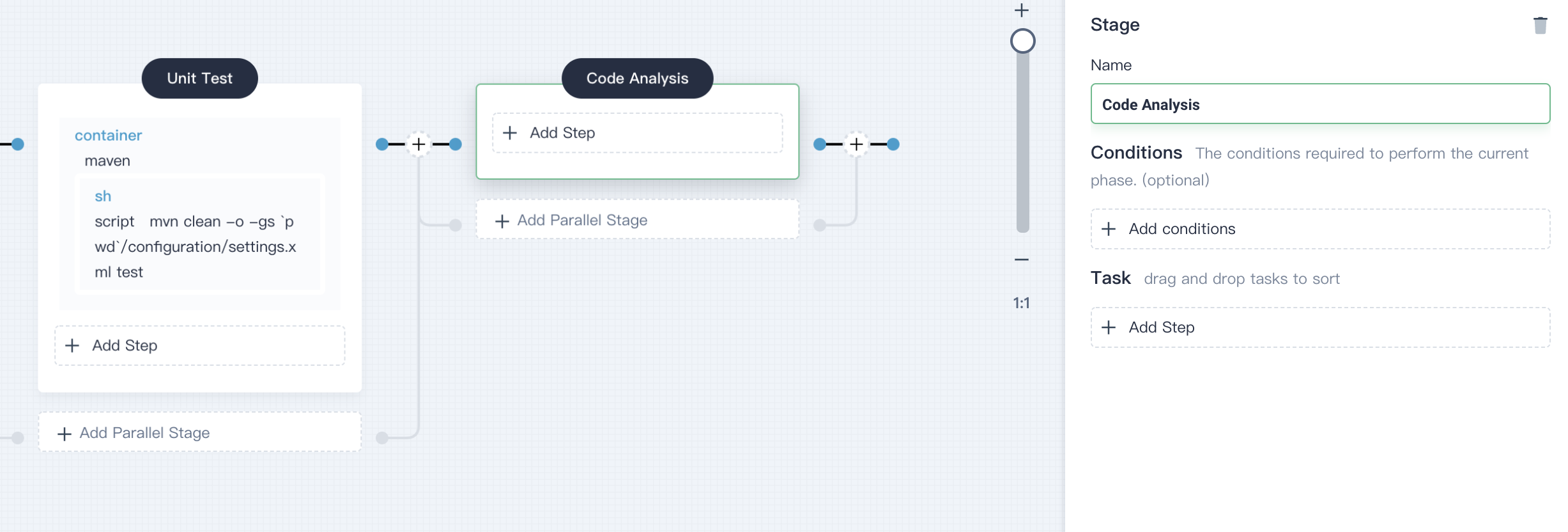

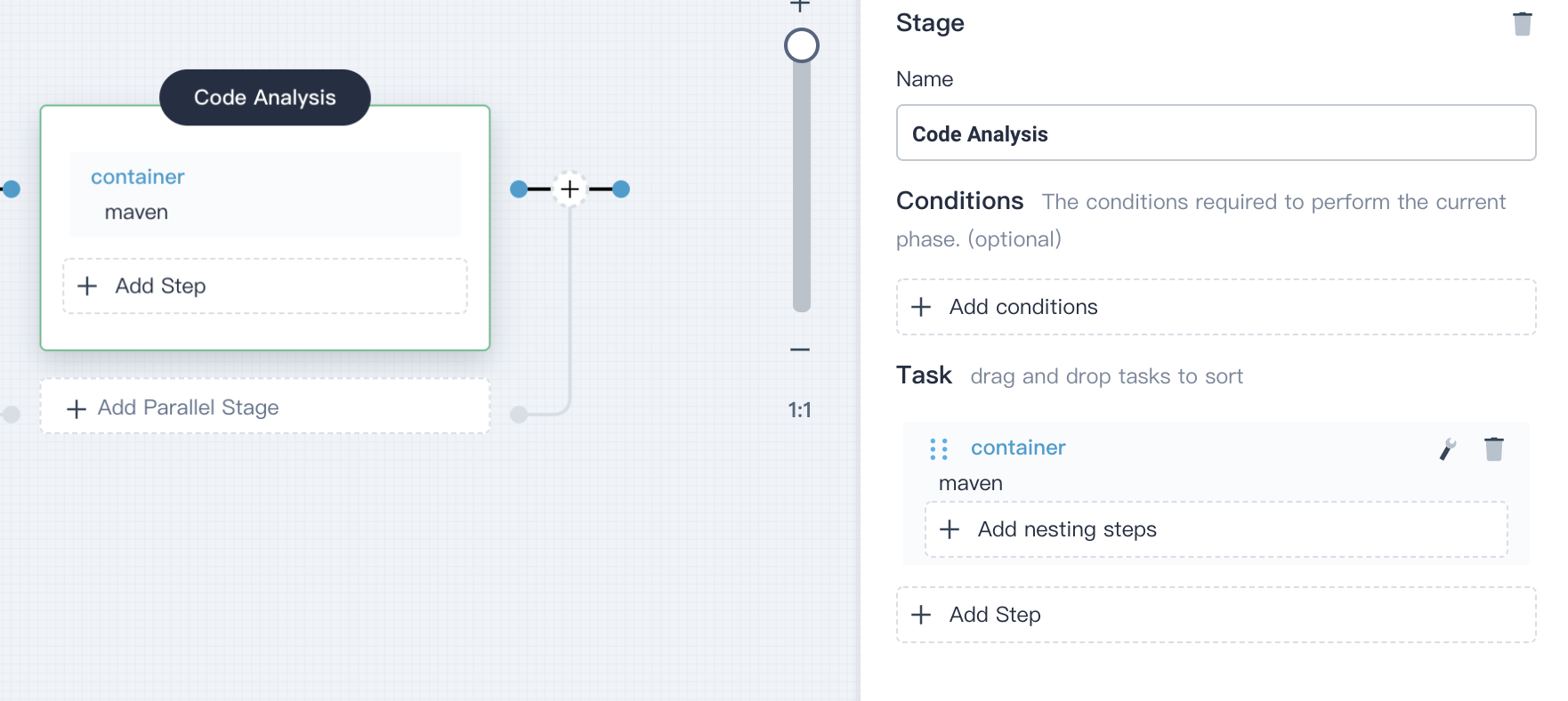

4.3.1. Same as above, click **+** on the right of the stage **Unit Test** to continue adding a stage for configuring SonarQube, which is used to perform static code quality analysis in the container, name it `Code Analysis`.

|

||||

|

||||

|

||||

|

||||

4.3.2. Click **Add Step** in **Code Analysis**, select **container**, name it `maven`, then click **OK**.

|

||||

|

||||

|

||||

|

||||

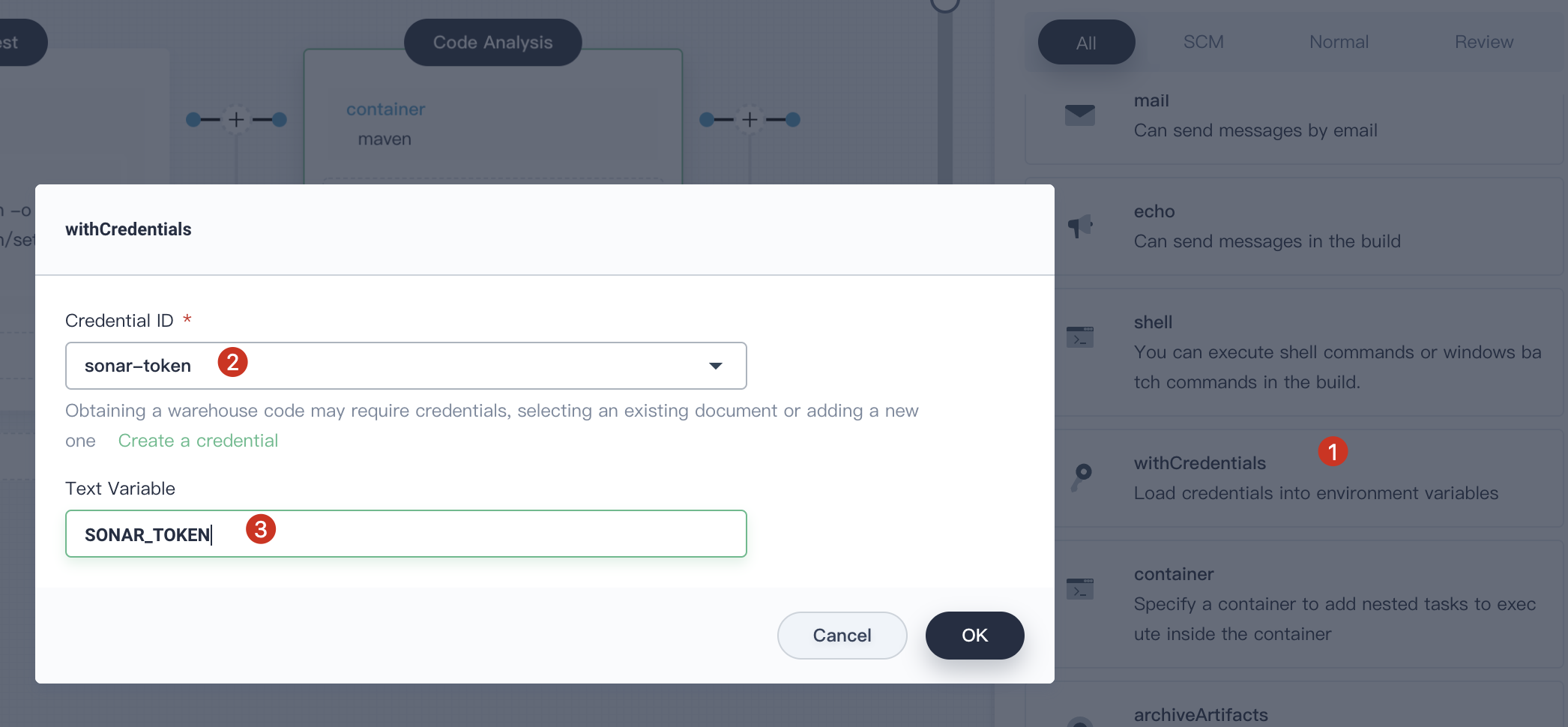

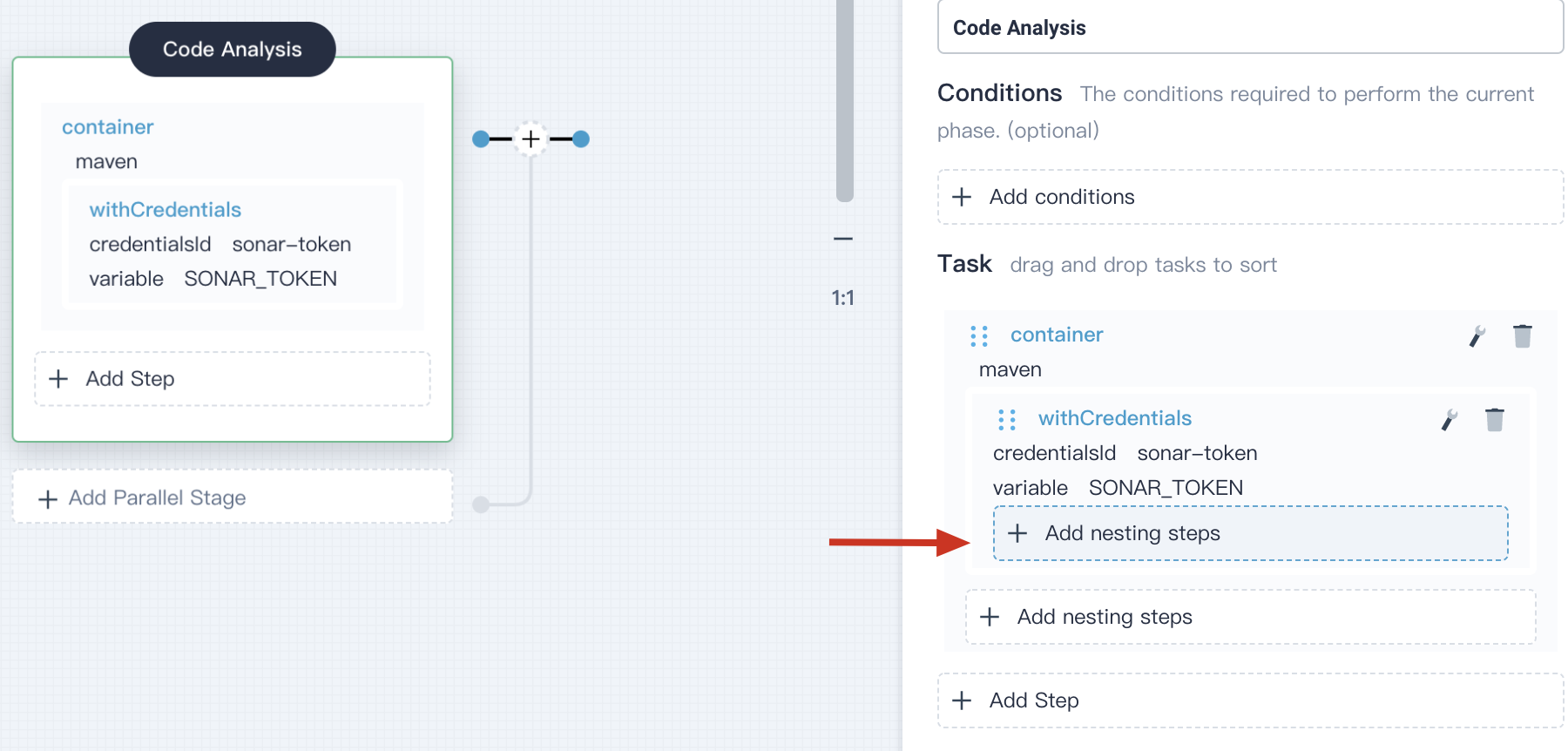

4.3.3. Click **Add nesting steps** in the `maven` container created above to add a nested step and select **withCredentials**. Select the previously created credential ID `sonar-token` and input `SONAR_TOKEN` in the text variable, then click **OK**.

|

||||

|

||||

|

||||

|

||||

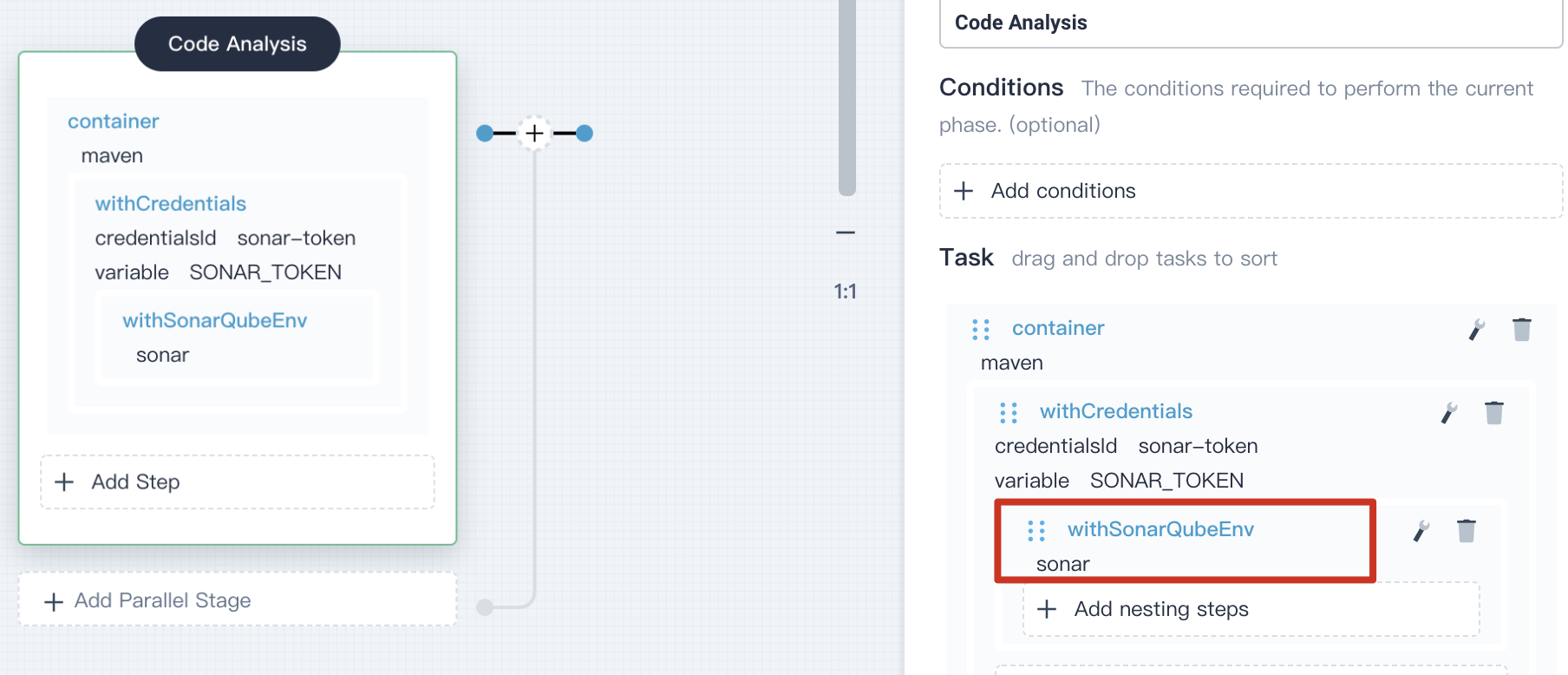

4.3.4. In the task **withCredentials** on the right, click **Add nesting steps** (the first one), then select **withSonarQubeEnv**, leave the default name `sonar`, and click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

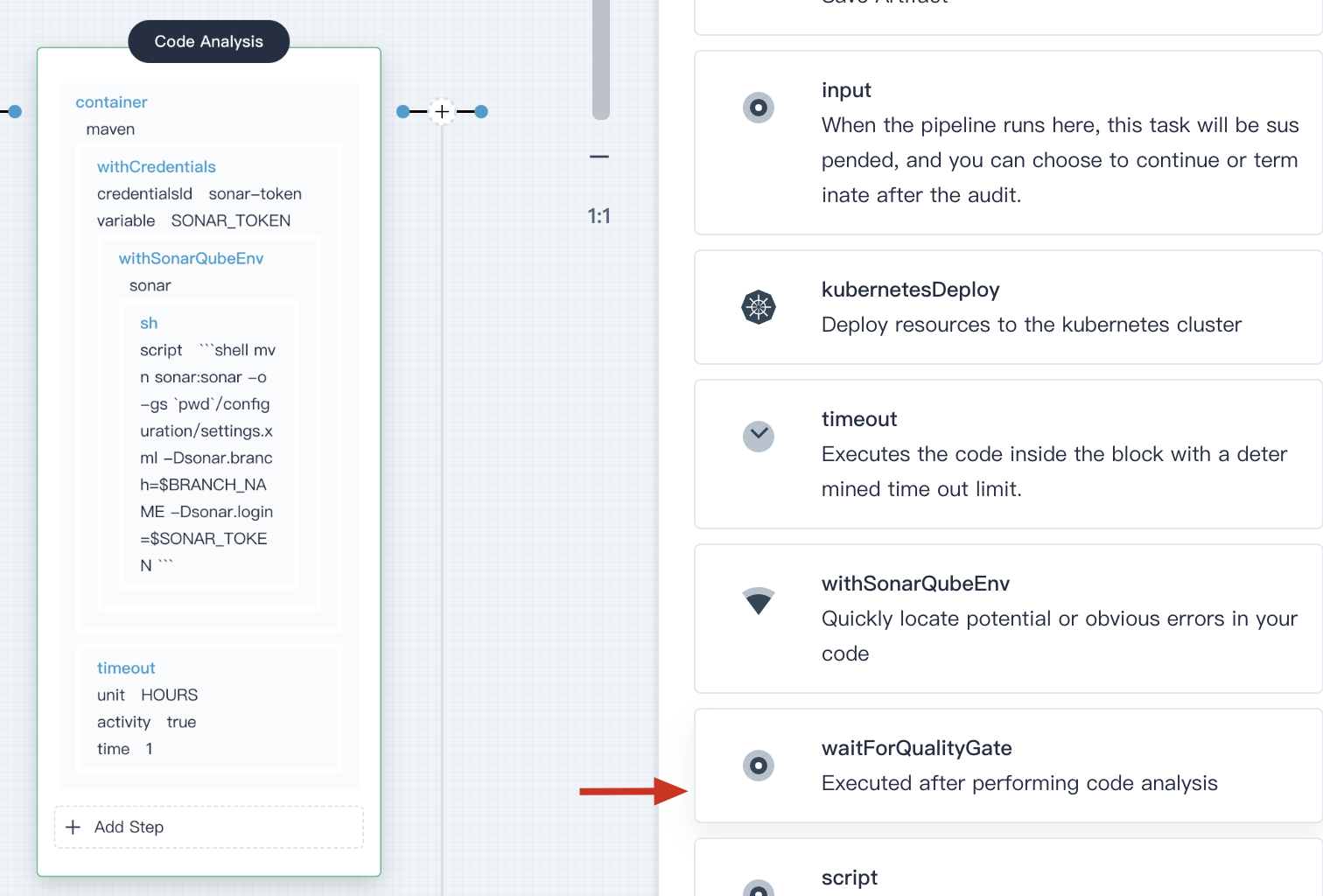

4.3.5. Click **Add nesting steps** (the first one) in the **withSonarQubeEnv**. Then select **shell** on the right, enter the following commands in the pop-up window for SonarQube branch and authentication, and click **OK** to save the information.

|

||||

|

||||

```shell
mvn sonar:sonar -o -gs `pwd`/configuration/settings.xml -Dsonar.branch=$BRANCH_NAME -Dsonar.login=$SONAR_TOKEN
```

|

||||

|

||||

|

||||

|

||||

4.3.6. Click **Add nesting steps** (the third one) on the right and select **timeout**. Enter `1` for the time and select `Hours` as the unit.

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

4.3.7. In the `timeout`, click **Add nesting steps** (the first one). Then select **waitforSonarQubeGate** and keep the default `Start the follow-up task after inspection` in the popup window.

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

#### Stage IV: Build and Push the Image

|

||||

|

||||

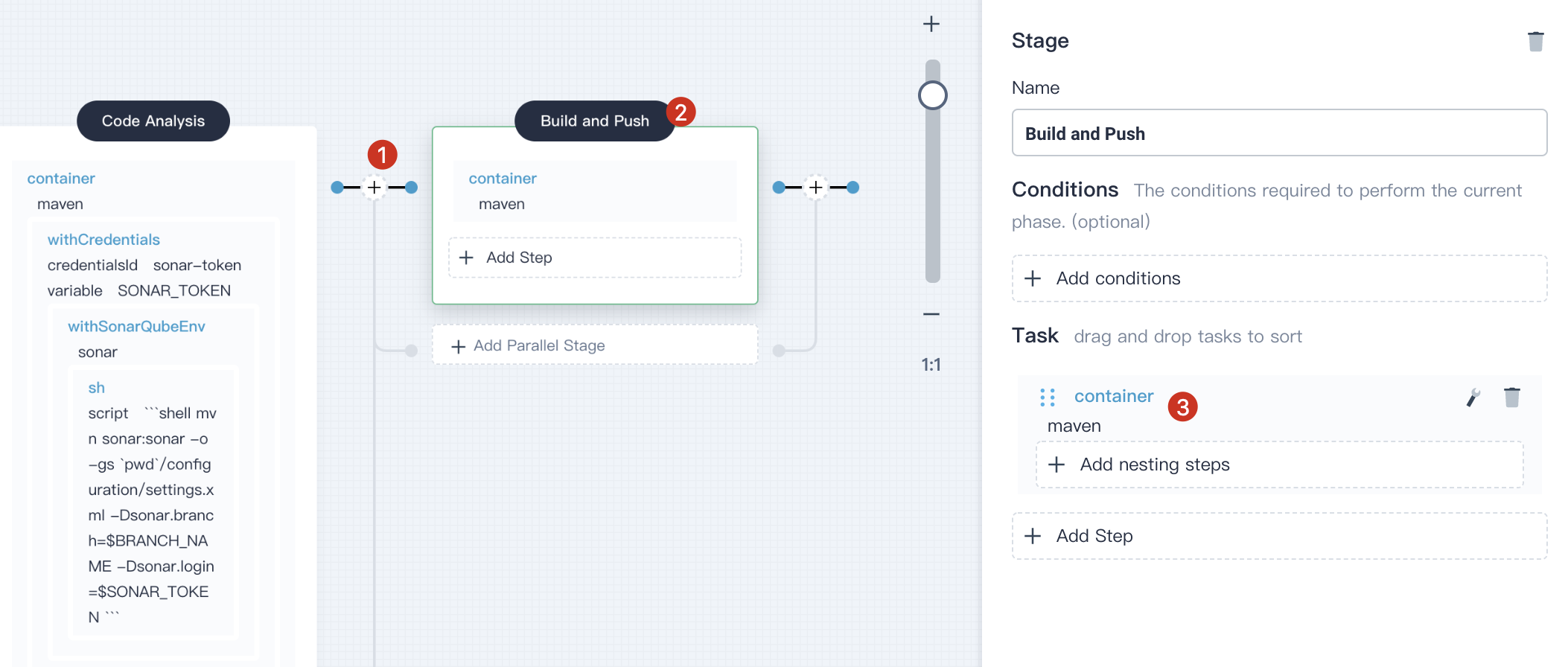

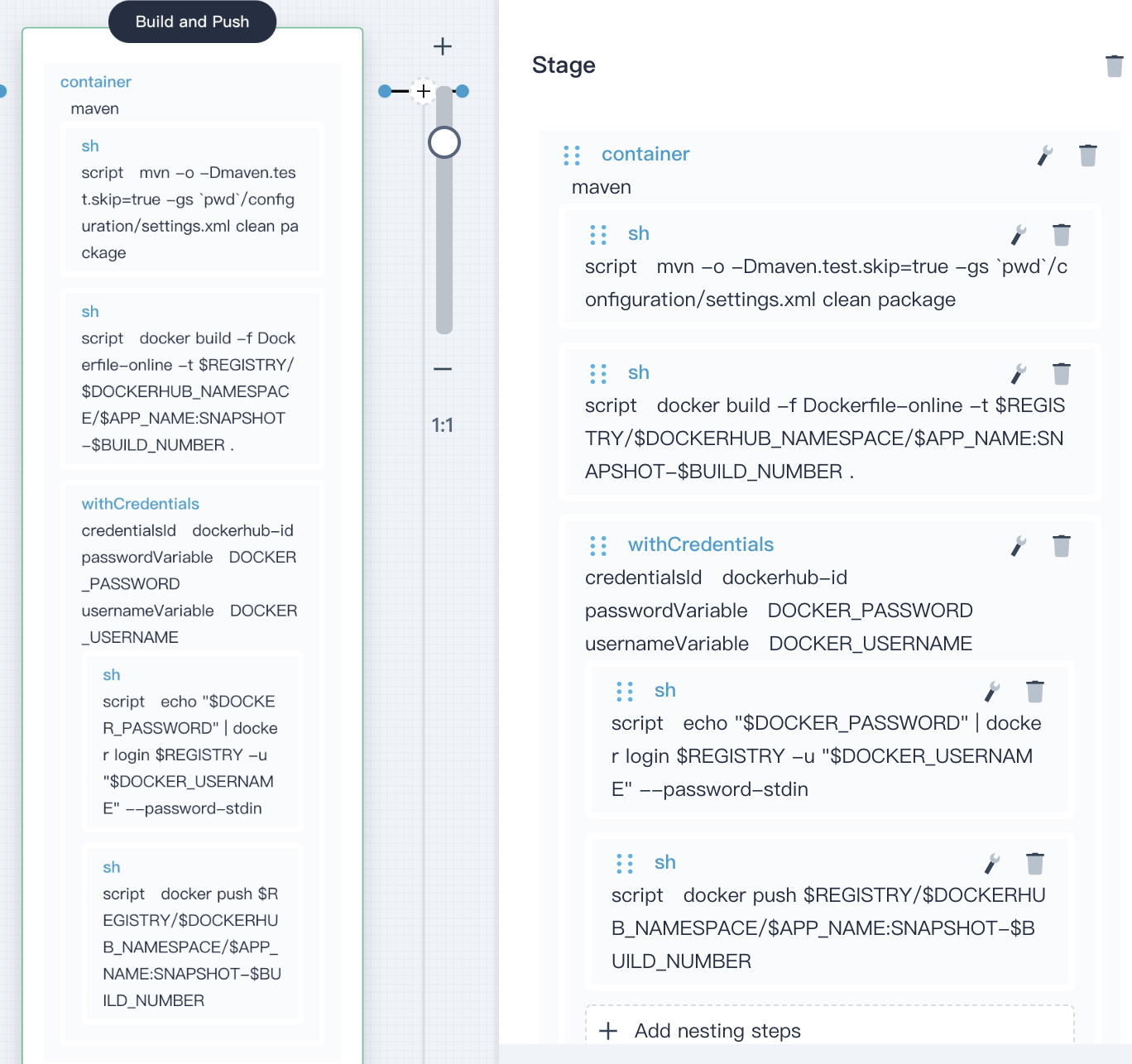

4.4.1. Similarly, click **+** on the right of the stage of **Code Analysis** to add another stage to build and push images to DockerHub, name it `Build and Push`.

|

||||

|

||||

4.4.2. Click **Add Step**, select **container**, name it `maven`, then click **OK**.

|

||||

|

||||

|

||||

|

||||

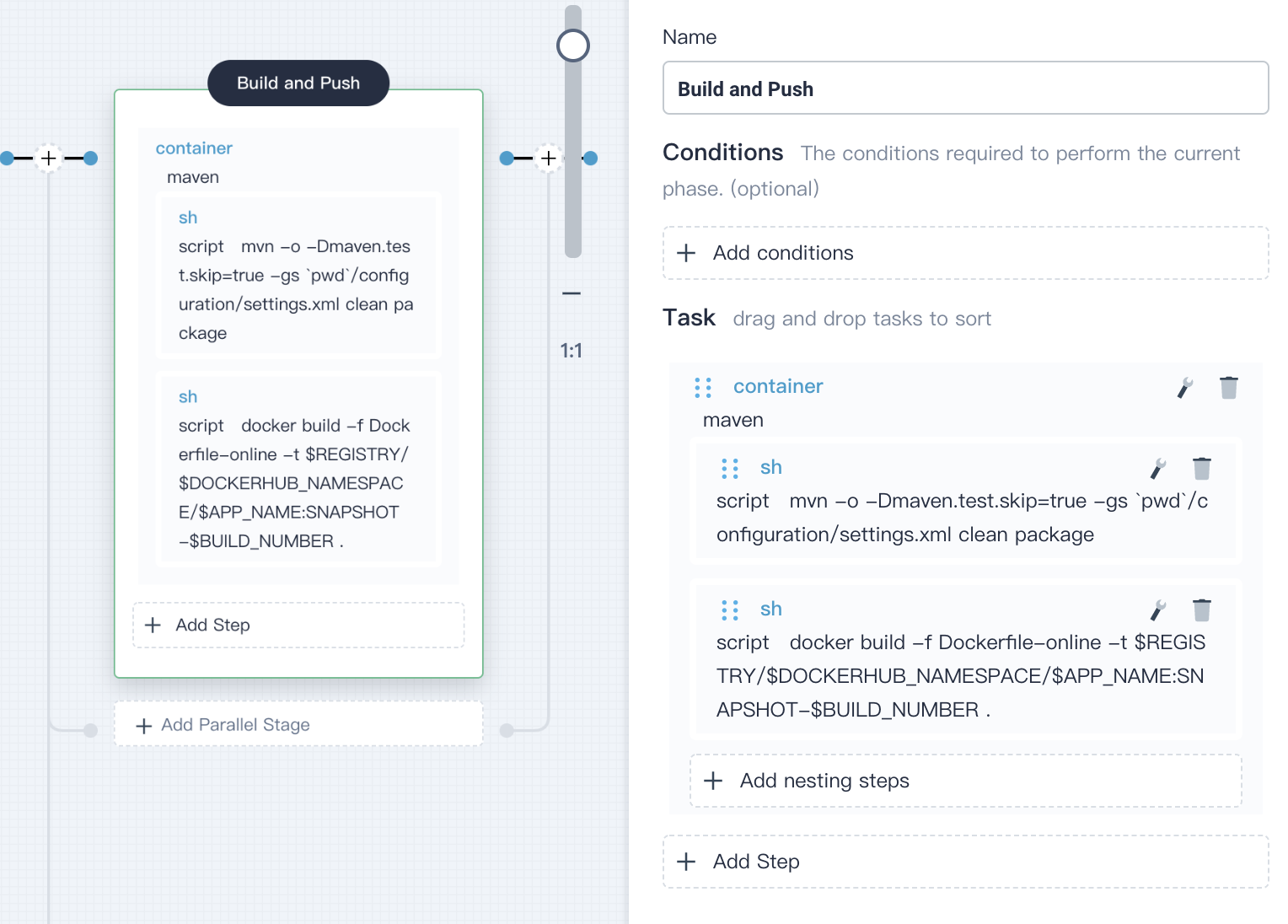

4.16. Click **Add nesting steps** in the container `maven`, select **shell** on the right, and enter the following command in the pop-up window:

|

||||

|

||||

```shell
mvn -o -Dmaven.test.skip=true -gs `pwd`/configuration/settings.xml clean package
```

|

||||

|

||||

4.4.3. Then continue to click **Add nesting steps** on the right, select **shell** in the pop-up window, enter the following command to build a Docker image based on the [Dockerfile](https://github.com/kubesphere/devops-java-sample/blob/master/Dockerfile-online):

|

||||

|

||||

> Please DO NOT miss the dot `.` at the end of the command.

|

||||

|

||||

```shell
docker build -f Dockerfile-online -t $REGISTRY/$DOCKERHUB_NAMESPACE/$APP_NAME:SNAPSHOT-$BUILD_NUMBER .
```

|

||||

|

||||

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

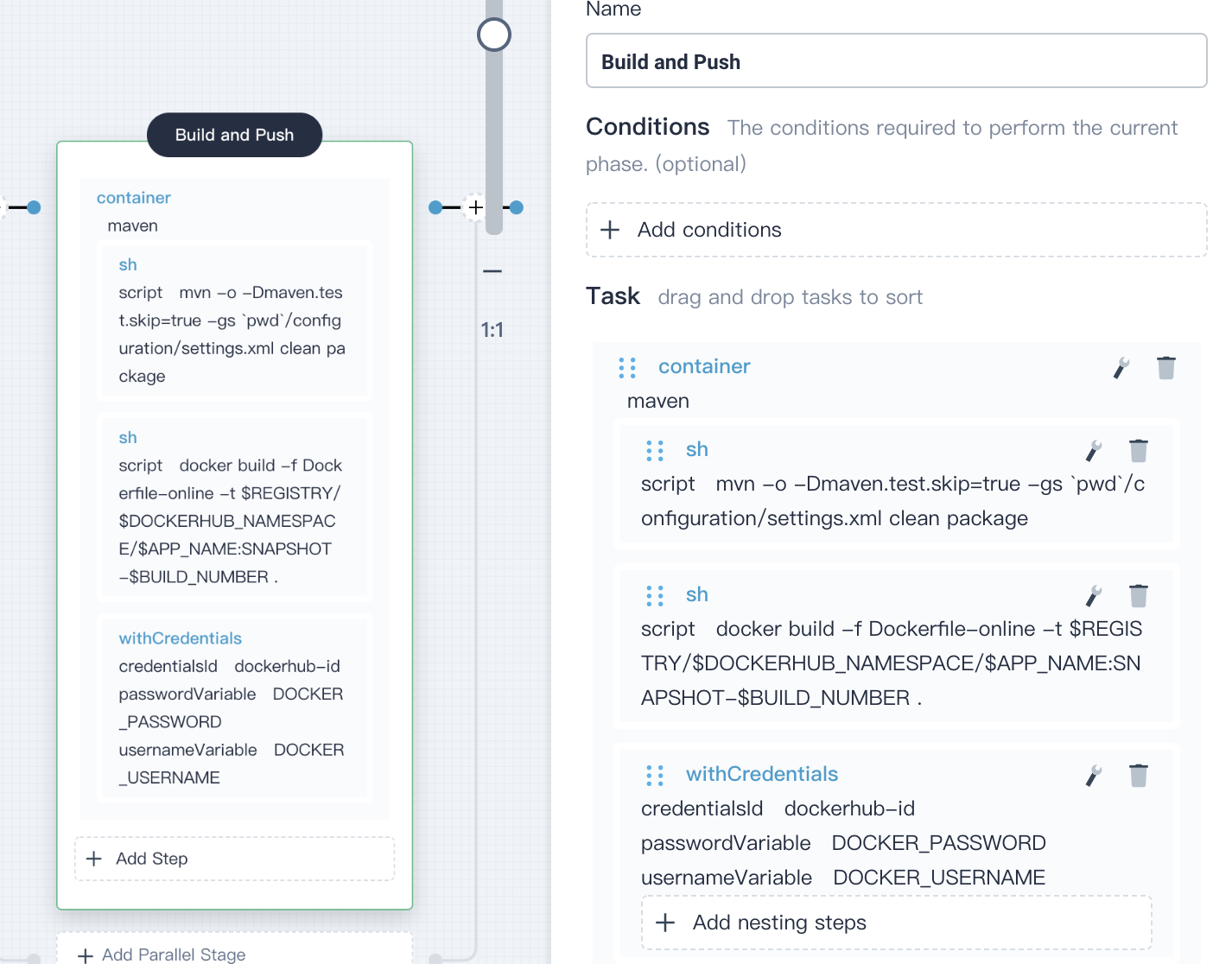

4.4.4. Similarly, click **Add nesting steps** again and select **withCredentials** on the right. Fill in the pop-up window as follows:

|

||||

|

||||

> Note: For security reasons, account information must not be exposed in plaintext in the script.

|

||||

|

||||

- Credential ID: Select the DockerHub credentials you created, e.g. `dockerhub-id`
- Password variable: Enter `DOCKER_PASSWORD`
- Username variable: Enter `DOCKER_USERNAME`

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

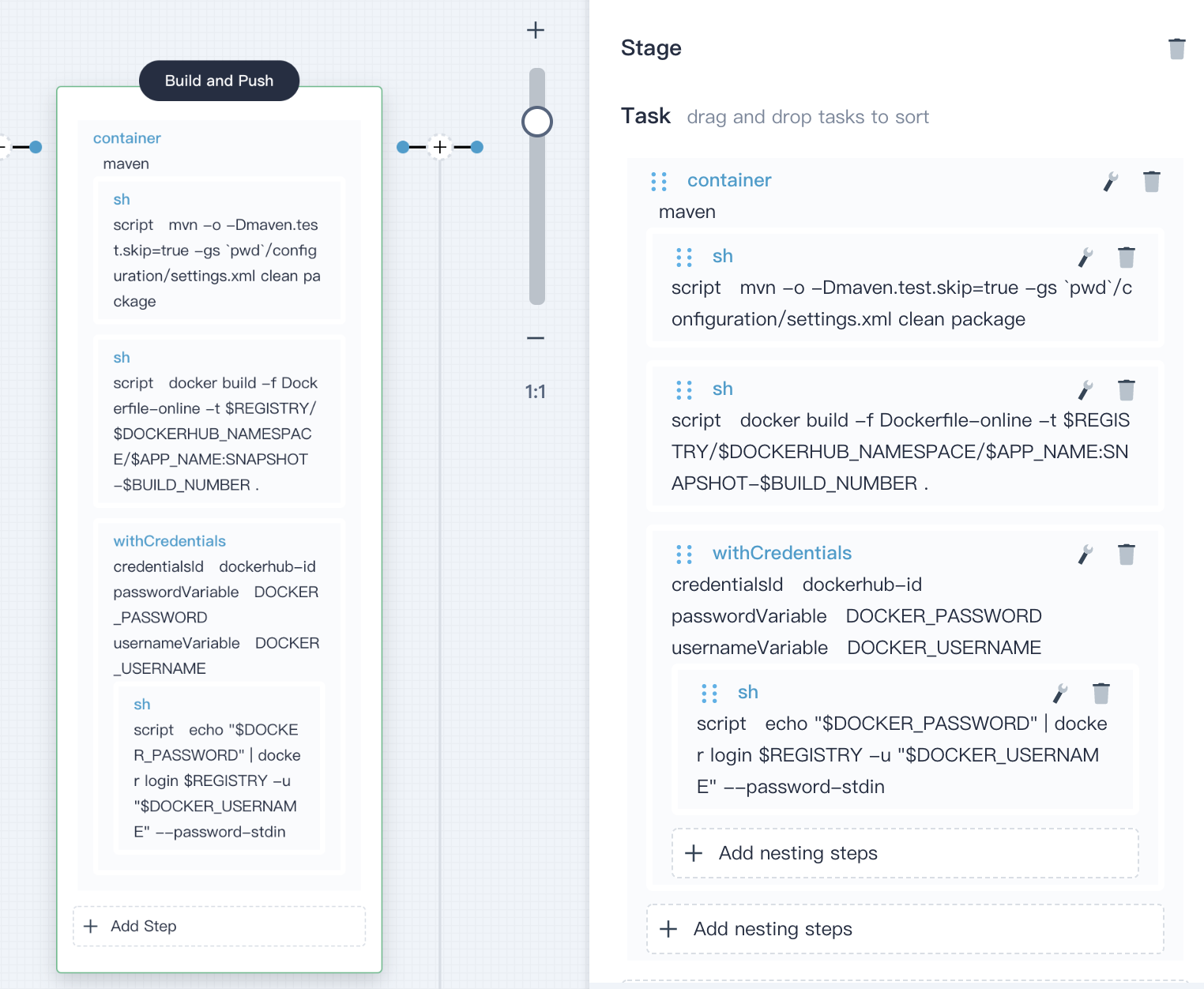

4.4.5. Click **Add nesting steps** (the first one) in the **withCredentials** step created above, select **shell**, and enter the following command in the pop-up window, which is used to log in to DockerHub:

|

||||

|

||||

```shell
echo "$DOCKER_PASSWORD" | docker login $REGISTRY -u "$DOCKER_USERNAME" --password-stdin
```

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

4.4.6. As above, click **Add nesting steps** in the **withCredentials** step again, choose **shell** and enter the following command to push the SNAPSHOT image to DockerHub:

|

||||

|

||||

```shell
docker push $REGISTRY/$DOCKERHUB_NAMESPACE/$APP_NAME:SNAPSHOT-$BUILD_NUMBER
```
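To make the tag format concrete, here is a minimal sketch of how the three string parameters and the build number combine into the image reference used by the `docker build` and `docker push` commands. The function name `image_ref` and the sample values (`my-namespace`, build number `1`) are hypothetical; in a real run, Jenkins supplies `$BUILD_NUMBER` and the pipeline parameters supply the rest.

```shell
# Compose the image reference from the pipeline parameters.
# $1 = REGISTRY, $2 = DOCKERHUB_NAMESPACE, $3 = APP_NAME, $4 = BUILD_NUMBER
image_ref() {
  printf '%s/%s/%s:SNAPSHOT-%s\n' "$1" "$2" "$3" "$4"
}

# Hypothetical sample values for illustration.
image_ref docker.io my-namespace devops-sample 1
```

Because the build and push steps must use exactly the same reference, computing it once and reusing the result avoids a mismatch between the two commands.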

|

||||

|

||||

|

||||

|

||||

#### Stage V: Generate Artifact

|

||||

|

||||

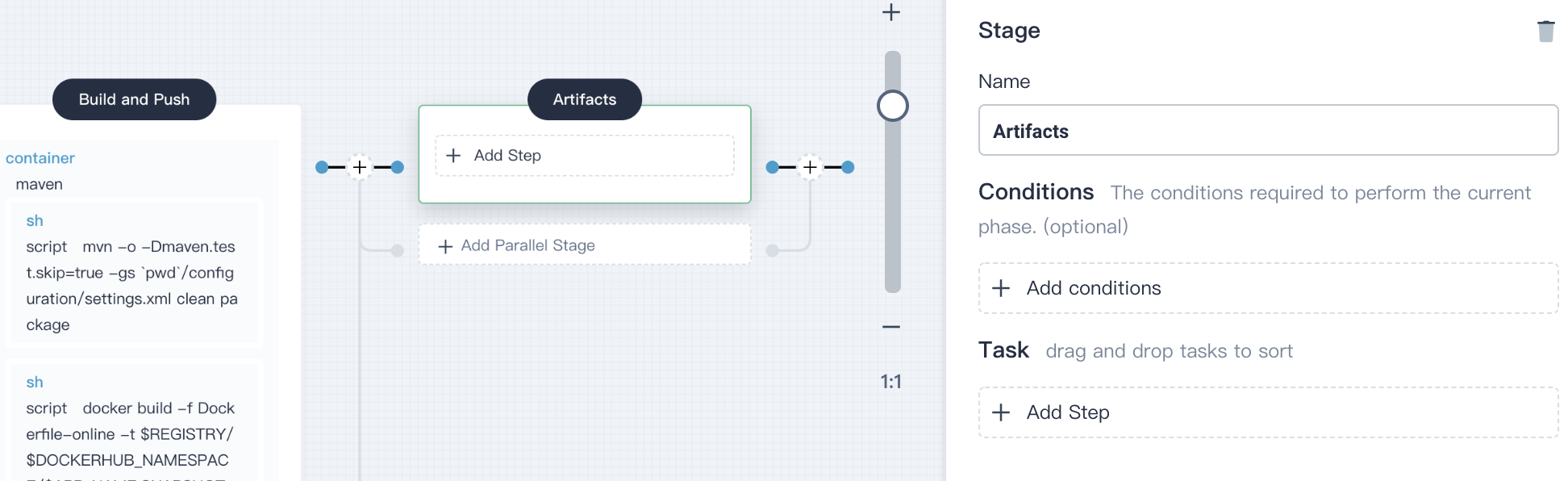

4.5.1. Click **+** on the right of the **Build and Push** stage to add another stage for saving artifacts, and name it `Artifacts`. This example uses the jar package.

|

||||

|

||||

|

||||

|

||||

4.5.2. Click **Add Step** in **Artifacts** stage, select **archiveArtifacts**. Enter `target/*.jar` in the pop-up window, which is used to set the archive path of artifact in Jenkins.

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

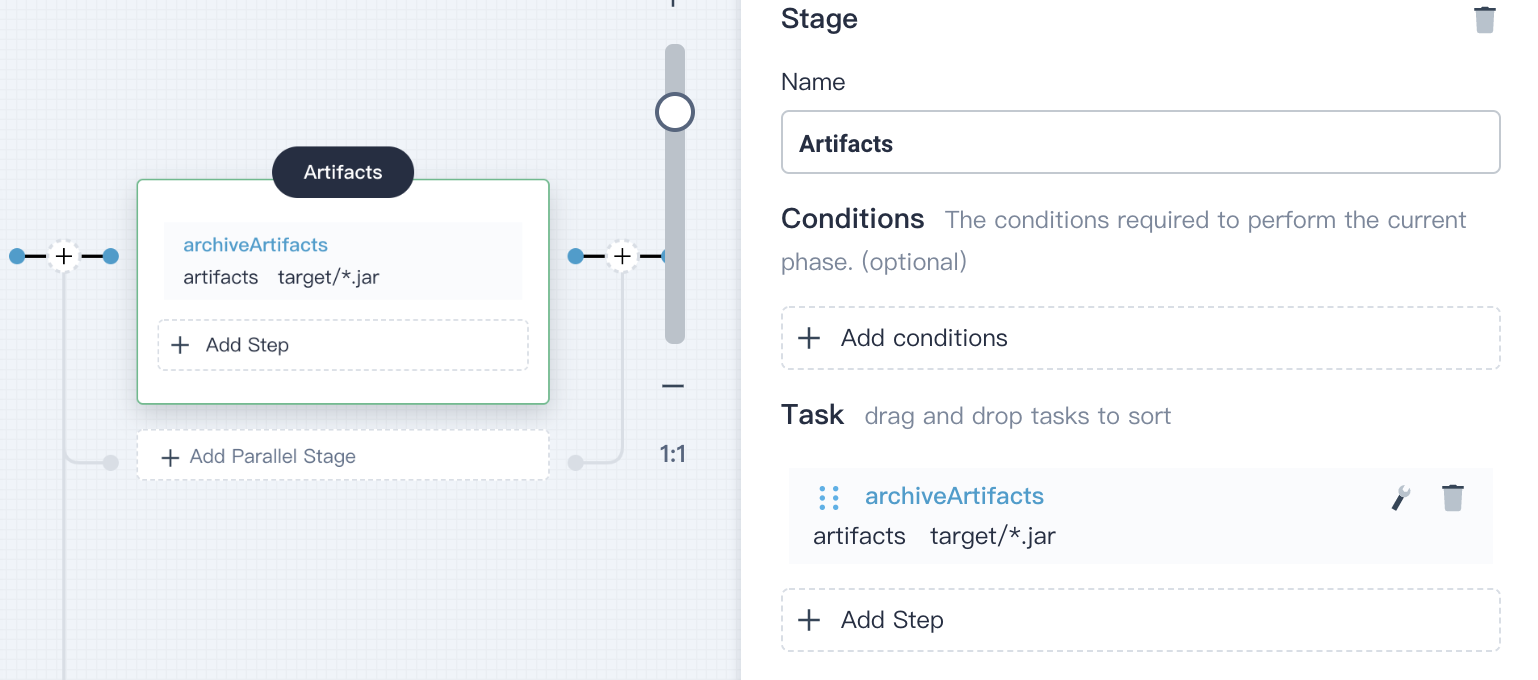

#### Stage VI: Deploy to Dev

|

||||

|

||||

4.6.1. Click **+** on the right of the stage **Artifacts** to add the last stage, name it `Deploy to Dev`. This stage is used to deploy resources to development environment, namely, the project of `kubesphere-sample-dev`.

|

||||

|

||||

4.6.2. Click **Add Step** in **Deploy to Dev**, select **input** and enter `@project-admin` in the pop-up window, assigning account `project-admin` to review this pipeline.

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

4.6.3. Click **Add Step** on the right and select **kubernetesDeploy**. Fill in the pop-up window as below and click **Confirm** to save the information:

|

||||

|

||||

- Kubeconfig: select `demo-kubeconfig`

|

||||

- Configuration file path: Enter `deploy/no-branch-dev/**`, which is the relative path of the Kubernetes [yaml](https://github.com/kubesphere/devops-java-sample/tree/master/deploy/no-branch-dev).

|

||||

|

||||

Click **OK** to save it.

|

||||

|

||||

|

||||

|

||||

4.6.4. Similarly, click **Add Step** to send an email notification to the user after the pipeline runs successfully, select **mail** and fill in the information.

|

||||

|

||||

> Note: Make sure you have [configured the email server](../../devops/jenkins-email) in `ks-jenkins`. If you have not done so yet, skip this step; you can still run this pipeline.

|

||||

|

||||

At this point, all six stages of the pipeline have been edited. Click **Confirm → Save**, and the Jenkinsfile will be generated as well.

|

||||

|

||||

|

||||

|

||||

### Step 5: Run Pipeline

|

||||

|

||||

5.1. A pipeline created by the graphical editing panel needs to be run manually. Click **Run**, and you can see the three string parameters defined in Step 3. Click **OK** to start this pipeline.

|

||||

|

||||

|

||||

|

||||

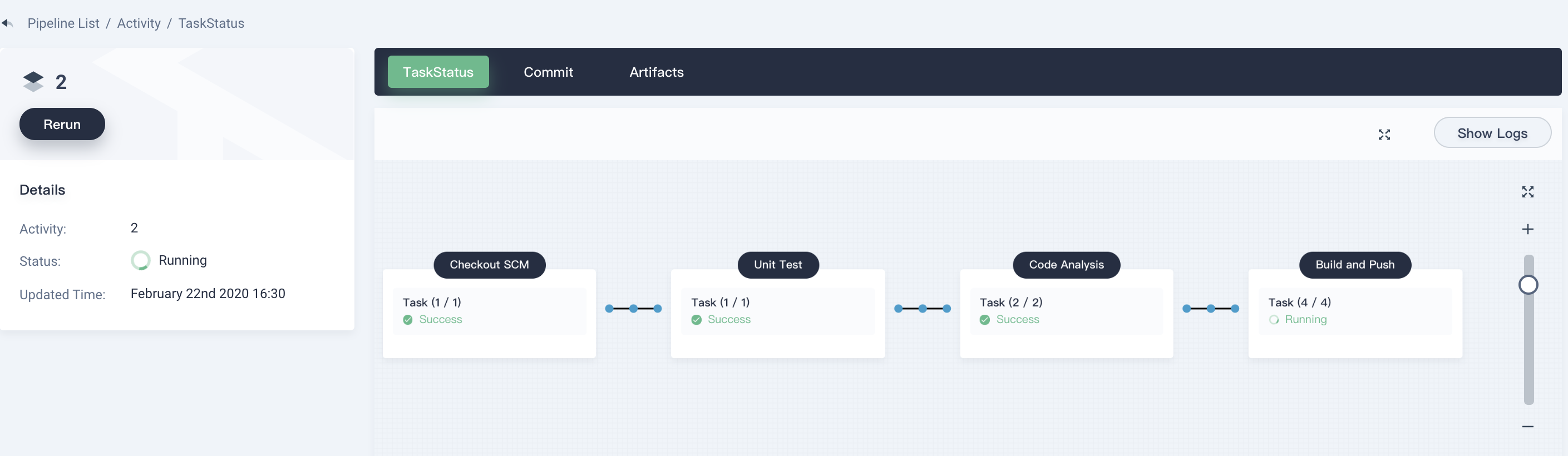

5.2. You can see the status of the pipeline in the **Activity** list. Click **Activity** to view the detailed running status.

|

||||

|

||||

5.3. Enter the first activity to view detailed page.

|

||||

|

||||

|

||||

|

||||

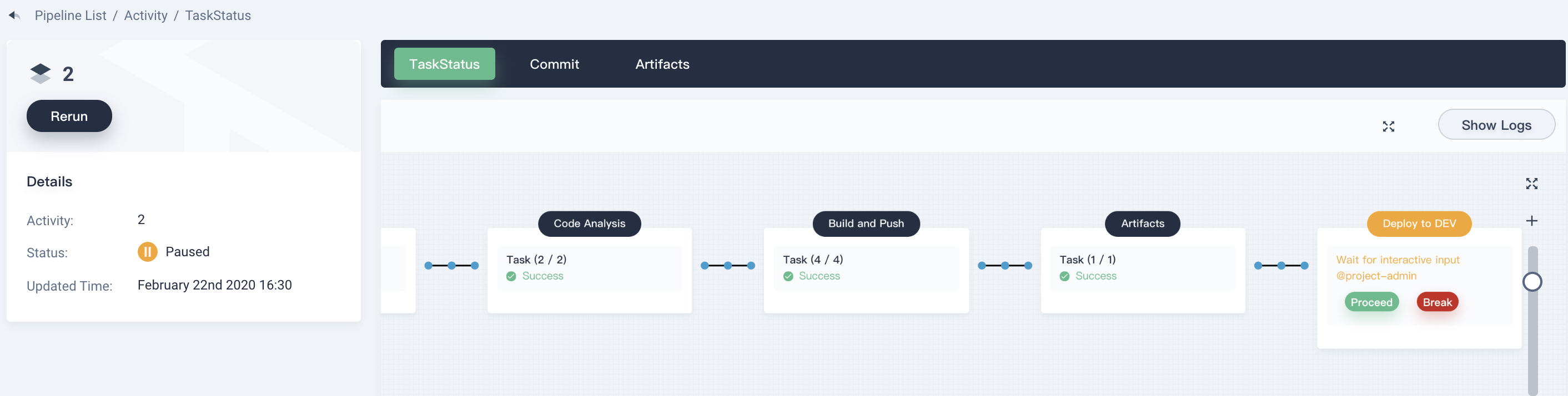

> Note: If the previous steps run correctly, you can see that the pipeline reaches the last stage in a few minutes. Since we set a review step and specified the account `project-admin` as the reviewer, we need to switch to `project-admin` to manually review and approve it.

|

||||

|

||||

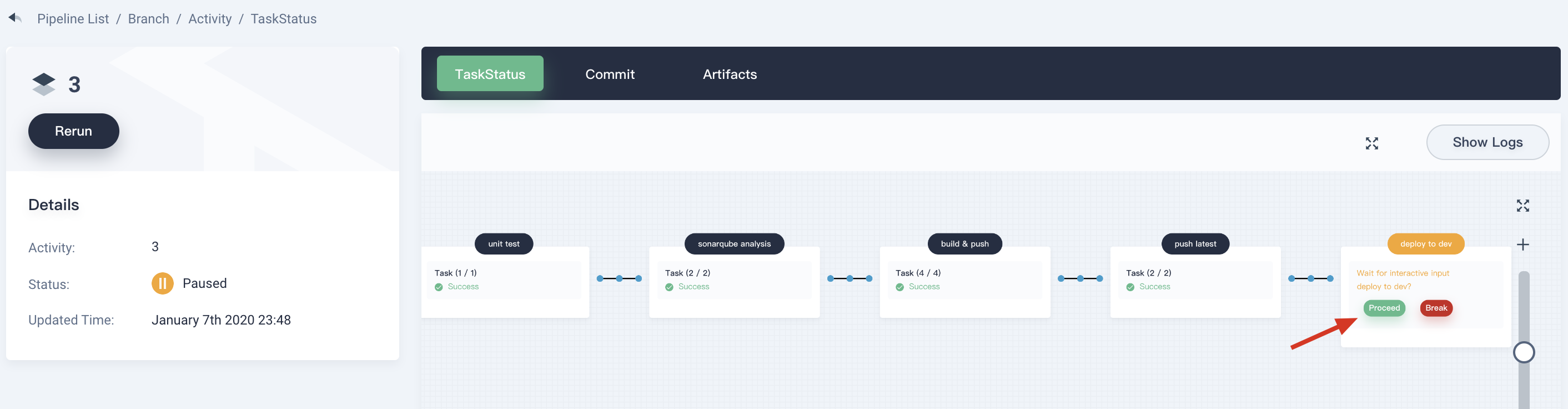

5.4. Log out, and log in with the account `project-admin`. Enter the pipeline `graphical-pipeline` of the DevOps project that we used above. Drill into **Activity** to view the running status. You can see the pipeline has run to the **Deploy to DEV** stage. Click **Proceed** to approve it.

|

||||

|

||||

|

||||

|

||||

### Step 6: View Pipeline

|

||||

|

||||

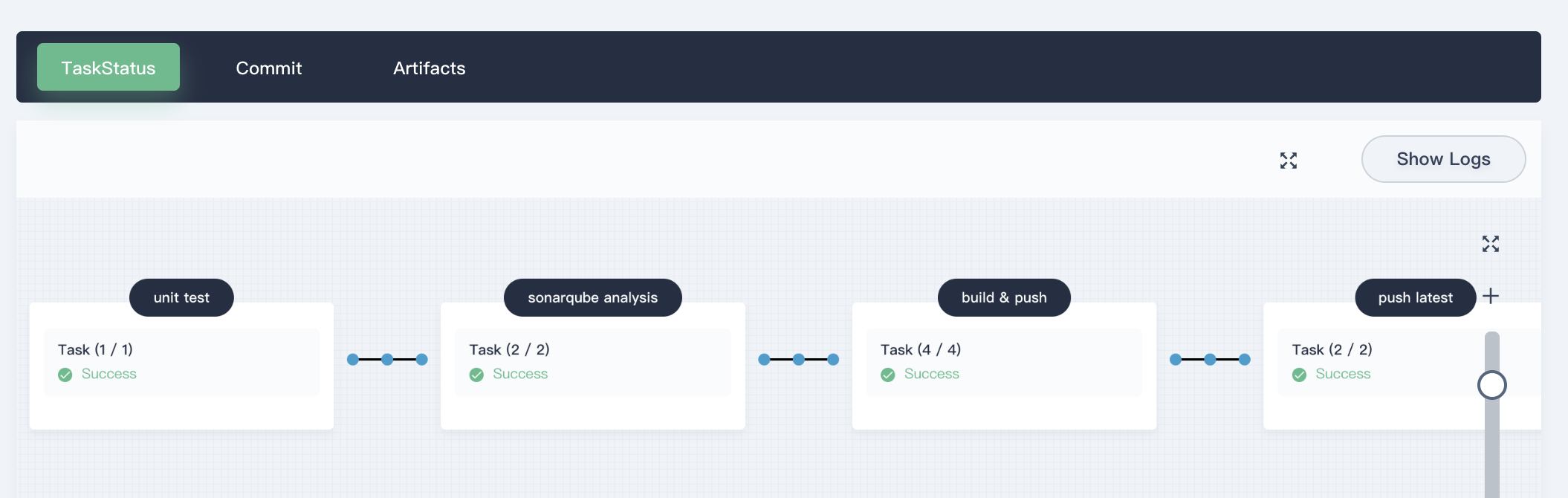

6.1. Log back in with the account `project-regular`. After a few minutes, the pipeline runs successfully. Click the **Activity** list in the pipeline to view the serial number of the current run. This page shows the running status of each stage in the pipeline.

|

||||

|

||||

|

||||

|

||||

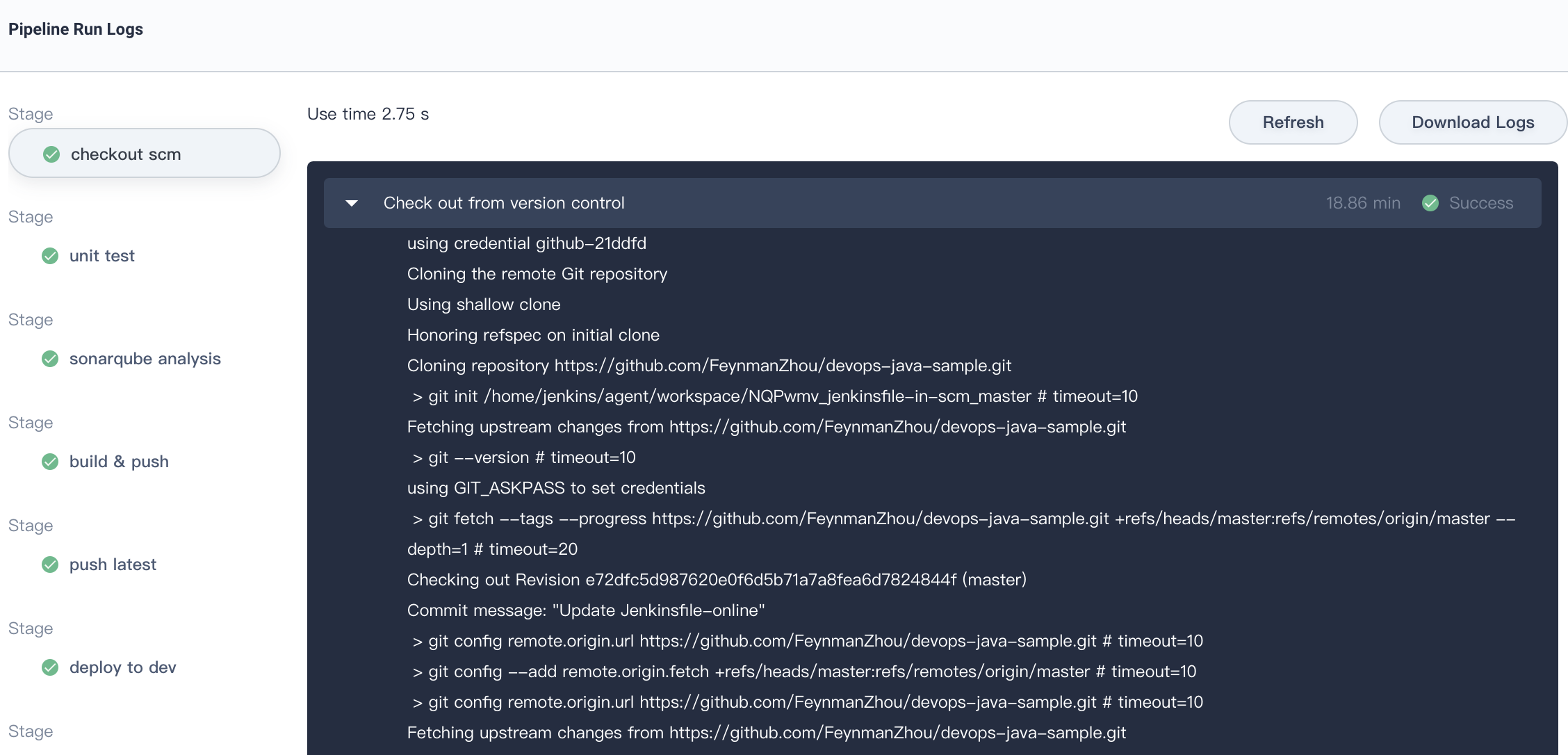

6.2. Click **Show Logs** on the top right of the current page to inspect the logs. The pop-up window shows the specific logs, running status and time of each stage. Click on a specific stage and expand its specific log on the right. You can debug any problems based on the logs which also can be downloaded to your local file for further analysis.

|

||||

|

||||

|

||||

|

||||

### Step 7: Check Code Quality

|

||||

|

||||

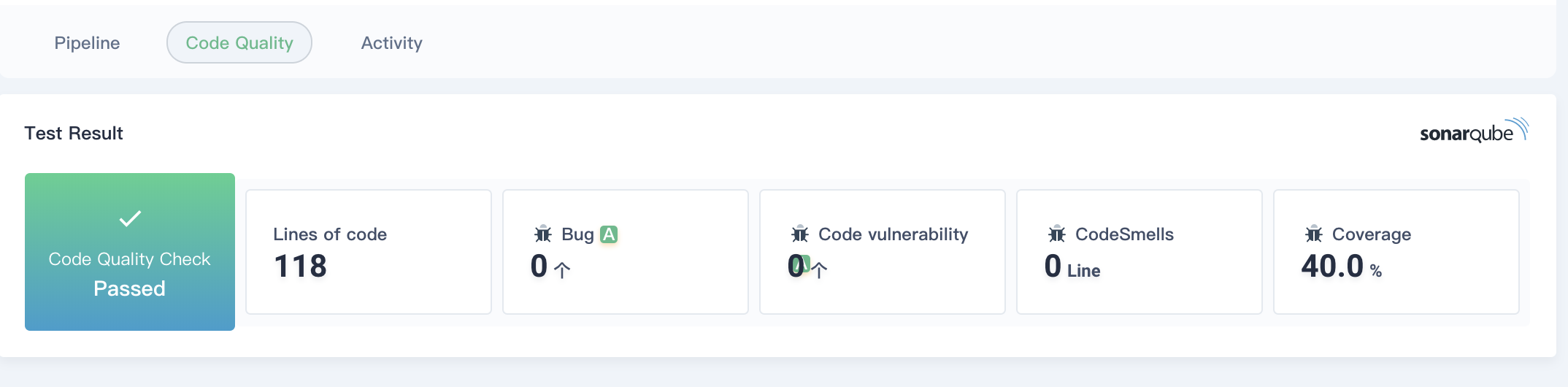

Go back to the **Activity** page and click **Code quality** to check the code quality analysis for the demo project, which is provided by SonarQube. The sample code is simple and does not show bugs or vulnerabilities. Click the SonarQube icon on the right to access SonarQube. Please refer to [Access SonarQube](./../../how-to-integrate/sonarqube/) to log in.

|

||||

|

||||

|

||||

|

||||

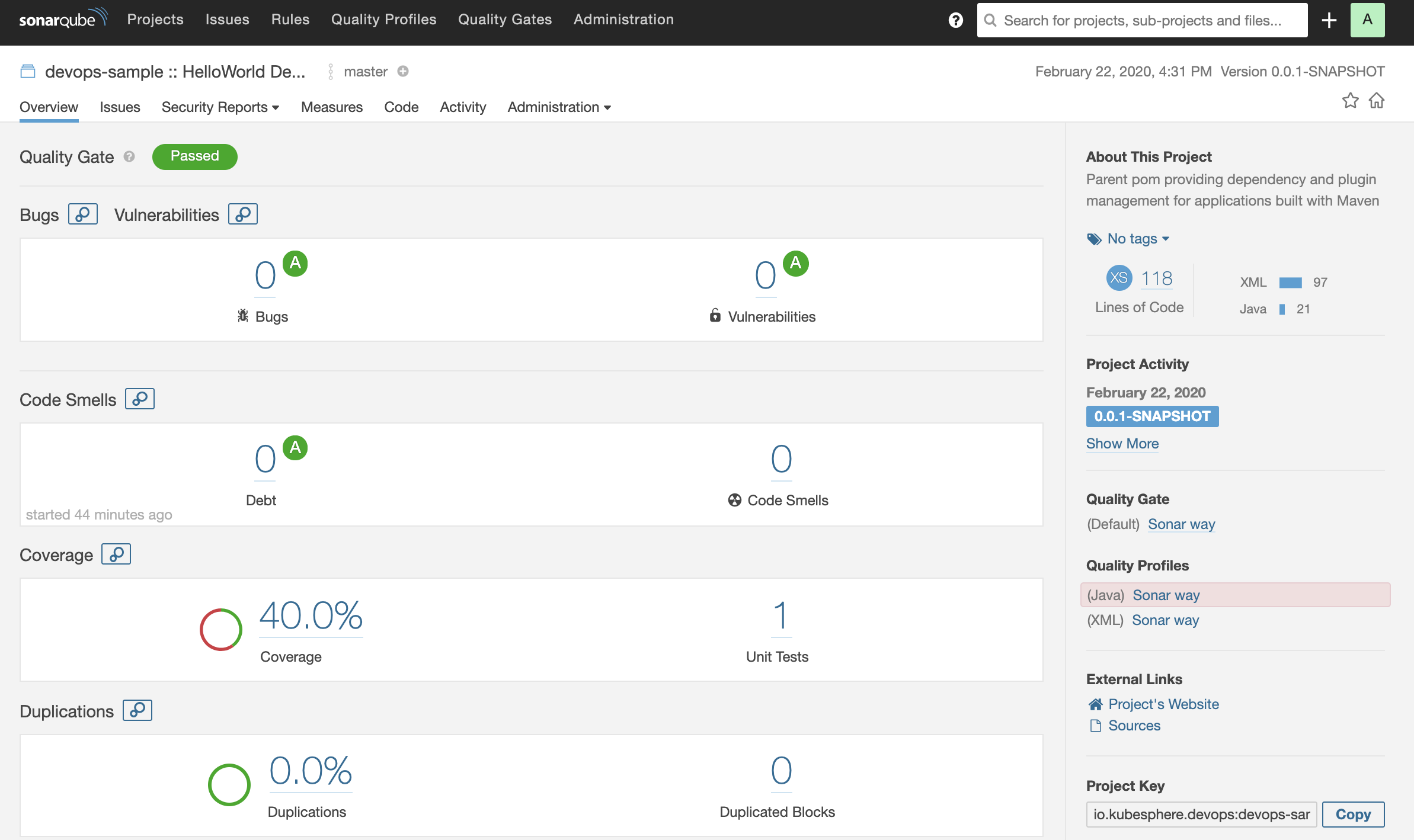

#### View the Quality Report at SonarQube

|

||||

|

||||

|

||||

|

||||

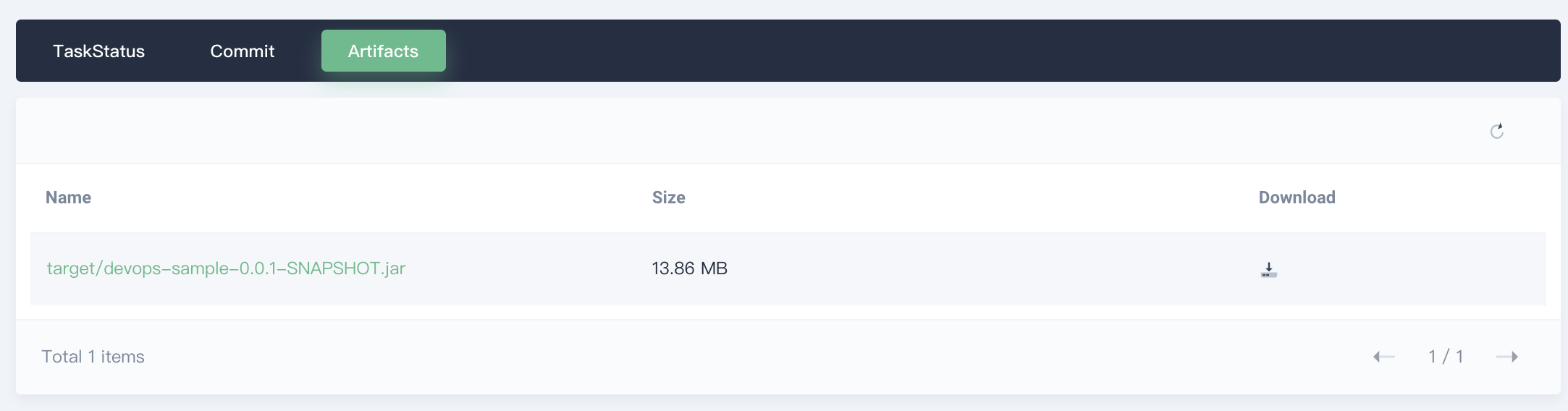

### Step 8: Download Artifacts

|

||||

|

||||

Enter the first activity and select **Artifacts**. You can find the artifact of jar package generated by the pipeline, and you can download it by clicking the icon.

|

||||

|

||||

|

||||

|

||||

### Step 9: Verify the Kubernetes Resource

|

||||

|

||||

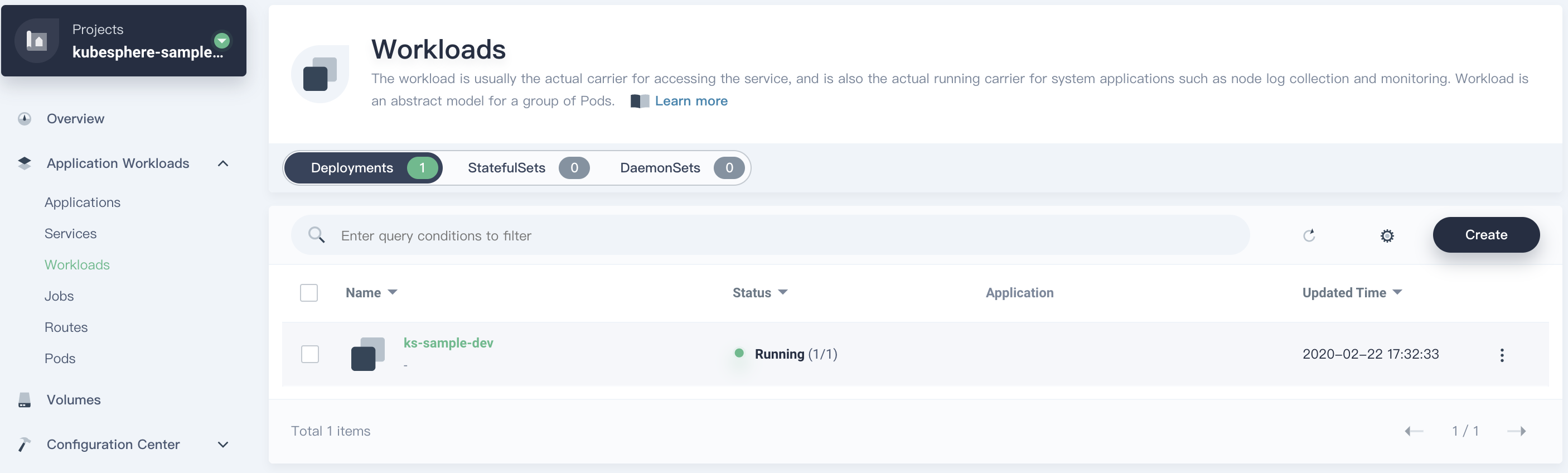

If every stage of the pipeline runs successfully, the Docker image will be automatically built and pushed to your DockerHub account. Finally, the project is deployed to the Kubernetes with a deployment and a service automatically.

|

||||

|

||||

9.1. Enter the project `kubesphere-sample-dev`, click **Application Workloads → Workloads** to see that `ks-sample-dev` has been created successfully.

|

||||

|

||||

| Environment | Address | Namespace | Deployment | Service |

|

||||

| --- | --- | --- | --- | --- |

|

||||

| Dev | `http://{$Virtual IP}:{$8080}` or `http://{$Intranet/Public IP}:{$30861}` | kubesphere-sample-dev | ks-sample-dev | ks-sample-dev |

|

||||

|

||||

#### View Deployment

|

||||

|

||||

|

||||

|

||||

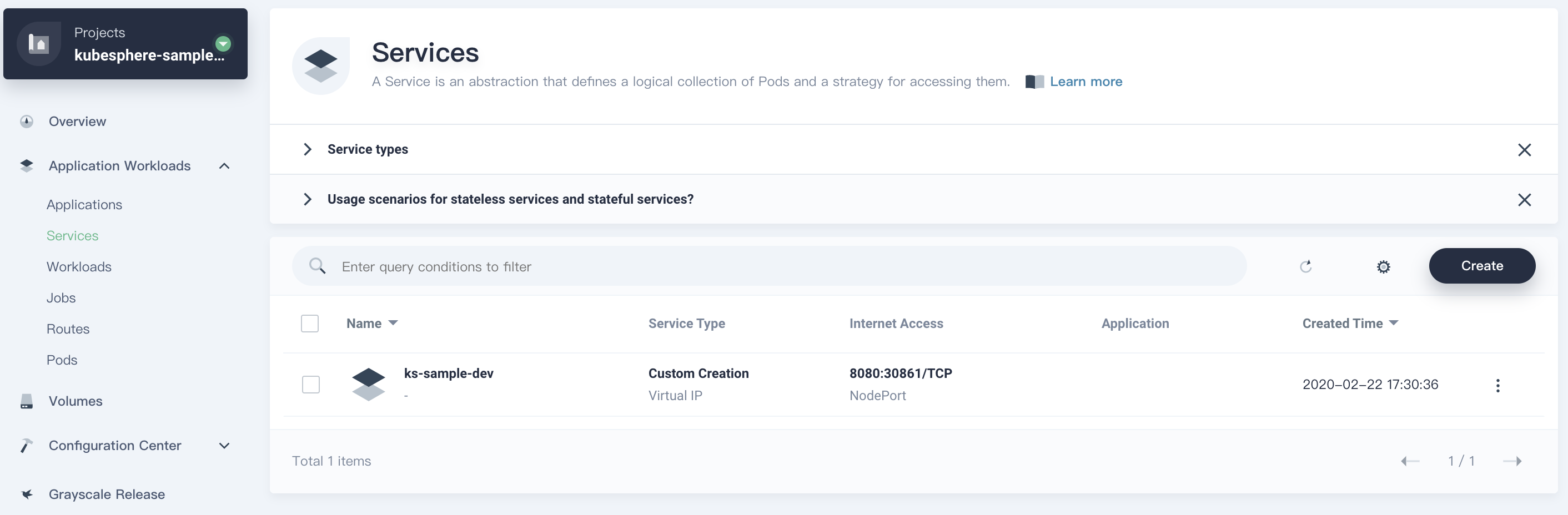

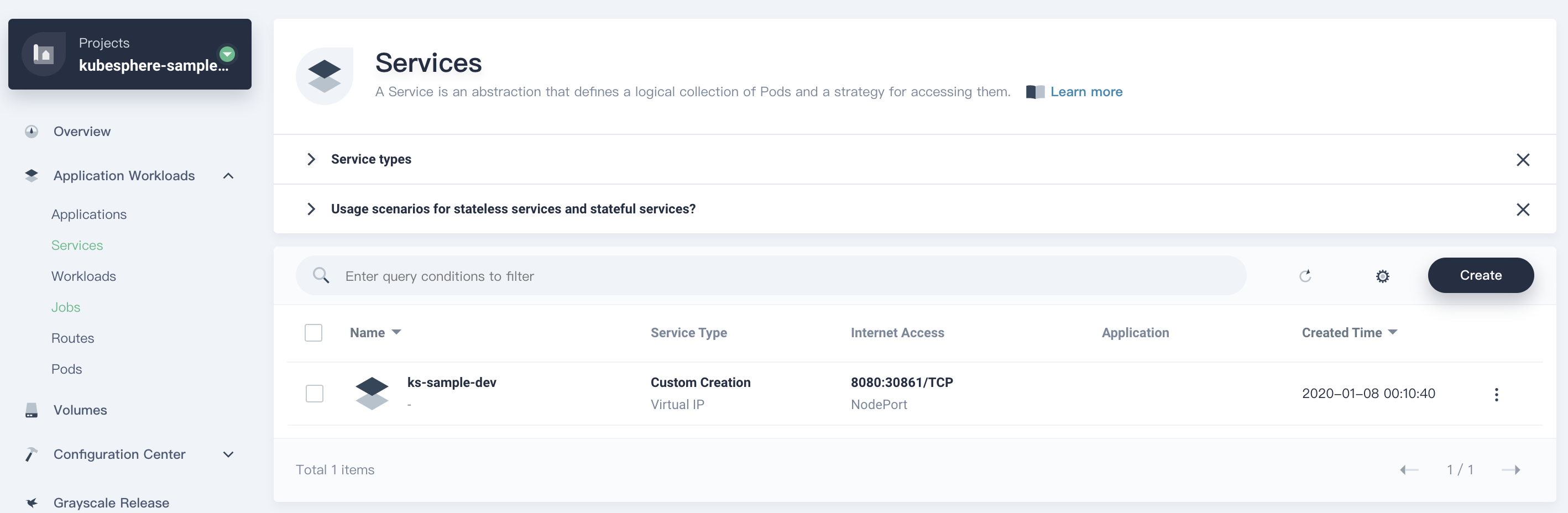

9.2. Navigate to the **Service** list, where you can find that the corresponding service has been created. The NodePort exposed by the service is `30861` in this example.

|

||||

|

||||

#### View Service

|

||||

|

||||

|

||||

|

||||

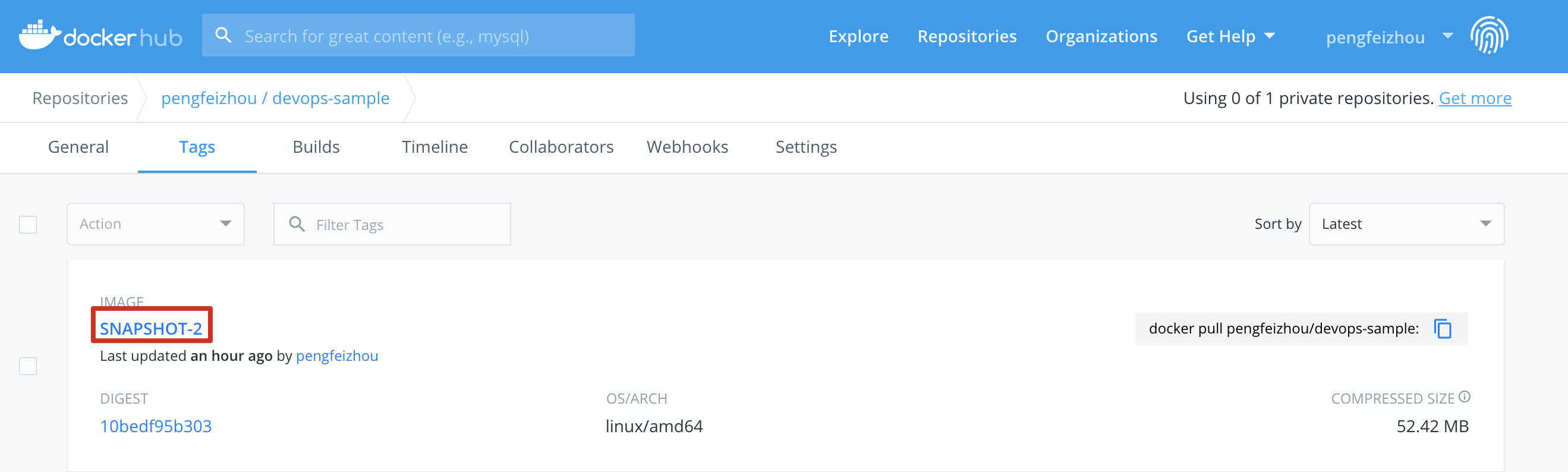

9.3. Now verify the images pushed to DockerHub. You can see that `devops-sample` is the value of **APP_NAME**, while the tag is the value of `SNAPSHOT-$BUILD_NUMBER`, and `$BUILD_NUMBER` is the serial number of the activity within pipeline. This tag has also been used in deployment `ks-sample-dev`.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

9.4. Since we set an email notification in the pipeline, we can verify the email in the mailbox.

|

||||

|

||||

|

||||

|

||||

### Step 10: Access the Sample Service

|

||||

|

||||

We can access the sample service using a command or in a browser. For example, you can use the web kubectl with the account `admin` as follows:

|

||||

|

||||

```bash
# curl {$Virtual IP}:{$Port} or curl {$Node IP}:{$NodePort}
curl 10.233.4.154:8080
Really appreciate your star, that's the power of our life.
```
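If the service is not reachable immediately after deployment, a small retry loop can help. The helper below is a generic sketch and not part of KubeSphere; the name `retry` and its argument order (attempts, delay in seconds, command) are assumptions made for this example.

```shell
# retry <attempts> <delay-seconds> <command...>
# Runs the command until it succeeds or the attempts are used up.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while ! "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}

# Example usage (not executed here), with the sample address from this guide:
#   retry 5 3 curl -fsS http://10.233.4.154:8080
```

The `-f` flag makes `curl` return a non-zero status on HTTP errors, so the loop keeps retrying until the service actually answers successfully.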

|

||||

|

||||

Congratulations! You are now familiar with using the graphical editing panel to visualize your CI/CD workflow.

|

||||

|

|

@ -0,0 +1,289 @@

|

|||

---

|

||||

title: "Create a pipeline using jenkinsfile"

|

||||

keywords: 'kubesphere, kubernetes, docker, spring boot, jenkins, devops, ci/cd, pipeline'

|

||||

description: "Create a pipeline using jenkinsfile"

|

||||

linkTitle: "Create a pipeline using jenkinsfile"

|

||||

weight: 200

|

||||

---

|

||||

|

||||

## Objective

|

||||

|

||||

In this tutorial, we will show you how to create a pipeline based on the Jenkinsfile from a GitHub repository. Using the pipeline, we will deploy a demo application to a development environment and a production environment respectively. Meanwhile, we will demo a branch that is used to test the dependency caching capability. In this demo, it takes a relatively long time to finish the pipeline for the first time. However, it runs much faster afterwards, which shows that the cache works well, since this branch pulls lots of dependencies from the internet initially.

|

||||

|

||||

> Note:
> KubeSphere supports two kinds of pipelines: Jenkinsfile in SCM, which is introduced in this document, and [Create a Pipeline - using Graphical Editing Panel](../create-a-pipeline-using-graphical-editing-panel). Jenkinsfile in SCM requires a Jenkinsfile inside the Source Control Management (SCM) repository. In other words, the Jenkinsfile is part of the SCM. The KubeSphere DevOps system automatically builds a CI/CD pipeline from the existing Jenkinsfile in the code repository. You can define workflow elements such as Stage, Step and Job in the pipeline.

## Prerequisites

- You need a DockerHub account and a GitHub account.
- You need to create a workspace, a DevOps project, and a **project-regular** user account, and this account needs to be invited into the DevOps project.
- Set a dedicated CI node for building the pipeline. Please refer to [Set CI Node for Dependency Cache](../../how-to-use/set-ci-node/).
- You need to install and configure SonarQube. Please refer to [How to integrate SonarQube in Pipeline](../../../how-to-integrate/sonarqube/). Alternatively, you can skip this part, in which case there is no **SonarQube Analysis** stage below.

## Pipeline Overview

The pipeline demonstrated in this tutorial has eight stages, as listed below.

> Note:
>
> - **Stage 1. Checkout SCM**: Check out the source code from the GitHub repository.
> - **Stage 2. Unit test**: The pipeline continues to the next stage only after the unit test passes.
> - **Stage 3. SonarQube analysis**: Run the SonarQube code quality analysis.
> - **Stage 4. Build & push snapshot image**: Build the image based on the branches selected in the behavioral strategy. Push the image with the tag `SNAPSHOT-$BRANCH_NAME-$BUILD_NUMBER` to DockerHub, where `$BUILD_NUMBER` is the serial number of the run in the pipeline's activity list.
> - **Stage 5. Push the latest image**: Tag the sonarqube branch as latest and push it to DockerHub.
> - **Stage 6. Deploy to dev**: Deploy the sonarqube branch to the Dev environment. This stage requires review.
> - **Stage 7. Push with tag**: Generate a tag and release it to GitHub. Then push the tag to DockerHub.
> - **Stage 8. Deploy to production**: Deploy the released tag to the Production environment.

## Hands-on Lab

### Step 1: Create Credentials

> Note: If your account or password contains special characters, please encode it using https://www.urlencoder.org/, then paste the encoded result into the credentials below.
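
If you prefer not to paste a password into a website, the same percent-encoding can be done locally. The following is a minimal bash sketch, not part of KubeSphere: the function name `urlencode` and the sample value are hypothetical, and the sketch only targets ASCII input.

```bash
#!/usr/bin/env bash
# Sketch: percent-encode a string locally (ASCII input assumed).
# Unreserved characters (letters, digits, "-", "_", ".", "~") pass through;
# every other character becomes %XX.
urlencode() {
  local LC_ALL=C            # byte-wise iteration and ASCII ranges
  local input="$1" out="" ch i
  for (( i = 0; i < ${#input}; i++ )); do
    ch="${input:i:1}"
    case "$ch" in
      [A-Za-z0-9.~_-]) out+="$ch" ;;
      *) out+=$(printf '%%%02X' "'$ch") ;;   # "'c" yields the character code
    esac
  done
  printf '%s\n' "$out"
}

# Hypothetical password containing "@" and "$"
urlencode 'p@ss$word'
```

Paste the printed value into the credential instead of the raw password.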


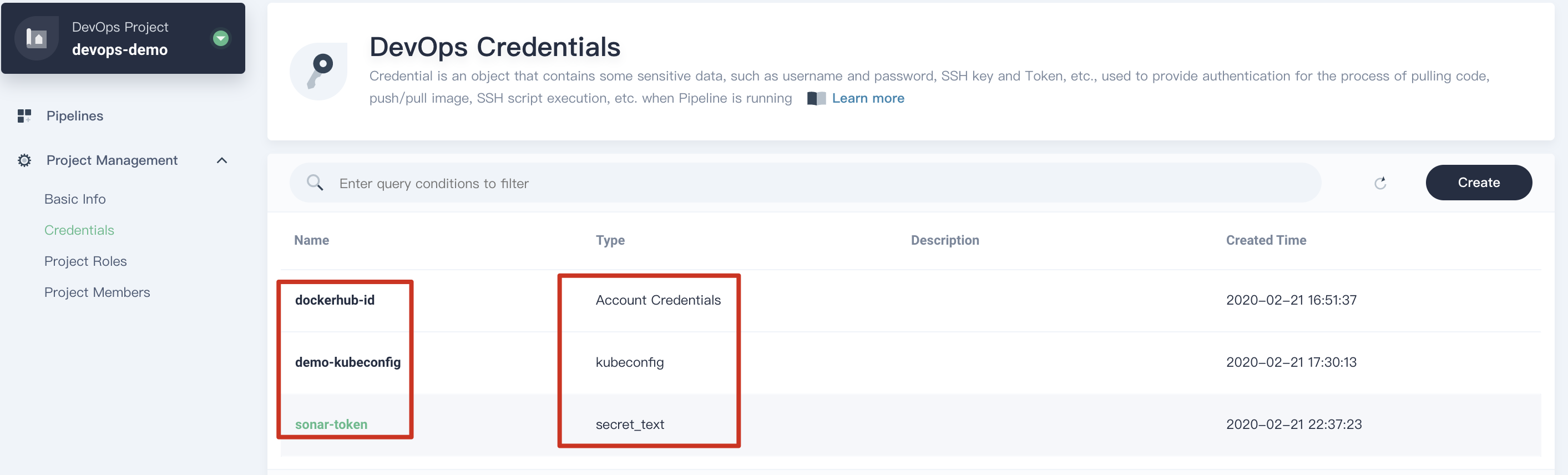

1.1. Log in to KubeSphere with the account `project-regular`, enter the created DevOps project and create the following three credentials under **Project Management → Credentials**:

| Credential ID | Type | Where to use |
| --- | --- | --- |
| dockerhub-id | Account Credentials | DockerHub |
| github-id | Account Credentials | GitHub |
| demo-kubeconfig | kubeconfig | Kubernetes |

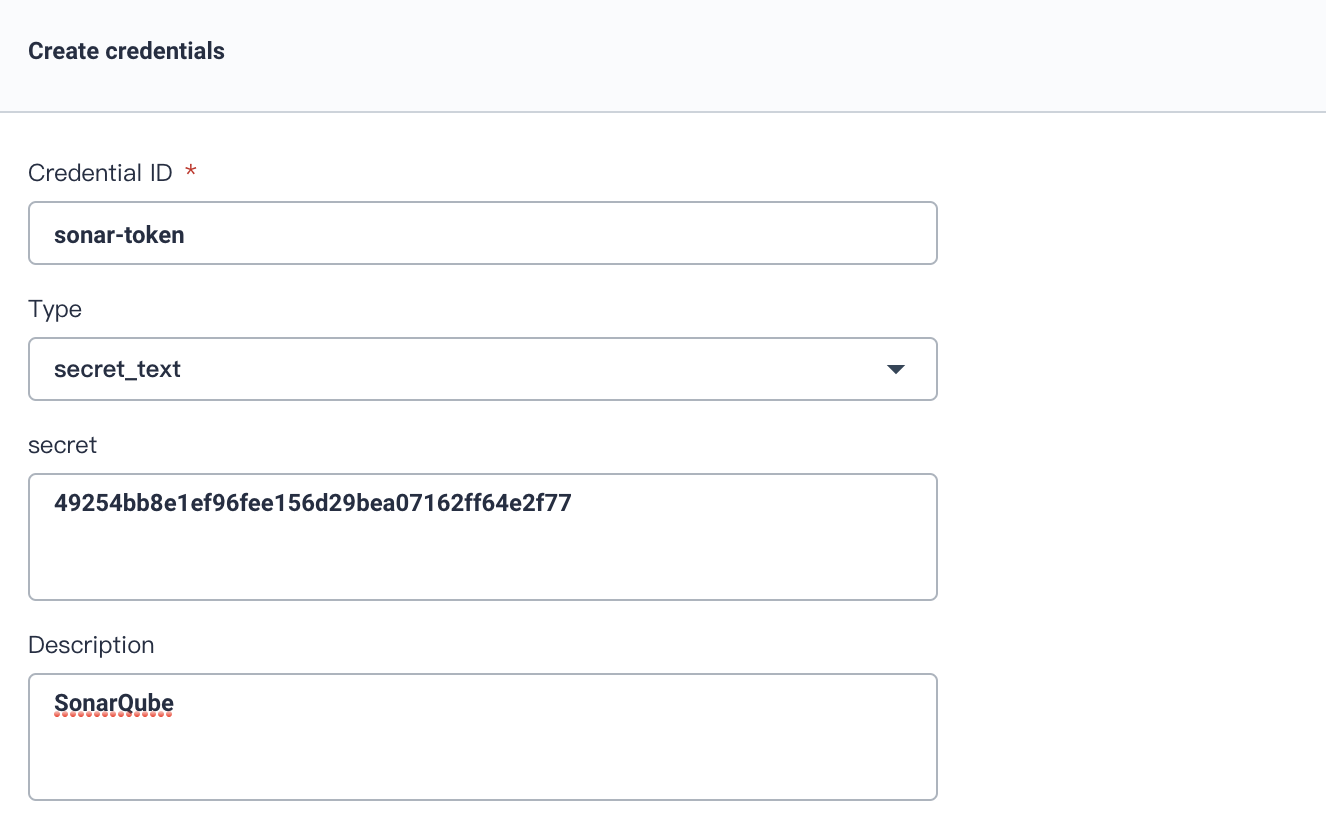

1.2. We need to create an additional credential `sonar-token` for the SonarQube token, which is used in stage 3 (SonarQube analysis) mentioned above. Refer to [Access SonarQube Console and Create Token](../../how-to-integrate/sonarqube/) to copy the token and paste it here. Then click **OK**.

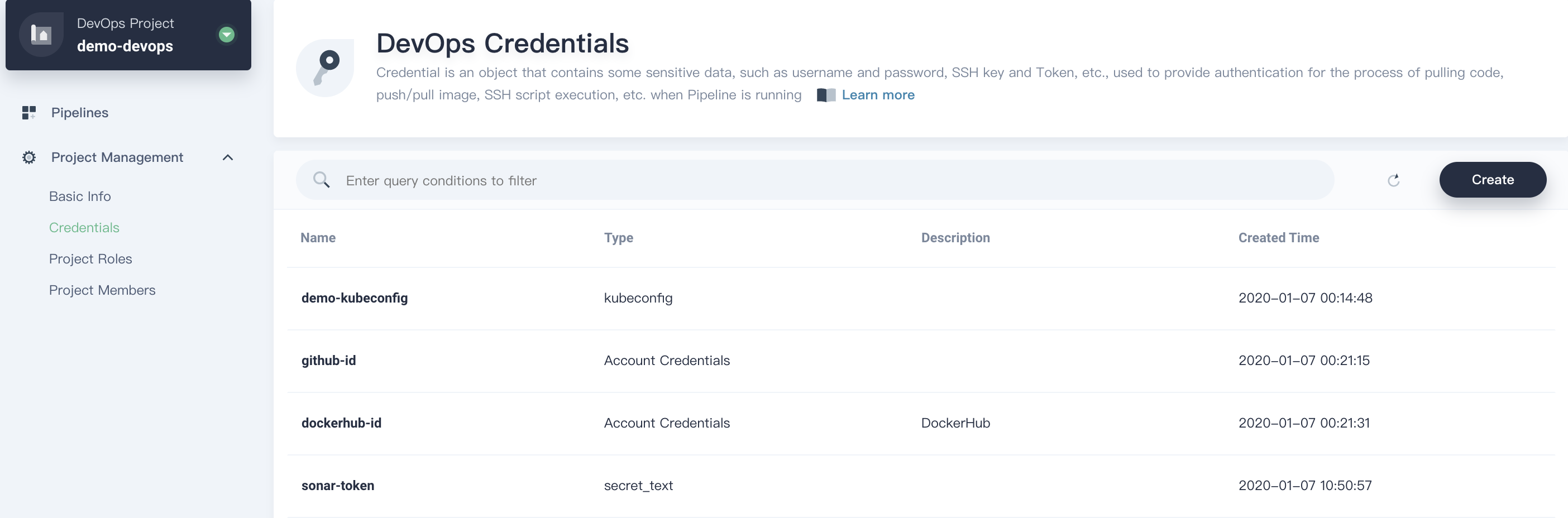

In total, we have created four credentials in this step.

### Step 2: Modify Jenkinsfile in Repository

#### Fork Project

Log in to GitHub. Fork the [devops-java-sample](https://github.com/kubesphere/devops-java-sample) repository to your own GitHub account.

#### Edit Jenkinsfile

2.1. After forking the repository to your own GitHub account, open the file **Jenkinsfile-online** in the root directory.

2.2. Click the edit icon in the GitHub UI to edit the values of the environment variables.

| Editing Items | Value | Description |
| :--- | :--- | :--- |
| DOCKER\_CREDENTIAL\_ID | dockerhub-id | The DockerHub credential ID, used to log in to your DockerHub account. |
| GITHUB\_CREDENTIAL\_ID | github-id | The GitHub credential ID, used to push the tag to the GitHub repository. |
| KUBECONFIG\_CREDENTIAL\_ID | demo-kubeconfig | The kubeconfig credential ID, used to access the running Kubernetes cluster. |
| REGISTRY | docker.io | The registry that images are pushed to; `docker.io` by default. |
| DOCKERHUB\_NAMESPACE | your-dockerhub-account | Replace it with your DockerHub account name. (It can be the Organization name under the account.) |
| GITHUB\_ACCOUNT | your-github-account | Replace it with your GitHub account name. For example, for `https://github.com/kubesphere/`, fill in `kubesphere`. It can also be the account's Organization name. |
| APP\_NAME | devops-java-sample | Application name |
| SONAR\_CREDENTIAL\_ID | sonar-token | The SonarQube token credential ID, used for the code quality test. |
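
To see how these values end up in an image name, here is a minimal bash sketch. It assumes the image reference has the form `REGISTRY/DOCKERHUB_NAMESPACE/APP_NAME:SNAPSHOT-BRANCH_NAME-BUILD_NUMBER`, which is an assumption for illustration rather than a quote from Jenkinsfile-online; the function name `snapshot_image` and the sample values are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: compose a snapshot image reference from the table values.
# Assumed layout: <registry>/<namespace>/<app>:SNAPSHOT-<branch>-<build>
snapshot_image() {
  local registry="$1" namespace="$2" app="$3" branch="$4" build="$5"
  printf '%s/%s/%s:SNAPSHOT-%s-%s\n' \
    "$registry" "$namespace" "$app" "$branch" "$build"
}

# Placeholder values; in a real run Jenkins supplies the branch and build number.
snapshot_image docker.io your-dockerhub-account devops-java-sample sonarqube 6
```

With these placeholders the tag part matches the `SNAPSHOT-sonarqube-6` style of tag described for the sonarqube branch.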

**Note: The `-o` parameter of the `mvn` command in the Jenkinsfile turns on offline mode. The relevant dependencies have been downloaded in advance for this tutorial to save time and to cope with unreliable networks in certain environments. Offline mode is on by default.**

2.3. After editing the environment variables, click **Commit changes** at the top of the GitHub page to submit the updates to the sonarqube branch.

### Step 3: Create Projects


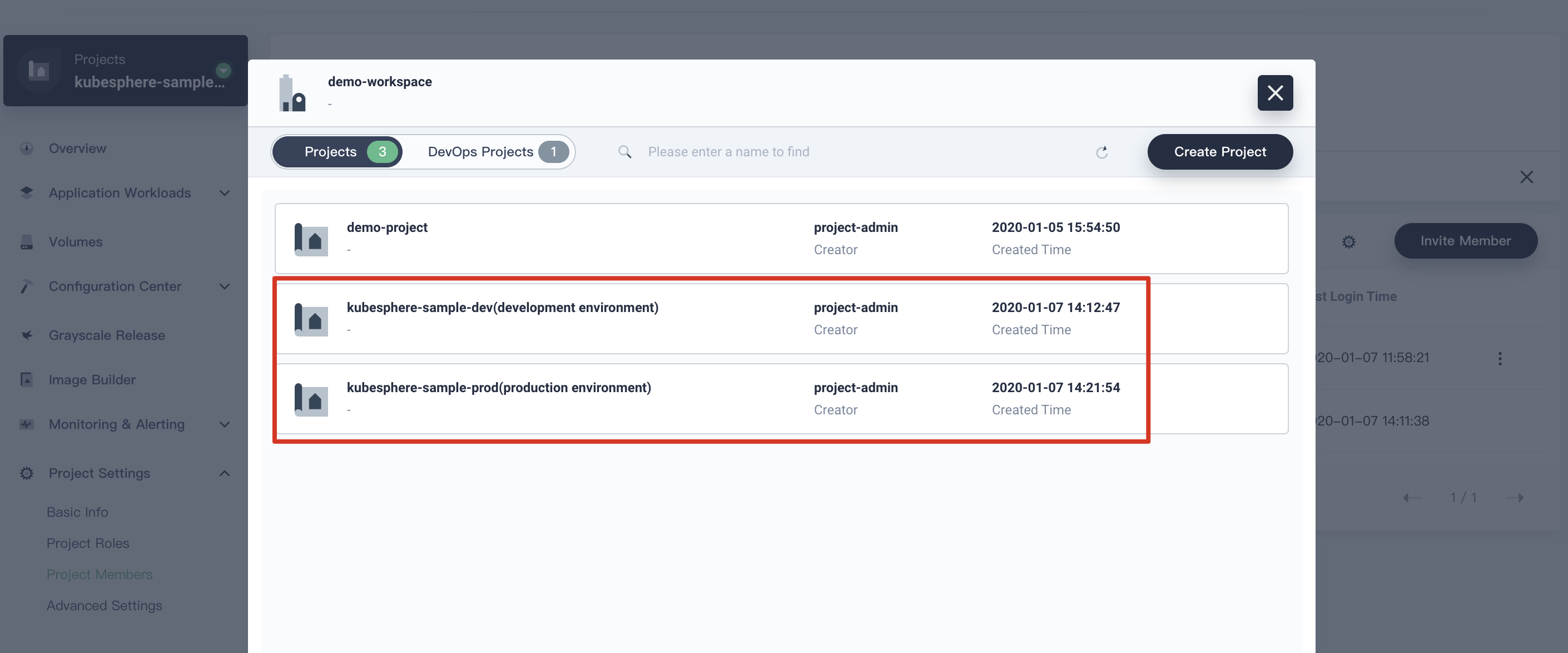

In this step, we will create two projects, `kubesphere-sample-dev` and `kubesphere-sample-prod`, which serve as the development environment and the production environment respectively.

#### Create the First Project

> Tip: The account `project-admin` should be created in advance since it is used as the reviewer of the CI/CD pipeline.

3.1. Use the account `project-admin` to log in to KubeSphere. Click the **Create** button, then choose **Create a resource project**. Fill in the basic information for the project. Click **Next** when you are done.

- Name: `kubesphere-sample-dev`.
- Alias: `development environment`.

3.2. Leave the default values in Advanced Settings. Click **Create**.

3.3. Now invite the `project-regular` user into `kubesphere-sample-dev`. Choose **Project Settings → Project Members**. Click **Invite Member** to invite `project-regular` and grant this account the role of `operator`.

#### Create the Second Project

Similarly, create a project named `kubesphere-sample-prod` following the steps above. This project is the production environment. Then invite `project-regular` to the project `kubesphere-sample-prod`, and grant it the role of `operator` as well.

> Note: When the CI/CD pipeline succeeds, you will see that the demo application's Deployment and Service have been deployed to `kubesphere-sample-dev` and `kubesphere-sample-prod` respectively.

### Step 4: Create a Pipeline

#### Fill in Basic Information

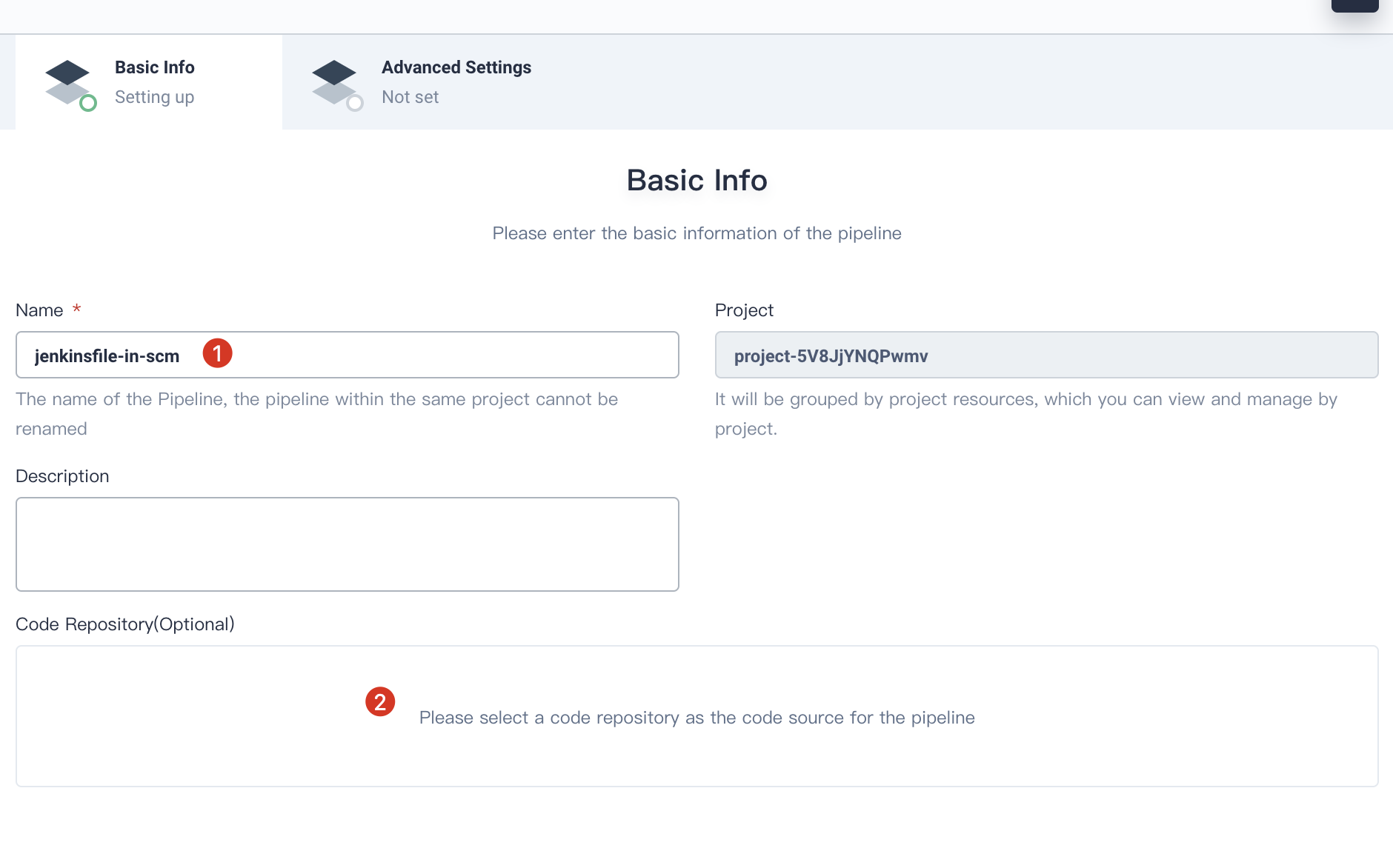

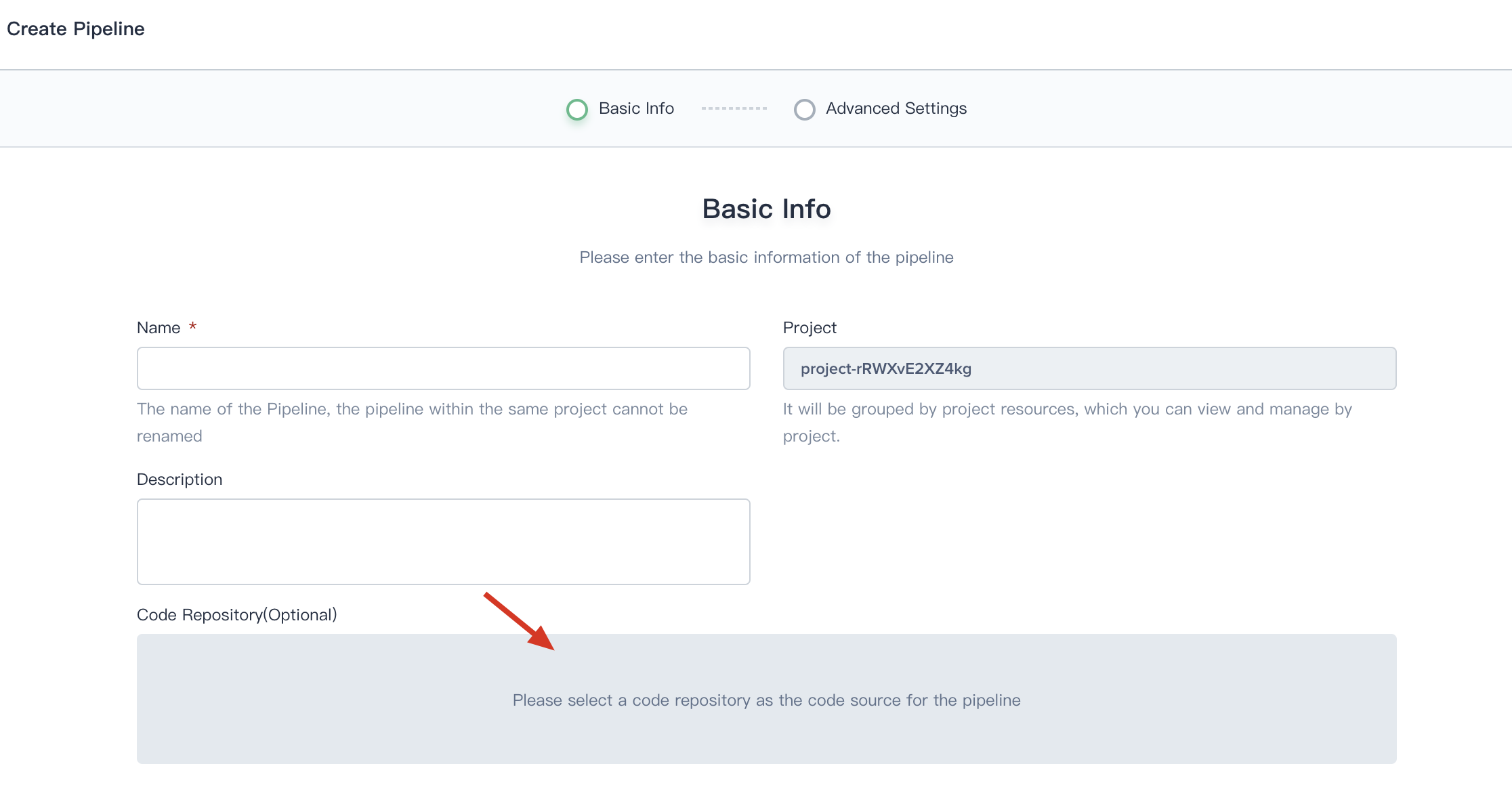

4.1. Switch the login user to `project-regular`. Enter the DevOps project `demo-devops`. Click **Create** to build a new pipeline.

4.2. Fill in the pipeline's basic information in the pop-up window, name it `jenkinsfile-in-scm`, and click **Code Repository**.

#### Add Repository

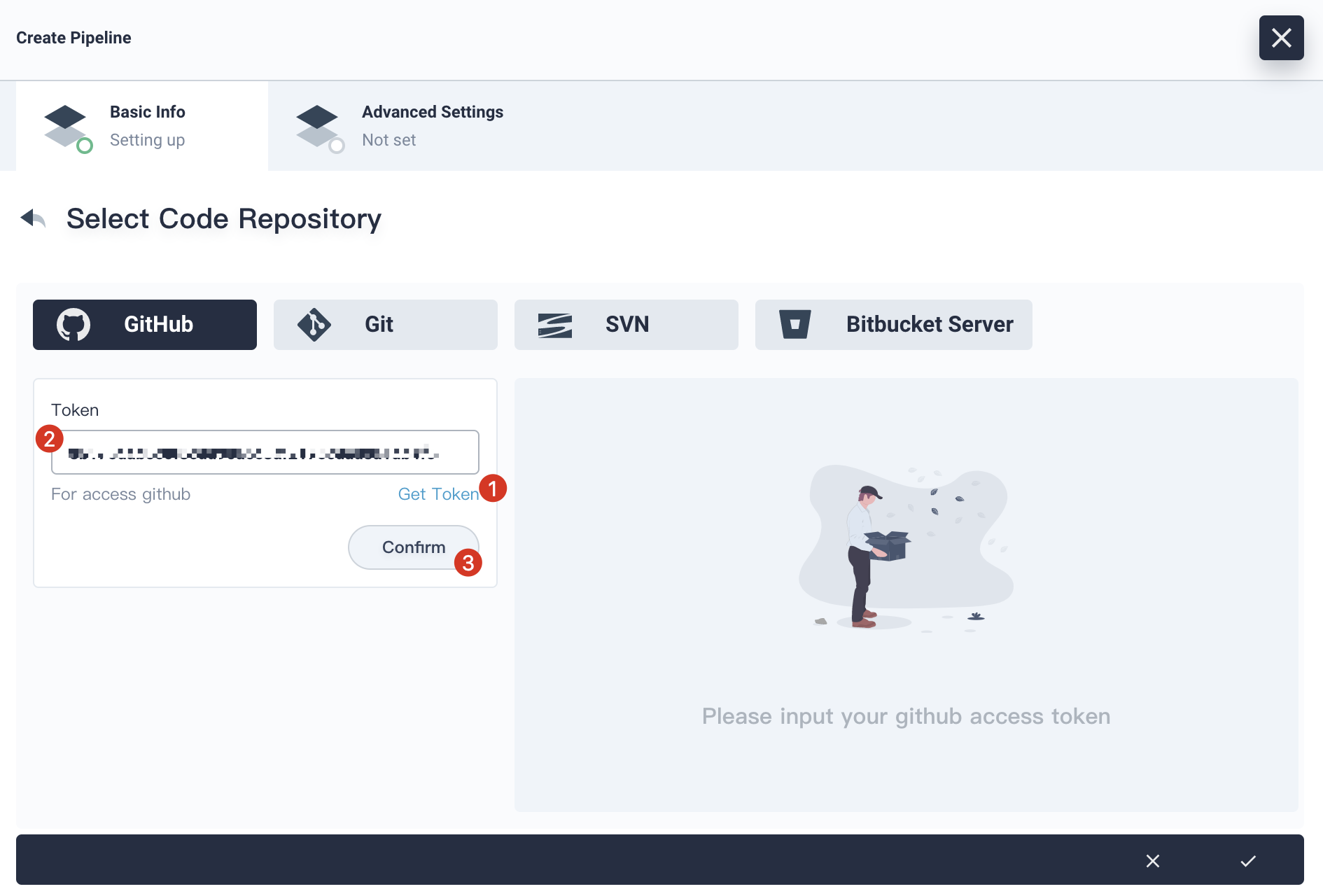

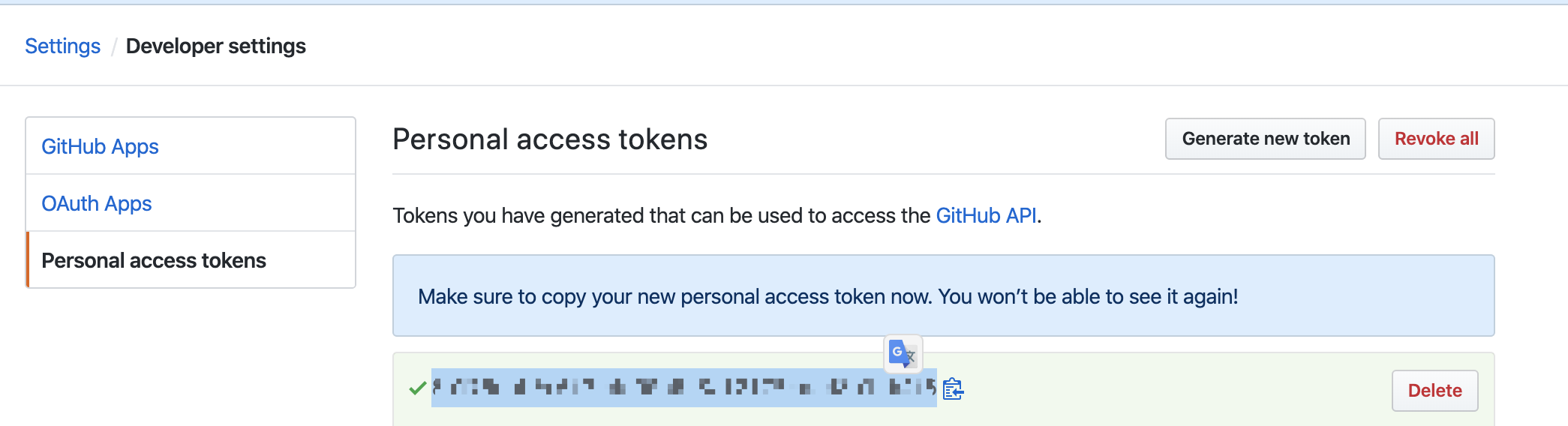

4.3. Click **Get Token** to generate a new GitHub token if you do not have one. Then paste the token into the edit box.

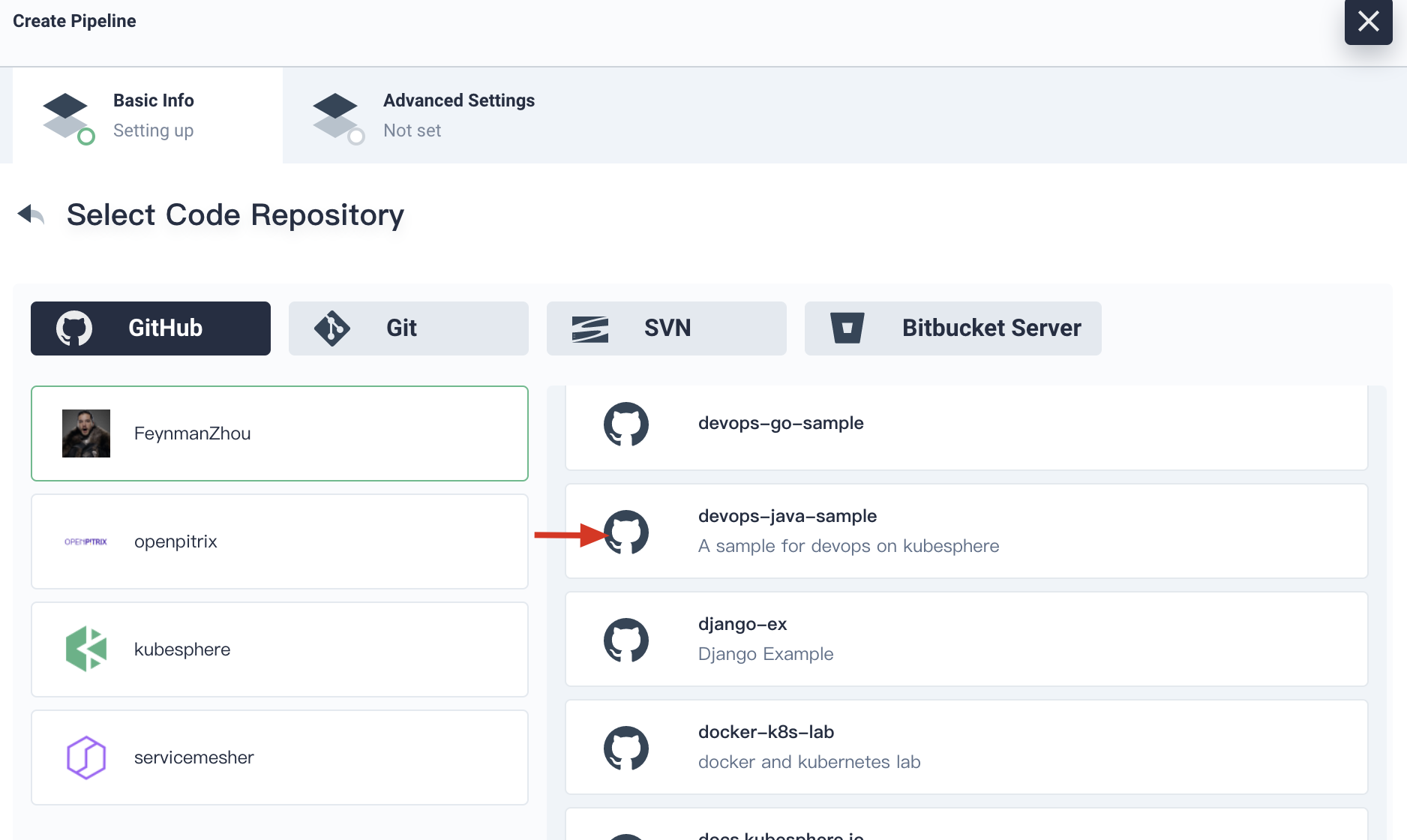

4.4. Click **Confirm** and choose your account. All the code repositories related to this token will be listed on the right. Select **devops-java-sample** and click **Select this repo**, then click **Next**.

#### Advanced Settings

Now we are on the advanced settings page.

<!--
> Note:
> Branches can be controlled by both the number of preservation days and the number of branches. If a branch has exceeded the preservation days or the branch limit, it is discarded. For example, if the preservation days is 2 and the branch number is 3, any branches that do not meet the requirements are discarded. Setting both limits to -1 (the default) means branches are not deleted automatically.
>
> Discarding old branches means that you discard the branch record altogether. The branch record includes console output, archived artifacts and other relevant data. Keeping fewer branches saves Jenkins' disk space. We provide two options to determine when to discard old branches:
>
> - Days for preserving the branches: If a branch reaches this number of days, it is discarded.
> - Number of branches: If the number of branches exceeds the limit, the oldest branches are discarded. -->

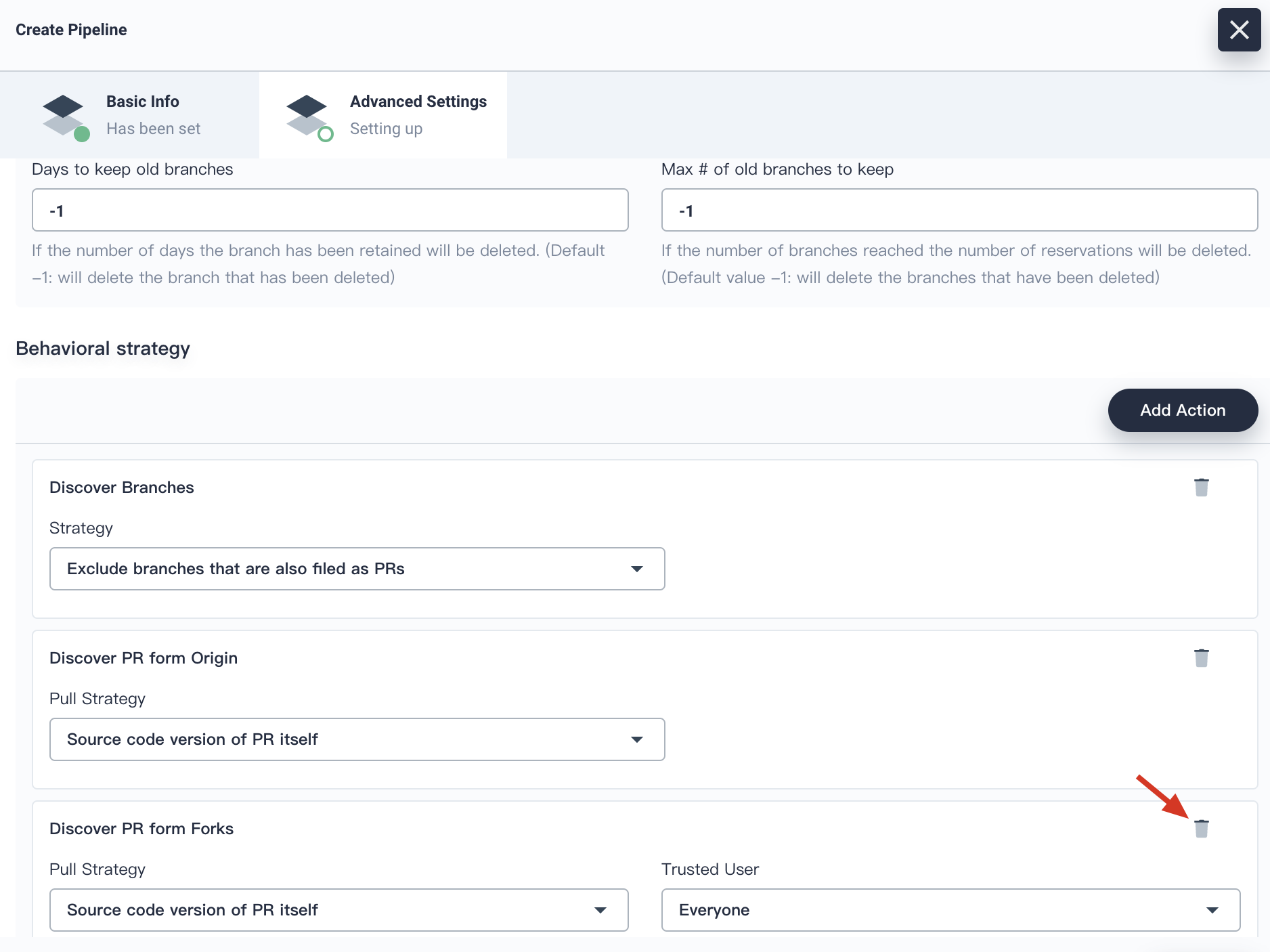

4.5. In the behavioral strategy, the KubeSphere pipeline sets three strategies by default. Since this demo does not use the **Discover PR from Forks** strategy, it can be deleted.

<!-- > Note:
> Three types of discovery strategies are supported. When the Jenkins pipeline is activated, a Pull Request (PR) submitted by a developer is also regarded as a separate branch.
> Discover the branch:
> - Exclude the branch as PR: Selecting this option means that CI will not scan source branches such as the origin's master branch. These branches need to be merged.
> - Only the branches submitted as PR: Only scan the PR branch.
> - All the branches: Extract all the branches from the origin repository.
>
> Discover PR from the origin repository:
> - The source code after PR merges with the branch: A single discovery operation, in which the pipeline runs on the source code derived from merging the PR with the target branch.
> - PR's source code edition: A single discovery operation, in which the pipeline is built from the PR's own source code.
> - There will be two pipelines when the PR is found. One pipeline uses the PR's source code and the other uses the source code from merging the PR with the target branch: This is a double discovery operation. -->

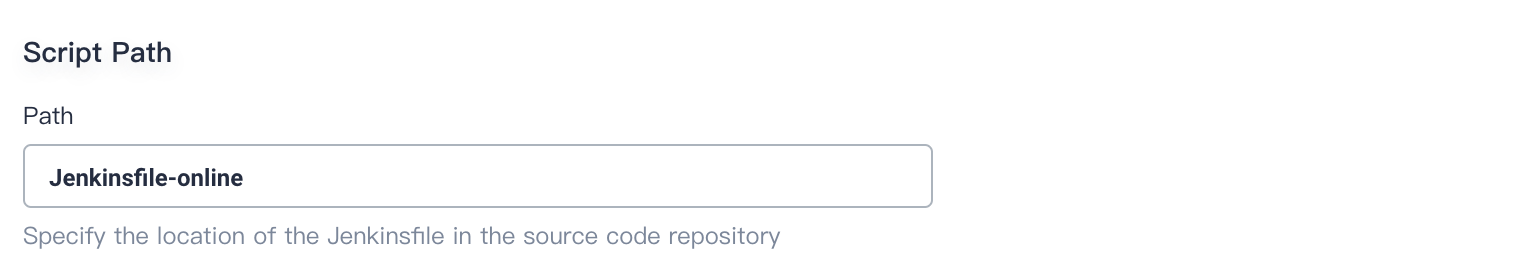

4.6. The path is **Jenkinsfile** by default. Please change it to `Jenkinsfile-online`, which is the file name of the Jenkinsfile located in the root directory of the repository.

> Note: Script path is the Jenkinsfile path in the code repository, relative to the repository's root directory. If the file location changes, the script path should also be changed.

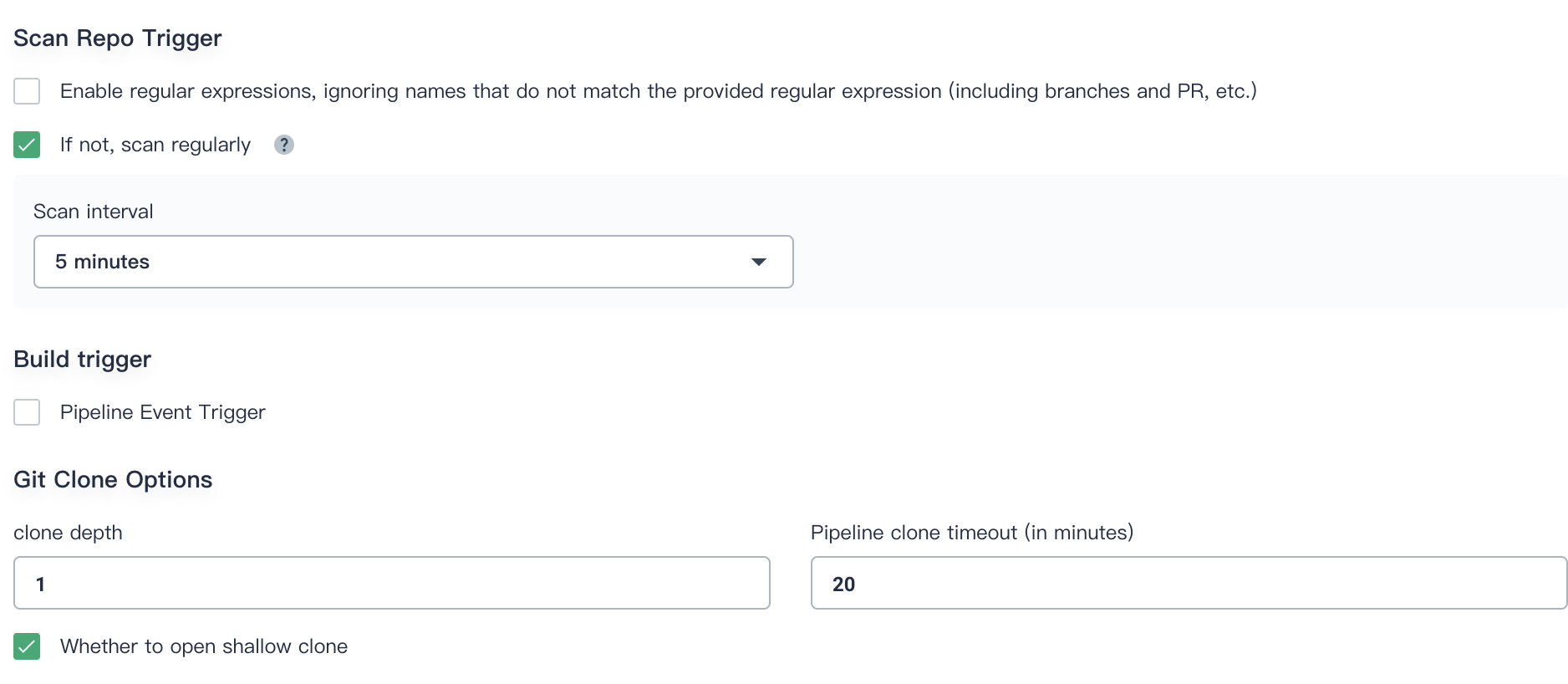

4.7. **Scan Repo Trigger** can be customized according to the team's development preference. We set it to `5 minutes`. Click **Create** when you have completed the advanced settings.

<!-- > Note: Regular scanning sets a cycle in which the pipeline scans remote repositories regularly and checks, according to the **Behaviour Strategy**, whether there is a code update or a new PR.
>
> Webhook Push:
> A webhook is an efficient way to detect changes in the remote repository and automatically trigger new runs. Webhooks should be the main way to trigger Jenkins scans for GitHub and Git (like GitLab). Please refer to the cycle time setting in the previous step. In this sample, you can run the pipeline manually. If you need to set automatic scanning for remote branches and trigger the run, please refer to Setting automatic scanning - GitHub SCM.
> -->

#### Run the Pipeline

Refresh the browser manually, or click `Scan Repository` if needed, and you will find two activities triggered. Alternatively, you can trigger them manually with the following instructions.

4.8. Click **Run** on the right. According to the **Behavioral Strategy**, it will load the branches that have a Jenkinsfile. Set the value of branch to `sonarqube`. Since there is no default value in the Jenkinsfile, enter a tag number in **TAG_NAME**, such as `v0.0.1`. Click **OK** to trigger a new activity.

> Note: TAG\_NAME is used to generate the release and the tagged images in GitHub and DockerHub. Please note that `TAG_NAME` must not duplicate an existing `tag` name in the code repository. Otherwise the pipeline cannot run.
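
Before choosing a `TAG_NAME`, you may want to check whether the tag already exists. The following bash sketch is one possible way to do that; the function name `tag_exists` is hypothetical, and it only parses lines in the format printed by `git ls-remote --tags`.

```bash
#!/usr/bin/env bash
# Sketch: check whether a tag name is already taken.
# Reads `git ls-remote --tags` style lines ("<sha>\trefs/tags/<name>") on stdin.
tag_exists() {
  local tag="$1" sha ref
  while read -r sha ref; do
    # Annotated tags are also listed as "refs/tags/<name>^{}"; strip the suffix.
    [ "${ref%'^{}'}" = "refs/tags/$tag" ] && return 0
  done
  return 1
}

# Canned input for illustration; against a real repository, pipe in the output
# of `git ls-remote --tags origin` instead.
if printf 'abc123\trefs/tags/v0.0.1\n' | tag_exists v0.0.1; then
  echo "v0.0.1 already exists, pick another TAG_NAME"
fi
```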


At this point, the pipeline for the sonarqube branch is running.

> Note: Click **Branch** to switch to the branch list and review which branches are running. The branch here is determined by the **Behavioral Strategy.**

#### Review Pipeline

When the pipeline reaches an `input` step, it will pause. You need to click **Continue** manually. Please note that three stages in Jenkinsfile-online contain an `input` step. Therefore, the pipeline will be reviewed three times, in the stages `deploy to dev`, `push with tag` and `deploy to production`.

> Note: In a real development or production scenario, someone with higher authority (e.g. a release manager) is required to review the pipeline and the image, as well as the code analysis result. They have the authority to decide whether to approve the push and the deployment. In a Jenkinsfile, the `input` step lets you specify who reviews the pipeline. If you want to specify the user `project-admin` as reviewer, you can add the `submitter` field in the Jenkinsfile. If there are multiple users, separate them with commas as follows:

```groovy
···
input(id: 'release-image-with-tag', message: 'release image with tag?', submitter: 'project-admin,project-admin1')
···
```

### Step 5: Check Pipeline Status

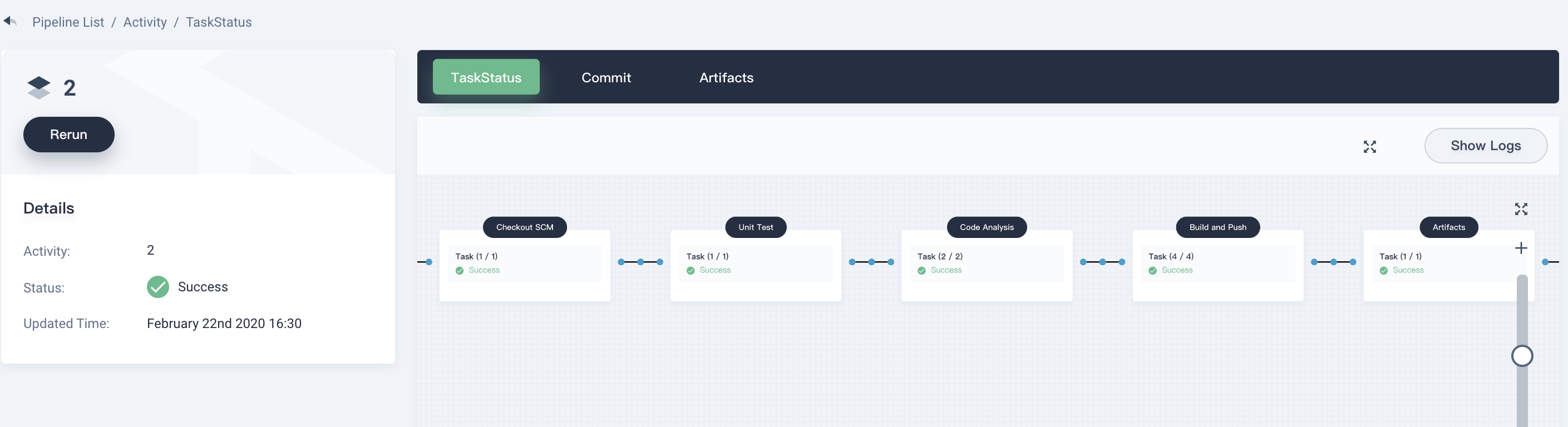

5.1. Click **Activity → sonarqube → Task Status** to see the pipeline's running status. Please note that the pipeline keeps initializing for several minutes right after it is created. There are eight stages in the sample pipeline and they are defined individually in [Jenkinsfile-online](https://github.com/kubesphere/devops-java-sample/blob/sonarqube/Jenkinsfile-online).

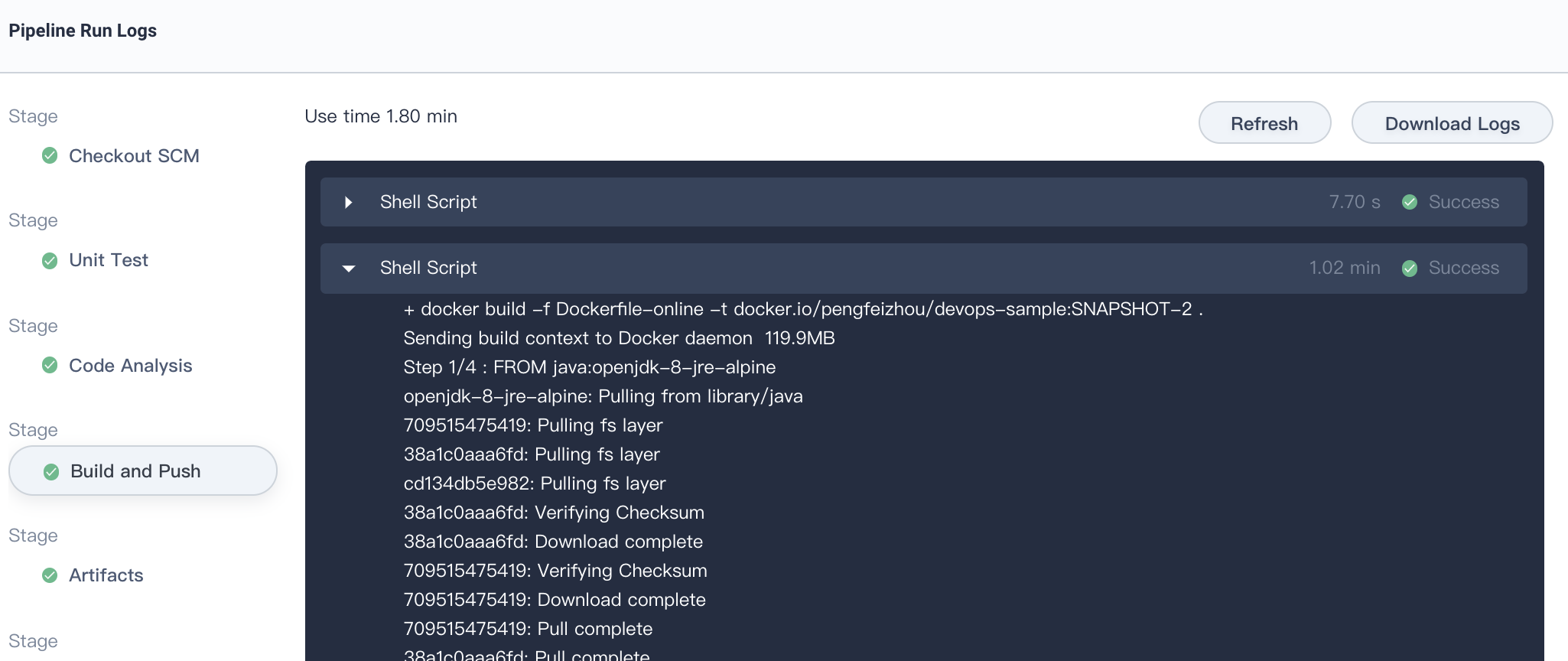

5.2. Check the pipeline running logs by clicking **Show Logs** at the top right corner. The page shows dynamic log output, operating status, time, etc.

For each step, click the specific stage on the left to inspect its logs. The logs can be downloaded for further analysis.

### Step 6: Verify Pipeline Running Results

6.1. Once the pipeline has executed successfully, click `Code Quality` to check the results from SonarQube as follows (for reference only).

6.2. The Docker image built by the pipeline has been successfully pushed to DockerHub, since we defined the `push to DockerHub` stage in Jenkinsfile-online. In DockerHub you will find the image with the tag v0.0.1 that we configured before running the pipeline, as well as the images with the tags `SNAPSHOT-sonarqube-6` (SNAPSHOT-branch-serial number) and `latest`.

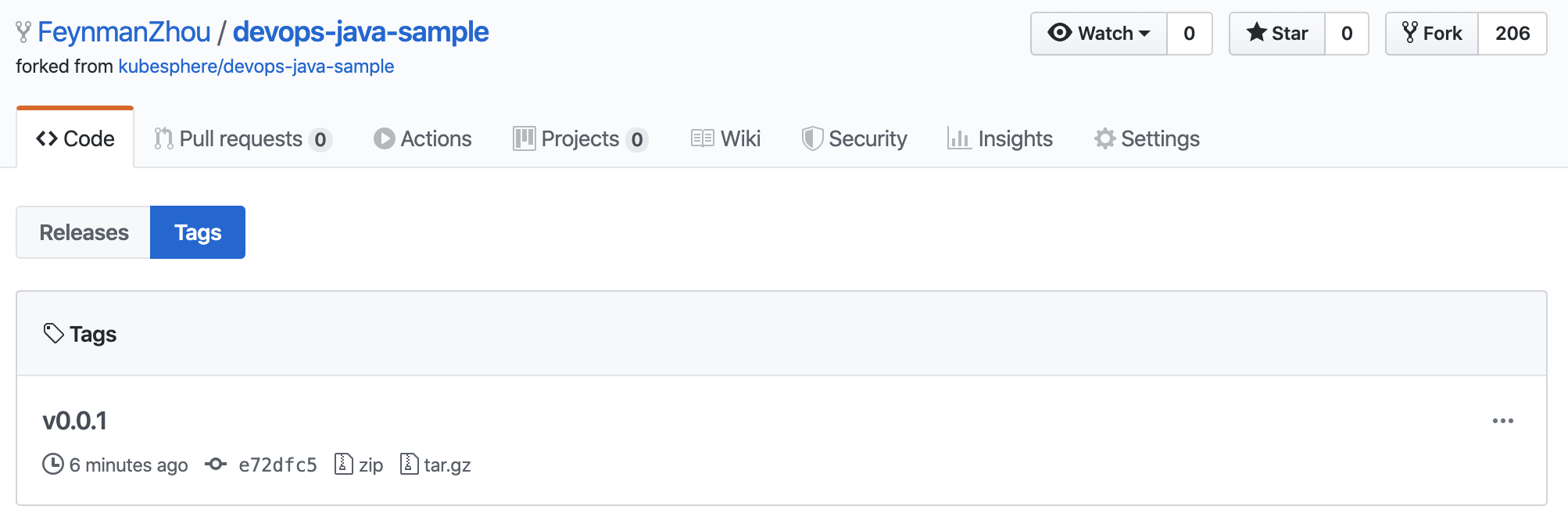

At the same time, a new tag and a new release have been generated in GitHub.

The sample application will be deployed to `kubesphere-sample-dev` and `kubesphere-sample-prod` as a Deployment and a Service.

| Environment | URL | Namespace | Deployment | Service |
| :--- | :--- | :--- | :--- | :--- |
| Dev | `http://{$NodeIP}:{$30861}` | kubesphere-sample-dev | ks-sample-dev | ks-sample-dev |
| Production | `http://{$NodeIP}:{$30961}` | kubesphere-sample-prod | ks-sample | ks-sample |

6.3. Enter these two projects, and you can see that the application's resources have been deployed to Kubernetes successfully. For example, let's verify the Deployments and Services under the project `kubesphere-sample-dev`:

#### Deployments

#### Services

### Step 7: Visit Sample Service

7.1. You can switch to the `admin` account to open **web kubectl** from **Toolbox**. Enter the project `kubesphere-sample-dev`, select **Application Workloads → Services** and click the `ks-sample-dev` service.

7.2. Open **web kubectl** from **Toolbox** and try to access the service as follows:

> Note: curl Endpoints or {$Virtual IP}:{$Port} or {$Node IP}:{$NodePort}

```bash
$ curl 10.233.102.188:8080
Really appreciate your star, that's the power of our life.
```
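
When accessing the service from outside the cluster, the address has the `{$Node IP}:{$NodePort}` form instead. A minimal bash sketch of building that URL follows; the function name `service_url` and the node IP are placeholders, and `30861` is the Dev NodePort listed in Step 6.

```bash
#!/usr/bin/env bash
# Sketch: build the URL of a NodePort service from a node IP and a port.
service_url() {
  local node_ip="$1" node_port="$2"
  printf 'http://%s:%s\n' "$node_ip" "$node_port"
}

# 192.168.0.10 is a placeholder node IP.
service_url 192.168.0.10 30861

# With cluster access, the port could be looked up instead of hard-coded, e.g.:
#   kubectl get svc ks-sample-dev -n kubesphere-sample-dev \
#     -o jsonpath='{.spec.ports[0].nodePort}'
```

The printed URL can then be passed to `curl` or opened in a browser.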

7.3. Similarly, you can test the service in the project `kubesphere-sample-prod`.

> Note: curl Endpoints or {$Virtual IP}:{$Port} or {$Node IP}:{$NodePort}

```bash
$ curl 10.233.102.188:8080
Really appreciate your star, that's the power of our life.
```

Congratulations! You are now familiar with the KubeSphere DevOps pipeline. You can continue to learn how to build a CI/CD pipeline with a graphical panel and visualize your workflow in the next tutorial.

@ -0,0 +1,70 @@

---
title: "Credentials Management"
keywords: 'kubernetes, docker, helm, jenkins, istio, prometheus'
description: ''
linkTitle: "Credentials Management"
weight: 400
---

A DevOps project user can configure credentials for Jenkins Pipeline. Once a user (e.g. Owner and Maintainer) adds or configures these credentials in a DevOps project, the credentials can be used by the DevOps project to interact with third-party applications.

Currently, the following 4 types of credentials can be stored in a DevOps project:

- Account credentials: Username and password, which can be handled as separate components or as a colon-separated string in the format `username:password`. Used for services such as GitHub, GitLab, Docker Hub, etc.
- SSH: Username with private key, i.e. an SSH public/private key pair.
- Secret text: Secret content in a file.
- kubeconfig: Used to configure cross-cluster authentication. The page automatically generates the contents of the kubeconfig file of the current Kubernetes cluster.
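
As an illustration of the colon-separated `username:password` format mentioned for account credentials, the bash sketch below splits such a string at the first colon. The function name `split_credential` and the sample value are hypothetical; this is not how KubeSphere or Jenkins processes credentials internally.

```bash
#!/usr/bin/env bash
# Sketch: split a "username:password" string at the first colon,
# so that a password containing ":" stays intact.
split_credential() {
  local cred="$1"
  printf 'user=%s\npass=%s\n' "${cred%%:*}" "${cred#*:}"
}

# Placeholder credential
split_credential 'demo-user:s3cr:et'
```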

## Create Credential

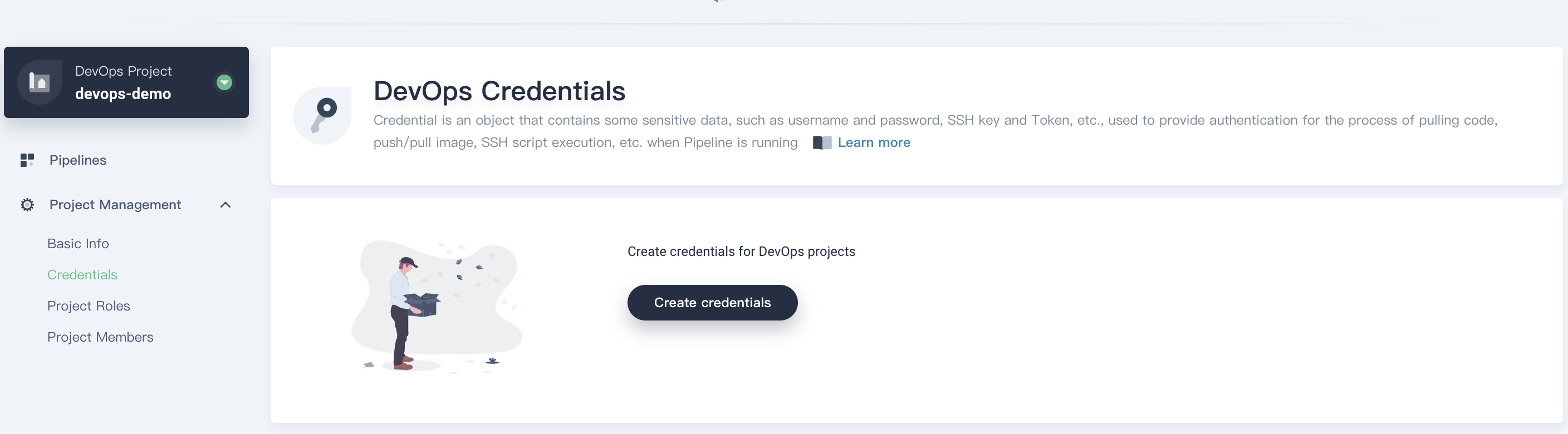

Sign in with `project-regular`, choose **Credentials** and click **Create Credential**.

### Create Credential for DockerHub

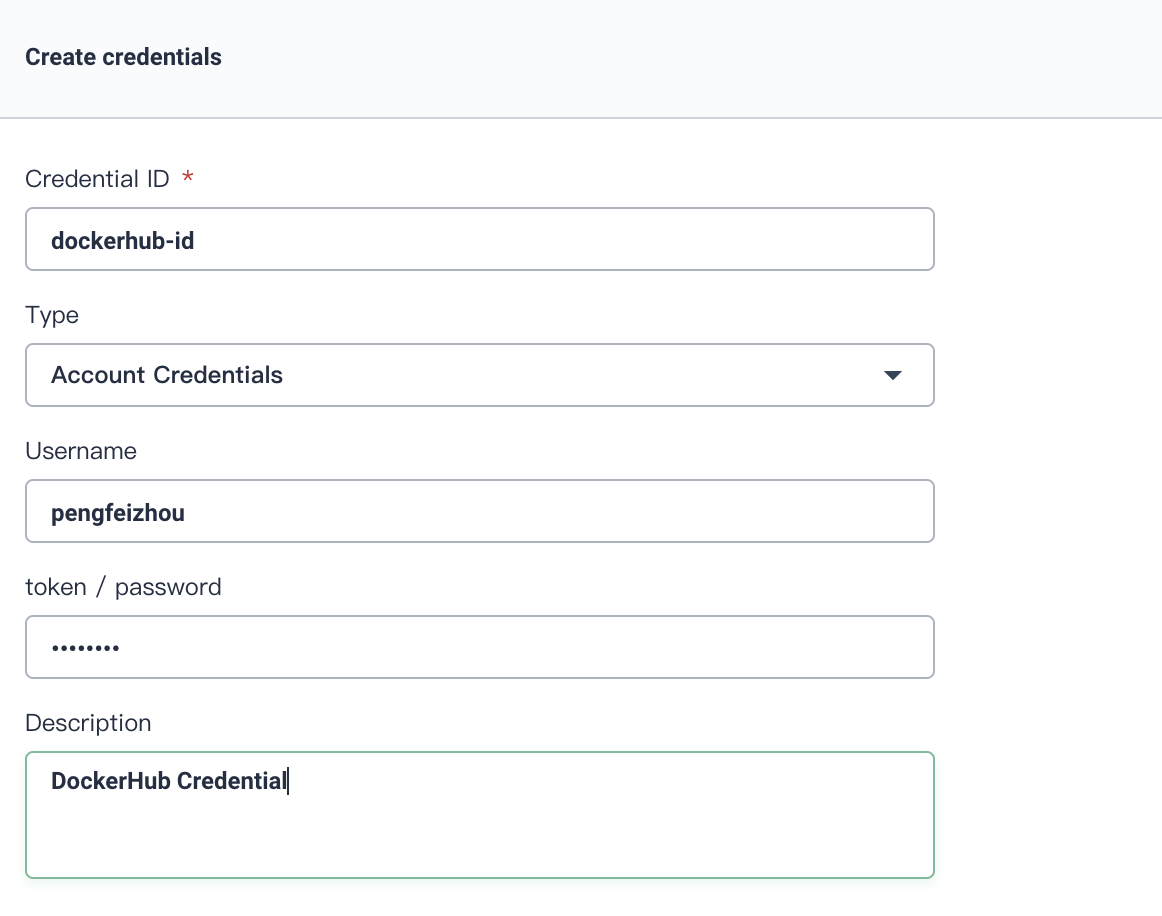

1. Click **Create** and fill in the basic information in the pop-up window.

   - Credential ID: It will be used in the pipeline. Name it **dockerhub-id**.
   - Type: Choose `Account Credentials`.
   - Username: Your DockerHub account.
   - Token/password: Your DockerHub password.
   - Description: A brief introduction to this credential.

2. Click **OK** when you are done.

3. Then you can see that this credential has been created successfully.

### Create Credential for GitHub

Similarly, create a GitHub credential and name it `github-id`. Choose **Account Credentials**; the other settings are the same as above.

> Note: If your account or password contains special characters such as `@` or `$`, they may cause an error because they cannot be recognized. In that case, encode your account or password with a third-party website, e.g. [urlencoder](https://www.urlencoder.org/), and paste the converted value into the KubeSphere console.

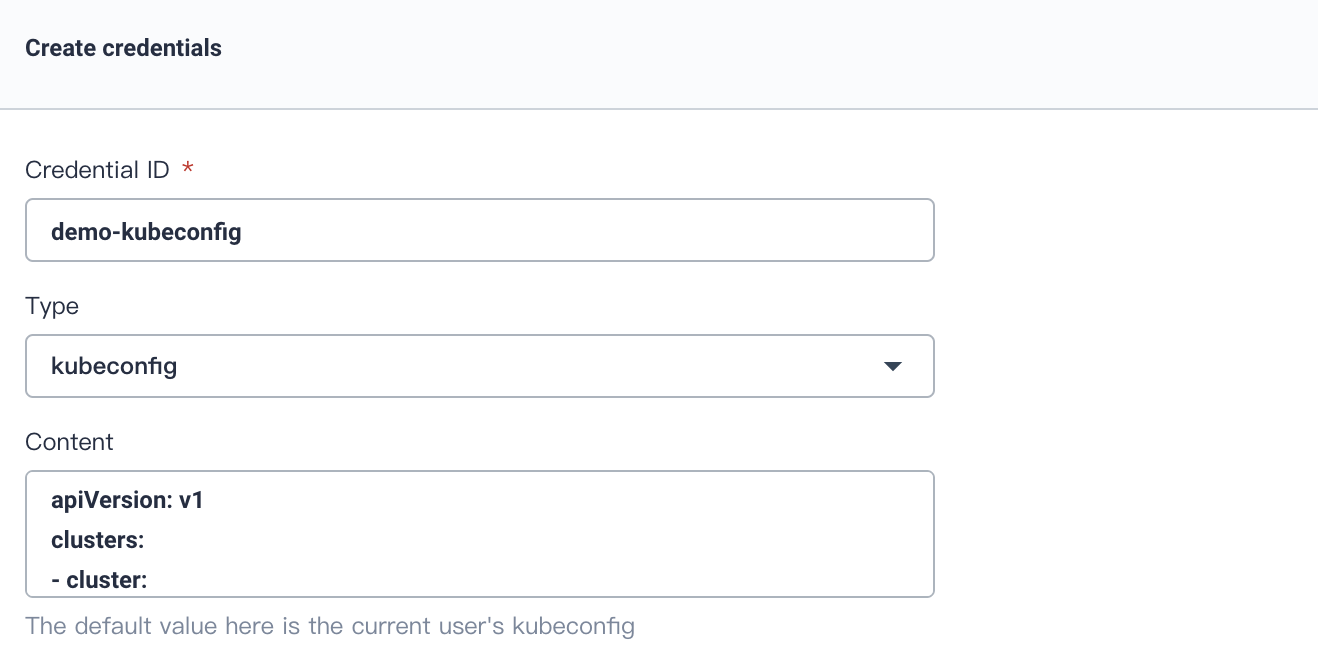

### Create KubeConfig Credential

In the same way, create a credential, name it `demo-kubeconfig`, choose **kubeconfig**, and click **Create** to complete the creation.

> Note: A file that is used to configure access to clusters is called a kubeconfig file. We create the kubeconfig credential to access the current Kubernetes cluster, and it will be used in the pipeline. You don't need to change the file, since KubeSphere automatically loads the kubeconfig of the current Kubernetes cluster. However, you may need to change the kubeconfig in KubeSphere when accessing another cluster.

## Using the Credential

1. When you are creating a Pipeline, click **Code Repository**.

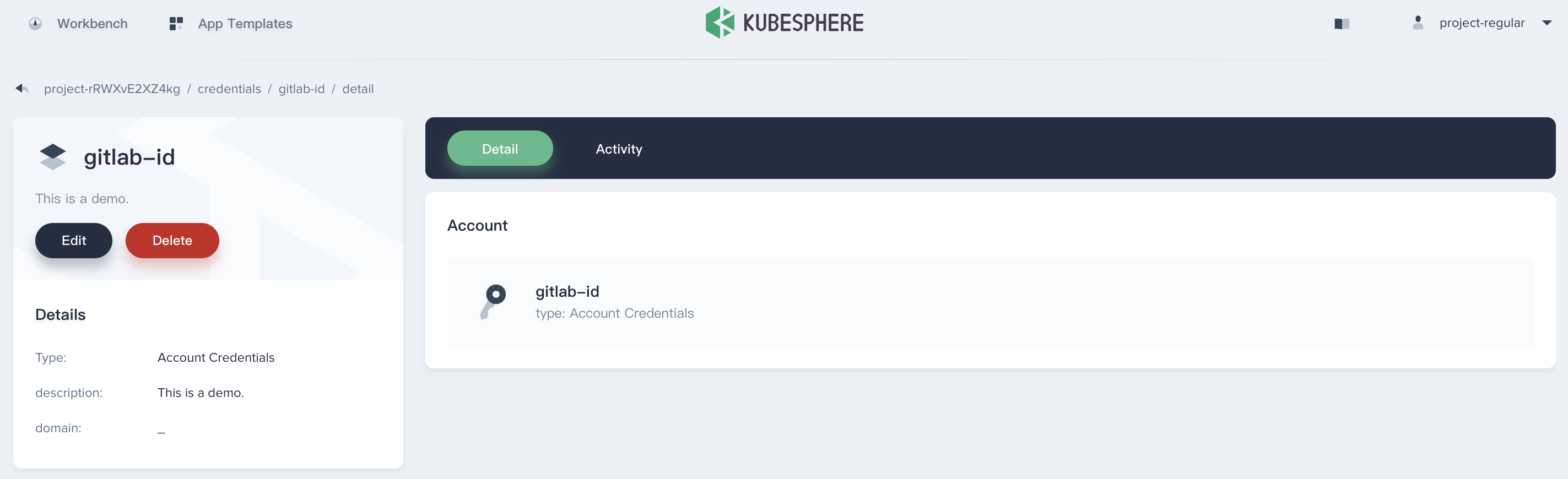

2. Choose **Git**, then select **gitlab-id** that we created in the last step. You can then use it directly.

## Manage the Credential

Go to this credential's details page to edit its information or delete it.

@ -0,0 +1,72 @@

---
title: "DevOps Project Management"
keywords: 'kubernetes, docker, helm, jenkins, istio, prometheus'
description: ''
linkTitle: "DevOps Project Management"
weight: 110
---

## Prerequisites

- You need to create a workspace, a project and an account (project-admin). Please refer to Create Workspace, Project, Account and Role if they are not ready yet.
- You need to enable KubeSphere DevOps system.

## Create a DevOps Project

1. Sign in with `project-admin`, choose the `DevOps Projects` tab, then click **Create** and select **Create a DevOps project**.

2. Fill in the basic information for this DevOps project.

   - Name: A concise and clear name for this DevOps project, which is convenient for users to browse and search, e.g. `demo-devops`.
   - Description: A brief introduction to the DevOps project.

3. You can then see that it has been created successfully.

## View the DevOps Project


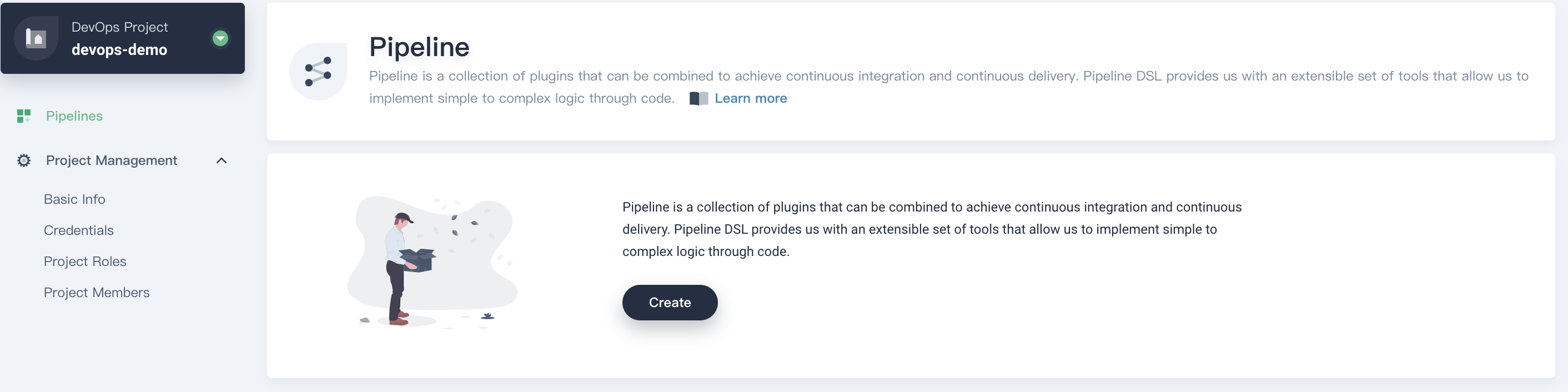

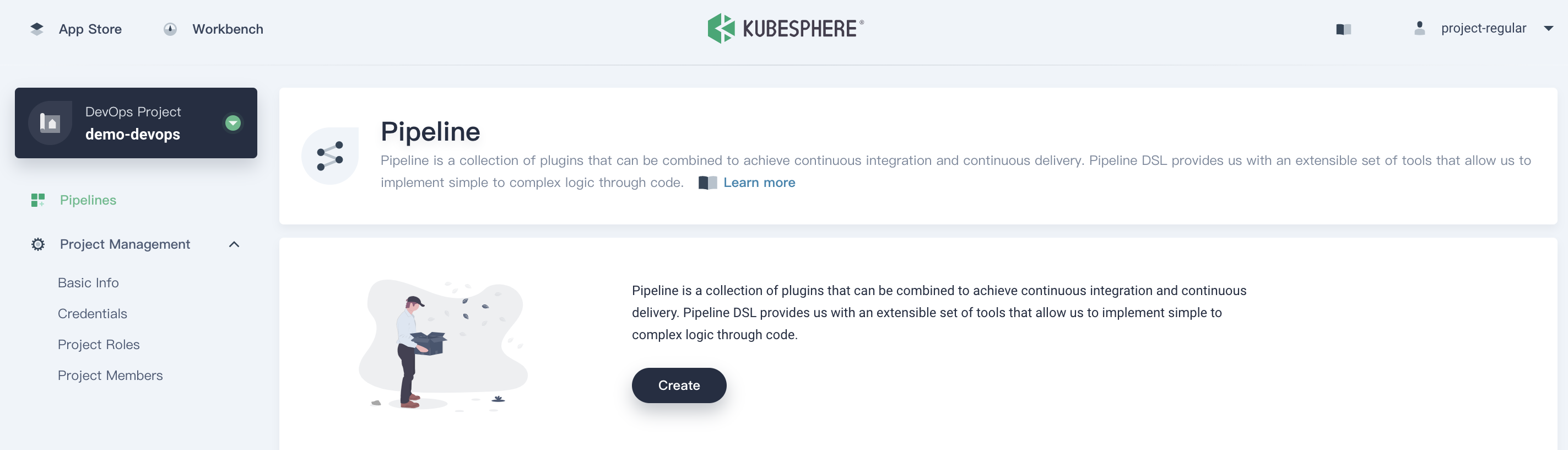

4. Enter the `demo-devops` page. It allows the DevOps project admin to create CI/CD Pipelines and Credentials, and to manage the project, including its basic information, roles and members.

### Pipeline

Pipeline is a suite of plugins which supports implementing and integrating continuous delivery pipelines into Jenkins.

### Credentials

A DevOps project user can configure credentials in the application for dedicated use by Jenkins Pipeline. Once a user (e.g. Owner and Maintainer) adds or configures these credentials in a DevOps project, the credentials can be used by the DevOps project to interact with third-party applications, such as GitHub, GitLab, Docker Hub, etc. See [Credentials Management](../credential-management/) for how to use the credentials.

### Member Roles

Currently, a DevOps project has the following built-in roles:

- Viewer: Can view all resources in the DevOps project.
- Operator: A normal member of the DevOps project who can create pipelines and credentials in the DevOps project.
- Admin: The administrator of the DevOps project, who can perform any action on any resource and has full control over all resources in the DevOps project.

### Project Members

Click **Project Members** to see which users are currently in the project. Click the **Invite Member** button to invite developers, testers, or operations colleagues to join this DevOps project.

You can search for a member name in the pop-up page and click the “+” sign on the right to invite members from the workspace's user pool to join the current DevOps project for collaborative work.

For example, you can invite `project-regular` into this DevOps project and grant `project-regular` the Maintainer role.

Note that after project-admin invites a member to the current DevOps project, the resources (pipelines, credentials, etc.) created by members are, in general, visible to each other within the same group.

## Edit or Delete the DevOps Project

Choose **Project Management → Basic Info**, then click **···** to see the edit and delete options. They allow the project admin to modify the basic information of this DevOps project or delete it.

@ -0,0 +1,32 @@

---
title: "Set Email Server for KubeSphere Pipeline"
keywords: 'kubesphere, kubernetes, notification, jenkins, devops, ci/cd, pipeline'
description: 'Set Email Server for KubeSphere CI/CD pipeline'
---


The built-in Jenkins cannot share the email configuration of the platform notification system, so we need to set the email server for KubeSphere DevOps pipelines separately.

> Note: Please be aware that modifying the email server in the `ks-jenkins` deployment below will restart the deployment itself. Consequently, the DevOps system will be unavailable for a few minutes. Please make such modifications at an appropriate time.

> Prerequisites: You need to enable KubeSphere DevOps System.

1. Log in to KubeSphere with the `admin` account and navigate to **Platform**.

2. Then go to **Application Workloads → Workloads** and select the namespace **kubesphere-devops-system**. Then choose **ks-jenkins** and click **Edit Yaml**.

Scroll down the panel and you will see the following environment variables that you need to configure. Finally, click **Update** to save the changes.

| Environment variable name | Description |
|---|---|
|EMAIL\_SMTP\_HOST | SMTP server address |
|EMAIL\_SMTP\_PORT | SMTP server port (e.g. 25) |
|EMAIL\_FROM\_ADDR | Email sender address |
|EMAIL\_FROM\_NAME | Email sender name |
|EMAIL\_FROM\_PASS | Email sender password |
|EMAIL\_USE\_SSL | Whether to enable SSL |
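
If you prefer the command line to the console, the same variables could in principle be set with `kubectl set env`. The bash sketch below only prints such a command for review instead of running it; the function name `email_env_command` and all values are placeholders, and only three of the variables are shown. As noted above, applying the change restarts the `ks-jenkins` deployment.

```bash
#!/usr/bin/env bash
# Sketch: print (not run) a `kubectl set env` command for ks-jenkins.
# Review the output before executing it yourself.
email_env_command() {
  local host="$1" port="$2" from="$3"
  printf 'kubectl -n kubesphere-devops-system set env deployment/ks-jenkins'
  printf ' EMAIL_SMTP_HOST=%s EMAIL_SMTP_PORT=%s EMAIL_FROM_ADDR=%s\n' \
    "$host" "$port" "$from"
}

# Placeholder SMTP settings
email_env_command smtp.example.com 25 ci@example.com
```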


@ -0,0 +1,51 @@

---
title: "Jenkins System Settings"
keywords: 'kubernetes, docker, helm, jenkins, istio, prometheus'
description: ''
---


Jenkins is powerful and flexible, and it has become the de facto standard for CI/CD workflows. But flexibility comes at a price: in addition to Jenkins itself, many plugins require some system-level configuration to get the job done.

KubeSphere DevOps is based on Jenkins for containerized CI/CD workflow functionality. To provide users with a schedulable Jenkins environment, KubeSphere uses **Configuration-as-Code** for Jenkins system settings, which requires the user to log in to Jenkins Dashboard and reload after KubeSphere modifies the configuration file. In the current release, Jenkins system settings are not yet available in KubeSphere; they will be supported in an upcoming release.

### Modify ConfigMap

If you are a cluster-admin of KubeSphere and need to modify the Jenkins system configuration, it is recommended that you use Configuration-as-Code (CasC) in KubeSphere.

First, modify `jenkins-casc-config` in KubeSphere, then log in to Jenkins Dashboard to perform a **reload**. (System settings written directly through Jenkins Dashboard may be overwritten by the CasC configuration after Jenkins is rescheduled.)

The built-in Jenkins CasC file is stored in `/system-workspace/kubesphere-devops-system/configmaps/jenkins-casc-config/` as a **ConfigMap**.

1. Log in to KubeSphere with the `admin` account and navigate to **Platform**.

2. Then go to **Configurations → ConfigMaps** and select the namespace **kubesphere-devops-system**. Then choose **jenkins-casc-config** and click **Edit Yaml**.

The configuration template for jenkins-casc-config is a YAML file as shown below. For example, you can modify the container image, label, etc. in the broker (Kubernetes Jenkins agent) in the ConfigMap, or add a container in the podTemplate.

After modifying **jenkins-casc-config** in KubeSphere, you need to reload the updated system configuration on the **configuration-as-code** page under Jenkins Dashboard System Management.

### Log in to Jenkins to Reload

1. KubeSphere Installer deploys Jenkins Dashboard during the first installation. Jenkins works with KubeSphere's LDAP, so you can log in to Jenkins Dashboard at `http://EIP:30180` with the username `admin` and its default password. After logging in, click `Manage Jenkins` in the left navigation bar.

> Note: Accessing the Jenkins Dashboard may require port forwarding and firewall rules that allow access from the public network.

2. Find `Configuration as Code` at the bottom of the console and click to enter.

3. Click `Reload` in the Configuration as Code panel to reload and update the system configuration that was modified in KubeSphere's ConfigMap.

For details on how to set up the configuration via CasC, see [Jenkins Documentation](https://github.com/jenkinsci/configuration-as-code-plugin).

> Note: In the current version, not all plugins support CasC settings. CasC will only override plugin configurations that are set up using CasC.

@ -3,7 +3,7 @@ title: "Role and Member Management"

|

|||

keywords: 'Kubernetes, KubeSphere, DevOps, role, member'

|

||||

description: 'Role and Member Management'

|

||||

|

||||

weight: 2240

|

||||

weight: 600

|

||||

---

|

||||

|

||||